Quick Recap: Your credit scoring model can deny a customer their mortgage. Your fraud detection model can freeze accounts instantly. Your AML system can flag innocent people as criminals. These systems need permission controls—not everyone should access them. Access governance means defining who can see the model, change it, or use its predictions. When controls are missing, mistakes scale fast.

Your security team runs an audit of model access.

They ask a simple question: "Who has access to the production fraud model?"

The answer is uncomfortable.

Your senior engineer: "Me, and probably everyone on the data team."

Security team digs deeper. Pulls the access logs.

They find:

47 people with some form of access

12 of them left the company (still have keys)

3 contractors from a vendor still have production credentials

Nobody knows why the summer intern had admin access

Your CTO says: "We trust our team. Nobody's going to break it."

Security responds: "It's not about trust. It's about what happens when someone makes a mistake. Or when someone's laptop gets stolen. Or when that contractor's credentials are compromised."

One accidental change to the fraud model. Wrong prediction threshold. Thousands of legitimate transactions blocked. Customer outrage. Regulatory investigation.

One data scientist exports customer data to debug something locally. Laptop stolen. Data leaked.

This is what "no access governance" looks like in practice. Not malice. Just absence of boundaries.

What Access Governance Actually Means

Access governance is simple in principle. Harder in practice.

Principle: Only people who need access get access. Access is logged. Changes are tracked.

In practice: Defining "need to access" and enforcing it without breaking productivity.

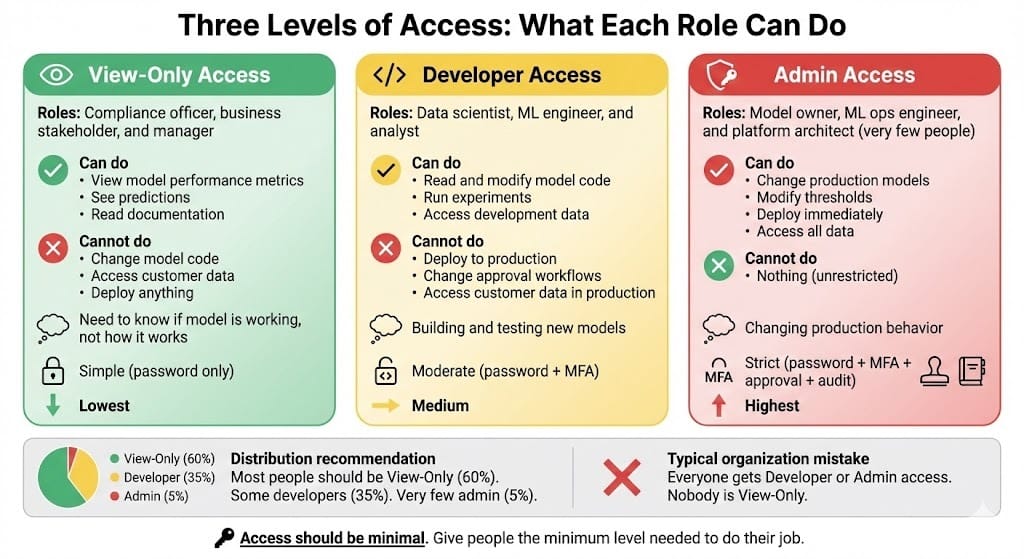

Three Levels of Access

Level 1: View Access

Can see the model exists

Can view performance metrics

Cannot change anything

Cannot export data

Use case: Business stakeholders, compliance team

Level 2: Develop/Debug Access

Can read model code

Can run experiments locally

Can propose changes

Cannot deploy to production

Use case: Data scientists, ML engineers

Level 3: Admin/Deploy Access

Can change production models

Can modify thresholds

Can access customer data

Changes take effect immediately

Use case: Model owner, ML ops engineer (very few people)

Most organizations fail because too many people have Level 3 access.
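The three levels above can be sketched as an ordered enum with a deny-by-default check. This is a minimal illustration, not a prescribed implementation: the action names and the `REQUIRED_LEVEL` table are hypothetical, and it assumes each level includes the rights of the levels below it.

```python
from enum import IntEnum


class AccessLevel(IntEnum):
    """The three levels described above, ordered from least to most privileged."""
    VIEW = 1      # see that the model exists, view metrics
    DEVELOP = 2   # read code, run experiments, propose changes
    ADMIN = 3     # deploy, modify thresholds, access customer data


# Hypothetical mapping from an action name to the minimum level it requires.
REQUIRED_LEVEL = {
    "view_metrics": AccessLevel.VIEW,
    "read_model_code": AccessLevel.DEVELOP,
    "propose_change": AccessLevel.DEVELOP,
    "deploy_to_production": AccessLevel.ADMIN,
    "modify_threshold": AccessLevel.ADMIN,
    "access_customer_data": AccessLevel.ADMIN,
}


def is_allowed(level: AccessLevel, action: str) -> bool:
    """Deny by default: actions missing from the table are rejected for everyone."""
    required = REQUIRED_LEVEL.get(action)
    return required is not None and level >= required
```

With this shape, answering "who can change the production threshold?" is a lookup against one table rather than a guess.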

The Risk When Boundaries Are Missing

Missing access governance creates these problems:

Risk 1: Accidental Changes

Senior engineer tweaks model threshold to debug something

Forgets to revert change before leaving

Change goes to production

Model behavior breaks

Investigation takes days

Risk 2: Data Exposure

Data scientist exports customer data to laptop for analysis

Laptop stolen

Customer data leaked

Breach notification required

Regulatory fine incoming

Risk 3: Malicious Insiders

Disgruntled employee has model access

Changes approval thresholds to deny customers

Or approves ineligible customers for bribes

Regulatory investigation

Criminal charges possible

Risk 4: Compromised Credentials

Contractor's credentials leaked or sold

Attacker uses credentials to access production

Attacker exports data or changes model

You don't know until audit or customer complains

Risk 5: Regulatory Violation

Regulator asks: "Who can access this model?"

Your answer: "Uh... probably 30 people?"

Regulator finding: "Inadequate access controls"

How Access Governance Works in Practice

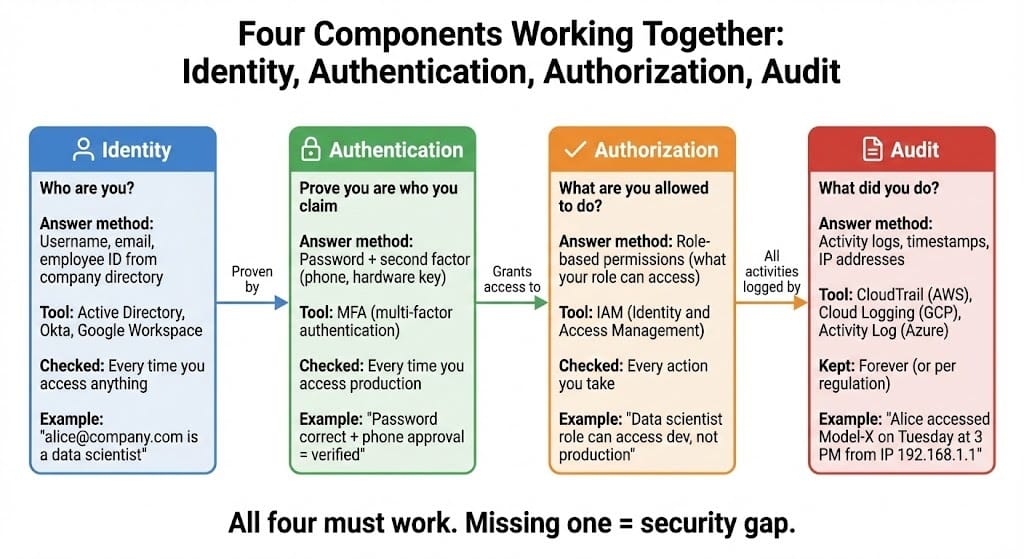

The Four Components

Component 1: Identity

Who are you? Username, email, employee ID

Verified through directory (Active Directory, Okta)

Single source of truth about who works here

Component 2: Authentication

Prove you are who you claim. Password, MFA, certificate

Multi-factor authentication required for production access

No password sharing allowed

Component 3: Authorization

What are you allowed to do? Role-based access control

Data scientist role: can read/write to dev environment

ML ops role: can deploy to production

Analyst role: can view metrics only

Component 4: Audit

What did you do? Activity logs of every access

Who accessed model at 3 AM on Sunday?

Who changed the threshold and when?

Logs retained for as long as your regulations require

All four must work together.
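One way to picture the four components working together is a single gate that every request passes through. The sketch below is illustrative only: `DIRECTORY`, `ROLE_PERMISSIONS`, the permission strings, and the `AccessGate` class are hypothetical stand-ins for a real directory, MFA provider, and log store.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical directory: stands in for Active Directory or Okta.
DIRECTORY = {"alice": "data_scientist", "carol": "ml_ops", "dan": "analyst"}

# Role permissions modeled on the Authorization examples above.
ROLE_PERMISSIONS = {
    "data_scientist": {"dev:read", "dev:write"},
    "ml_ops": {"prod:deploy"},
    "analyst": {"metrics:view"},
}


@dataclass
class AccessGate:
    audit_log: list = field(default_factory=list)

    def request(self, user: str, mfa_passed: bool, permission: str) -> bool:
        # 1. Identity: is this user known to the directory?
        role = DIRECTORY.get(user)
        # 2. Authentication: a known user who also passed MFA.
        authenticated = role is not None and mfa_passed
        # 3. Authorization: does the user's role carry this permission?
        authorized = authenticated and permission in ROLE_PERMISSIONS.get(role, set())
        # 4. Audit: record every attempt, allowed or denied.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "permission": permission,
            "allowed": authorized,
        })
        return authorized
```

Note that denied requests are logged too. A run of denials is often the first visible sign of a compromised credential.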

Real Implementation Pattern

Here's what production teams actually do:

Step 1: Define Roles

View-only: Compliance, business stakeholders

Developer: Data scientists, engineers

Operator: ML ops, DevOps

Admin: Model owner (one person usually)

Step 2: Map to Systems

View-only: Can read dashboards, cannot touch code

Developer: Can access dev environment, cannot touch production

Operator: Can deploy to production, cannot modify approval workflows

Admin: Can do anything, but changes require second approval

Step 3: Technical Implementation

Use your cloud provider's IAM (AWS IAM, GCP IAM, Microsoft Entra ID, formerly Azure AD)

Or self-hosted tools (HashiCorp Vault, Keycloak)

Never store passwords in code

API keys rotated every 90 days
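The last two points can be sketched in a few lines: read the key from the environment at runtime and check its age against the 90-day rotation window. The variable name `FRAUD_MODEL_API_KEY` and both helper functions are hypothetical. In a real setup a secrets manager would typically inject the value and track its creation date.

```python
import os
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_KEY_AGE = timedelta(days=90)  # rotation interval from the step above


def load_api_key(env_var: str = "FRAUD_MODEL_API_KEY") -> str:
    """Read the key from the environment instead of hardcoding it in source."""
    key = os.environ.get(env_var)
    if not key:
        # Fail loudly rather than falling back to a default baked into the code.
        raise RuntimeError(f"{env_var} is not set")
    return key


def needs_rotation(created_at: datetime, now: Optional[datetime] = None) -> bool:
    """Return True once a key has reached the rotation interval."""
    now = now or datetime.now(timezone.utc)
    return now - created_at >= MAX_KEY_AGE
```

A scheduled job that calls something like `needs_rotation` for every key turns the 90-day rule from a policy statement into an enforced control.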

Step 4: Logging and Monitoring

Every production access logged

Every model change logged

Unusual access patterns trigger alerts

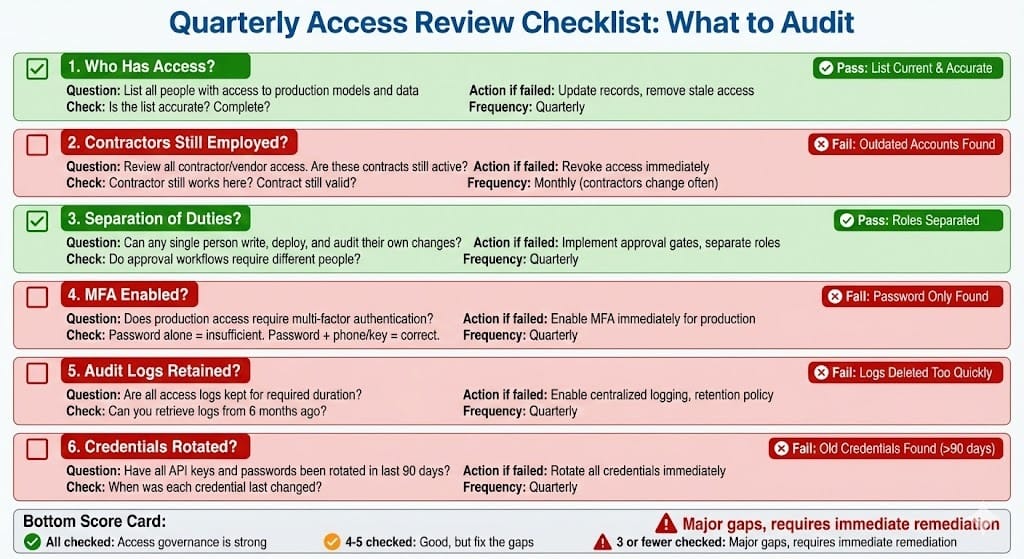

Quarterly access reviews (who still needs access?)
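As a rough sketch of the logging and alerting in Step 4, each access can be written as one JSON line and checked against a simple "unusual" rule. The field names, the business-hours window, and both functions are assumptions for illustration. Real alerting would usually rely on richer signals than time of day.

```python
import json
from datetime import datetime, timezone

BUSINESS_HOURS = range(8, 19)  # assumed 08:00-18:59 UTC; adjust to your own policy


def audit_record(user: str, action: str, source_ip: str, when: datetime) -> str:
    """Serialize one access event as a JSON line for an append-only log."""
    return json.dumps({
        "timestamp": when.isoformat(),
        "user": user,
        "action": action,
        "source_ip": source_ip,
    }, sort_keys=True)


def is_unusual(when: datetime) -> bool:
    """Flag weekend or off-hours access for review (a deliberately simple rule)."""
    return when.weekday() >= 5 or when.hour not in BUSINESS_HOURS
```

Under this rule, an access at 3 AM on a Sunday would be flagged, while the same action at 10 AM on a Monday would not.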

Step 5: Regular Audits

Who has what access today?

Remove access when people leave

Revoke contractor access when contracts end

Test that access controls actually work
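The review in Step 5 comes down to comparing the list of grants against who is still here. A minimal sketch, assuming you can export current grants and the set of active users from your directory (the `Grant` type and `find_stale_grants` function are hypothetical):

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Grant:
    user: str
    resource: str
    contract_end: Optional[date] = None  # set for contractors only


def find_stale_grants(grants: Iterable[Grant], active_users: Set[str], today: date) -> List[Grant]:
    """Return grants to revoke: the holder has left, or their contract has ended."""
    stale = []
    for grant in grants:
        left_company = grant.user not in active_users
        contract_over = grant.contract_end is not None and grant.contract_end < today
        if left_company or contract_over:
            stale.append(grant)
    return stale
```

Running a check like this on a schedule, rather than only before an audit, is what stops departed employees and expired contractors from keeping their keys.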

BFSI-Specific Patterns

Pattern 1: Separation of Duties

One person shouldn't be able to change a model AND deploy it AND cover their tracks.

Bad approach (one person has all three):

Alice writes new model logic

Alice deploys it to production

Alice modifies audit logs to hide the change

No one knows what happened

Good approach (three different people):

Alice writes new model logic and submits for review

Bob reviews and approves the change

Charlie deploys to production

All actions logged separately

Each person can see what others did

Regulators love this. Prevents solo malfeasance.
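The good approach can be enforced mechanically before a deployment is allowed to proceed. A minimal sketch, where `ChangeRequest` and its field names are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeRequest:
    author: str    # wrote the new model logic
    approver: str  # reviewed and approved it
    deployer: str  # pushes it to production


def violates_separation_of_duties(change: ChangeRequest) -> bool:
    """True if any one person fills more than one of the three roles."""
    people = {change.author, change.approver, change.deployer}
    return len(people) < 3
```

A deployment pipeline could refuse any change for which this check returns True. That covers the first two steps of the bad approach. Keeping audit logs where none of the three people can edit them covers the third.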

Pattern 2: Production vs Non-Production Isolation

Your model development should never touch production data.

Isolation means:

Developers never have production credentials

Production data never leaves production

Testing uses synthetic or anonymized data

Production access requires MFA + approval

Non-production access is easier (faster development)

Why this matters: If a developer's laptop is stolen, the thief gets access to the dev environment only. Production stays safe.

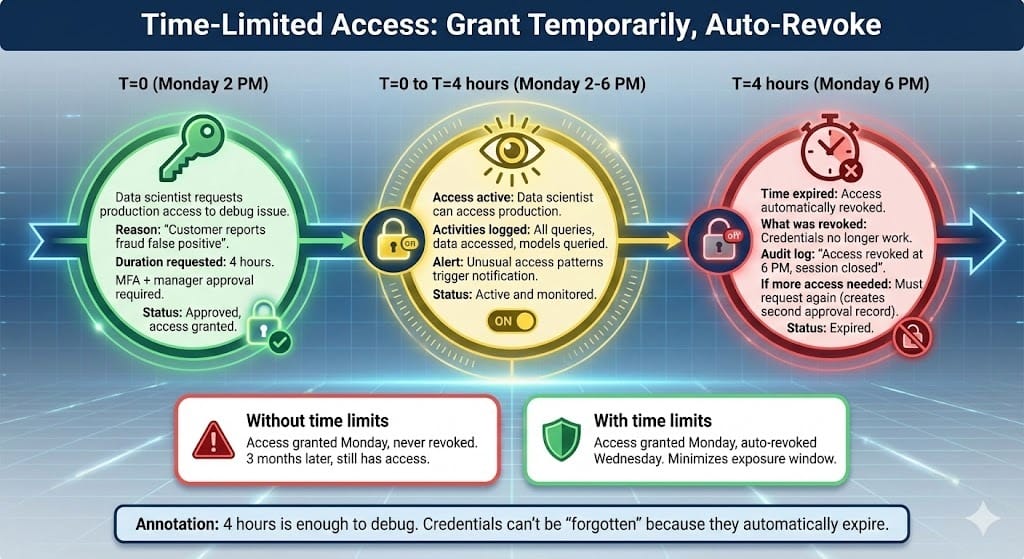

Pattern 3: Time-Limited Access

Don't give permanent access. Give time-limited access.

Example:

Data scientist needs to debug a production issue

Grant access for 4 hours only

After 4 hours, access auto-revokes

If more time needed, request extension (creates audit trail)

Why this matters: Reduces window of exposure. Credentials can't be forgotten because they automatically expire.
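The 4-hour example can be sketched as a grant that carries its own expiry, so revocation needs no one to remember it. The `TemporaryGrant` type and `grant_access` helper are hypothetical. Cloud IAM systems offer their own mechanisms for short-lived credentials.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class TemporaryGrant:
    user: str
    resource: str
    expires_at: datetime

    def is_active(self, now: datetime) -> bool:
        """The grant stops working at expiry with no manual revocation step."""
        return now < self.expires_at


def grant_access(user: str, resource: str, now: datetime, hours: int = 4) -> TemporaryGrant:
    """Issue a grant that expires after the given number of hours (4 by default)."""
    return TemporaryGrant(user, resource, now + timedelta(hours=hours))
```

An extension is simply a new call to `grant_access`, which gives each extension its own entry in the audit trail.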

Common Mistakes

Mistake 1: Trusting Everyone Equally

❌ "Everyone on the team is trustworthy, so everyone should have access"

✅ "Everyone is trustworthy, but their credentials might not be. Limit access anyway."

Stolen credentials don't mean the person is malicious. But an attacker can do damage with that person's access.

Mistake 2: Hardcoding Credentials

❌ Storing API keys in code or config files

✅ Using secrets management (Vault, AWS Secrets Manager)

Credentials committed to git stay in the repository history even after you delete them from the code. Treat them as compromised and rotate them.

Mistake 3: Never Revoking Access

❌ "Alice works here, give her access. When she leaves in two years, nobody remembers to remove it."

✅ Quarterly review of all access. Remove access for people who left.

Former employees still have credentials? Major vulnerability.

Mistake 4: No Audit Logging

❌ "Who accessed the model? We don't have logs."

✅ Every access logged with timestamp, IP address, and action taken

Without logs, you can't investigate. Regulators will ask.

Mistake 5: Same Access for Dev and Prod

❌ One credential works for both dev and production

✅ Separate credentials. Production requires extra authentication

If the dev credential is compromised, at least production stays safe.

Looking Ahead: 2026-2030

2026: Access governance becomes regulatory requirement

Regulators expect documented access controls

Quarterly access reviews become auditable requirement

Institutions without controls face findings

2027-2028: Zero-trust architecture becomes standard

Assume no credential is safe

Every access requires fresh authentication

Fine-grained, just-in-time access (granted for a specific task and a specific time)

2028-2029: Automated access management emerges

Systems automatically detect and revoke stale access

Role changes trigger automatic permission updates

Unused access automatically flagged for review

2030: Identity as security foundation

Cryptographic proof of identity (not passwords)

Access decisions made with real-time risk assessment

"Zero standing privileges" becomes standard (request access as needed)

HIVE Summary

Key takeaways:

Access governance isn't about trust. It's about limiting damage when credentials are compromised (and they will be).

Three levels work: view-only (see metrics), developer (change code), admin (deploy). Most people should be view-only or developer. Very few should be admin.

Four components must work together: identity (who are you), authentication (prove it), authorization (what you can do), audit (what you did).

Separation of duties prevents solo malfeasance: one person writes the change, a second reviews and approves it, a third deploys it, and every action is logged.

Time-limited access reduces exposure: grant access for 4 hours, not forever. Auto-revoke when time expires.

Start here:

If no access controls exist: Start with role definitions today. Who should have what access? Document it.

If informal controls exist: Implement technical controls. Use IAM (AWS/GCP/Azure). Set up MFA for production.

If controls exist but not audited: Run access audit this week. Who still needs access? Remove stale access.

Looking ahead (2026-2030):

Access governance becomes regulatory mandate (not optional best practice)

Zero-trust architecture becomes standard (assume credentials are compromised)

Automated access management reduces manual burden

Cryptographic identity replaces passwords

Open questions:

How often should access be reviewed? Monthly? Quarterly? Annually?

When does "user left company" translate to "access revoked"? Immediately? End of day? End of week?

Can you grant production access without MFA? (Regulators increasingly say no.)

Jargon Buster

Access Governance: System of rules controlling who can access what. Includes identity verification, permission levels, and audit logging. Why it matters in BFSI: Prevents unauthorized access to models and data. Regulatory requirement.

Role-Based Access Control (RBAC): Assigning permissions based on job role, not individual. Data scientist role gets certain access. Analyst role gets different access. Why it matters in BFSI: Scales better than individual permissions. Easier to audit.

Multi-Factor Authentication (MFA): Proving identity with more than one method (password + phone, password + hardware key). Why it matters in BFSI: Single stolen password isn't enough to access production. Attacker needs multiple factors.

Audit Trail: Log of who accessed what, when, and what they did. Why it matters in BFSI: Proves compliance with access controls. Helps investigate incidents. Required for regulatory exams.

Credentials: Proof of identity (password, API key, certificate). Why it matters in BFSI: Stolen credentials = attacker can access your systems. Credentials must be protected and rotated.

Separation of Duties: Different people perform different roles (one writes code, different person deploys). Why it matters in BFSI: Prevents solo malfeasance. Requires conspiracy to break rules.

Zero-Trust Architecture: Security model assuming all credentials could be compromised. Verify everything, trust nothing. Why it matters in BFSI: More secure than "trust internally, verify externally" model.

Time-Limited Access: Access that expires automatically. Request access for 4 hours. After 4 hours, automatically revoked. Why it matters in BFSI: Reduces window of exposure. Prevents "forgotten" access.

Fun Facts

On Forgotten Contractor Access: A major US bank discovered that contractors who had left 18 months earlier still had valid access credentials. The contractors' company had since been sold, so its new owners now held those credentials. The access audit turned up 3 contractor accounts, 5 developer accounts belonging to people who had left, and 2 test accounts that were supposed to have been deleted, among 47 people with some level of access to production models. Regulatory fine: $500K for inadequate access controls. The lesson: quarterly access reviews catch this before regulators do.

On Credential Theft: A financial services company had a data scientist whose laptop was stolen from a coffee shop. The laptop held hardcoded AWS credentials for production. The attacker used them to access models, export customer data, and modify a fraud detection threshold. The company didn't notice for 6 days, until a customer complained about false fraud blocks. The result: a regulatory investigation, credit monitoring offered to customers, and reputation damage. Cost: $2M+. The lesson: hardcoded credentials are compromised the moment the device is lost.

For Further Reading

NIST Cybersecurity Framework Version 1.1: Access Control (National Institute of Standards and Technology, 2018) - https://nvlpubs.nist.gov/nistpubs/cswp/nist.cswp.04162018.pdf - Federal guidance on access governance. Standard reference for what regulators expect.

Zero Trust Architecture (NIST Special Publication 800-207, 2020) - https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-207.pdf - Modern security framework assuming credentials could be compromised. Where industry is moving.

AWS Identity and Access Management Best Practices (Amazon Web Services, 2025) - https://docs.aws.amazon.com/IAM/latest/UserGuide/best-practices.html - Practical guide for implementing RBAC in cloud. Applicable to GCP and Azure too.

HashiCorp Vault for Secrets Management (HashiCorp, 2025) - https://www.vaultproject.io/ - Widely used tool for managing credentials, API keys, and access.

Federal Reserve Guidance on Access Controls (Federal Reserve Board, 2024) - https://www.federalreserve.gov/supervisionreg/srletters/sr2024_guidance.pdf - Regulatory expectations for financial institutions. What examiners audit.

Next up: Week 8 Wednesday explores model lifecycle management with MLflow—how to version models, track what changed between versions, and roll back to previous versions when new ones fail.

This is part of our ongoing work understanding AI deployment in financial systems. If you've implemented access controls and discovered stale access during audit, share what you found and how you cleaned it up.

— Sanjeev @ AITechHive.com