Quick Recap: Most banks have incident response for infrastructure but not for AI failures. A model making biased decisions at scale needs a different playbook than a database outage. Here's how to build one that actually works when regulators are watching.

The Conversation Nobody Wants to Have

Your credit risk model has been running perfectly for 18 months. Then one Tuesday, confidence drops 25% overnight. Not a catastrophe—the systems still work, APIs respond fine, no bugs in the logs. But the model is less sure about its decisions.

Your ML lead's first question: "Should we halt it?"

Your Risk Manager's first question: "Have we notified the regulator?"

Your Compliance Officer's first question: "Is this a fairness issue? Did something change in how we're approving by demographic?"

Your General Counsel's first question: "What do we tell customers who were denied based on this model?"

Notice the problem? Four different stakeholders, four different priorities, no shared playbook.

Here's what most banks do: Emergency Slack call. Someone says "Let's investigate." Someone else says "Maybe we should pause approvals?" Compliance officer is Googling whether this requires regulatory notification. Thirty minutes later, you've made zero decisions but everyone's stressed.

Banks that have figured this out have one thing: A pre-built escalation matrix that removes the guesswork. When alert fires → Classification happens → Action is predetermined. No judgment calls at 10 AM on a Tuesday.

Why AI Incidents Demand Different Responses

Infrastructure incidents are binary. Your database is down or it's up. Your API is responding or it's not. You fix it, verify it works, restore service. Clear cause, clear effect, clear resolution.

AI incidents live in the gray zone.

The model might be running perfectly fine from a systems perspective. Database is healthy. API responds in 45ms. No errors in logs. But the model is making systematically worse decisions. Or it's treating different demographic groups unfairly. Or it's so unconfident that it's basically useless. None of these are system failures. All of them are business failures.

Why this matters in BFSI: Fed guidance (2025) requires banks to identify material AI issues within 24 hours. But here's the kicker: you discover infrastructure incidents because users start complaining, while AI incidents can run silently for weeks. A credit model approving loans it shouldn't? Nobody notices until defaults spike six months later. A fraud model getting less accurate? The fraud team thinks market conditions changed. An embeddings model drifting? Your search results just get worse, gradually.

The other difference: Infrastructure incidents have one audience (ops teams). AI incidents have multiple audiences simultaneously:

Compliance (fairness implications?)

Risk (financial exposure?)

Legal (customer liability?)

Regulators (notification required?)

Business (revenue impact?)

All asking different questions. All needing different answers. All on different timelines.

The Three-Layer Detection System

You can't respond to incidents you don't see. That means automated detection with clear severity signals.

Layer 1: What Gets Monitored

For each production model:

Performance metrics: Accuracy, precision, recall, AUC-ROC (is the model still discriminating correctly?)

Confidence metrics: Average prediction confidence (is the model sure about decisions?)

Distribution shifts: Input feature distributions (are we getting different applicants than in training?)

Fairness metrics: Approval rates by demographic, accuracy by demographic (is something becoming unfair?)

Output drift: Prediction distributions (suddenly approving way more? Or way less?)

Latency: Inference speed (is the model slowing down?)
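The article doesn't say how a drift score is computed. One common choice is the Population Stability Index (PSI), which compares how a feature's values are distributed across buckets in a baseline sample versus a recent sample. The sketch below is illustrative only: the function name `psi`, the equal-width bucketing, and the `eps` floor are assumptions, and the 0.05/0.10 thresholds used later in this piece may not be calibrated for PSI.

```python
import math
from typing import Sequence


def psi(baseline: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index for one numeric feature (hypothetical helper)."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width buckets over the baseline range; fall back to 1.0 if constant.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge buckets
            counts[idx] += 1
        total = len(values)
        # Floor each share at eps so empty buckets don't produce log(0).
        return [max(c / total, eps) for c in counts]

    b, c = bucket_shares(baseline), bucket_shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


training_sample = [float(x) for x in range(100)]
shifted_sample = [x + 50.0 for x in training_sample]
print(psi(training_sample, training_sample))  # identical samples: no drift
print(psi(training_sample, shifted_sample))   # shifted sample: large score
```

In practice you would compute this per feature on a schedule and feed the worst score into the alert thresholds.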

Layer 2: Alert Thresholds

Not all changes are equal. You need severity levels:

GREEN (Normal):

- Accuracy: 88-92%

- Confidence: 75-85%

- Fairness disparity: <2%

- Drift score: <0.05 (minimal change)

YELLOW (Watch):

- Accuracy: 80-88% or 92-96%

- Confidence: 70-75% or 85-90%

- Fairness disparity: 2-5%

- Drift score: 0.05-0.10

RED (Act):

- Accuracy: <80%

- Confidence: <70%

- Fairness disparity: >5%

- Drift score: >0.10

Why this matters: You can't alert on every 1% change. You need signal vs. noise separation. Green is normal statistical variation. Yellow is "pay attention, something's shifting." Red is "stop everything and investigate."
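As a rough sketch, the bands above can be encoded as a small classifier. Two things here are assumptions rather than rules from the table: the overall severity is taken as the worst of the four metrics, and any accuracy or confidence value that sits outside the green band without falling below the red floor (including values above the listed yellow range) is treated as Yellow. All function names are hypothetical.

```python
from enum import IntEnum


class Severity(IntEnum):
    GREEN = 0
    YELLOW = 1
    RED = 2


def band_severity(value: float, green: tuple, red_below: float) -> Severity:
    """For accuracy/confidence: green inside the band, red below the floor, else yellow."""
    lo, hi = green
    if value < red_below:
        return Severity.RED
    if lo <= value <= hi:
        return Severity.GREEN
    return Severity.YELLOW


def ceiling_severity(value: float, yellow_at: float, red_above: float) -> Severity:
    """For fairness disparity and drift score, where higher is worse."""
    if value > red_above:
        return Severity.RED
    if value >= yellow_at:
        return Severity.YELLOW
    return Severity.GREEN


def classify(accuracy: float, confidence: float,
             disparity: float, drift: float) -> Severity:
    # Assumption: the alert takes the worst severity across all metrics.
    return max(
        band_severity(accuracy, (88, 92), 80),
        band_severity(confidence, (75, 85), 70),
        ceiling_severity(disparity, 2, 5),
        ceiling_severity(drift, 0.05, 0.10),
    )


print(classify(accuracy=90, confidence=63, disparity=1.0, drift=0.02).name)
```

Keeping the thresholds as arguments rather than constants makes it easier to tune them per model, which is where most alert-fatigue fixes end up.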

Layer 3: Classification Framework

When an alert fires, you don't immediately panic. You classify the incident on three axes to determine escalation speed.

Axis 1: Autonomy Level (How much does the model decide?)

Level 1: Decision support only. Humans review every decision.

Level 2: Recommended decision. Humans typically approve, but model drives the recommendation.

Level 3: Autonomous decision. Model decides, humans see the outcome logged after the fact.

Level 4: Autonomous + irreversible. Model decides, decision executes immediately, hard to reverse.

Axis 2: Impact Scope (How many decisions/customers?)

Scope A: <1% of daily decisions

Scope B: 1-10% of daily decisions

Scope C: 10-50% of daily decisions

Scope D: >50% of daily decisions

Axis 3: Decision Criticality (How important is this decision?)

Critical: Credit approvals, capital allocations, sanctions screening

High: Fraud alerts, transaction monitoring, anti-money laundering holds

Medium: Customer service routing, product recommendations

Low: UI personalization, content ranking

The combination determines your action:

Level 4 + Scope D + Critical = STOP IMMEDIATELY

Level 4 + Scope C + Critical = HALT WITHIN 1 HOUR

Level 3 + Any + Critical = NOTIFY RISK & LEGAL (within 2 hours)

Level 2 + Any + Any = FLAG & MONITOR (next business day investigation)

Level 1 + Any + Any = LOG & INVESTIGATE (standard priority)
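The combinations above can be sketched as a pair of small functions. This is a minimal illustration, not a complete policy: the function names are hypothetical, the handling of exact boundary values (10%, 50%) is an assumption, and combinations the list doesn't mention return an explicit "not covered" marker instead of guessing.

```python
def scope_from_share(share_of_daily_decisions: float) -> str:
    """Map the affected share of daily decisions (0.0-1.0) to Scope A-D."""
    if share_of_daily_decisions < 0.01:
        return "A"
    if share_of_daily_decisions <= 0.10:  # assumption: exactly 10% counts as B
        return "B"
    if share_of_daily_decisions <= 0.50:  # assumption: exactly 50% counts as C
        return "C"
    return "D"


def action_for(autonomy: int, scope: str, criticality: str) -> str:
    """Apply the five combination rules in the order they are listed."""
    if autonomy == 4 and criticality == "Critical":
        if scope == "D":
            return "STOP IMMEDIATELY"
        if scope == "C":
            return "HALT WITHIN 1 HOUR"
    if autonomy == 3 and criticality == "Critical":
        return "NOTIFY RISK & LEGAL (within 2 hours)"
    if autonomy == 2:
        return "FLAG & MONITOR"
    if autonomy == 1:
        return "LOG & INVESTIGATE"
    # The rules above don't cover every combination; surface that explicitly.
    return "NOT COVERED: consult the escalation matrix"


scope = scope_from_share(0.62)
print(scope, "->", action_for(4, scope, "Critical"))
```

Returning an explicit "not covered" value is a deliberate choice: a silent default would hide exactly the gaps a war game is supposed to find.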

The Escalation Matrix (The Actual Decision Rules)

Here's where the magic happens. This is the table you print, laminate, and put in every incident war room.

| Detection Level | Autonomy | Scope | Criticality | Primary Action | Who Gets Called | Timeline | Key Documentation |
|---|---|---|---|---|---|---|---|
| Red | Level 4 | D | Critical | HALT model immediately. Switch to manual decisions or previous model version. | IC, ML Lead, Risk VP, Compliance | 0-15 min | Incident ticket, action taken, who approved halt |
| Red | Level 4 | C | Critical | PAUSE autonomous decisions. Audit recent predictions before resuming. | ML Lead, Risk Manager | Within 1 hour | Root cause hypothesis, audit results |
| Red | Level 4 | B | Critical | PAUSE new decisions. Investigate root cause same business day. | ML Lead, Risk | Within 4 hours | Investigation summary, remediation proposal |
| Red | Level 3+ | Any | High | NOTIFY Risk & Compliance. Prepare for customer communication if decisions are reversible. | Risk Committee, Compliance, Legal | Within 2 hours | Incident report, customer communication draft |
| Yellow | Level 4 | Any | Critical | INVESTIGATE same shift. Don't halt unless it escalates to Red. | ML Lead, Data Engineer | Within 8 hours | Technical investigation memo |
| Yellow | Level 3 | Any | Any | MONITOR closely. Schedule proper investigation next business day. | ML Lead | Next business day | Investigation ticket, priority flagged |
| Yellow | Level 1-2 | Any | Any | LOG incident. Standard priority investigation. | ML Team | Standard | Incident log entry |
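If you also want the matrix in a form that tooling can query, one option is to store the rows as data and return the first match. The sketch below is an assumption about how that might look, not a prescribed format: the `Row` type, the `lookup` function, and the shortened action strings are hypothetical, "Level 3+" is read as levels 3 and 4, and combinations with no matching row return `None` so a human has to decide.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Row:
    detection: str               # "Red" or "Yellow"
    autonomy: frozenset          # autonomy levels this row applies to
    scope: Optional[str]         # None means "Any"
    criticality: Optional[str]   # None means "Any"
    action: str
    timeline: str


MATRIX = [
    Row("Red", frozenset({4}), "D", "Critical", "HALT model immediately", "0-15 min"),
    Row("Red", frozenset({4}), "C", "Critical", "PAUSE autonomous decisions", "Within 1 hour"),
    Row("Red", frozenset({4}), "B", "Critical", "PAUSE new decisions", "Within 4 hours"),
    Row("Red", frozenset({3, 4}), None, "High", "NOTIFY Risk & Compliance", "Within 2 hours"),
    Row("Yellow", frozenset({4}), None, "Critical", "INVESTIGATE same shift", "Within 8 hours"),
    Row("Yellow", frozenset({3}), None, None, "MONITOR closely", "Next business day"),
    Row("Yellow", frozenset({1, 2}), None, None, "LOG incident", "Standard"),
]


def lookup(detection: str, autonomy: int, scope: str, criticality: str) -> Optional[Row]:
    """Return the first matching row, or None if the matrix has no rule."""
    for row in MATRIX:
        if (row.detection == detection
                and autonomy in row.autonomy
                and row.scope in (None, scope)
                and row.criticality in (None, criticality)):
            return row
    return None


match = lookup("Red", 4, "C", "Critical")
print(match.action, "|", match.timeline)
```

Running every registered model's classification through `lookup` before an incident happens is a cheap way to find combinations the matrix doesn't yet cover.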

The Runbook: What People Actually Do

When the escalation matrix says "Call Risk VP," what happens next? Here's the step-by-step that actually works:

For Incident Commander (First Responder)

When paged (do these steps in order):

Verify the alert is real (2 minutes)

Log into monitoring dashboard

Confirm the metric change yourself (not a dashboard bug)

Note the exact time the alert fired vs. when the issue started

Document in Slack: "Alert verified at 10:47 AM. Confidence dropped from 88% to 63% starting ~10:30 AM"

Gather the facts (3 minutes)

What model? (name, version hash, environment)

What changed? (confidence? fairness? accuracy?)

How long has it been running this way?

How many decisions affected? (Use Scope A-D)

Is the model still running or paused?

Document in template:

MODEL: [Name] | VERSION: [Git hash or version number]

METRIC: [Confidence/Fairness/Accuracy] changed by [amount]%

SINCE: [Time]

SCOPE: [A/B/C/D - est. number of affected decisions]

STATUS: [Still running / Paused / Halted]

Classify using the matrix (2 minutes)

Determine autonomy level (is this a Level 3 or Level 4 model?)

Determine scope (A/B/C/D)

Determine criticality (Critical/High/Medium/Low)

Look up the row in the escalation matrix

Note the timeline and who to call

Execute the immediate action (1 minute)

Red + Critical: Don't ask permission. Halt the model now.

Red + High: Notify stakeholders while investigating.

Yellow: Notify but don't halt.

Green: Log it.

Notify stakeholders (2 minutes)

Post in #ai-incident-response Slack channel with this format:

🚨 AI INCIDENT: [Model Name]

Severity: [Red/Yellow/Green] | Level [1-4] | Scope [A-D] | [Criticality]

Detection: [What changed - confidence/fairness/accuracy]

Action: [Halted/Paused/Investigating/Logged]

Who's involved: [IC, ML Lead, Risk Manager, Compliance]

Next update in: 15 minutes

Success metric: Situation report ready, stakeholders notified, decision made, within 15 minutes of alert.
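To keep the notification format consistent under pressure, the Slack template above can be rendered from structured fields. The sketch below only builds the message text; it does not call any Slack API, and the `IncidentNotice` class, its field names, and the example model name are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class IncidentNotice:
    model: str
    severity: str        # Red / Yellow / Green
    autonomy: int        # 1-4
    scope: str           # A-D
    criticality: str     # Critical / High / Medium / Low
    detection: str       # what changed
    action: str          # Halted / Paused / Investigating / Logged
    involved: List[str] = field(default_factory=list)
    next_update_minutes: int = 15

    def render(self) -> str:
        """Build the message body in the same line order as the template."""
        return "\n".join([
            f"🚨 AI INCIDENT: {self.model}",
            f"Severity: {self.severity} | Level {self.autonomy} "
            f"| Scope {self.scope} | {self.criticality}",
            f"Detection: {self.detection}",
            f"Action: {self.action}",
            f"Who's involved: {', '.join(self.involved)}",
            f"Next update in: {self.next_update_minutes} minutes",
        ])


notice = IncidentNotice(
    model="credit-risk (example name)",
    severity="Red", autonomy=4, scope="B", criticality="Critical",
    detection="Confidence dropped from 88% to 63%",
    action="Paused",
    involved=["IC", "ML Lead", "Risk Manager"],
)
print(notice.render())
```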

For ML Lead (Technical Investigator)

When called on the incident (parallel with IC's actions):

Get situation context from IC (2 minutes)

Alert type, severity, scope, action taken

Ask: "Is this halted already?"

Set up war room (Zoom or Slack thread for coordination)

Investigate the three layers immediately (15-45 minutes):

Data layer (Did input change?):

Query: Feature statistics from last 24 hours vs. baseline

Look for: Nulls spike, outliers, distribution shifts

Talk to data ops: "Did anything change upstream?"

Example: "Feature X is now null 40% of the time" = data quality issue

Model layer (Did the model change?):

Check: Recent deployments/retrains? Model code changes?

Check: Feature calculation pipeline working?

Look for bugs that shipped yesterday or changes that went live

Example: "Model v2.1 deployed 6 hours ago, confidence dropped 2 hours later" = timing correlates

Output layer (What's actually happening?):

Sample recent predictions (10-20 examples)

Compare to baseline from 1 week ago

Check decision thresholds/configs

Example: "Threshold was at 65%, someone changed it to 55%" = configuration issue

Form a hypothesis (by 30-minute mark):

Write one sentence: "The model itself is fine. Feature X went null due to upstream system change."

Or: "Model v2.1 has a bug in feature scaling."

Or: "Market conditions shifted. Model is correctly less confident."

Propose remediation (by 45-minute mark):

If data issue: "Filter null values" or "Revert upstream change"

If model issue: "Rollback to v2.0" or "Hotfix feature scaling"

If expected behavior: "Raise confidence threshold? Accept new distribution?"

Update status (every 30 minutes):

Post in war room: "Investigating [data/model/output]. Hypothesis: [one sentence]. ETA: [time]"

Success metric: Hypothesis with root cause + proposed fix within 1 hour. Confidence that the fix is right.
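The data-layer check in the investigation step above ("Feature X is now null 40% of the time") is easy to script ahead of time. This is a minimal sketch under stated assumptions: records arrive as plain dictionaries, a missing key counts as null (which is what an upstream schema change tends to look like), and the function name and the 10-point `min_increase` default are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Record = Dict[str, Optional[float]]


def null_rate(rows: List[Record], feature: str) -> float:
    """Share of rows where the feature is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(feature) is None) / len(rows)


def null_rate_changes(baseline: List[Record], recent: List[Record],
                      min_increase: float = 0.10) -> Dict[str, Tuple[float, float]]:
    """Return {feature: (baseline_rate, recent_rate)} for features whose
    null rate rose by at least min_increase."""
    # Use the union of keys so a field dropped upstream is still checked.
    features = {k for r in baseline for k in r} | {k for r in recent for k in r}
    flagged = {}
    for f in sorted(features):
        before, after = null_rate(baseline, f), null_rate(recent, f)
        if after - before >= min_increase:
            flagged[f] = (before, after)
    return flagged


# Hypothetical data: "income" disappears from 7 of 20 recent records.
baseline = [{"income": 50.0, "age": 40.0}] * 19 + [{"income": None, "age": 40.0}]
recent = [{"income": 50.0, "age": 40.0}] * 13 + [{"age": 40.0}] * 7
print(null_rate_changes(baseline, recent))
```

A result like this gives the ML Lead a one-sentence hypothesis within minutes, and a concrete question for data ops about what changed upstream.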

For Risk Manager (Business Impact & Governance)

When called on critical incidents:

Assess business impact (15 minutes):

How many customers affected? (Convert Scope A-D into an estimated customer count for your volumes, e.g. Scope A ≈ 100, Scope B ≈ 1,000.)

What type of decisions? (Credit = high impact, fraud = different impact)

Are decisions reversible? (Can we call back denials? Can we refund charges?)

Worst-case financial impact?

Determine regulatory obligation (within 30 minutes):

Is this material? (Fed considers material = potential regulatory concern)

Does it require 24-hour notification? (Only if material + autonomous decision)

Do we need customer communication? (If decisions were unfair/discriminatory)

Decision: Notify regulator now? Monitor and update within 24 hours? No notification needed?

Approve or reject remediation (within 1-2 hours):

ML Lead proposes a fix

Risk Manager evaluates: Is this fix acceptable?

Question: Does it restore fairness? Does it fix the accuracy problem?

Options: Approve → Resume model. Reject → Rollback or escalate further.

For critical incidents, you might require: "Third-party validation before resume."

Document decisions (within same day):

Create incident record (date, model, issue, root cause, action taken)

Update model risk register

Schedule post-mortem meeting

Common Scenarios & Exact Responses

Scenario 1: Confidence Drops 30%

Alert fires: Red | Level 4 | Scope B | Critical

IC action: Pause new approvals immediately (5 min)

ML investigation: Check for data quality issues or recent changes (30 min)

Hypothesis: Feature X null rate jumped from 5% to 35%

Root cause: Upstream system schema change (missing field)

Remediation: Apply data filter (exclude records with null Feature X), resume

Timeline: Full resolution by 1 hour

Risk assessment: No regulatory notification (data quality fix is operational, not material)

Scenario 2: Fairness Disparity Jumps from 2% to 8%

Alert fires: Red | Level 4 | Scope C | Critical

IC action: Pause new approvals immediately (5 min)

ML investigation: Run demographic breakdown analysis (30 min)

Discovery: Approval rate for Female applicants dropped from 50% to 40%. Male stayed at 50%.

Root cause: Retraining happened yesterday on data with more defaults in Female segment (legitimate risk or bias?)

Risk assessment: Potential discrimination issue. Notify Legal immediately.

Regulatory angle: This requires 24-hour notification to Fed if not resolved.

Decision: Revert model to v2.0 (known to be fair) OR retrain with fairness constraints

Timeline: Critical resolution, legal approval required before resume
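The demographic breakdown in this scenario boils down to comparing approval rates per group. The article doesn't define how its fairness disparity figure is calculated, so the sketch below assumes one simple definition: the absolute gap between the highest and lowest group approval rates. Other definitions (for example, a ratio of rates) are also used in practice. The function names, group labels, and counts are hypothetical.

```python
from typing import Mapping, Sequence


def approval_rate(decisions: Sequence[bool]) -> float:
    """Share of decisions that were approvals."""
    return sum(decisions) / len(decisions)


def approval_rate_gap(groups: Mapping[str, Sequence[bool]]) -> float:
    """Assumed disparity measure: highest minus lowest group approval rate."""
    rates = [approval_rate(d) for d in groups.values()]
    return max(rates) - min(rates)


# Hypothetical counts chosen to produce an 8-point gap.
decisions_by_group = {
    "group_a": [True] * 46 + [False] * 54,   # 46% approved
    "group_b": [True] * 38 + [False] * 62,   # 38% approved
}
gap = approval_rate_gap(decisions_by_group)
print(f"Approval rate gap: {gap:.0%}")
```

Whatever definition you choose, write it into the model registry next to the threshold, so the number on the alert and the number in the audit mean the same thing.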

Scenario 3: Accuracy Drops Slowly (Caught in Monitoring)

Alert fires: Yellow | Level 3 | Scope B | Medium

IC action: Notify ML Lead, flag for investigation (doesn't require immediate halt)

ML investigation: Compare model accuracy on recent data vs. training data

Discovery: Model accuracy on data from past 30 days is 89%, was 94% in training

Root cause: Market conditions shifted (different applicant profile, not model bug)

Decision: This is expected. But do we keep it running at 89% or retrain?

Timeline: Investigation by end of day, decision by next morning

Building the Runbook: Implementation Checklist

Step 1: Document Your Models (Weeks 1-2)

For each production model, you need:

Model name, version, what it does

Autonomy level (1-4)

Impact scope (A-D)

Criticality level (Critical/High/Medium/Low)

Owner (who owns it day-to-day?)

Key metrics to monitor

Alert thresholds (Green/Yellow/Red)

Backup/fallback option (what happens if model fails?)

This table goes into a model registry that is accessible during an incident.
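One way to make those registry fields hard to get wrong is to validate them when an entry is created. The sketch below is illustrative: the `ModelRegistryEntry` class, its field names, and the example values are assumptions, and a real registry would more likely live in a database or an existing model-management tool.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

VALID_SCOPES = ("A", "B", "C", "D")
VALID_CRITICALITY = ("Critical", "High", "Medium", "Low")


@dataclass(frozen=True)
class ModelRegistryEntry:
    name: str
    version: str
    purpose: str
    autonomy_level: int                 # 1-4
    impact_scope: str                   # A-D
    criticality: str                    # Critical/High/Medium/Low
    owner: str
    fallback: str                       # what happens if the model is halted
    key_metrics: Tuple[str, ...] = ()
    alert_thresholds: Dict[str, dict] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject entries the escalation matrix could not classify.
        if self.autonomy_level not in (1, 2, 3, 4):
            raise ValueError("autonomy_level must be 1-4")
        if self.impact_scope not in VALID_SCOPES:
            raise ValueError("impact_scope must be A-D")
        if self.criticality not in VALID_CRITICALITY:
            raise ValueError("criticality must be Critical/High/Medium/Low")
        if not self.fallback:
            raise ValueError("every production model needs a documented fallback")


entry = ModelRegistryEntry(
    name="credit-risk (example name)", version="v2.1",
    purpose="Scores consumer credit applications",
    autonomy_level=4, impact_scope="C", criticality="Critical",
    owner="ML Lead", fallback="Roll back to v2.0 or route to manual review",
    key_metrics=("accuracy", "confidence", "fairness_disparity", "drift_score"),
)
print(entry.name, entry.autonomy_level, entry.impact_scope, entry.criticality)
```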

Step 2: Build Automated Detection (Weeks 3-6)

Set up monitoring (Prometheus/Grafana for metrics)

Configure alerts (PagerDuty, email, Slack integration)

Test alerts with dry runs

Ensure stakeholders can access dashboards in real-time

Establish alert delivery (who gets paged? Slack? Email? Phone?)

Step 3: Create Incident Response Infrastructure (Weeks 7-8)

Slack channel: #ai-incident-response (monitored 24/7)

Runbook in shared wiki (not someone's Notion page)

On-call rotation with clear handoffs

Escalation contact list (who to call if things get worse?)

War room setup (Zoom, Slack, docs all ready to go)

Step 4: Run War Games (Week 9)

Simulate incidents quarterly

Test escalation matrix in real scenario

Find gaps ("Oh, we never defined who Risk Manager is")

Update runbook based on learnings

Train new team members by doing a simulation

Step 5: Train & Document (Week 10)

All on-call team members get trained (2-hour session)

Walk through each role's responsibilities

Practice the decision-making under time pressure

Create quick-reference cards for each role

Share post-mortem learnings with team

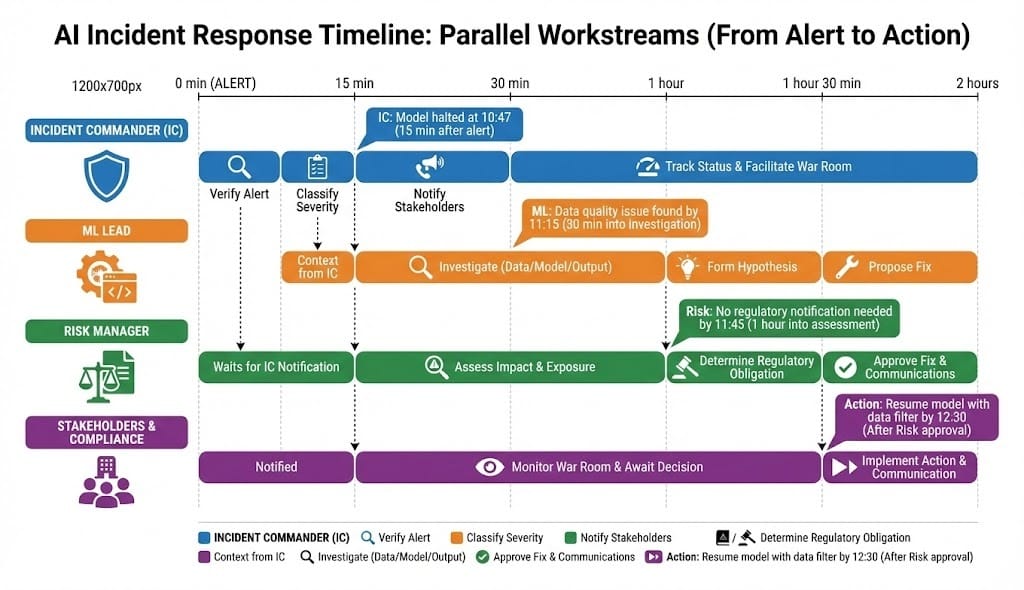

Runbook Checklist: From Alert to Resolution

A single-page action checklist for the Incident Commander (laminate-ready), top to bottom:

Phase 1: Detection (0-5 min)

☐ Alert received, verify it's real

☐ Note time alert fired and time issue started

☐ Get model name, version, what changed

☐ Estimate scope (A/B/C/D) and autonomy level

Phase 2: Classification (5-10 min)

☐ Determine autonomy level (1/2/3/4) — look up in model registry

☐ Determine criticality (Critical/High/Medium/Low) — look up in model registry

☐ Cross-reference escalation matrix for action

☐ Execute action (Halt/Pause/Investigate/Log)

Phase 3: Notification (10-15 min)

☐ Post in #ai-incident-response with template format

☐ Call team members based on escalation matrix

☐ Assign investigation to ML Lead

☐ Assign impact assessment to Risk Manager

Phase 4: Investigation (15-60 min)

☐ ML: Check data quality (nulls, outliers, shifts)

☐ ML: Check model (deployments, code changes, configs)

☐ ML: Check outputs (actual decisions, thresholds)

☐ Form hypothesis with root cause

☐ Propose remediation

Phase 5: Decision (60-120 min)

☐ Risk Manager: Assess business impact

☐ Risk Manager: Determine regulatory obligation

☐ Risk Manager: Approve or reject remediation

☐ Execute remediation or escalate

Phase 6: Closure (same day)

☐ Document incident (date, model, root cause, action)

☐ Update model risk register

☐ Schedule post-mortem meeting

☐ Notify stakeholders of resolution

Contact card (bottom of sheet):

Incident Commander on-call: [Phone/Slack]

ML Lead on-call: [Phone/Slack]

Risk Manager on-call: [Phone/Slack]

Escalation if all unreachable: [CFO/COO number]

Looking Ahead (2026-2030)

2026-2027: Regulators shift from "Do you have an incident response plan?" to "Show us your metrics."

Fed wants to see: Average detection time, average resolution time, regulatory notification accuracy

Your runbook becomes compliance evidence. You'll present incident metrics in exams.
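If detection and resolution times are going to be reported, it helps to capture three timestamps per incident from day one. The sketch below shows one way the averages might be computed; the `IncidentRecord` type, the field names, and the example timestamps are hypothetical, and how an exam would actually define these metrics is not something this article specifies.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Dict, List


@dataclass(frozen=True)
class IncidentRecord:
    started: datetime    # when the issue began (often estimated afterwards)
    detected: datetime   # when the alert was verified
    resolved: datetime   # when remediation was approved and applied


def response_metrics(incidents: List[IncidentRecord]) -> Dict[str, float]:
    """Average minutes from start to detection, and detection to resolution."""
    return {
        "mean_minutes_to_detect": mean(
            (i.detected - i.started).total_seconds() / 60 for i in incidents),
        "mean_minutes_to_resolve": mean(
            (i.resolved - i.detected).total_seconds() / 60 for i in incidents),
    }


history = [
    IncidentRecord(datetime(2025, 3, 4, 10, 30), datetime(2025, 3, 4, 10, 47),
                   datetime(2025, 3, 4, 11, 47)),
    IncidentRecord(datetime(2025, 6, 9, 14, 0), datetime(2025, 6, 9, 14, 43),
                   datetime(2025, 6, 9, 16, 43)),
]
print(response_metrics(history))
```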

2027-2028: AI incidents become more common, not less.

50% more models in production by 2028 (industry growth)

More models = more drift, more fairness issues, more incidents

Banks with best incident response will have competitive advantage (regulators trust them faster for new models)

2028-2030: Automation in incident response increases.

Auto-pause triggers when Red alert fires (no human decision needed for autonomy level 4)

Auto-rollback to previous model version

Automated fairness remediation (retrain with constraints)

But: Human approval is still required for all critical decisions. Regulators won't accept "the system paused itself."

HIVE Summary

Key takeaways:

AI incidents require different escalation than infrastructure incidents. A 25% overnight drop in confidence isn't the same as a database outage: it's a business failure, not a system failure.

Pre-built escalation matrix removes judgment calls. Alert fires → Classification happens → Action is predetermined. No 30-minute emergency calls trying to figure out what to do.

Three axes determine escalation: Autonomy level (how much does the model decide?), Impact scope (how many decisions?), and Criticality (how important?). Combination determines timeline and who gets involved.

Detection must be automated with clear thresholds (Green/Yellow/Red). Humans can't monitor 20 models simultaneously. Alerts must be tuned to signal vs. noise.

Incident Commander role is critical—someone owns the first 15 minutes and makes the initial decision (halt/pause/investigate). Everything else flows from there.

Start here:

If you have production AI models but no incident response: Audit your models first. Document autonomy level, scope, criticality for each. That's the foundation.

If you have alerts but no escalation rules: Build your escalation matrix this month. Laminate it. Put it in your war room. Share with on-call team.

If you've had an AI incident: Post-mortem isn't about blame. It's about building a runbook so the same incident doesn't happen twice. Do that immediately.

Looking ahead (2026-2030):

Fed increasingly expects incident response metrics. Detection time, resolution time, notification accuracy. These become compliance KPIs.

Auto-pause and auto-remediation will emerge, but human judgment remains for critical decisions.

Banks with mature incident response will deploy AI faster (regulators trust them more).

Open questions:

How do you define "material issue" requiring regulatory notification? (Fed says 24 hours, but what triggers that clock?)

When a model is paused, should it fall back to a previous version or manual decision-making? (Different banks choose differently.)

How do you prevent alert fatigue when 20+ models all have yellow alerts? (Aggregation and prioritization are active research problems.)

Jargon Buster

Escalation Matrix: A table mapping alert severity + incident characteristics to specific actions and stakeholders. Why it matters in BFSI: Regulators expect clear decision rules, not judgment calls. A documented matrix shows auditors that you think systematically about AI incidents.

Drift Detection: Automated monitoring that flags when model inputs (features) or outputs (predictions) change significantly from training baseline. Why it matters in BFSI: Fed guidance requires "continuous monitoring" of models. Drift detection is how you do continuous monitoring without 24/7 manual reviews.

Autonomy Level: Classification of how much a model decides independently vs. requiring human review. Level 1 = human always reviews. Level 4 = model decides autonomously. Why it matters in BFSI: Level 4 incidents need faster response (decisions executing immediately). Level 1 incidents can wait until next business day (humans review anyway).

Root Cause Analysis: Investigation answering "why did this happen?" not just "what happened?" Example: Model confidence dropped (what) because feature X went null due to upstream schema change (why). Why it matters in BFSI: Regulators ask "did you understand what broke?" Not understanding root cause means you can't prevent it again.

False Positive Alert: Alert that triggers without a real incident. Example: Model confidence drops 8% because applicant pool shifted (normal), not because model broke. Why it matters in BFSI: Too many false positives = teams ignore alerts = you miss real incidents. Alert thresholds must be carefully tuned.

War Room: Temporary team assembled for serious incidents: Incident Commander, ML Lead, Risk Manager, sometimes Legal. They coordinate in a Slack channel or on a Zoom call. Why it matters in BFSI: Serious AI incidents need real-time coordination. Communication delays = longer resolution = bigger business impact.

Regulatory Notification: Telling your regulator (Fed, OCC, EBA, FCA) about a material incident. Why it matters in BFSI: Fed expects 24-hour notification for material issues. Failing to notify = additional violation on top of the original incident. Being slow = "we didn't take this seriously" signal.

Runbook: Written, step-by-step procedure showing what to do when an incident happens. Not a general guide, but specific actions: "When this alert fires, do X, Y, Z in that order." Why it matters in BFSI: At 3 AM, people don't think clearly. A runbook removes the guesswork: follow the steps. Regulators expect documented runbooks, not ad-hoc responses.

Fun Facts

On Alert Fatigue: A major US bank set up 47 separate model monitoring alerts across credit, fraud, and compliance systems. Within 3 months, 62% of alerts were ignored because they fired constantly on non-critical changes. They discovered that 78% of alerts could be consolidated into 5 meaningful thresholds. The lesson: More alerts ≠ more insight. Fewer, better-tuned alerts catch more real incidents because teams actually pay attention.

On Fairness Incident Response: One bank discovered their loan denial model had a 9% fairness disparity (women getting denied more than men) during a quarterly audit—but their monitoring system had been running for 6 months without flagging it. Root cause: They measured "monitoring dashboard accuracy" (is the dashboard showing correct numbers?), not "model fairness" (is the model making fair decisions?). They added direct model behavior checks after that. Lesson: Monitor the monitor. Your monitoring system can have bugs too.

For Further Reading

NIST Computer Security Incident Handling Guide, SP 800-61 Rev. 2 (NIST, 2012) | https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-61r2.pdf | Government playbook for incident response. Mostly IT-focused, but escalation matrix approach applies to AI incidents.

Fed Guidance on Model Risk Management (Federal Reserve, 2025) | https://www.federalreserve.gov/publications/files/bcreg20250124a.pdf | Specific requirements for model monitoring, documentation, and regulatory notification. Core compliance reading.

EBA Guidelines on AI Governance (European Banking Authority, 2026) | https://www.eba.europa.eu/regulation-and-policy/artificial-intelligence/guidelines-artificial-intelligence-governance | European expectations for AI incident handling and monitoring requirements.

Building Observable ML Systems (Google Research + Netflix, 2025) | https://research.google/blog/detecting-drift-in-ml-systems/ | Technical deep-dive on drift detection, monitoring architecture, and production patterns at scale.

Post-Mortem Culture and Blameless Incident Analysis (Google SRE Book, adapted for ML 2024) | https://sre.google/books/ | How to run effective post-mortems that drive improvement, not blame. Critical for building psychological safety in incident response.

Next up: Week 17 Sunday dives into "How Risk Committees Interpret AI Outputs"—because your beautiful incident response matrix means nothing if the risk committee doesn't trust it or understand what they're looking at.

This is part of our ongoing work understanding AI deployment in financial systems. If you're building runbooks or rebuilding incident response for AI models, share your experience—what worked, what didn't, what regulatory feedback you got?