Quick Recap: Models trained on historical data assume tomorrow looks like yesterday. When customer behavior, economic conditions, or fraud patterns change, the data distribution shifts. Your model's accuracy degrades. Regulators expect you to detect this shift, understand why it happened, and respond with model retraining or manual review. Distribution shift isn't a bug—it's inevitable. The question is whether you detect it and respond before regulators notice.

It's November 2025. Your credit scoring model has been in production for three years. It's performed excellently—89% AUC, stable performance, regulatory approval. No one has complained.

Then interest rates spike unexpectedly. The Fed signals recession concerns. Your risk officer pulls recent performance data.

He finds: The model is degrading.

Three years ago: 89% AUC

Six months ago: 87% AUC

Last month: 84% AUC

This week: 82% AUC

The degradation accelerated over the past month. Your model was trained on 2021-2022 data (post-pandemic recovery, low rates). It learned those patterns. Now in late 2025, macroeconomic conditions have shifted dramatically. Interest rate sensitivity changed. Employment stability changed. Default correlations evolved.

Your model's decision logic is outdated.

Compliance asks: "How long has the model been degrading? When will you retrain? Did you deny customers unfairly using degraded predictions?"

You pull your monitoring logs. The steep decline started eight weeks ago. You didn't notice because you were checking metrics monthly, and each month's change looked subtle (1-2 points of AUC). By the time you detected it, fifty thousand customer decisions had been made with a weakening model.

Regulatory liability: significant.

This is distribution shift in 2025. The macroeconomic world changed. Your model didn't. Performance degrades. Regulatory consequences follow.

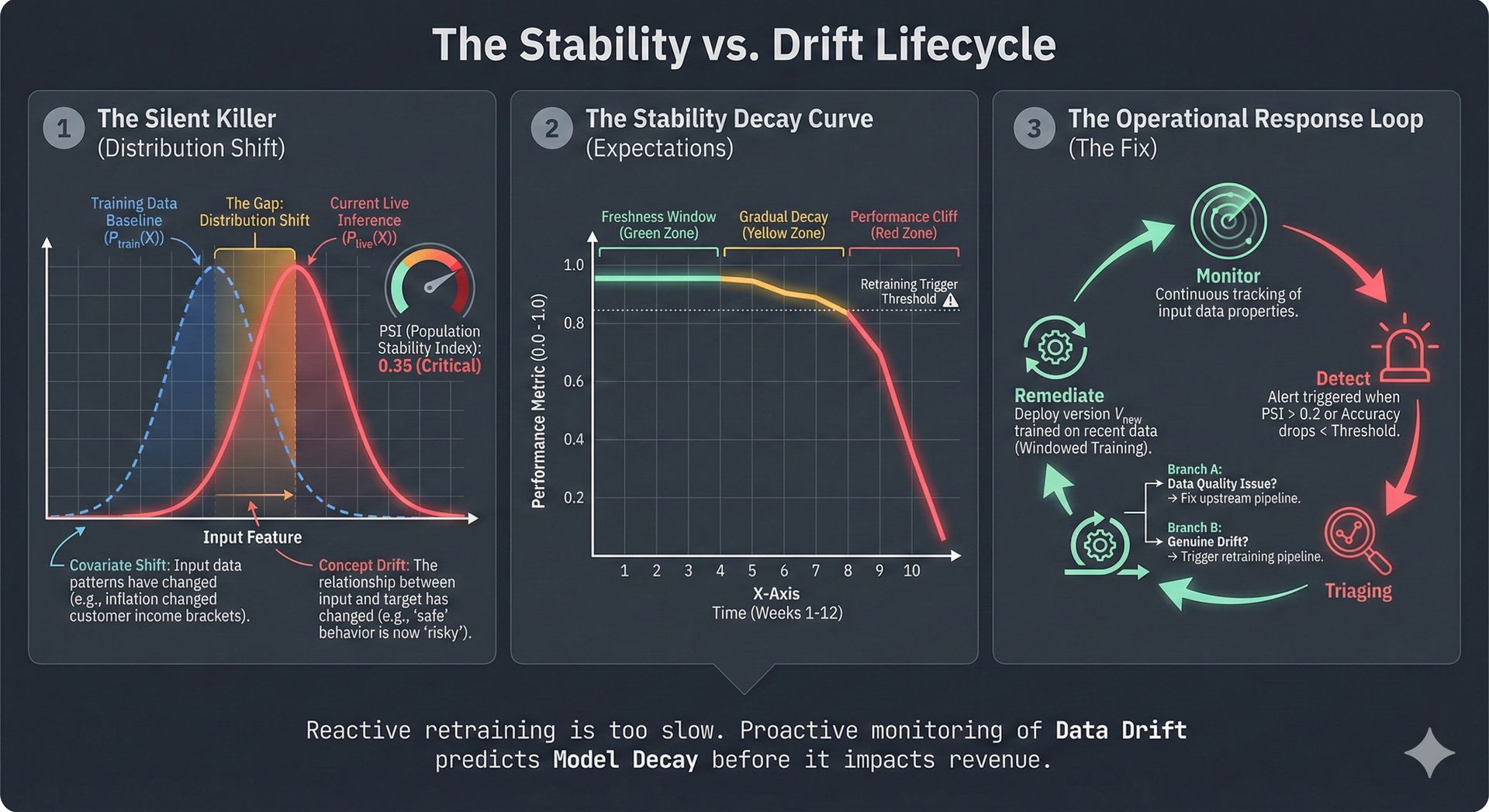

What Distribution Shift Actually Means

In statistics, "distribution" describes the pattern of data. If you train a model on data from 2021-2022, you're learning: "When unemployment is X, interest rates are Y, and credit score is Z, default probability is W."

Distribution shift happens when the relationship changes.

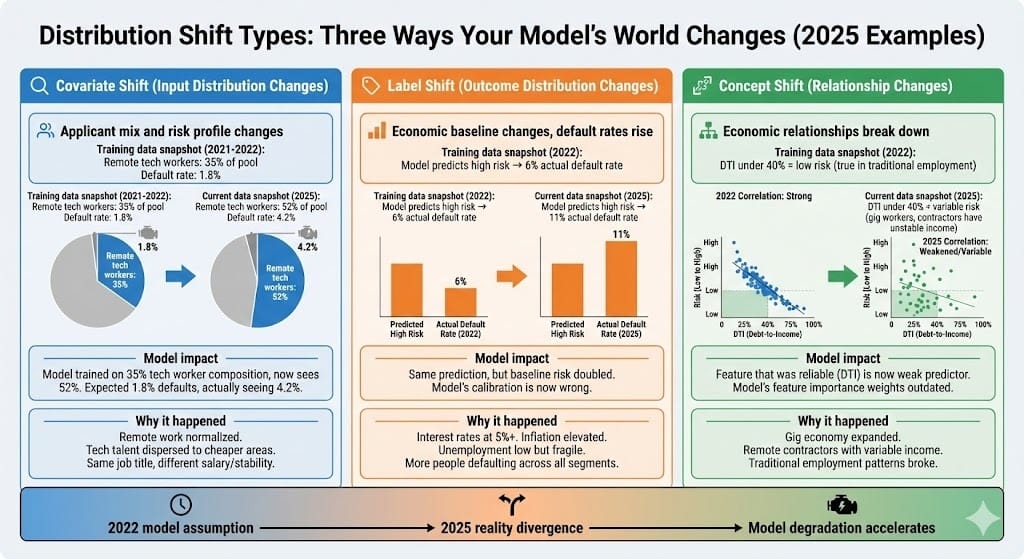

Example 1: Covariate Shift (Input Distribution Changes)

Training data (2021-2022): Remote tech workers are 35% of applicants, default rate 1.8%

Current data (2025): Remote tech workers are 52% of applicants, default rate 4.2%

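Why: After four years, permanent remote work is normal and tech talent has dispersed to lower cost-of-living areas. The change in applicant mix (35% to 52%) is the covariate shift itself. The higher default rate within the same segment, driven by location and salary compression, means the feature-outcome relationship has also moved, which is strictly concept shift (Example 3). In practice the two often arrive together.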

Example 2: Label Shift (Outcome Distribution Changes)

Training data (2022): When model predicts "high default risk," actual default happens 6% of the time

Current data (2025): When model predicts "high default risk," actual default happens 11% of the time

Why: Rising interest rates and inflation increased defaults across all segments, so the baseline default rate (the label distribution) moved. The model's "high default risk" bucket is now miscalibrated: the same prediction corresponds to a higher actual risk.

Example 3: Concept Shift (Relationship Changes)

Training data (2022): Debt-to-income ratio under 40% reliably predicted low default risk

Current data (2025): Debt-to-income ratio is less predictive. Gig economy workers with variable income but low DTI show higher defaults. Traditional employment patterns broke down.

Why: The business logic changed. The economic relationship between features and outcomes evolved due to structural labor market changes.

All three are "distribution shift." All three cause model degradation.
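One common way to put a number on the input-mix change in Example 1 is the Population Stability Index (PSI). The sketch below is a minimal illustration, not a prescribed method: the function name `psi`, the category labels, and the epsilon floor are assumptions, and the shares are the 35% and 52% figures from the example above. A frequently quoted industry rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but those cutoffs are conventions rather than regulatory requirements.

```python
import math

def psi(baseline_shares, current_shares, eps=1e-6):
    """Population Stability Index between two categorical distributions.

    Each argument maps a category name to its share of applicants (summing to 1).
    """
    total = 0.0
    for category in baseline_shares.keys() | current_shares.keys():
        # Floor each share at eps so a missing category does not produce log(0).
        b = max(baseline_shares.get(category, 0.0), eps)
        c = max(current_shares.get(category, 0.0), eps)
        total += (c - b) * math.log(c / b)
    return total

# Applicant mix from Example 1 (category names are hypothetical).
baseline = {"remote_tech": 0.35, "other": 0.65}
current = {"remote_tech": 0.52, "other": 0.48}

drift = psi(baseline, current)
print(f"PSI = {drift:.3f}")
```

PSI only measures how far the input mix has moved. It says nothing about whether default rates within a segment changed, so it complements performance metrics rather than replacing them.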

Why Models Degrade Over Time

Three fundamental reasons:

Reason 1: The World Changes (Macroeconomic and Structural)

Since 2022:

Interest rates rose from near-zero to 5%+

Inflation peaked at 9%, stabilizing but still elevated

Labor markets evolved (remote work normalized, gig economy expanded)

Consumer behavior shifted (buy-now-pay-later adoption, digital wallets)

Regulatory environment tightened (AI governance, data privacy)

Your model learned "the world of 2021-2022." It cannot predict "the world of late 2025."

Reason 2: Feedback Loops Create Drift

Your model denies risky customers

Over time, only safe customers get approved

Your training data becomes biased (you never observe defaults among denied customers)

Model trains on survivorship bias, not true underlying risk

Degradation accelerates as you make more denial decisions

Eventually: model reflects your approval decisions, not actual credit risk
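A small simulation makes the survivorship effect concrete. This is a toy sketch under stated assumptions: true default probabilities are drawn uniformly between 0% and 30%, the "model" is idealised and approves anyone with a true probability below 10%, and the function name and parameters are hypothetical. In a real portfolio the outcomes of denied applicants are never observed; the simulation can see them only because it generates them.

```python
import random

def simulate_selection_bias(n_applicants=20000, approve_below=0.10, seed=7):
    """Compare the default rate of all applicants with that of approved ones."""
    rng = random.Random(seed)
    population_defaults = 0
    approved = 0
    approved_defaults = 0
    for _ in range(n_applicants):
        true_pd = rng.uniform(0.0, 0.30)      # hypothetical true default probability
        defaulted = rng.random() < true_pd     # outcome if the loan were granted
        population_defaults += defaulted
        if true_pd < approve_below:            # idealised model approves low risk only
            approved += 1
            approved_defaults += defaulted
    return population_defaults / n_applicants, approved_defaults / approved

population_rate, approved_rate = simulate_selection_bias()
print(f"default rate, all applicants: {population_rate:.3f}")
print(f"default rate, approved only:  {approved_rate:.3f}")
```

A model retrained only on the approved group sees the lower rate and has no examples from the riskier part of the population, which is the bias described in the list above.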

Reason 3: Adversarial Adaptation

For fraud detection specifically:

Fraudsters observe your model's patterns

They adapt techniques to evade detection

Your model built on 2023 fraud tactics (card testing, synthetic identity)

2025 fraudsters use evolved tactics (account takeover via AI, deepfake verification bypass)

Model fails against evolved threats

All three are inevitable. Not if, but when.

What Regulators Expect (The Governance Framework)

Regulators don't expect your model to be perfect forever. They expect you to:

1. Monitor Performance Continuously

Measure accuracy weekly or monthly (not annually)

Compare to baseline (training performance)

Flag if performance degrades beyond acceptable threshold (e.g., 2-3% AUC drop)

Track multiple metrics (not just AUC—precision, recall, fairness metrics)

2. Understand Why Performance Changed

When degradation is detected, investigate immediately

Is it natural variation or systematic shift?

Are specific customer segments affected disproportionately?

Did external conditions change (economy, competitor behavior, regulatory changes)?

Document root cause analysis

3. Respond Appropriately

Minor degradation (1-2% drop): Add monitoring, document observation, plan investigation

Moderate degradation (3-5% drop): Pause new deployments, plan retraining, increase manual review

Severe degradation (5%+ drop): Immediately reduce model reliance, activate manual review for all decisions, escalate to risk committee

4. Document Everything

When degradation was first detected

Root cause analysis (what changed in the world?)

Actions taken (monitoring, retraining, manual review activation)

Performance before and after corrective actions

Timeline for model refresh

Regulators audit this documentation during examinations. Your monitoring log is your legal defense.
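The first expectation, measuring performance against a baseline and flagging a drop beyond a threshold, can be sketched in a few lines. This is a minimal illustration rather than a production monitor: the function names `auc` and `degradation_alert` are hypothetical, the 0.02 default threshold is taken from the low end of the 2-3% example above, and a real pipeline would more likely rely on a library implementation such as scikit-learn's `roc_auc_score`.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison (ties count as half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def degradation_alert(baseline_auc, current_auc, max_drop=0.02):
    """Return (flagged, drop), where flagged is True if the drop exceeds max_drop."""
    drop = baseline_auc - current_auc
    return drop > max_drop, drop

# Tiny worked example: defaults are labelled 1, scores are predicted risk.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auc(labels, scores))  # 0.75

# Baseline and current values from the opening scenario (89% then 84%).
flagged, drop = degradation_alert(0.89, 0.84)
print(flagged, round(drop, 3))
```

The pairwise AUC is quadratic in sample size, which is fine for a sketch but too slow for large weekly batches; a rank-based implementation gives the same number in near-linear time.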

Deep Dive: Detecting and Responding to Drift

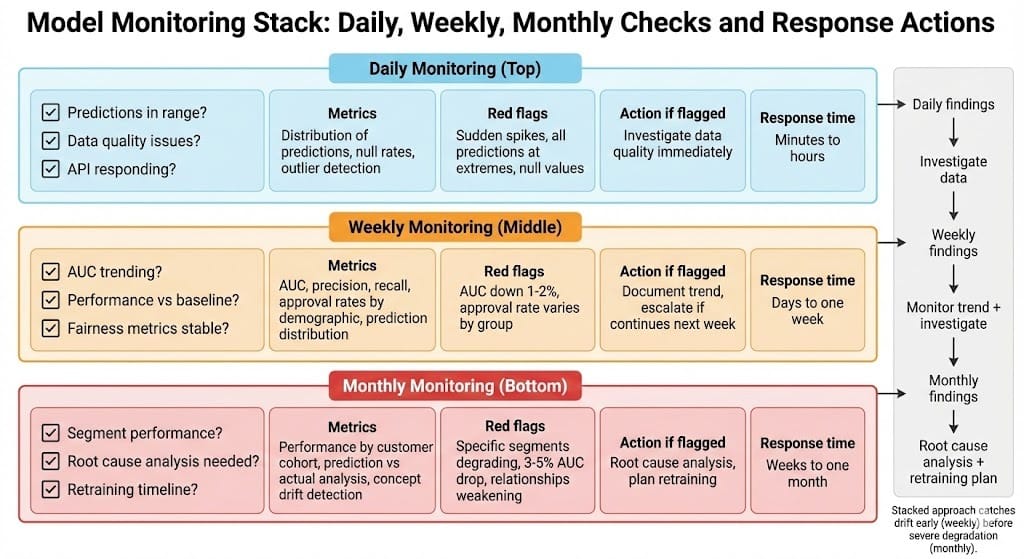

The Monitoring Stack Teams Use

Production teams monitor across three time horizons:

Daily Monitoring: Quick Sanity Checks

Are predictions in expected range?

Are there obvious data quality issues (nulls, outliers)?

Is the API responding normally?

Action if flagged: Investigate data quality. Don't change model.
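The daily checks above are cheap enough to automate. The following is a rough sketch, assuming the model outputs a default probability between 0 and 1 and that a missing score arrives as `None`. The function name, the 1% null tolerance, and the expected range for the mean score are all hypothetical values to be replaced with ones derived from your own baseline.

```python
def daily_sanity_check(scores, max_null_rate=0.01, expected_mean_range=(0.02, 0.15)):
    """Return a list of human-readable issues found in one day's predictions."""
    if not scores:
        return ["no predictions received"]
    issues = []
    valid = [s for s in scores if s is not None]
    null_rate = 1 - len(valid) / len(scores)
    if null_rate > max_null_rate:
        issues.append(f"null rate {null_rate:.1%} exceeds {max_null_rate:.1%}")
    if any(not 0.0 <= s <= 1.0 for s in valid):
        issues.append("score outside [0, 1]")
    if valid:
        mean = sum(valid) / len(valid)
        low, high = expected_mean_range
        if not low <= mean <= high:
            issues.append(f"mean score {mean:.3f} outside expected range")
    return issues

print(daily_sanity_check([0.05, 0.08, 0.10]))   # no issues
print(daily_sanity_check([0.05, None, 1.2]))    # three issues
```

Consistent with the action above, a non-empty result points to a data pipeline investigation, not a model change.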

Weekly Monitoring: Performance Trending

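Measure AUC, precision, recall on recent production decisions whose outcomes are now known (a static hold-out set from training time cannot reveal drift)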

Compare to baseline (model training performance)

Track fairness metrics (approval rates by demographic)

Action if flagged: Document trend. If degradation continues, escalate to risk team.

Monthly Monitoring: Deep Dive Analysis

Segment performance by customer cohort (age, geography, income)

Check for segment-specific degradation

Analyze prediction distribution (is model shifting its decision boundary?)

Review actual defaults vs predicted defaults

Action if flagged: Root cause analysis. Plan retraining timeline.
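The monthly comparison of actual versus predicted defaults, split by cohort, might look like the sketch below. It assumes each record carries a segment label, the model's predicted default probability, and the observed outcome. The function name, the segment names, and the sample numbers are illustrative only.

```python
from collections import defaultdict

def calibration_by_segment(records):
    """records: iterable of (segment, predicted_pd, defaulted) tuples.

    Returns, per segment, the mean predicted default probability, the
    observed default rate, and the gap between them (actual - predicted).
    """
    sums = defaultdict(lambda: [0, 0.0, 0])  # count, sum of predictions, defaults
    for segment, predicted_pd, defaulted in records:
        entry = sums[segment]
        entry[0] += 1
        entry[1] += predicted_pd
        entry[2] += int(defaulted)
    return {
        seg: {
            "n": n,
            "predicted": pred_sum / n,
            "actual": defaults / n,
            "gap": defaults / n - pred_sum / n,
        }
        for seg, (n, pred_sum, defaults) in sums.items()
    }

# Hypothetical cohort data: the model predicts 5% for everyone.
records = (
    [("w2", 0.05, False)] * 19 + [("w2", 0.05, True)] * 1
    + [("gig", 0.05, False)] * 17 + [("gig", 0.05, True)] * 3
)
report = calibration_by_segment(records)
for segment, row in report.items():
    print(segment, f"predicted={row['predicted']:.2f} actual={row['actual']:.2f}")
```

A large positive gap in one segment while the others stay near zero is the segment-specific degradation the monthly review is meant to catch; an aggregate metric can hide it.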

When to Retrain (Decision Framework)

Scenario 1: Minor Degradation (1-2% AUC drop)

Action: Add weekly monitoring. Plan investigation.

Retraining timeline: Next quarter (batched with other model updates)

Customer impact: None. Model still acceptable.

Scenario 2: Moderate Degradation (3-5% AUC drop)

Action: Pause new model deployments. Increase manual review threshold (e.g., review 20% of marginal cases).

Retraining timeline: Next 4 weeks (priority project)

Customer impact: Some applicants get manual review instead of automated decisions.

Scenario 3: Severe Degradation (5%+ AUC drop)

Action: Immediately reduce model reliance. Return to manual review for all decisions. Escalate to chief risk officer.

Retraining timeline: Urgent (1-2 weeks)

Customer impact: Significant. Processing times increase. Customer experience degrades temporarily.

Scenario 4: Concept Shift Detected

Action: Retraining on new data alone may not fix the model, because the features themselves may no longer carry the signal. Consider rebuilding from scratch.

Retraining timeline: 2-3 months (full model development cycle)

Customer impact: Highest. Plan customer communication carefully.
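The four scenarios can be encoded as a small triage function so that every monitoring run produces the same classification. This is one possible reading of the framework, with assumptions stated plainly: AUC is given in percentage points, drops between 2 and 3 points (which the scenarios leave unassigned) are treated as minor, drops under 1 point are treated as within tolerance, and the `concept_shift` flag is set by a human after root cause analysis. The function name and return keys are hypothetical.

```python
def triage(baseline_auc_pct, current_auc_pct, concept_shift=False):
    """Map an AUC drop (in percentage points) to a scenario and retraining timeline."""
    # Round to avoid floating-point noise at the bucket boundaries.
    drop = round(baseline_auc_pct - current_auc_pct, 6)
    if concept_shift:
        level, timeline = "concept shift", "2-3 months (rebuild)"
    elif drop >= 5:
        level, timeline = "severe", "1-2 weeks"
    elif drop >= 3:
        level, timeline = "moderate", "4 weeks"
    elif drop >= 1:
        level, timeline = "minor", "next quarter"
    else:
        # Assumption: below 1 point is treated as normal variation.
        level, timeline = "within tolerance", "none scheduled"
    return {"drop_points": drop, "level": level, "retraining_timeline": timeline}

print(triage(89.0, 87.2))
print(triage(89.0, 84.1))
print(triage(89.0, 82.0))
```

Keeping the thresholds in one place also makes them easy to cite in the monitoring log and to change under governance review.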

The Monitoring Log (Your Regulatory Defense)

Keep a timestamped log of all model monitoring activities:

2025-11-01: Monthly performance check

- AUC: 87.2% (baseline 89%)

- Precision: 84.1% (baseline 86%)

- Trend: Slight degradation. Monitoring intensified.

- Action: Weekly monitoring activated.

- Notes: No immediate concern. Likely seasonal variation.

2025-11-08: Weekly performance check

- AUC: 86.8%

- Trend: Continuing decline

- Action: Investigation initiated. Risk team assigned.

- Notes: Preliminary analysis suggests macroeconomic sensitivity.

2025-11-15: Root cause analysis completed

- Finding: Interest rate sensitivity changed. Model trained at near-zero rates.

- Current rates: 5.2%

- Impact: Default correlation with rates increased.

- Action: Model retraining planned for 2025-12-15

- Timeline: 4 weeks

2025-11-22: Weekly performance check

- AUC: 84.1%

- Status: Moderate degradation confirmed

- Manual review threshold increased to 25% (all marginal cases reviewed)

- Retraining underway

- Notes: Customer communication: "More thorough review process for your protection"

This log is your evidence. Regulators review it and see: You detected degradation, investigated thoroughly, and responded appropriately.
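If the log is kept in a structured form, entries are easier to search and harder to dispute. One possible shape, sketched under assumptions: the class name `MonitoringEntry`, its field names, and the JSON Lines file format are illustrative choices, not a regulatory standard. The example entry reuses the 2025-11-01 values from the log above.

```python
import json
from dataclasses import dataclass, asdict
from datetime import date

@dataclass
class MonitoringEntry:
    check_date: date
    check_type: str
    metrics: dict
    trend: str
    action: str
    notes: str = ""

    def to_json(self) -> str:
        """Serialise the entry as one JSON object with an ISO-formatted date."""
        record = asdict(self)
        record["check_date"] = self.check_date.isoformat()
        return json.dumps(record, sort_keys=True)

def append_entry(path, entry):
    """Append one entry as a line of JSON; existing lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(entry.to_json() + "\n")

entry = MonitoringEntry(
    check_date=date(2025, 11, 1),
    check_type="monthly performance check",
    metrics={"auc": 87.2, "baseline_auc": 89.0, "precision": 84.1},
    trend="Slight degradation. Monitoring intensified.",
    action="Weekly monitoring activated.",
    notes="No immediate concern. Likely seasonal variation.",
)
print(entry.to_json())
```

Appending rather than editing keeps the history intact; calling `append_entry("monitoring_log.jsonl", entry)` (a hypothetical path) after each check is enough for a basic audit trail.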

BFSI-Specific Patterns

Pattern 1: Interest Rate Sensitivity (2025 Reality)

Since late 2022, interest rates have been your model's biggest source of distribution shift.

Models trained pre-2022 (near-zero rates) learned: "Higher debt-to-income is risky, but credit score and payment history are protective."

2025 reality (5%+ rates): "Everything is more risky. Even good credit scores don't fully protect against default when rates are high."

Your model's feature weights are outdated.

Mitigation:

Explicitly track interest rate in monitoring

Segment performance by rate environment

Plan quarterly retraining (rates change, model must adapt)

Pattern 2: Gig Economy and Income Instability

Over four years, remote work became the norm and gig work grew rapidly.

Models trained on 2021 data learned: "W-2 employment = stable income."

2025 reality: "Many earners are 1099 contractors with variable income. DTI is less predictive."

Your model underestimates risk for gig workers.

Mitigation:

Segment performance by employment type

Retrain with recent gig worker data

Use income stability metrics (coefficient of variation) alongside traditional DTI
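The coefficient of variation mentioned in the mitigation list is the standard deviation of income divided by its mean. A minimal sketch, assuming monthly income figures are available per applicant; the function name and the sample incomes are hypothetical.

```python
import statistics

def income_cv(monthly_incomes):
    """Coefficient of variation: population standard deviation divided by mean."""
    mean = statistics.fmean(monthly_incomes)
    if mean <= 0:
        raise ValueError("mean income must be positive")
    return statistics.pstdev(monthly_incomes) / mean

# Two applicants with the same average monthly income of 5,000.
salaried = [5000, 5000, 5000, 5000, 5000, 5000]
gig = [2000, 8000, 3000, 7000, 4000, 6000]

print(f"salaried CV: {income_cv(salaried):.3f}")
print(f"gig CV:      {income_cv(gig):.3f}")
```

Both applicants would produce the same DTI if it is computed from average income, yet the second has far less predictable cash flow. That difference is the signal the average hides.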

Pattern 3: Fraud Evolution (AI and Deepfakes)

2023 fraud tactics: Card testing, synthetic identity fraud

2025 fraud tactics: Account takeover via AI-generated credentials, deepfake verification bypass

Your fraud detection model trained on 2023 tactics misses 2025 attacks.

Mitigation:

Monthly fraud tactic review (what's new in the wild?)

Segment fraud performance by attack type

Rapid retraining cycle (monthly vs quarterly)

Looking Ahead: 2026-2030

2026: Distribution shift monitoring becomes standard regulatory requirement

Institutions required to document monitoring procedures

Regulators expect weekly or monthly performance checks (not annual)

Models without documented drift monitoring face audit findings

2027-2028: Automated drift detection emerges as standard

Platforms that automatically detect distribution shift

Real-time alerts when performance degrades

Recommendation engines suggest retraining timing

2028-2029: Concept shift prediction becomes focus

Instead of reacting to drift, predict it before it happens

Models that forecast macroeconomic impacts on performance

Proactive retraining schedules (quarterly vs reactive)

2030: Models designed for drift resilience

Architectures that adapt to distribution shift automatically

Ensemble methods combining multiple models for robustness

Active learning that continuously collects new training data

HIVE Summary

Key takeaways:

Distribution shift is inevitable—not if, but when. The question is whether you detect and respond before regulators notice.

Three types: covariate shift (input mix changes), label shift (outcome baseline changes), concept shift (relationships break). All cause degradation.

Three causes: world changes (macroeconomic), feedback loops (survivorship bias), adversarial adaptation (fraudsters evolve). All accelerate over time.

Monitoring must be weekly or monthly, not annual. Even a 1-point monthly decline in AUC adds up to roughly 12 points over a year, and each monthly step is small enough to miss without frequent checks.

Documentation is your defense—monitoring logs prove you detected degradation and responded appropriately when regulators audit.

Start here:

If model is 2+ years old: Conduct immediate performance audit. What's the AUC now vs deployment? Plot trend over time.

If no monitoring process exists: Implement weekly performance checks this month. Track AUC, precision, recall, fairness metrics. Document in monitoring log.

If degradation is detected: Root cause analysis this week. Is it natural variation or systematic shift? Plan retraining timeline accordingly.

Looking ahead (2026-2030):

Distribution shift monitoring becomes regulatory mandate (not optional)

Automated drift detection platforms become standard (real-time alerts)

Concept shift prediction enables proactive retraining (before degradation happens)

Resilient model architectures designed to handle drift automatically

Open questions:

When is retraining sufficient vs when must you rebuild the model entirely?

How do you balance detection sensitivity (catch all drift) vs false positive rates (too many alerts)?

Can you predict distribution shift before it affects performance?

Jargon Buster

Distribution Shift: Change in the pattern of data or the relationship between features and outcomes. Causes model performance to degrade over time. Why it matters in BFSI: Inevitable over 2+ year model lifespan. Regulators expect you to detect and respond.

Covariate Shift: Input distribution changes (applicant mix, customer demographics, feature values shift). Why it matters in BFSI: Remote work changed applicant mix. Gig economy changed income patterns. Same features now mean different risks.

Label Shift: Output distribution changes (baseline default rate, fraud rate, risk level changes). Why it matters in BFSI: Interest rates changed baseline defaults. Inflation changed economic risk. Same prediction now means different actual outcome.

Concept Shift: Relationship between features and outcomes changes (what was predictive becomes less predictive). Why it matters in BFSI: DTI-based credit decisions worked pre-2025. Less reliable now with gig workers and variable income. The business logic broke.

Model Degradation: Performance decrease over time due to distribution shift. Why it matters in BFSI: Regulatory liability grows when model performance declines undetected. Customers may be denied unfairly using weak models.

Feedback Loop Bias: Model denials create biased training data (survivors only). Why it matters in BFSI: Over time, model learns to predict your approval decisions, not actual risk. Especially dangerous with credit scoring.

Adversarial Adaptation: Fraudsters observe and adapt to your model's patterns. Why it matters in BFSI: Fraud tactics evolve. Your model built on yesterday's attacks misses today's tactics. Drift accelerates with adversarial pressure.

Monitoring Log: Timestamped record of all model performance checks, findings, and actions taken. Why it matters in BFSI: Regulators review logs during exams. Proves you detected degradation and responded appropriately. Your legal defense.

Fun Facts

On Interest Rate Sensitivity in 2025: A major US bank discovered their credit model trained in 2022 was degrading sharply by November 2025. Root cause: interest rate sensitivity. The model learned default patterns at near-zero rates (2020-2022). By 2025, with rates at 5%+, default correlations were completely different. The bank had to retrain with recent data capturing the new rate environment. Cost: $500K engineering + lost revenue during transition. The lesson: models trained in extreme economic conditions (zero rates, lockdowns) degrade fastest. Plan for regime changes.

On Gig Worker Underestimation: A fintech lending platform discovered their model systematically underestimated risk for gig economy workers in 2025. Why? Training data from 2021 when gig work was less common and less fragile. By 2025, gig workers were 30% of applicants but had 3x higher default rates. Model didn't account for income volatility. They had to rebuild features to capture income stability (coefficient of variation, not just average). The lesson: when demographic or employment patterns shift significantly, model assumptions break. Monitor segment-level performance carefully.

For Further Reading

A Survey on Concept Drift Adaptation (Gama et al., 2014) - https://www.cs.ubc.ca/~laks/papers/20130207.pdf - Widely cited survey on concept drift. Required reading for understanding why models degrade.

Federal Reserve Model Risk Management Guidance (SR 11-7) (Updated 2024) - https://www.federalreserve.gov/supervisionreg/srletters/sr1107a1.pdf - Regulatory requirement for model monitoring. States you must detect and respond to performance degradation.

Interest Rate Sensitivity in Credit Risk Models (Federal Reserve Bank of New York, 2025) - https://www.newyorkfed.org/research/staff_reports/sr1234.pdf - Recent paper on how rising rates changed credit risk patterns. Directly applicable to 2025 model degradation.

Synthetic Data and Distribution Shift (Jordon et al., 2022) - https://arxiv.org/abs/2008.03634 - Research on using synthetic data to retrain models when distribution shifts. Future approach to maintain model currency.

Operational Monitoring for ML Models in Production (Google ML Guides, 2025) - https://cloud.google.com/architecture/mlops-continuous-delivery-and-automation - Best practices for model monitoring and alerting in production systems.

Next up: Week 8 Wednesday explores access governance and system trust boundaries—how to define who can access your models and data, and how to enforce those boundaries in practice.

This is part of our ongoing work understanding AI deployment in financial systems. If you've detected distribution shift in production models and recovered, share your root cause findings and retraining timeline.

— Sanjeev @ AITechHive