Quick Recap: Evidently AI monitors your production models, detecting when prediction patterns or data distributions shift. Instead of manual weekly checks of AUC, you run Evidently on a schedule to compare current data and predictions against a baseline, flagging when drift exceeds thresholds. It answers the critical question: "Is my model's behavior changing?" Setup takes hours; once scheduled, monitoring runs 24/7. When drift is detected, automated alerts trigger review processes before performance degrades into regulatory findings.

It's Tuesday morning, December 2025. Your fraud detection model has been running for eighteen months. It's solid—94% precision, low false positive rate, customers trust it.

Your team isn't monitoring performance actively. The model works. You check metrics quarterly at best.

Then Evidently AI (which someone integrated as a side project months ago) sends an alert: "Prediction distribution shift detected. 35 percent more fraud flags in the past 7 days compared to 30-day baseline."

Your initial reaction: "The model is working. False alert."

You investigate anyway. Pull the last week of fraud cases. You see it immediately: the model is flagging patterns it never flagged before. Not because it's smarter. Because the fraud tactics changed.

Fraudsters discovered your model approves certain transaction types easily. They're exploiting that gap. Your model isn't detecting the new attack pattern.

Without Evidently's alert, you would have discovered this during next quarter's review—three months from now. By then, fraud loss would be significant. Customers would have experienced fraud. Regulators would ask why monitoring didn't catch the shift.

With the alert, you catch it in week one. You investigate, identify the new fraud tactic, and implement mitigations before damage scales.

This is Evidently AI in production: continuous monitoring detecting drift before it becomes a problem.

Why This Tool Pattern

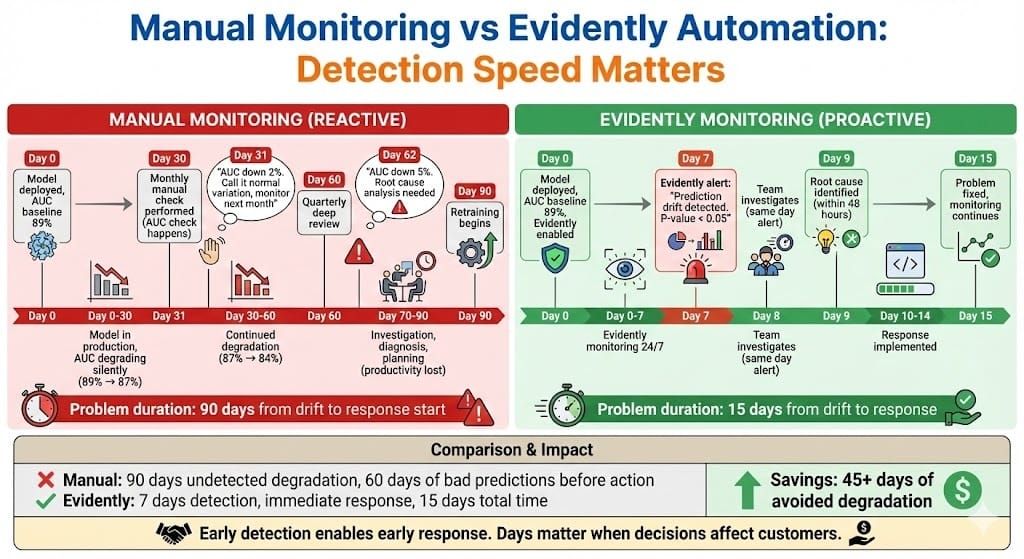

Production models don't fail all at once. They degrade gradually. Your job is detecting the degradation before it matters.

Why this matters in BFSI: Regulators expect continuous monitoring, not quarterly reviews. A drift that happens over eight weeks is invisible in a three-month cycle. Evidently catches it in week two. Early detection means faster response, smaller impact.

The gap most teams face: Manual monitoring is expensive. Checking AUC, precision, recall, fairness metrics weekly takes hours. Most teams check quarterly or annually. By then, degradation is significant.

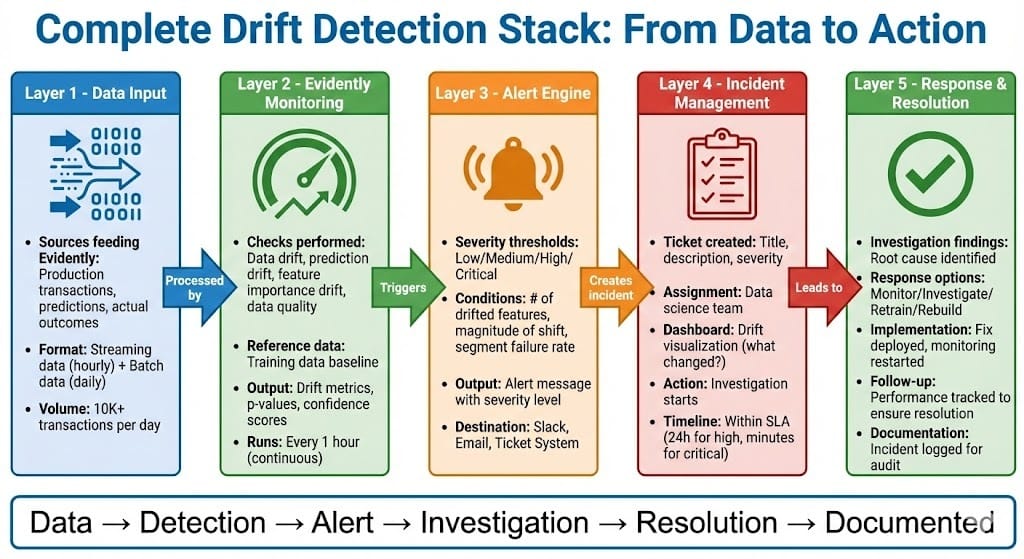

Production teams need:

Automated monitoring that runs 24/7

Alerts when drift is detected (not weekly reviews)

Visual dashboards showing what changed

Root cause hints (which features drifted, which segments)

Integration with incident response (alert → ticket → team review)

Evidently AI provides all of this. It's specialized for production model monitoring in a way generic dashboards aren't.

The trade-off: Evidently requires setup time (2-4 hours initially), and false positives are possible (sometimes drift is just natural variation). But the benefit, early detection before problems scale, far outweighs the cost.

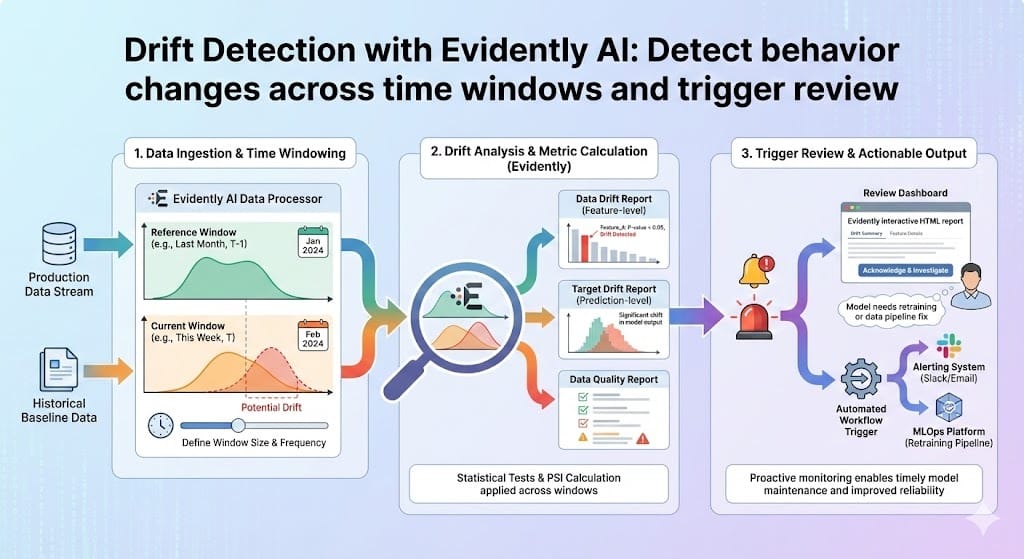

How This Works: The Monitoring Flow

Production teams use Evidently as their early warning system:

Traditional Monitoring (Reactive):

```text
Model in production
        ↓
[Quarterly manual check]
        ↓
"AUC dropped 5%"
        ↓
Investigation (two weeks later)
        ↓
Root cause identified
        ↓
Retraining planned
        ↓
Problem already scaled
```

Evidently Monitoring (Proactive):

```text
Model in production
        ↓
Evidently runs continuous checks (hourly/daily)
        ↓
[Drift detected: threshold exceeded]
        ↓
Alert sent immediately
        ↓
Team investigates within hours
        ↓
Root cause identified quickly
        ↓
Response planned before problem scales
```

The difference: weeks of undetected degradation vs hours.

Building Drift Detection with Evidently AI

Step 1: Install Evidently and Define Baseline

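Evidently compares your model's current data and outputs against a baseline: a reference snapshot of historical data. The examples in this section use Evidently's legacy `Report` API (`evidently.report`, `evidently.metric_preset`); newer releases reorganized these imports, so check the documentation for your installed version.

```python
# Install: pip install evidently
# (assumes a release that still ships the legacy Report API, e.g. pre-0.7)
import joblib
import pandas as pd
from evidently.report import Report
from evidently.metric_preset import DataDriftPreset

# Load your model and historical data
# (joblib.load also reads plain pickle files)
model = joblib.load('credit_model_v2.3.pkl')
historical_data = pd.read_csv('training_data.csv')

# Evidently does not "learn" a baseline: later data is compared
# directly against this reference snapshot
baseline_features = historical_data[['age', 'income', 'dti', 'credit_score']]
baseline_target = historical_data['default']

print("Baseline established from historical data")
```

Why this matters in BFSI: The baseline represents normal model behavior. When current behavior deviates from it, Evidently flags the change. The baseline is your reference point.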
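The alert in the opening story ("35 percent more fraud flags in the past 7 days compared to 30-day baseline") is, at its core, a recent window compared against a baseline window. A minimal sketch of that comparison in plain Python, assuming you log daily counts of scored and flagged transactions; the function name and the `(day, scored, flagged)` layout are hypothetical, not part of Evidently.

```python
from datetime import date, timedelta

def flag_rate_change(daily_counts, today, recent_days=7, baseline_days=30):
    """
    Relative change in the model's flag rate: the last `recent_days` days
    vs the `baseline_days` days immediately before them.
    daily_counts: list of (day, transactions_scored, transactions_flagged).
    """
    recent_start = today - timedelta(days=recent_days)
    baseline_start = recent_start - timedelta(days=baseline_days)

    def rate(start, end):
        # Pool counts over the window [start, end)
        scored = sum(n for d, n, _ in daily_counts if start <= d < end)
        flagged = sum(f for d, _, f in daily_counts if start <= d < end)
        return flagged / scored if scored else None

    baseline_rate = rate(baseline_start, recent_start)
    recent_rate = rate(recent_start, today)
    if not baseline_rate or recent_rate is None:
        return None  # not enough data to compare
    return (recent_rate - baseline_rate) / baseline_rate

# Hypothetical history: 2.0% flagged for 30 days, then 2.7% for the last 7 days
today = date(2025, 12, 2)
history = [(today - timedelta(days=i), 1000, 27 if i <= 7 else 20) for i in range(1, 38)]
change = flag_rate_change(history, today)
print(f"Flag rate change vs 30-day baseline: {change:+.0%}")  # prints +35%
```

A raw rate comparison like this says nothing about whether the change is statistically meaningful; that is the gap Evidently's per-column tests fill.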

Step 2: Monitor Data Drift

Data drift detects when input features change distribution.

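```python
from evidently.report import Report
from evidently.metric_preset import DataDriftPreset

def monitor_data_drift(current_data, baseline_data, columns=None):
    """
    Compare current data to baseline.
    Detect which feature distributions shifted.
    Uses the legacy Evidently Report API.
    """
    # Compare only columns present in both frames (this drops, e.g., a label
    # column that exists in historical data but not yet in production data)
    if columns is None:
        columns = [c for c in baseline_data.columns if c in current_data.columns]
    current_data = current_data[columns]
    baseline_data = baseline_data[columns]

    # Create and run the drift report
    drift_report = Report(metrics=[DataDriftPreset()])
    drift_report.run(reference_data=baseline_data, current_data=current_data)

    # as_dict() returns a *list* of metric results; the per-column
    # results live under the DataDriftTable metric
    drift_results = drift_report.as_dict()

    detected_drifts = []
    for metric in drift_results['metrics']:
        if metric['metric'] != 'DataDriftTable':
            continue
        for feature, col in metric['result']['drift_by_columns'].items():
            if col['drift_detected']:
                detected_drifts.append({
                    'feature': feature,
                    'drift_method': col['stattest_name'],  # e.g. K-S p_value, chi-square p_value
                    'drift_score': col['drift_score'],     # p-value or distance, depending on the test
                    'threshold': col['stattest_threshold'],
                })
    return detected_drifts

# Monitor current week vs baseline
# (load_recent_transactions is your own data-loading helper)
current_week = load_recent_transactions(days=7)
drifts = monitor_data_drift(current_week, historical_data,
                            columns=['age', 'income', 'dti', 'credit_score'])

for drift in drifts:
    print(f"Drift detected in {drift['feature']}: "
          f"{drift['drift_method']} = {drift['drift_score']:.3f}")
```

What it detects: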

Age distribution shifted (customers getting younger or older)

Income distribution shifted (applicants earning more/less)

Debt-to-income shifted (loan patterns changing)

Credit score shifted (new segment of applicants)

This catches covariate shift (input distribution changing).
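Under the hood, each per-column check is a two-sample statistical test between baseline and current values. Evidently chooses a test by column type and sample size (for smaller numeric samples this is typically Kolmogorov-Smirnov; check the docs for your version's defaults). The sketch below computes the KS statistic, the largest gap between the two empirical CDFs, in plain Python to show what is being measured. It is an illustration, not Evidently's implementation, and `ks_statistic` and the DTI values are hypothetical.

```python
from bisect import bisect_right

def ks_statistic(baseline, current):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a, b = sorted(baseline), sorted(current)
    n, m = len(a), len(b)
    d = 0.0
    # The CDFs only change at observed values, so checking those is enough
    for x in a + b:
        cdf_a = bisect_right(a, x) / n  # share of baseline values <= x
        cdf_b = bisect_right(b, x) / m  # share of current values <= x
        d = max(d, abs(cdf_a - cdf_b))
    return d

# Hypothetical debt-to-income ratios: baseline vs a week where DTI crept up
baseline_dti = [0.20, 0.25, 0.30, 0.32, 0.35, 0.40]
current_dti = [0.30, 0.38, 0.42, 0.45, 0.50, 0.55]
print(f"KS statistic: {ks_statistic(baseline_dti, current_dti):.3f}")
```

The statistic runs from 0 (identical distributions) to 1 (no overlap). A test then turns it into a p-value, and the column is flagged when that p-value falls below the configured threshold.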

Step 3: Monitor Prediction Drift

Prediction drift detects when your model's outputs change.

```python
import pandas as pd
from evidently.report import Report
from evidently.metrics import ColumnDriftMetric

FEATURES = ['age', 'income', 'dti', 'credit_score']

def monitor_prediction_drift(model, current_data, baseline_data, features=FEATURES):
    """
    Compare model predictions on current data vs baseline.
    Detect if the prediction (score) distribution shifted.
    Assumes a scikit-learn-style classifier with predict_proba
    and the legacy Evidently Report API.
    """
    # Score both windows with the same model: probability of the positive class
    baseline_scores = model.predict_proba(baseline_data[features])[:, 1]
    current_scores = model.predict_proba(current_data[features])[:, 1]

    # Evidently compares DataFrames, so wrap the scores in a single column
    reference = pd.DataFrame({'prediction': baseline_scores})
    current = pd.DataFrame({'prediction': current_scores})

    # Drift test on the prediction column only. (RegressionPreset reports
    # regression error against actuals, which a fraud or default classifier
    # does not have in real time.)
    prediction_report = Report(metrics=[ColumnDriftMetric(column_name='prediction')])
    prediction_report.run(reference_data=reference, current_data=current)
    result = prediction_report.as_dict()['metrics'][0]['result']

    return {
        'baseline_mean_prediction': float(baseline_scores.mean()),
        'current_mean_prediction': float(current_scores.mean()),
        'prediction_drift_detected': result['drift_detected'],
        'drift_method': result['stattest_name'],
        'drift_score': result['drift_score'],
    }

# Monitor predictions
pred_drift = monitor_prediction_drift(model, current_week, historical_data)

if pred_drift['prediction_drift_detected']:
    print(f"Prediction drift: mean shifted from {pred_drift['baseline_mean_prediction']:.3f} "
          f"to {pred_drift['current_mean_prediction']:.3f}")
```

What it detects:

Model predicting higher fraud rates (or lower)

Model predicting higher default risk (or lower)

Prediction distribution fundamentally changed

Mean prediction shifted significantly

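Prediction drift is an early proxy: it shows that inputs have shifted in ways that change the model's outputs, and it can hint at label shift or concept shift. Confirming concept shift requires ground-truth outcomes.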
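Many BFSI model-risk teams also summarize score drift with the Population Stability Index (PSI), which Evidently offers as one of its selectable drift tests. A minimal plain-Python sketch, assuming scores in [0, 1] and equal-width bins (quantile bins cut from the baseline are also common); the `psi` function, the `eps` floor, and the simulated Beta-distributed scores are illustrative assumptions.

```python
import math
import random

def psi(baseline_scores, current_scores, n_bins=10, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1]."""
    def bin_shares(scores):
        counts = [0] * n_bins
        for s in scores:
            # Equal-width bins on [0, 1]; a score of exactly 1.0 goes in the last bin
            counts[min(int(s * n_bins), n_bins - 1)] += 1
        # Floor each share at eps so empty bins don't produce log(0)
        return [max(c / len(scores), eps) for c in counts]

    base, cur = bin_shares(baseline_scores), bin_shares(current_scores)
    # Each term (cur - base) * ln(cur / base) is non-negative
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))

# Hypothetical fraud scores: the current window skews slightly higher
rng = random.Random(42)
baseline = [rng.betavariate(2, 8) for _ in range(5000)]
current = [rng.betavariate(2.5, 7) for _ in range(5000)]
print(f"PSI = {psi(baseline, current):.3f}")
# Common rule of thumb: < 0.1 stable, 0.1-0.25 moderate shift, > 0.25 major shift
```

The 0.1 / 0.25 bands are an industry convention, not a regulatory standard. PSI is also sensitive to sparsely populated bins, so use a few hundred scores per window or more.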

Step 4: Automated Alerting and Integration

When drift is detected, trigger alerts and create tickets for human review.

```python
import json
import logging
import os
import time
from datetime import datetime

import schedule
from slack_sdk.webhook import WebhookClient

def drift_detection_with_alerts(model, current_data, baseline_data,
                                slack_webhook=None, ticket_system=None):
    """
    Monitor data and prediction drift. If either is detected, alert the team.
    """
    data_drifts = monitor_data_drift(current_data, baseline_data)
    pred_drift = monitor_prediction_drift(model, current_data, baseline_data)

    # Determine severity (later checks override earlier ones)
    severity = 'low'
    if len(data_drifts) >= 3:
        severity = 'medium'
    if pred_drift['prediction_drift_detected']:
        severity = 'high'
    if len(data_drifts) >= 5 and pred_drift['prediction_drift_detected']:
        severity = 'critical'

    # No drift: nothing to report
    if not (data_drifts or pred_drift['prediction_drift_detected']):
        return None

    drifted_features = [d['feature'] for d in data_drifts]
    alert_message = {
        'timestamp': datetime.now().isoformat(),
        'severity': severity,
        'data_drifts_detected': len(data_drifts),
        'drifted_features': drifted_features,
        'prediction_drift': pred_drift['prediction_drift_detected'],
        'investigation_needed': True,
    }

    # Send Slack alert. slack_webhook is an incoming-webhook URL, not a bot
    # token, so use WebhookClient; the webhook itself determines the channel.
    if slack_webhook:
        WebhookClient(slack_webhook).send(
            text=f"🚨 {severity.upper()} Drift Alert",
            blocks=[{
                "type": "section",
                "text": {
                    "type": "mrkdwn",
                    "text": f"*Drift Detected ({severity})*\n"
                            f"Data drifts: {len(data_drifts)}\n"
                            f"Prediction drift: {pred_drift['prediction_drift_detected']}\n"
                            f"Features: {', '.join(drifted_features) or 'none'}",
                },
            }],
        )

    # Create ticket in incident system
    # (create_incident stands in for your incident tool's API)
    if ticket_system:
        ticket = ticket_system.create_incident(
            title=f"{severity.upper()} Model Drift Detected",
            description=json.dumps(alert_message, indent=2),
            severity_level=severity,
        )
        logging.info(f"Ticket created: {ticket['id']}")

    return alert_message

def hourly_check():
    # Load fresh data on every run. Passing load_recent_data(hours=1) directly
    # to schedule.do() would evaluate it once and reuse that hour forever.
    # load_recent_data and incident_manager are your own helpers.
    drift_detection_with_alerts(
        model=model,
        current_data=load_recent_data(hours=1),
        baseline_data=historical_data,
        slack_webhook=os.getenv('SLACK_WEBHOOK'),
        ticket_system=incident_manager,
    )

# Run continuous monitoring
schedule.every(1).hours.do(hourly_check)
while True:
    schedule.run_pending()
    time.sleep(60)
```

This is your 24/7 monitoring loop. When drift is detected, your team is notified at the next scheduled check, within the hour on an hourly schedule.
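An hourly loop will re-alert every hour for as long as a drift persists, which feeds the alert fatigue described under Common Mistakes. One common mitigation is a cooldown: suppress repeat alerts at the same or lower severity for a fixed window, but let escalations through immediately. A minimal sketch; the `AlertCooldown` class, the six-hour window, the severity ranking, and the model id are illustrative assumptions.

```python
from datetime import datetime, timedelta

SEVERITY_RANK = {'none': 0, 'low': 1, 'medium': 2, 'high': 3, 'critical': 4}

class AlertCooldown:
    """Suppress repeat alerts unless severity escalates or the cooldown expires."""

    def __init__(self, window=timedelta(hours=6)):
        self.window = window
        self.last_sent = {}  # model_id -> (timestamp, severity) of last alert sent

    def should_send(self, model_id, severity, now):
        if SEVERITY_RANK[severity] == 0:
            return False  # no drift, nothing to send
        last = self.last_sent.get(model_id)
        if last is not None:
            last_time, last_severity = last
            escalated = SEVERITY_RANK[severity] > SEVERITY_RANK[last_severity]
            if not escalated and now - last_time < self.window:
                return False  # same drift, already reported recently
        self.last_sent[model_id] = (now, severity)
        return True

cooldown = AlertCooldown()
t0 = datetime(2025, 12, 2, 9, 0)
print(cooldown.should_send('fraud_model', 'medium', t0))                       # True
print(cooldown.should_send('fraud_model', 'medium', t0 + timedelta(hours=1)))  # False
print(cooldown.should_send('fraud_model', 'high', t0 + timedelta(hours=2)))    # True
```

In the monitoring loop, you would call `should_send` before posting to Slack or opening a ticket, and still log every check so the audit trail stays complete.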

BFSI-Specific Patterns

Pattern 1: Segment-Level Monitoring

Overall metrics might hide problems in specific segments.

```python
def monitor_drift_by_segment(model, current_data, baseline_data,
                             segment_column='customer_segment'):
    """
    Monitor drift separately for each customer segment.
    Catch segment-specific problems hidden in aggregate metrics.
    Note: very small segments give noisy test results.
    """
    segment_results = {}

    for segment in current_data[segment_column].unique():
        current_segment = current_data[current_data[segment_column] == segment]
        baseline_segment = baseline_data[baseline_data[segment_column] == segment]

        # A segment with no baseline history can't be compared; it is new
        # behavior by definition, so flag it for review
        if baseline_segment.empty:
            segment_results[segment] = {'data_drifts': None,
                                        'prediction_drift': None,
                                        'needs_review': True}
            continue

        # Monitor this segment
        data_drift = monitor_data_drift(current_segment, baseline_segment)
        pred_drift = monitor_prediction_drift(model, current_segment, baseline_segment)

        segment_results[segment] = {
            'data_drifts': len(data_drift),
            'prediction_drift': pred_drift['prediction_drift_detected'],
            'needs_review': len(data_drift) > 0 or pred_drift['prediction_drift_detected'],
        }

    return segment_results

# Monitor each customer segment separately
segment_drifts = monitor_drift_by_segment(model, current_week, historical_data)

# Check which segments have problems
for segment, results in segment_drifts.items():
    if results['needs_review']:
        print(f"Segment {segment}: Drift detected, needs investigation")
```

Why this matters in BFSI: Aggregate metrics hide problems. Your fraud detection might perform fine overall but fail for a specific customer type. Segment monitoring catches this.
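The masking effect is easy to see with a little arithmetic. The sketch below uses made-up counts, loosely echoing the bank example under Fun Facts, to show a model that catches most fraud overall while missing most of it in one small segment. Segment names and numbers are hypothetical.

```python
# Hypothetical confirmed-fraud outcomes for one month, by customer segment:
# (frauds caught by the model, frauds missed)
outcomes = {
    'age_26_plus': (900, 60),
    'age_18_25': (40, 60),
}

def recall(caught, missed):
    """Share of actual fraud the model caught."""
    return caught / (caught + missed)

total_caught = sum(c for c, _ in outcomes.values())
total_missed = sum(m for _, m in outcomes.values())
print(f"Overall recall: {recall(total_caught, total_missed):.1%}")

for segment, (caught, missed) in outcomes.items():
    print(f"{segment}: recall {recall(caught, missed):.1%}, "
          f"false negative rate {missed / (caught + missed):.1%}")
```

Overall recall stays near 89% because the large segment dominates the totals, while the 18-25 segment misses 60% of its fraud. Only the per-segment breakdown exposes it.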

Pattern 2: Feature Importance Drift

Monitor whether the features driving your model's predictions have changed. A large shift is a warning sign of concept shift worth investigating.

```python
import numpy as np

# Replace with your model's full feature list
FEATURES = ['age', 'income', 'dti', 'credit_score']

def monitor_feature_importance_drift(model, current_data, baseline_data,
                                     features=FEATURES, top_k=5, min_overlap=4):
    """
    Compare which features drive predictions: baseline vs current.
    If the top features changed, investigate for concept shift.
    """
    # Use the same attribution method on both windows. Comparing training-time
    # feature_importances_ against SHAP values on new data mixes two different
    # measures. calculate_shap_importance is your own helper returning one
    # importance value per feature (e.g. mean |SHAP|).
    baseline_importance = np.asarray(calculate_shap_importance(model, baseline_data[features]))
    current_importance = np.asarray(calculate_shap_importance(model, current_data[features]))

    # Feature names ranked by importance, highest first
    baseline_top = [features[i] for i in np.argsort(baseline_importance)[::-1][:top_k]]
    current_top = [features[i] for i in np.argsort(current_importance)[::-1][:top_k]]

    overlap = len(set(baseline_top) & set(current_top))

    # Fewer than min_overlap of the top_k features match
    if overlap < min_overlap:
        return {
            'concept_shift_detected': True,
            'baseline_top': baseline_top,
            'current_top': current_top,
            'overlap': overlap,
        }

    return {'concept_shift_detected': False}

# Monitor concept shift
concept_shift = monitor_feature_importance_drift(model, current_week, historical_data)

if concept_shift['concept_shift_detected']:
    print("WARNING: Feature importance changed. Concept shift possible; confirm with outcome data.")
```

Why this matters in BFSI: When the top features change, the model is leaning on different signals than it did at validation time. If outcome data confirms concept shift, the model often needs rebuilding, not just retraining.

Pattern 3: Threshold-Based Alerting with Severity Levels

Different drift levels require different responses.

```python
DRIFT_THRESHOLDS = {
    'data_drifts_medium': 3,               # medium if 3+ features drift
    'data_drifts_high': 5,                 # high if 5+ features drift
    'prediction_shift_medium': 0.03,       # medium if mean score moves > 0.03 (absolute)
    'prediction_shift_high': 0.05,         # high if mean score moves > 0.05 (absolute)
    'segment_failure_rate_high': 0.2,      # high if > 20% of segments have drift
    'segment_failure_rate_critical': 0.4,  # critical if > 40% of segments have drift
}

def calculate_alert_severity(data_drifts, pred_drift, segment_results,
                             thresholds=DRIFT_THRESHOLDS):
    """
    Determine alert severity based on drift magnitude and scope.
    Later (more severe) levels override earlier ones.
    """
    t = thresholds
    n_drifts = len(data_drifts)
    pred_drifted = pred_drift['prediction_drift_detected']
    mean_shift = abs(pred_drift['current_mean_prediction'] - pred_drift['baseline_mean_prediction'])

    # Share of segments flagged for review (guard against no segments)
    flagged = sum(1 for s in segment_results.values() if s['needs_review'])
    segment_failure_rate = flagged / len(segment_results) if segment_results else 0.0

    severity = 'none'

    # Level 1: Low severity
    if n_drifts >= 1:
        severity = 'low'

    # Level 2: Medium severity
    if n_drifts >= t['data_drifts_medium'] or \
            (pred_drifted and mean_shift > t['prediction_shift_medium']):
        severity = 'medium'

    # Level 3: High severity
    if n_drifts >= t['data_drifts_high'] or \
            segment_failure_rate > t['segment_failure_rate_high'] or \
            (pred_drifted and mean_shift > t['prediction_shift_high']):
        severity = 'high'

    # Level 4: Critical severity
    if segment_failure_rate > t['segment_failure_rate_critical'] or \
            (n_drifts >= t['data_drifts_high'] and pred_drifted):
        severity = 'critical'

    return severity

# Map severity to response
RESPONSE_BY_SEVERITY = {
    'none': 'Continue monitoring',
    'low': 'Add to weekly review, monitor next 7 days',
    'medium': 'Create ticket, investigate within 24 hours, consider manual review increase',
    'high': 'Escalate to risk officer, increase manual review threshold, plan retraining',
    'critical': 'Immediately reduce model reliance, activate emergency manual review, halt new deployments',
}
```

Common Mistakes

Mistake 1: Alert Fatigue from Oversensitive Thresholds

❌ Alerting on every minor variation (causes the team to ignore alerts)
✅ Calibrate thresholds to real problems (alert only on significant drift)

Too many false positives = alerts ignored.
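Part of the noise is plain arithmetic. If every feature is tested at a p-value threshold of 0.05, each test flags drift about 5% of the time even when nothing has changed (assuming well-calibrated, roughly independent tests). The sketch below estimates the expected false drift flags per week and shows a Bonferroni-style stricter per-feature threshold, one simple correction among several. The feature count and schedules are illustrative assumptions.

```python
def expected_false_flags(n_features, alpha, runs_per_week):
    """Expected false drift flags per week when nothing has actually drifted."""
    return n_features * alpha * runs_per_week

def bonferroni_alpha(alpha, n_features):
    """Per-feature threshold keeping the chance of any false flag per run <= alpha."""
    return alpha / n_features

n_features, alpha = 40, 0.05
print(expected_false_flags(n_features, alpha, runs_per_week=7))    # daily checks
print(expected_false_flags(n_features, alpha, runs_per_week=168))  # hourly checks
print(bonferroni_alpha(alpha, n_features))
```

With 40 features, naive daily checks produce about 14 false flags a week, and hourly checks produce about 336. Bonferroni is conservative and can miss small real shifts, so treat it as a starting point; Evidently lets you set the test and threshold per column (check the parameter names for your version).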

Mistake 2: Not Segmenting by Customer Type

❌ Monitoring aggregate metrics only
✅ Monitor segments separately (fraud risk differs by customer type)

Segment-specific problems hide in aggregate metrics.

Mistake 3: Ignoring Feature Importance Changes

❌ Assuming the model's decision drivers stay the same
✅ Monitor feature importance drift separately

When top features change, concept shift may be underway. Confirm with outcome data; the model may need rebuilding.

Mistake 4: Alerting Without Dashboards

❌ Getting drift alerts but no visual way to investigate
✅ Evidently generates dashboards showing what drifted, and where

Team needs to understand the drift, not just be alerted to it.

Mistake 5: No Response Playbook

❌ Detecting drift but no clear next steps
✅ Document severity levels and corresponding actions

Alert without response is useless. Define actions upfront.

Looking Ahead: 2026-2030

2026: Drift detection becomes standard infrastructure

Most institutions deploy automated monitoring (Evidently, Datadog, custom)

Regulatory exams expect continuous monitoring logs

Institutions without automation face findings

2027-2028: Predictive drift detection emerges

Models predict drift before it impacts performance

Automated retraining triggered when drift is predicted

Humans shift from reactive to proactive role

2028-2029: Integration with ML platforms

Drift detection built into training and serving platforms

Automated response (trigger retraining, adjust thresholds, alert team)

Monitoring becomes infrastructure, not tooling

2030: Drift handling becomes a feature of model architecture

Models designed to handle drift automatically

Continuous learning from production data (carefully)

Humans review, models adapt

HIVE Summary

Key takeaways:

Evidently AI detects data drift (input distributions shift) and prediction drift (model outputs change); a feature importance check adds feature importance drift (the signals driving predictions change)

Continuous monitoring catches degradation in days, not quarters. Early detection enables fast response before problems scale.

Segment-level monitoring reveals segment-specific problems hidden in aggregate metrics. Different customer types drift differently.

Alert severity must map to response actions. Critical alerts trigger immediate action (reduce model reliance). Low alerts go into the weekly review (no immediate action).

Feature importance drift can signal concept shift; sometimes the model needs rebuilding, not retraining. Monitor it separately.

Start here:

If no monitoring exists: Install Evidently this week. Set up baseline from training data. Start logging drift metrics daily.

If manual monitoring exists: Automate with Evidently. Convert weekly manual checks to daily automated checks. Free up team time for investigation.

If monitoring exists but no alerts: Add alerting. Define severity levels and response actions. Integrate with ticket system so drift detection creates incidents.

Looking ahead (2026-2030):

Drift detection becomes regulatory expectation (not optional)

Predictive drift detection enables proactive response (fix before impact)

Automated retraining triggered by drift signals (less manual intervention)

Drift handling becomes part of model architecture (not bolted-on)

Open questions:

How to distinguish real drift from natural variation in small samples?

When is monitoring too frequent (generating noise) vs too infrequent (missing problems)?

Can you predict concept shift before it happens?

Jargon Buster

Data Drift: Input feature distributions change over time. Customers become younger, earn more, have different employment patterns. Why it matters in BFSI: When customer mix changes, model assumptions break. Fraud patterns shift with demographic changes.

Prediction Drift: Model output distribution changes. The model predicts more (or less) fraud, or more (or fewer) defaults. Why it matters in BFSI: If model predictions drift while actual outcomes don't, calibration is broken. The model is over- or under-confident.

Concept Drift: The relationship between features and outcomes changes. Income predictiveness changes with employment patterns. DTI means different things for gig workers vs W-2 employees. Why it matters in BFSI: The model may need rebuilding, not retraining, because the relationships it learned no longer hold.

Evidently AI: Open-source ML monitoring platform detecting data drift, prediction drift, and data quality issues in production. Why it matters in BFSI: Automates monitoring tasks, runs 24/7, generates alerts and dashboards.

Baseline: Historical reference data used to detect drift. Represents "normal" model behavior. Why it matters in BFSI: Without baseline, you can't know if current behavior is different.

Segment Monitoring: Monitoring model performance separately for each customer segment (by age, geography, income). Why it matters in BFSI: Aggregate metrics hide segment-specific problems. Fraud model might fail for one customer type while succeeding overall.

Feature Importance Drift: Changes in which features contribute most to predictions. Why it matters in BFSI: Can signal concept shift. When the top features change, the model is leaning on different signals than it was validated on. Sometimes retraining doesn't fix it, and a rebuild is required.

Severity Levels: Classification of drift alerts by impact (low/medium/high/critical), mapped to response actions. Why it matters in BFSI: Not all drift requires the same response. Minor drift needs monitoring. Critical drift needs immediate action.

Fun Facts

On Alert Calibration in Production: A fintech deployed Evidently with overly sensitive thresholds, alerting on minor variations. They got 40-50 alerts per week. Within three weeks, the team stopped reading alerts (alert fatigue). They recalibrated thresholds, reducing alerts to 2-3 significant ones per week. Same signal, better signal-to-noise ratio. Lesson: Calibrate thresholds to real problems, not bare statistical significance. Threshold adjustment is the fastest way to cut false positives.

On Hidden Segment Drift: A bank's fraud model looked fine in aggregate metrics (94% precision). But when they added segment monitoring, they discovered fraud detection failed catastrophically for customers aged 18-25 (60% false negative rate). That segment had grown from 5% to 18% of new customers. Aggregate metrics masked the segment-specific failure. They increased monitoring for younger customers and implemented a separate model for that segment. Lesson: Always monitor by segment. One segment's problem can hide in aggregate metrics.


Next up: Week 8 Sunday explores access governance and system trust boundaries—defining who can access your models and data, and how to enforce those boundaries technically and operationally.

This is part of our ongoing work understanding AI deployment in financial systems. If you've deployed Evidently AI and tuned alert thresholds to avoid false positives, share your threshold settings and what real drift looks like in your domain.

— Sanjeev @ AITechHive