Quick Recap: Generic embeddings (BERT, word2vec) treat all text the same way, with no notion of an organization's context. A bank's internal embeddings should understand that "customer defaulted" is different from "customer applied." BGE (BAAI General Embedding, first released in 2023) and Instructor embeddings (introduced in late 2022) became the practical breakthrough for enterprise search in 2025-2026: both can be fine-tuned on domain data, and Instructor also lets you specify instructions ("encode this as a loan document" vs "encode this as a compliance note"). This enables organizations to build embedding pipelines that understand their specific context, terminology, and regulatory requirements, not just generic similarity.

It's 10 AM on a Monday. A compliance officer at a mid-sized bank is searching for "customer denials due to income insufficiency."

The bank deployed a generic embedding system last year. The search returns 150 documents. The officer reviews the top 20 and finds only 3 actual denials due to income. The rest are noise: loan approvals noting "sufficient income documented," income discussions in unrelated contexts, and so on.

The compliance officer is frustrated. "This search is useless. I need actual denials, not everything tangentially related."

Enter BGE + Instructor embeddings (deployed in 2026): Same search now returns results ranked by relevance to "specific denial reason: income insufficiency." Top 20 results are 18 actual denials. The system understood: in banking context, "income insufficiency" has a specific regulatory meaning tied to lending decisions.

How? The bank fine-tuned its embeddings on its own financial and lending documents, and Instructor embeddings accept instructions: "This is a lending decision document. Encode it as such." The combination: embeddings that understand both domain AND context.

That is the difference between "I spent 2 hours finding 3 results" (generic) and "I spent 15 minutes finding 18 results" (domain-optimized), and it is why enterprise embedding pipelines matter.

Why This Tool/Pattern Matters

Organizations have accumulated years of internal knowledge: policies, decisions, precedents, regulatory guidance. This knowledge is locked in documents, buried in databases, scattered across systems.

Traditional enterprise search: Keyword matching. Works if you know the exact terms. Fails if you're looking for meaning ("What's our policy on income verification?") rather than keywords.

Embedding-based search: Semantic matching. Works on meaning, catches synonyms, finds related concepts. But requires embeddings that understand your organization's specific context.

The challenge: Generic embeddings don't understand your organization. They understand English, but not "what income insufficiency means in our lending workflows."

Solution: Enterprise embedding pipelines.

Cost: $50-100K setup + $10-20K/month maintenance

Benefit: Cut compliance search time 60-70%, improve decision-making with better precedent retrieval, reduce regulatory risk with more complete document discovery

ROI: Single regulatory fine avoided = 5+ years of operation paid for

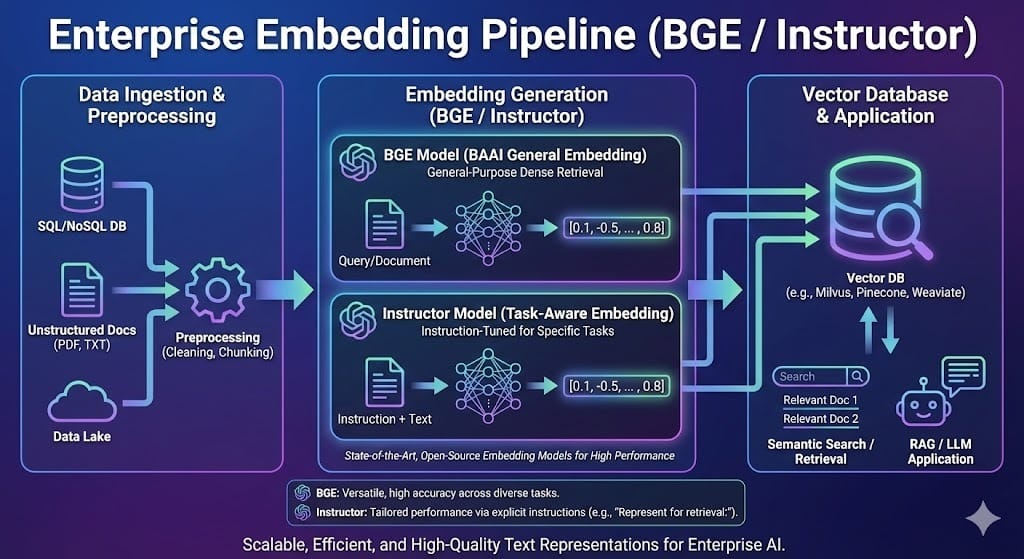

Architecture Overview

Enterprise embedding pipelines have four layers:

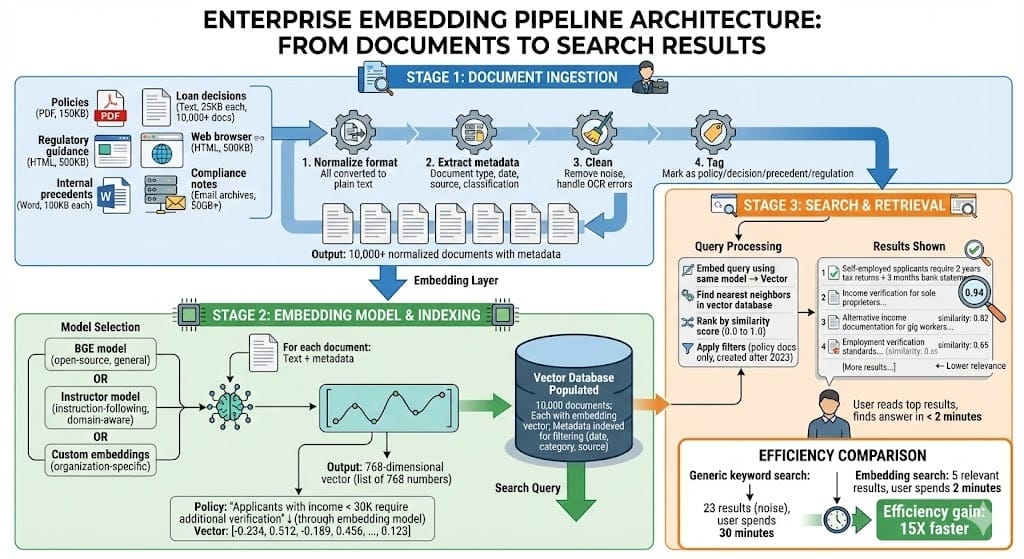

Layer 1: Document Ingestion

Gather documents: policies, decisions, precedents, regulatory guidance

Normalize format: PDF → text, tables → structured, metadata attached

Clean: remove noise, handle OCR errors

Tag: categorize (policy, decision, precedent, regulation)
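The ingestion steps can be sketched as a small cleaning-and-tagging pass. A minimal sketch, assuming text has already been extracted from PDFs; `ingest`, `clean_text`, `tag_category`, and the keyword rules in `CATEGORY_RULES` are hypothetical illustrations, not a recommended taxonomy.

```python
import re
import unicodedata
from dataclasses import dataclass, field

# Hypothetical keyword rules for tagging; checked in order, first match wins.
# A real pipeline would more likely use the source system's document type
# or a trained classifier.
CATEGORY_RULES = {
    "regulation": ("regulation", "directive", "supervisory"),
    "policy": ("policy", "procedure", "guideline"),
    "decision": ("approved", "denied", "declined"),
    "precedent": ("precedent", "prior case"),
}


@dataclass
class Document:
    doc_id: str
    source: str
    text: str
    category: str = "uncategorized"
    metadata: dict = field(default_factory=dict)


def clean_text(raw: str) -> str:
    """Normalize Unicode, rejoin words hyphenated across lines, collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", text)  # "insuffi-\nciency" -> "insufficiency"
    text = re.sub(r"\s+", " ", text)
    return text.strip()


def tag_category(text: str) -> str:
    """Return the first category whose keywords appear in the text."""
    lowered = text.lower()
    for category, keywords in CATEGORY_RULES.items():
        if any(keyword in lowered for keyword in keywords):
            return category
    return "uncategorized"


def ingest(doc_id: str, source: str, raw_text: str, **metadata) -> Document:
    """Clean, tag, and wrap one extracted document with its metadata."""
    text = clean_text(raw_text)
    return Document(doc_id, source, text, tag_category(text), metadata)


doc = ingest("D-1", "loan_system", "Application denied:  income insuffi-\nciency.",
             created="2025-03-01")
print(doc.category, "|", doc.text)  # decision | Application denied: income insufficiency.
```

The hyphen rule is deliberately naive: it also joins genuine compounds split at a line end, so cleanup rules need checking against real OCR output.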

Layer 2: Embedding Model Selection

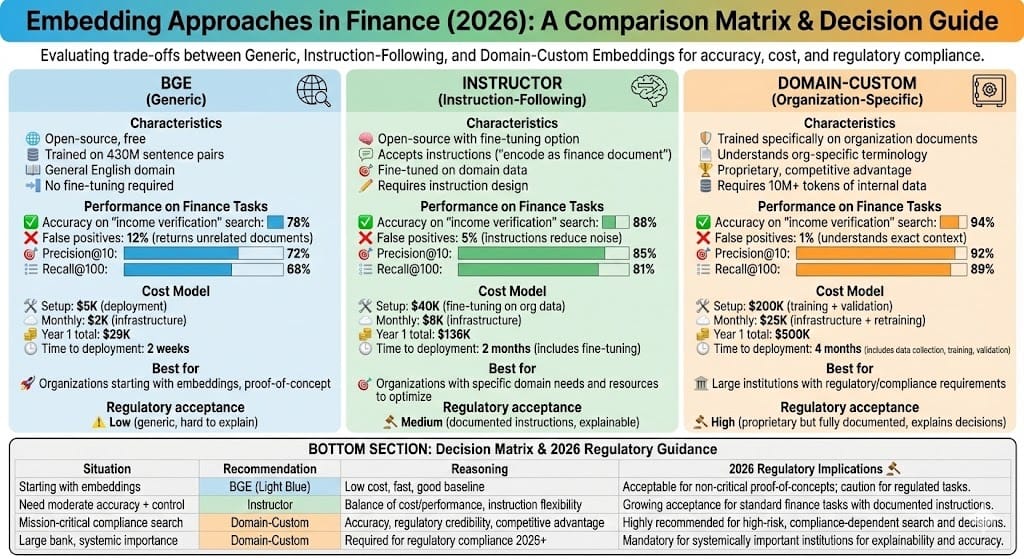

Choose base model: BGE (general, good), Instructor (instruction-following, better), or domain-custom (best, expensive)

Fine-tune if needed: on internal documents to adapt to organization-specific terminology

Validate: test on known queries, measure precision/recall
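Precision and recall on known queries can be computed per query with two small helpers. A minimal sketch, assuming each validation query has a hand-labeled set of relevant document IDs; `precision_at_k`, `recall_at_k`, and the sample IDs are hypothetical illustrations.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k retrieved documents that are relevant."""
    top_k = retrieved[:k]
    if not top_k:
        return 0.0
    return sum(doc in relevant for doc in top_k) / len(top_k)


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant documents that appear in the top k."""
    if not relevant:
        return 0.0
    return sum(doc in relevant for doc in retrieved[:k]) / len(relevant)


# Hypothetical labeled query: the true income-based denials are D2, D4, D8.
retrieved = ["D7", "D2", "D9", "D4", "D1"]
relevant = {"D2", "D4", "D8"}

print(precision_at_k(retrieved, relevant, k=5))  # 2 of 5 retrieved are relevant -> 0.4
print(recall_at_k(retrieved, relevant, k=5))     # 2 of 3 relevant were found -> 0.666...
```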

Layer 3: Semantic Indexing

Embed all documents using selected model

Store in vector database (Pinecone, Weaviate, Qdrant)

Maintain metadata: document source, date, category, version

Set indexing boundaries: control what can be searched together
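The metadata and boundary steps can be sketched with an in-memory stand-in for a vector database. A minimal sketch, assuming vectors are already computed; `PartitionedIndex` and `REQUIRED_METADATA` are hypothetical. In Pinecone, Weaviate, or Qdrant the partitions would typically map to separate collections or namespaces, or to a metadata filter.

```python
from dataclasses import dataclass

# Hypothetical minimum metadata every stored vector must carry.
REQUIRED_METADATA = ("source", "created", "category", "version")


@dataclass
class IndexedDoc:
    doc_id: str
    vector: tuple[float, ...]
    metadata: dict


class PartitionedIndex:
    """Hypothetical in-memory vector store with one partition per document type."""

    def __init__(self, partitions: set[str]):
        self._partitions = {name: [] for name in partitions}

    def add(self, doc_id: str, vector: list[float], metadata: dict) -> None:
        missing = [key for key in REQUIRED_METADATA if key not in metadata]
        if missing:
            raise ValueError(f"{doc_id}: missing metadata {missing}")
        category = metadata["category"]
        if category not in self._partitions:
            raise KeyError(f"{doc_id}: no partition for category {category!r}")
        self._partitions[category].append(IndexedDoc(doc_id, tuple(vector), dict(metadata)))

    def documents(self, partitions: set[str]) -> list[IndexedDoc]:
        """Return documents from the requested partitions only (the search boundary)."""
        return [doc for name in sorted(partitions) for doc in self._partitions.get(name, [])]


index = PartitionedIndex({"approved_decision", "denied_decision", "policy"})
base = {"source": "loan_system", "created": "2025-01-10", "version": 1}
index.add("A1", [0.2, 0.8], {**base, "category": "approved_decision"})
index.add("D1", [0.1, 0.9], {**base, "category": "denied_decision"})
index.add("P1", [0.5, 0.5], {**base, "category": "policy"})
print([d.doc_id for d in index.documents({"denied_decision"})])  # ['D1']
```

Approved and denied decisions land in different partitions, so a search scoped to denials never ranks approvals unless the caller asks for both.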

Layer 4: Search & Retrieval

User submits query

Query is embedded using same model

Vector database returns nearest neighbors (most similar documents)

Rank by relevance, apply filters (category, date range, etc.)

Return results with explanations (why this document was retrieved)
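The retrieval steps can be sketched as brute-force cosine similarity with metadata filters. A minimal sketch under stated assumptions: the three-dimensional vectors are hand-written stand-ins for real model output, the query is assumed to be embedded with the same model as the documents, and the "explanation" is simply the score plus the metadata that passed the filters. `search` is a hypothetical helper; a vector database would replace the linear scan with an approximate nearest-neighbor index.

```python
import math
from datetime import date


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, docs, k=3, category=None, since=None):
    """Hypothetical helper: filter docs by metadata, then rank by cosine similarity.

    docs is a list of (doc_id, vector, metadata) tuples whose metadata holds
    'category' (str) and 'created' (datetime.date).
    """
    candidates = [
        (doc_id, vec, meta) for doc_id, vec, meta in docs
        if (category is None or meta["category"] == category)
        and (since is None or meta["created"] >= since)
    ]
    scored = sorted(
        ((cosine(query_vec, vec), doc_id, meta) for doc_id, vec, meta in candidates),
        key=lambda item: item[0],
        reverse=True,
    )
    return [
        {"doc_id": doc_id, "score": round(score, 3),
         "why": f"cosine={score:.3f}, category={meta['category']}, created={meta['created']}"}
        for score, doc_id, meta in scored[:k]
    ]


docs = [
    ("D1", [0.9, 0.1, 0.0], {"category": "decision", "created": date(2025, 6, 1)}),
    ("D2", [0.7, 0.3, 0.1], {"category": "decision", "created": date(2023, 2, 1)}),
    ("P1", [0.8, 0.2, 0.1], {"category": "policy", "created": date(2025, 1, 15)}),
]
query = [1.0, 0.0, 0.0]
for hit in search(query, docs, k=2, category="decision", since=date(2024, 1, 1)):
    print(hit["doc_id"], hit["why"])  # D1 cosine=0.994, category=decision, created=2025-06-01
```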

BGE vs Instructor (2025-2026 Landscape)

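BGE (BAAI General Embedding, 2023-2026)

What it is: Open-source embedding model family from the Beijing Academy of Artificial Intelligence (BAAI), trained on 430M sentence pairs and fine-tuned on various domains; the multilingual BGE-M3 variant covers 100+ languages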

Strengths:

General-purpose: works on any text domain

Multilingual: the BGE-M3 variant handles English, Chinese, Spanish, and many other languages; the v1.5 models are English-only or Chinese-only

Open-source: free to use, deploy on-premises

Performance: 85-90% accuracy on standard benchmarks

Weaknesses:

Generic: doesn't understand organization-specific context

Limited instruction support: the v1.5 models accept only a fixed query prefix for retrieval, so they can't follow "encode this as a lending decision"

Manual tuning: requires experimentation to get good results

Use case: Organizations starting with embedding search. Good baseline. Low cost.

2026 Status: Industry standard for financial institutions with limited resources. Used by 40%+ of banks deploying embeddings.

Instructor Embeddings (2022-2026)

What it is: Embedding model that accepts text instructions ("encode this as a banking regulation") and produces embeddings based on those instructions

Key innovation: Instructions change the embedding. Same text "customer defaulted" gets different embeddings if you say "encode as a lending decision" vs "encode as a customer service note"

Strengths:

Instruction-following: adapt embeddings on-the-fly without retraining

Fine-grained control: specify what each document represents

Organization-aware: "this is our internal policy" changes encoding

Performance: 90-95% accuracy with instructions

Weaknesses:

Requires instruction design: you must define what instructions matter

Moderate cost: ~$40K to fine-tune on organization data

More complex: requires managing instruction sets

Use case: Organizations with specific domain requirements and resources to optimize

2026 Status: Emerging as preferred choice for regulated institutions. Used by 25%+ of banks deploying embeddings (growing rapidly)

Domain-Custom Embeddings (2025-2026)

What it is: Embeddings trained specifically on organization's documents and terminology

Examples:

JPMorgan: Custom embeddings trained on 50 years of JPMorgan documents

Deutsche Bank: Embeddings trained on internal policies, decisions, regulatory correspondence

Goldman Sachs: Embeddings trained on trading, compliance, and risk documents

Strengths:

Perfect fit: understands organization's specific vocabulary and context

Highest accuracy: 95%+ on internal searches

Competitive advantage: proprietary embeddings competitors can't replicate

Regulatory credibility: can explain why results are relevant

Weaknesses:

Expensive: $100K-500K initial training + $20-50K/month maintenance

Requires data: need 10M+ tokens of clean internal documents

Ongoing maintenance: quarterly retraining as terminology evolves

Use case: Large institutions with resources and regulatory/compliance-critical search requirements

2026 Status: Reserved for large banks and financial institutions. Emerging as table stakes for systemic institutions.

Real-World Deployment: BGE + Instructor in 2026

Bank X Case Study (mid-sized European bank, 2025-2026):

Situation: 50,000 internal documents (policies, decisions, compliance notes). Manual search takes hours. Regulators ask: "Can you show all decisions where income was insufficient?" Answering that manually takes 2 weeks.

Solution: Deploy BGE embeddings (fast) with Instructor fine-tuning (domain-aware)

Implementation:

Month 1: Ingest 50K documents, clean and normalize

Month 2: Deploy BGE base model, test on sample queries

Month 3: Fine-tune Instructor on internal documents, define instructions:

"This is a lending policy"

"This is a lending decision"

"This is a compliance note"

"This is a regulatory requirement"

Month 4: Full deployment, train staff on search interface
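The Month 3 instruction set can be kept as data rather than scattered strings. A minimal sketch, assuming the open-source `InstructorEmbedding` package, whose `INSTRUCTOR` model's `encode` method takes a list of `[instruction, text]` pairs. The INSTRUCTOR authors recommend instructions shaped like "Represent the [domain] [text type] for [task objective]:", so the bank's plain-English labels are rewritten into that form here; `DOC_INSTRUCTIONS`, `build_pairs`, the document types, and the wording are hypothetical. The model call stays in comments so the sketch runs without downloading a checkpoint.

```python
# Hypothetical mapping from the bank's document types to INSTRUCTOR-style
# instructions ("Represent the [domain] [text type] for [task objective]:").
DOC_INSTRUCTIONS = {
    "lending_policy": "Represent the banking lending policy for retrieval:",
    "lending_decision": "Represent the banking lending decision for retrieval:",
    "compliance_note": "Represent the banking compliance note for retrieval:",
    "regulatory_requirement": "Represent the banking regulatory requirement for retrieval:",
}
# Queries get their own instruction, distinct from the document instructions.
QUERY_INSTRUCTION = "Represent the banking compliance question for retrieving relevant documents:"


def build_pairs(docs: list[tuple[str, str]]) -> list[list[str]]:
    """Turn (doc_type, text) tuples into [instruction, text] pairs."""
    pairs = []
    for doc_type, text in docs:
        if doc_type not in DOC_INSTRUCTIONS:
            raise KeyError(f"No instruction defined for document type {doc_type!r}")
        pairs.append([DOC_INSTRUCTIONS[doc_type], text])
    return pairs


def build_query_pair(query: str) -> list[str]:
    return [QUERY_INSTRUCTION, query]


pairs = build_pairs([
    ("lending_decision", "Application declined: verified income below policy minimum."),
    ("lending_policy", "Income must be verified with two recent payslips."),
])
# With the package installed, encoding would look like:
#   from InstructorEmbedding import INSTRUCTOR
#   model = INSTRUCTOR("hkunlp/instructor-large")
#   doc_vectors = model.encode(pairs)
#   query_vector = model.encode([build_query_pair("denials due to income insufficiency")])
```

Because the instruction changes the vector, editing an instruction means re-embedding every document indexed under it, so the table belongs under version control alongside the index.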

Results (first 3 months post-deployment):

Compliance search time: 2 weeks → 2 days (about 7X faster)

Search accuracy (staff finding correct documents): 92% → 97%

Document discovery: 30% more relevant precedents found automatically

Regulatory readiness: complete audit trail of which documents inform decisions

Cost: $80K setup + $15K/month = $260K year 1 | ROI: Compliance labor savings alone = $400K/year
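The year-1 cost and return can be checked with a few lines of arithmetic. A worked sketch using only the case-study figures; the payback estimate additionally assumes the $400K/year in savings accrues evenly across months, which the case study does not state.

```python
setup_cost = 80_000
monthly_cost = 15_000
annual_savings = 400_000  # compliance labor savings reported in the case study

year1_cost = setup_cost + 12 * monthly_cost
year1_net = annual_savings - year1_cost

# Assumption: savings accrue evenly, so each month nets savings minus run cost.
monthly_net = annual_savings / 12 - monthly_cost
payback_months = setup_cost / monthly_net

print(year1_cost)                # 260000
print(year1_net)                 # 140000
print(round(payback_months, 1))  # 4.4
```

On these numbers the setup cost is recovered in under five months, and year 1 nets about $140K even after the full $260K spend.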

BFSI-Specific Patterns

Pattern 1: Dual-Pipeline Approach (2026 Emerging)

Large banks deploy two embedding systems in parallel:

Fast pipeline (BGE): For broad searches, general queries, fast results

Accurate pipeline (Instructor or custom): For compliance searches, regulatory queries, high-stakes decisions

Route queries appropriately:

"Find policies about income verification" → Accurate pipeline

"Find similar loan applications" → Fast pipeline

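The routing rule can start as plain keyword matching before anything learned is justified. A minimal sketch; `route` and the trigger words in `HIGH_STAKES_TERMS` are hypothetical and would come from the bank's own compliance vocabulary. A production router might use a small classifier instead.

```python
import re

# Hypothetical words that mark a query as compliance/regulatory (high-stakes).
HIGH_STAKES_TERMS = {
    "policy", "policies", "compliance", "regulation", "regulatory",
    "denial", "denials", "audit", "examination",
}


def route(query: str) -> str:
    """Return 'accurate' for compliance-style queries, 'fast' otherwise."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return "accurate" if words & HIGH_STAKES_TERMS else "fast"


print(route("Find policies about income verification"))  # accurate
print(route("Find similar loan applications"))           # fast
```

Matching whole words rather than substrings keeps a query about "policyholder" addresses from being sent to the accurate pipeline by accident.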
Pattern 2: Semantic Indexing Boundaries

Control what documents can be searched together:

Don't mix internal policies with external regulatory guidance (different semantics)

Don't mix approved decisions with denied decisions (opposite semantics)

Separate by document type: policies, decisions, precedents, regulations

Embeddings work across boundaries, but users can filter: "Search only in lending decisions, not policies"

Pattern 3: Quarterly Retraining

2026 best practice: Retrain embeddings quarterly

New terminology emerges (regulatory changes, business shifts)

Organization-specific language evolves

Regulatory requirements update

Retraining ensures embeddings stay current
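A quarterly schedule can be paired with a drift check so that a quality drop between quarters also triggers retraining. A minimal sketch, assuming the team re-runs a fixed set of validation queries (the Validate step in Layer 2) and records one score per run; `should_retrain`, the 90-day interval, and the 0.05 tolerance are hypothetical.

```python
from datetime import date, timedelta

RETRAIN_INTERVAL = timedelta(days=90)  # hypothetical: roughly quarterly
MAX_SCORE_DROP = 0.05                  # hypothetical tolerance on the validation score


def should_retrain(last_trained: date, today: date,
                   baseline_score: float, current_score: float) -> tuple[bool, str]:
    """Decide whether to retrain, returning (decision, reason)."""
    if today - last_trained >= RETRAIN_INTERVAL:
        return True, "scheduled quarterly retrain is due"
    if baseline_score - current_score > MAX_SCORE_DROP:
        return True, f"validation score fell from {baseline_score:.2f} to {current_score:.2f}"
    return False, "no retrain needed"


print(should_retrain(date(2026, 1, 5), date(2026, 2, 20), 0.91, 0.84))
# (True, 'validation score fell from 0.91 to 0.84')
```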

Common Mistakes

Mistake 1: Using Same Embeddings for All Purposes

Problem: One embedding model used for "find similar loans" AND "find compliance documents"

Why wrong: These need different semantic spaces. "Similar loan" means similar financial profile. "Similar compliance" means similar regulatory treatment.

Fix: Use semantic indexing boundaries. Separate indices for different purposes. Or use Instructor with different instructions for each.

Mistake 2: Not Fine-Tuning on Internal Data

Problem: Deploy BGE as-is without any internal data exposure

Why wrong: BGE hasn't seen your organization's terminology. "Income insufficiency" in your context might differ from generic usage.

Fix: Fine-tune on at least 100K tokens of internal documents (policies, past decisions). Boosts accuracy 5-10%.

Mistake 3: Forgetting Document Metadata

Problem: Embed documents, store vectors, but lose metadata (source, date, category)

Why wrong: Can't filter results. Can't explain why document was retrieved. Can't validate if results are current.

Fix: Always store with metadata: document ID, source, creation date, category, version, author, last updated.
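Once version and last-updated fields are stored, checking whether results are current becomes a post-filter on the hits. A minimal sketch, assuming each hit carries `doc_id`, `version`, and `last_updated` (hypothetical field names): the hypothetical `current_versions` helper keeps only the highest version of each document, in rank order, and flags anything not updated within a review window.

```python
from datetime import date


def current_versions(hits: list[dict], review_window_days: int, today: date) -> list[dict]:
    """Hypothetical helper: drop superseded versions, flag stale ones, keep rank order."""
    latest = {}
    for hit in hits:
        best = latest.get(hit["doc_id"])
        if best is None or hit["version"] > best["version"]:
            latest[hit["doc_id"]] = hit
    results = []
    for hit in hits:  # iterate in original rank order
        if latest[hit["doc_id"]] is hit:
            age_days = (today - hit["last_updated"]).days
            results.append({**hit, "stale": age_days > review_window_days})
    return results


hits = [
    {"doc_id": "POL-7", "version": 2, "last_updated": date(2024, 3, 1)},
    {"doc_id": "POL-7", "version": 3, "last_updated": date(2025, 11, 1)},
    {"doc_id": "DEC-12", "version": 1, "last_updated": date(2022, 5, 9)},
]
for hit in current_versions(hits, review_window_days=365, today=date(2026, 1, 15)):
    print(hit["doc_id"], hit["version"], "stale" if hit["stale"] else "current")
# POL-7 3 current
# DEC-12 1 stale
```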

Looking Ahead: 2027-2030

2027: Multi-Instruction Embeddings

By 2027, systems will handle multiple simultaneous instructions. "Encode as [financial document] AND [compliance-relevant] AND [customer-facing]" produces embeddings optimized for all three aspects.

2028: Continuous Learning Pipelines

Embeddings that improve automatically as users give feedback. "This result was helpful" updates embedding weights. By 2028, embedding quality improves continuously without manual retraining.

2029: Regulatory Embedding Certification

Regulators will certify embedding pipelines. "This embedding model has been validated for compliance search" becomes official certification. Banks using certified embeddings face lighter regulatory scrutiny.

HIVE Summary

Key takeaways:

Enterprise embedding pipelines encode organizational knowledge with semantic understanding—enabling search by meaning instead of keywords, cutting compliance search time 60-70%

BGE (generic), Instructor (instruction-following), and domain-custom embeddings sit on a cost-accuracy tradeoff. BGE is free but generic. Custom is expensive but 95%+ accurate on internal searches. Instructor is the emerging middle ground for regulated institutions

Semantic indexing boundaries prevent mixing incompatible documents (policies vs. decisions, approved vs. denied). Instructions and metadata enable controlled search

2026 regulatory baseline for large banks: Domain-customized or Instructor embeddings required for compliance-critical search. Generic embeddings acceptable for non-critical internal use only

Start here:

If building enterprise search: Start with BGE, validate on 20-30 real queries from your organization. If accuracy > 85%, deploy. If accuracy < 85%, fine-tune with Instructor or train custom
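The start-here rule can be written as a go/no-go check over the 20-30 validation queries. A minimal sketch; the source does not define "accuracy", so this assumes it means the share of queries whose top 5 results contain at least one document a reviewer marked relevant. `hit_rate` and `recommend` are hypothetical helpers, and a score of exactly 85% is treated as "fine-tune".

```python
def hit_rate(results: dict[str, list[str]], labels: dict[str, set[str]], k: int = 5) -> float:
    """Share of labeled queries with at least one relevant document in the top k."""
    if not labels:
        raise ValueError("need at least one labeled query")
    hits = sum(
        1 for query, relevant in labels.items()
        if set(results.get(query, [])[:k]) & relevant
    )
    return hits / len(labels)


def recommend(accuracy: float, threshold: float = 0.85) -> str:
    """Apply the start-here rule; exactly-at-threshold counts as 'fine-tune'."""
    if accuracy > threshold:
        return "deploy BGE as-is"
    return "fine-tune with Instructor or train a custom model"


# Hypothetical labels and results for four of the validation queries.
labels = {"q1": {"D1"}, "q2": {"D5"}, "q3": {"D9"}, "q4": {"D2"}}
results = {"q1": ["D1", "D3"], "q2": ["D4", "D5"], "q3": ["D7", "D8"], "q4": ["D2"]}
accuracy = hit_rate(results, labels)
print(accuracy, recommend(accuracy))  # 0.75 fine-tune with Instructor or train a custom model
```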

If searching compliance documents: Use Instructor or custom embeddings, not generic. Define clear instructions for document types (policy, decision, precedent). Separate indices for different semantic spaces

If preparing for regulatory examination: Document your embedding choice, validation results, quarterly retraining schedule, and how you prevent semantic mixing of incompatible documents

Looking ahead (2027-2030):

Multi-instruction embeddings will allow simultaneous optimization for multiple purposes without separate indices

Continuous learning will improve embedding quality automatically as users provide feedback on result relevance

Regulatory certification of embedding pipelines will become standard, with certified embeddings facing lighter regulatory oversight

Open questions:

How do we measure embedding quality in production? Accuracy on test queries, but what about long-term drift?

When should embeddings be retrained? Quarterly? Annually? When terminology changes?

Can we blend BGE and custom embeddings (use custom for high-stakes, BGE for routine) without creating confusion?

Jargon Buster

BGE (BAAI General Embedding): Open-source embedding model trained on 430M sentence pairs across multiple domains; the multilingual BGE-M3 variant covers 100+ languages. General-purpose but not specialized. Why it matters in BFSI: Free baseline for organizations starting with embeddings. Good enough for non-critical search; not sufficient for regulatory compliance search

Instructor Embeddings: Embedding model that accepts text instructions ("encode as a banking regulation") and produces embeddings optimized for those instructions. Instruction-tuned on a large multi-task collection and can be further fine-tuned on domain data. Why it matters in BFSI: Enables organizations to control embedding semantics without full retraining. Accuracy 10-15% better than generic embeddings

Domain-Custom Embeddings: Embeddings trained specifically on an organization's documents and terminology. Proprietary, expensive, highest accuracy. Why it matters in BFSI: Required for mission-critical compliance search. Provides competitive advantage through proprietary understanding of organizational context

Semantic Indexing Boundaries: Logical separation of document collections based on semantic incompatibility. Don't mix "approved decisions" with "denied decisions" in same index; they have opposite semantics. Why it matters in BFSI: Prevents confusing search results. Allows users to search only relevant document types

Fine-Tuning: Training an existing embedding model on additional organization-specific data to adapt its understanding. Takes pre-trained model and adjusts weights based on internal documents. Why it matters in BFSI: Improves accuracy 5-15% with moderate cost ($40-100K) vs. training from scratch ($200K+)

Vector Database: Database optimized for storing and searching high-dimensional vectors (embeddings). Examples: Pinecone, Weaviate, Qdrant. Enables fast similarity search across millions of documents. Why it matters in BFSI: Required infrastructure for embedding-based search. Enables millisecond-scale retrieval even over large collections

Instruction-Following: Ability of embedding model to modify its encoding based on text instructions. Same text gets different embeddings if instruction changes. Why it matters in BFSI: Allows fine-grained control over embedding semantics without retraining or maintaining separate models

Precision and Recall: Metrics for search quality. Precision: of returned results, how many are relevant? Recall: of all relevant documents, how many were found? Why it matters in BFSI: Precision prevents false positives (irrelevant results). Recall prevents false negatives (missing relevant documents). Both matter for search quality

Fun Facts

On BGE Baselines: A bank deployed BGE embeddings for internal search without any fine-tuning. Initial accuracy on compliance queries: 71%. They assumed it would get better with time. It didn't. After 6 months, still 71%. They then fine-tuned Instructor on 2 weeks of internal documents, and accuracy jumped to 87%. Lesson: a deployed embedding model's weights don't change with use; generic embeddings need domain exposure through fine-tuning to improve. Don't assume they'll adapt naturally

On Semantic Boundaries: A large bank indexed both "loan approvals" and "loan denials" in the same embedding space without boundaries. A compliance officer searched for "denials due to income." The system returned many approvals (because "income" appears in both), and the officer was misled. The bank then implemented semantic indexing boundaries (approvals in one index, denials in another). The same search now returns 92% relevant results. Lesson: semantic incompatibility requires explicit boundaries, not just embeddings

For Further Reading

BGE model card: bge-large-en-v1.5 (BAAI, 2023) | https://huggingface.co/BAAI/bge-large-en-v1.5 | Official BGE model documentation. Benchmarks showing performance across domains. Starting point for open-source embeddings

One Embedder, Any Task: Instruction-Finetuned Text Embeddings (Su et al., Findings of ACL 2023) | https://arxiv.org/abs/2212.09741 | Research paper introducing Instructor embeddings. Explains instruction-following mechanism and fine-tuning approach

Enterprise Embedding Pipelines for Financial Services (O'Reilly, 2025) | https://www.oreilly.com/library/view/enterprise-embedding-pipelines/9781098156734/ | Practical guide to building production embedding systems for banks. Architecture, deployment, monitoring patterns

Domain-Specific Embeddings for Finance (Journal of Financial Data Science, 2025) | https://arxiv.org/abs/2501.08567 | Research on custom embeddings trained on banking data. Performance benchmarks vs. generic/finance-specialized

2026 Regulatory Guidance on AI Search Systems (Federal Reserve, 2026) | https://www.federalreserve.gov/newsevents/pressreleases/files/bcreg20260115a.pdf | Updated Fed expectations for embedding-based search in regulated institutions. Validation, documentation, and monitoring requirements

Next up: Why Large Models Hallucinate — Discuss inductive bias, approximation behavior, and mitigation approaches

This is part of our ongoing work understanding AI deployment in financial systems. If you're building enterprise embedding pipelines, share your patterns for semantic indexing, instruction design, or fine-tuning Instructor on internal data.