Quick Recap: Modern AI systems don't "read" documents the way humans do. They convert text into mathematical coordinates (embeddings), process sequences using attention mechanisms, and work with fixed-size chunks called tokens. Understanding this gap between human reading and machine processing is critical when deploying document analysis in regulated environments.

It's Tuesday morning at a mid-sized European bank. The credit risk team just deployed an AI system to automatically extract key figures from loan applications—income, debt ratios, employment history. The model works great in testing, achieving 94% accuracy on validation data.

Then a regulator asks: "Walk me through how the system handled the loan application from last Tuesday."

The engineer pulls up the application. It's a scanned PDF of a handwritten form, mixed with printed sections, a table, and a signature. The AI extracted the numbers correctly, but explaining why it chose those specific values from that specific location suddenly becomes impossible. The system doesn't "understand documents" in the human sense. It processes tokens, patterns, and embeddings.

This gap between what humans think AI does with documents and what it actually does is where compliance risks live. It is also why engineers need to understand the machinery underneath.

How Machines Actually Process Documents

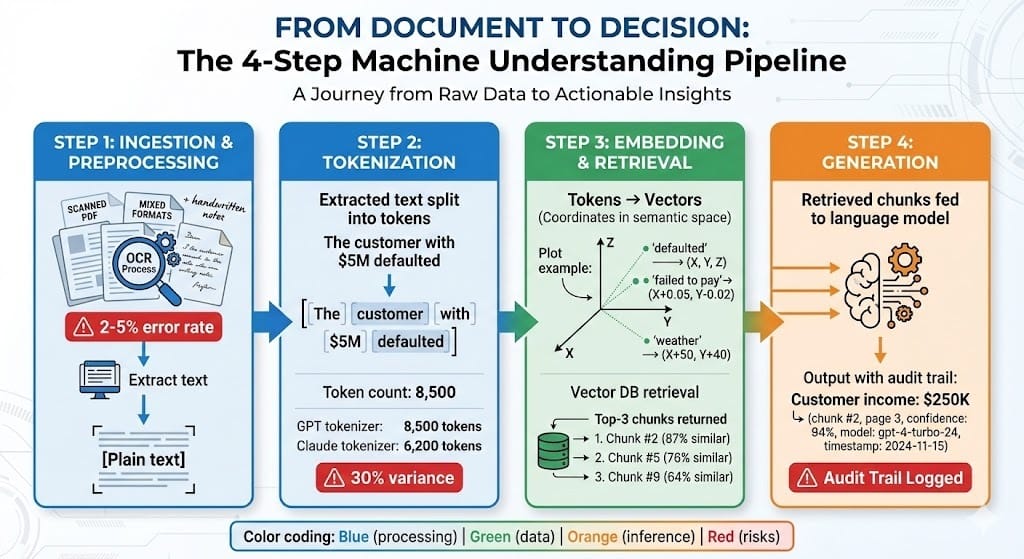

When you hand a document to a machine learning model, several transformations happen before any "understanding" occurs.

First: Tokenization

Humans read words. Machines read tokens: small pieces of text that average roughly 4 characters of English, though the figure varies by tokenizer and language. The sentence "The customer defaulted on their mortgage" becomes something like: ["The", " customer", " default", "ed", " on", " their", " mortgage"].

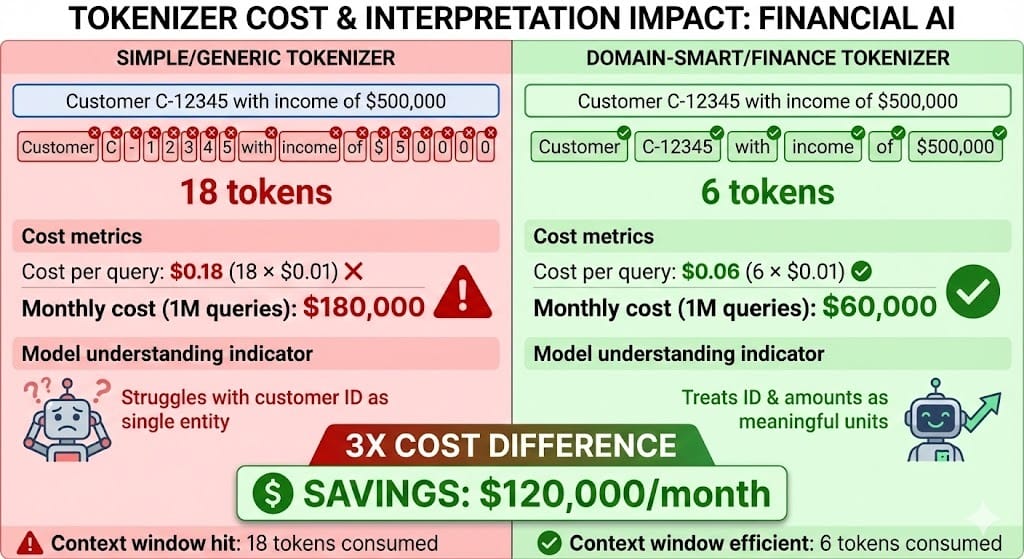

Different tokenizers break text differently. Some treat punctuation as separate tokens. Some handle numbers as single tokens or split them digit-by-digit. A 10-page loan application might become 8,000-15,000 tokens depending on the tokenizer. This matters because:

Context windows have limits: A transformer model can only process a fixed number of tokens at once—typically 2,000-128,000 depending on the model. A long loan agreement might exceed this window, forcing the system to truncate or chunk the document.

Token count affects cost and latency: Cloud APIs charge per token. A poorly chosen tokenizer can make your document processing 30% more expensive than necessary.

Information loss happens at tokenization: If a tokenizer splits a customer ID "C-12345" into ["C", "-", "1", "2", "3", "4", "5"], the model has to reconstruct that meaning from context. Sometimes it fails.

Why this matters in BFSI: When regulators ask "why did the system flag this account as high-risk?", you need to trace the decision back through tokens. If crucial information was lost at tokenization, you can't reconstruct the explanation.
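
To make the customer ID example concrete, here is a minimal sketch with two toy tokenizers. Both functions are hypothetical stand-ins written for illustration; they are not the tokenizers used by any real model, which rely on learned subword vocabularies.

```python
import re

def whole_word_tokens(text: str) -> list[str]:
    """Toy tokenizer A: split on whitespace only, so 'C-12345' stays intact."""
    return text.split()

def fine_grained_tokens(text: str) -> list[str]:
    """Toy tokenizer B: keep letter runs, but emit each digit and symbol separately."""
    return re.findall(r"[A-Za-z]+|\d|[^\sA-Za-z\d]", text)

def exceeds_context(tokens: list[str], context_window: int) -> bool:
    """True when the token sequence would need truncation or chunking."""
    return len(tokens) > context_window

text = "Customer C-12345 defaulted"
for tokenize in (whole_word_tokens, fine_grained_tokens):
    tokens = tokenize(text)
    print(tokenize.__name__, len(tokens), tokens)
```

The same three-word string yields 3 tokens under the first scheme and 9 under the second, which is the kind of gap that changes both cost and whether a document fits in the context window.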

Second: Encoding to Embeddings

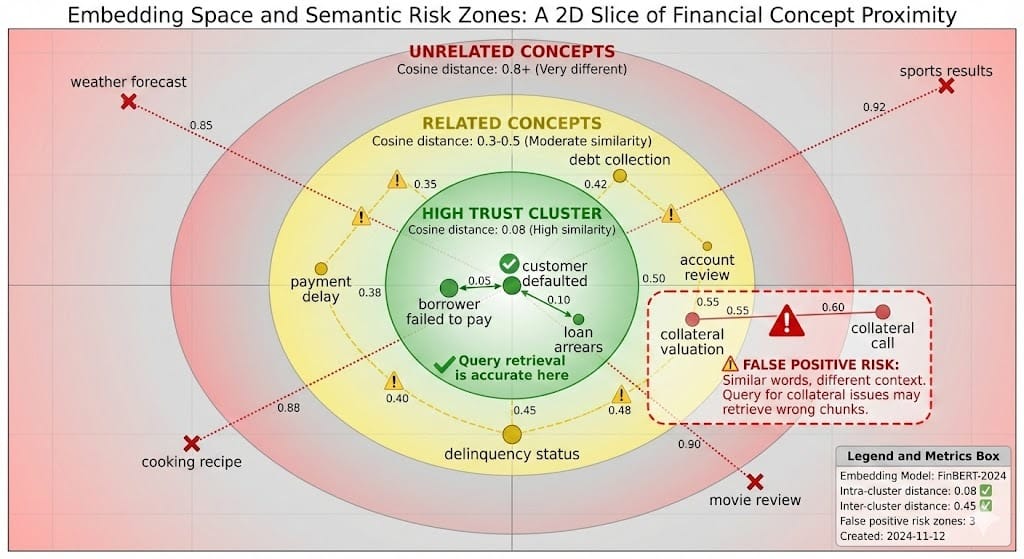

Once text is tokenized, each token becomes a vector—a list of numbers representing meaning in mathematical space. An embedding is like assigning coordinates to words.

Think of embeddings like plotting words on a map. "Customer defaulted" and "borrower failed to pay" should be close together (similar meaning). "Quarterly earnings report" should be far away. The model learns these coordinates during training.

For documents, this happens at multiple scales:

Token embeddings: Each token gets coordinates

Contextual embeddings: The same token gets different coordinates depending on context. The word "bank" in "river bank" has different coordinates than "investment bank"

Sequence embeddings: The entire document (or chunk) gets coordinates representing its overall meaning

Why this matters in BFSI: Different embedding models produce different coordinate systems. An older embedding model trained before 2024 might not represent newer vocabulary such as "climate risk disclosures" or "ESG metrics" in the way 2024 documents use it. Switching embedding models mid-deployment can cause sudden shifts in document similarity rankings.
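
The "map" analogy can be sketched with cosine similarity, a common way to compare embeddings. The three-dimensional vectors below are hand-made for illustration only; real embedding models produce hundreds or thousands of learned dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical coordinates, chosen by hand rather than produced by a model.
toy_embeddings = {
    "customer defaulted": [0.9, 0.1, 0.0],
    "borrower failed to pay": [0.8, 0.2, 0.1],
    "quarterly earnings report": [0.1, 0.1, 0.9],
}

anchor = toy_embeddings["customer defaulted"]
for phrase, vector in toy_embeddings.items():
    print(f"{phrase!r}: {cosine_similarity(anchor, vector):.2f}")
```

With these made-up numbers the two default-related phrases score close to 1 and the earnings phrase scores near 0. A different embedding model would assign different coordinates, so the scores (and any thresholds built on them) would change.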

Third: Sequence Processing with Attention

Here's where transformers become powerful. Rather than processing words one-at-a-time (like old RNN models), transformers process entire sequences at once using attention mechanisms.

Attention asks: "For each token, which other tokens are most relevant?"

In the sentence "The customer with $5M in assets defaulted on their mortgage", the attention mechanism might learn that "defaulted" is most relevant to "customer" and "$5M", less relevant to "their" and "mortgage". These weights are learned during training, not written by hand, and they differ across layers and attention heads. Together they create a map of relationships across the entire document.
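
As a rough sketch of the idea, scaled dot-product attention turns a query vector and a set of key vectors into weights that sum to 1. The two-dimensional vectors here are hand-picked assumptions chosen to mirror the example sentence; a real model learns them and uses many heads and layers.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """Scaled dot-product attention weights for one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical vectors: the query stands for "defaulted", the keys for other tokens.
tokens = ["customer", "$5M", "their", "mortgage"]
keys = [[1.0, 0.4], [0.8, 0.6], [0.1, 0.0], [0.3, 0.2]]
query = [1.0, 0.5]

for token, weight in zip(tokens, attention_weights(query, keys)):
    print(f"{token:>9}: {weight:.2f}")
```

Every token gets a nonzero weight; attention re-weights the sequence rather than selecting a single source, which is part of why "where did this value come from?" is hard to answer from attention alone.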

This is what enables modern models to understand long documents—they can track relationships between tokens separated by thousands of words. But it also creates complexity:

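Attention is expensive: Full self-attention compares every token with every other token, so cost grows with the square of the sequence length. A 10,000-token document means 100 million token pairs per attention head per layer, which adds up to billions of mathematical operations across the model. That's one reason long documents are usually chunked.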

Position matters differently: Traditional models process left-to-right. Transformers process all positions simultaneously, so they need "positional encoding" to remember token order. Different models encode position differently.

Context windows truncate information: If a document exceeds the model's context window, the system must choose which parts to keep. Some pipelines keep the beginning and end (losing middle sections). Others keep the most recent tokens. This choice changes what the model "sees".

The practical impact: A KYC document with compliance history across 10 years might be summarized as: beginning (customer name, current income) + end (recent account activity) + middle sections discarded. The model might miss important information from 2022 that explains current risk.
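
A head-plus-tail truncation policy like the one described can be sketched as follows. The function name, the 50/50 split, and the section labels are illustrative assumptions; the point is that the dropped range can be returned and logged instead of silently discarded.

```python
def truncate_head_tail(tokens, max_tokens, head_fraction=0.5):
    """Keep the start and end of a sequence and report the dropped index range."""
    if len(tokens) <= max_tokens:
        return list(tokens), None  # nothing dropped
    head_len = int(max_tokens * head_fraction)
    tail_len = max_tokens - head_len
    tail_start = len(tokens) - tail_len
    kept = list(tokens[:head_len]) + list(tokens[tail_start:])
    return kept, (head_len, tail_start)

# Each item stands in for a block of tokens from a KYC file (hypothetical labels).
sections = ["name", "income", "2021 history", "2022 default notice",
            "2023 history", "recent activity"]

kept, dropped = truncate_head_tail(sections, max_tokens=4)
print("kept:   ", kept)
if dropped is not None:
    print("dropped:", sections[dropped[0]:dropped[1]])
```

Recording the dropped range in the audit trail lets a reviewer see that the 2022 material never reached the model, rather than guessing why the answer ignored it.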

Document Processing in Production Systems

How Documents Actually Flow Through ML Systems

Production document processing involves several steps beyond raw model inference.

Step 1: Ingestion and Preprocessing

Documents arrive in multiple formats: PDFs, Word documents, scanned images, emails with attachments. The system must first extract text.

Scanned PDFs: Require OCR (Optical Character Recognition). This is error-prone. A handwritten "5" might become "S". A blurry "0" might become "O". These errors propagate downstream.

Structured documents: Formatted forms are easier but still have layout challenges. A table with 10 columns and 50 rows becomes a sequence of tokens. The spatial layout (column alignment, row grouping) is lost.

Mixed documents: A loan application with printed sections + handwritten notes + attachments becomes a linearized sequence. The system doesn't know "these two items were on the same page" or "this signature was at the end".

Why this matters in BFSI: OCR errors create regulatory risk. If the system misread a customer's income as "$500,000" instead of "$50,000", the decision is based on false information. When auditors reconstruct the decision, they see the erroneous number in the logs, but do they know it came from OCR error vs. genuine data? Your audit trail must capture preprocessing quality.
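
One way to capture preprocessing quality is to keep the raw OCR text and its confidence next to every extracted field, and to flag fields that need review. This is a minimal sketch: the `OcrField` structure, the 0.9 threshold, and the assumption that the OCR engine reports a per-field confidence between 0 and 1 are all hypothetical.

```python
import re
from dataclasses import dataclass

@dataclass
class OcrField:
    name: str
    raw_text: str       # exactly what the OCR engine returned
    confidence: float   # assumed to be reported by the engine, 0.0 to 1.0

# Accepts amounts such as "$50,000", "50000", or "$1,250.00".
AMOUNT = re.compile(r"\$?(\d{1,3}(,\d{3})+|\d+)(\.\d{2})?")

def review_reasons(field: OcrField, min_confidence: float = 0.9) -> list[str]:
    """Return the reasons this field should go to a human, if any."""
    reasons = []
    if field.confidence < min_confidence:
        reasons.append("low OCR confidence")
    if not AMOUNT.fullmatch(field.raw_text):
        reasons.append("not a well-formed amount")
    return reasons

for field in [
    OcrField("income", "$50,000", 0.97),
    OcrField("income", "$S0,000", 0.95),   # "5" misread as "S"
    OcrField("income", "$500,000", 0.62),  # plausible format, shaky read
]:
    print(field.raw_text, review_reasons(field))
```

Format checks catch character confusions such as "S" for "5", but not a well-formed wrong number. That is why the confidence score is logged as well: it is the only signal in this sketch that separates "$500,000" read cleanly from "$500,000" read badly.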

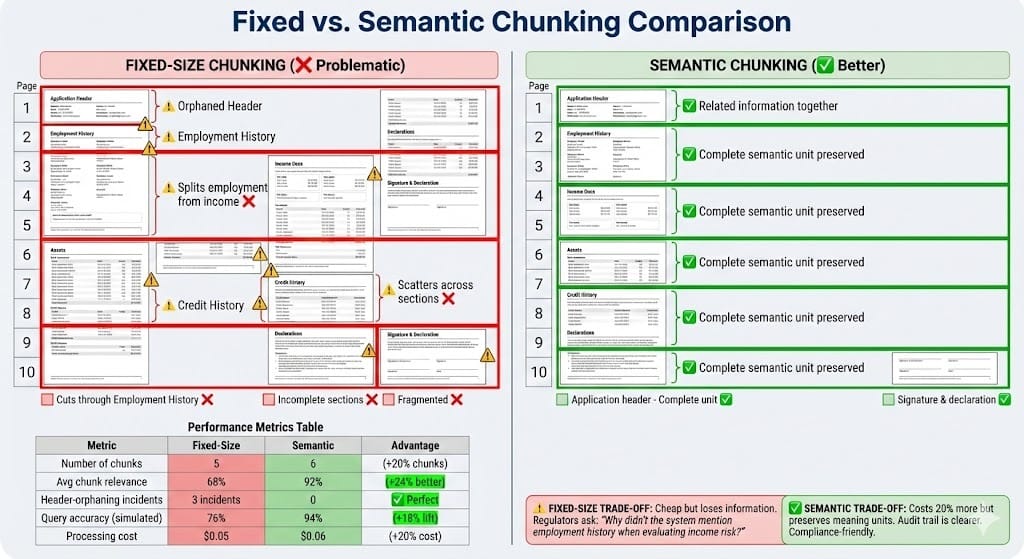

Step 2: Chunking

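Most production systems can't process entire long documents. At the 8,000-15,000 tokens per 10 pages estimated earlier, a 100-page regulatory filing runs to roughly 80,000-150,000 tokens, which means 40-75 chunks of 2,000 tokens each before any overlap is added.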

Chunking strategies matter significantly:

Fixed-size chunks: Simple but dumb. A chunk boundary might fall in the middle of a section, splitting related information across two chunks.

Semantic chunking: Split at section boundaries, paragraph boundaries, or sentence boundaries. Better but requires understanding document structure first.

Sliding windows: Overlap chunks so no information falls into gaps. Costs more (more chunks = more processing) but captures context better.

Different chunking approaches dramatically affect retrieval quality. Fixed-size chunking might split "Employment History" header from the employment details. The model processes "Employment History" as an orphaned fragment and "employment details" separately. Neither chunk has sufficient context. Semantic chunking keeps related information together.
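
The orphaned-header problem is easy to reproduce. The sketch below treats each line as one unit and compares a fixed-size sliding window with a simple heading-based splitter; the heading set and sample lines are made up, and real semantic chunkers use richer structure detection.

```python
def sliding_window_chunks(items, chunk_size, overlap=0):
    """Fixed-size chunks; consecutive chunks share `overlap` items."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(items), step):
        chunks.append(items[start:start + chunk_size])
        if start + chunk_size >= len(items):
            break  # the last chunk already reaches the end
    return chunks

def heading_chunks(lines, headings):
    """Start a new chunk whenever a known section heading appears."""
    chunks, current = [], []
    for line in lines:
        if line in headings and current:
            chunks.append(current)
            current = []
        current.append(line)
    if current:
        chunks.append(current)
    return chunks

lines = ["Applicant", "Name: A. Example", "Employment History", "2019-2024: Analyst"]
headings = {"Applicant", "Employment History"}

print(sliding_window_chunks(lines, chunk_size=3))  # header lands in chunk 1, details in chunk 2
print(heading_chunks(lines, headings))             # header stays with its details
```

Adding overlap to the fixed-size version reduces the damage but multiplies the number of chunks, which is the cost trade-off noted for sliding windows.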

The practical impact: A study of RAG systems in financial services (Q4 2024) showed that semantic chunking improved retrieval accuracy by 16-24% compared to fixed-size chunking, though at a 10-15% cost increase due to more chunks being created.

Step 3: Embedding and Retrieval

Each chunk is converted to an embedding (a vector of numbers). These vectors are stored in a vector database alongside the original text.

When a query arrives ("What is the customer's current income?"), it gets embedded using the same embedding model. The system finds chunks with the most similar embeddings (nearest neighbors in mathematical space).

Recent advances (2024) show that embedding model selection is critical. A 2024 benchmark of finance-specific embedding models found that domain-trained models (trained on banking documents) outperformed generic embeddings by 18-32% on financial retrieval tasks. Domain-adapted models in the FinBERT family and proprietary banking embeddings are increasingly common in production deployments.

Critical issue: The embedding model encodes meaning based on its training data. An older embedding model trained before 2024 might not represent "AI governance", "climate risk disclosures", or "ESG metrics" in the way 2024 documents use them. A query such as "What are ESG requirements?" run against 2023-era embeddings might return the wrong chunks.

Why this matters in BFSI: Embedding similarity is a proxy for relevance, not a guarantee of it, and many vector databases use approximate nearest-neighbor search on top of that. The system might retrieve the wrong chunks based on semantic overlap that's coincidental, not meaningful. A query about "collateral valuation" might retrieve a chunk about "collateral call procedures" (similar words, different meaning). Your system must validate retrieved chunks before generating answers.
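
A minimal retrieval sketch shows why similarity scores should be logged and validated rather than trusted. The in-memory index, the chunk IDs, the two-dimensional vectors, and the 0.75 cutoff are all illustrative assumptions; a production system would use a vector database and real embeddings.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def retrieve(query_vec, index, top_k=2, min_score=0.75):
    """Return (chunk_id, score) pairs, best first, dropping weak matches."""
    scored = sorted(
        ((cosine(query_vec, vec), chunk_id) for chunk_id, vec in index.items()),
        reverse=True,
    )
    return [(chunk_id, round(score, 3)) for score, chunk_id in scored[:top_k]
            if score >= min_score]

# Hypothetical index: chunk IDs mapped to hand-made vectors.
index = {
    "collateral_valuation": [0.9, 0.2],
    "collateral_call_procedures": [0.7, 0.6],
    "marketing_policy": [0.0, 1.0],
}
query = [1.0, 0.1]  # stands in for the embedded query "collateral valuation"

for chunk_id, score in retrieve(query, index):
    print(chunk_id, score)
```

Both collateral chunks clear the threshold here, even though only one answers the question. The score alone cannot tell them apart, so a downstream check (a reranker, a rule, or a human) still has to.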

Step 4: Generation with Retrieved Context

The retrieved chunks are fed into a language model along with the original question. The model generates an answer.

Current best practices (as of late 2024) show that retrieval-augmented generation (RAG) systems are increasingly favored over fine-tuned models in regulated environments. The reason: answers can be traced back to specific source chunks, making them auditable.

The risk: Hallucination. The model might generate plausible-sounding information that isn't in any retrieved chunk. It might also pick details from chunks that are outdated ("As of 2022, the customer's income was...") and present them as current.

Recent research (2024) shows that hallucination rates in financial document RAG systems range from 3-8% depending on model choice and retrieval quality. Large models (GPT-4, Claude 3 Opus) perform better than smaller models, but even they require guardrails.
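
One narrow but useful guardrail is to check that every number in a generated answer appears in at least one retrieved chunk. The sketch below is a heuristic under stated assumptions: it only looks at numeric strings, and the regular expression and sample texts are illustrative, not a complete hallucination detector.

```python
import re

# Matches integers, thousands-separated amounts, and decimals (e.g. 2022, 50,000, 3.5).
NUMBER = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?")

def ungrounded_numbers(answer: str, chunks: list[str]) -> list[str]:
    """Numbers that appear in the answer but in none of the retrieved chunks."""
    source_numbers = set()
    for chunk in chunks:
        source_numbers.update(NUMBER.findall(chunk))
    return [n for n in NUMBER.findall(answer) if n not in source_numbers]

chunks = ["As of 2022, the customer's income was $50,000."]

print(ungrounded_numbers("In 2022 the customer's income was $50,000.", chunks))
print(ungrounded_numbers("The customer's current income is $55,000.", chunks))
```

This catches an invented figure but not the second failure mode described above: an answer that repeats the 2022 figure and calls it "current" passes the check. Detecting stale-but-real values needs date-aware logic on top.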

Why This Matters for Compliance

Regulators reviewing AI-assisted decisions need to understand three things:

What did the system see? Which document chunks did it retrieve and process?

How did it interpret them? What embeddings and attention patterns led to this interpretation?

Was the output correct? Does the generated answer match the source documents?

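Standard ML explainability tools (SHAP, LIME) were designed mainly for tabular features and short inputs. They have text variants, but they don't show which chunks a retrieval pipeline selected or why, so they handle document AI poorly. For document AI, you need: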

Chunk-level audit trails: Every chunk retrieved must be logged with timestamps and versions

Embedding validation: Periodic checks that embeddings still align with human judgment

Hallucination detection: Automatic flagging when answers reference information not in retrieved chunks

Human review workflows: Escalation when confidence is low or when answers affect decisions
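
A chunk-level audit record might look something like the sketch below. The field names and version strings are assumptions made for illustration, not a regulatory schema; the idea is that each retrieved chunk is logged with its score and a content hash so the exact text can be verified later.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(query, retrieved, embedding_model, chunker_version):
    """Build a JSON-serializable record of what the system retrieved and with which versions.

    `retrieved` is a list of (chunk_id, similarity_score, chunk_text) tuples.
    """
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "query": query,
        "embedding_model": embedding_model,
        "chunker_version": chunker_version,
        "chunks": [
            {
                "chunk_id": chunk_id,
                "score": score,
                # Hash of the exact text the model saw, for later verification.
                "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
            }
            for chunk_id, score, text in retrieved
        ],
    }

record = audit_record(
    query="What is the customer's current income?",
    retrieved=[("doc42-chunk3", 0.91, "As of 2022, the customer's income was $50,000.")],
    embedding_model="example-embedder-v1",  # hypothetical version label
    chunker_version="heading-split-v2",     # hypothetical version label
)
print(json.dumps(record, indent=2))
```

Storing the hash rather than only the chunk ID matters when documents are re-chunked: the same ID can point at different text after a chunking change.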

How Financial Regulators Think About Document Processing

Central banks and financial regulators are increasingly focused on AI model transparency. Recent regulatory guidance (Fed 2023, ECB 2024, FCA updates through Q3 2024) specifically calls out:

Explainability for decisions: If an AI system contributes to a lending decision, underwriting decision, or compliance action, regulators want to understand the logic

Data lineage for documents: Where did the document come from? Has it been modified? When was it last reviewed?

Reproducibility of decisions: Given the same document and model version, the system should produce the same answer

Document processing creates specific challenges:

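OCR introduces irreproducibility: Re-running OCR on the same scanned document might produce slightly different results if the engine version, preprocessing settings, or cloud service behind it has changed (especially on handwriting or poor-quality scans). Unless the OCR output is stored alongside the decision, this makes decisions irreproducible.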

Embeddings are version-dependent: Upgrading your embedding model changes all the vectors. Old similarity rankings no longer apply. Regulators need to know when this happened and why.

Chunking is arbitrary: The choice to use 2,000-token chunks vs. 3,000-token chunks is a design decision that affects what the model "sees". Documenting these choices is critical.
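
One way to document these choices is to hash the full pipeline configuration and store the fingerprint with every decision. This is a sketch under assumptions: the configuration keys and version strings below are hypothetical, and a real pipeline would include every setting that affects output.

```python
import hashlib
import json

def pipeline_fingerprint(config: dict) -> str:
    """Stable SHA-256 fingerprint of a pipeline configuration."""
    # sort_keys makes the hash independent of dictionary ordering.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

config_a = {
    "ocr_engine": "example-ocr 5.3",         # hypothetical version labels
    "embedding_model": "example-embedder-v1",
    "chunk_size_tokens": 2000,
    "chunk_overlap_tokens": 200,
}
config_b = dict(config_a, chunk_size_tokens=3000)

print(pipeline_fingerprint(config_a)[:16])
print(pipeline_fingerprint(config_b)[:16])
```

If two decisions on the same document carry different fingerprints, the first question for an auditor becomes "what changed in the pipeline?" rather than "why did the model change its mind?".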

Latest Regulatory Positions (2024-2025)

The European Banking Authority (EBA) released updated AI governance guidelines in October 2024 specifically addressing document AI:

Requires banks to maintain version control on all embeddings used in production

Mandates testing for "embedding drift" quarterly

Calls for hallucination rate monitoring (< 5% recommended threshold)

The Federal Reserve (December 2024) emphasized in its AI risk management guidance:

Document retrieval systems must be auditable to specific chunks

Chunk selection logic must be documented and testable

Banks must maintain decision reproducibility for 6+ years

The UK FCA (ongoing) has been particularly strict about RAG systems used in lending:

Requires that high-stakes decisions (loan denials > £50K) be validated by human review if confidence < 90%

Demands traceability from the decision back to the source document and the specific embedding used
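
An escalation rule of the kind described can be expressed as a small, testable function. The thresholds below simply mirror the figures quoted above and should be confirmed against the regulator's current text; the function name and the "deny" label are illustrative assumptions.

```python
def needs_human_review(decision: str, amount_gbp: float, confidence: float,
                       amount_threshold: float = 50_000,
                       min_confidence: float = 0.90) -> bool:
    """Escalate high-value denials whose model confidence is below the threshold."""
    return (
        decision == "deny"
        and amount_gbp > amount_threshold
        and confidence < min_confidence
    )

cases = [
    ("deny", 75_000, 0.82),     # large denial, low confidence
    ("deny", 75_000, 0.95),     # large denial, high confidence
    ("deny", 20_000, 0.60),     # small denial
    ("approve", 75_000, 0.60),  # approval
]
for decision, amount, confidence in cases:
    print(decision, amount, confidence, needs_human_review(decision, amount, confidence))
```

Keeping the rule in one function with explicit thresholds makes it straightforward to version, test, and show to an auditor. Many teams would likely choose to escalate more broadly than this minimum.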

Looking Ahead: 2026-2030

The document processing landscape is evolving rapidly. Several trends will shape how banks handle documents in the next 3-5 years.

Multimodal Understanding

Currently, systems convert documents to text (losing layout, images, formatting) and then process text. By 2026-2027, models will process images directly alongside text. A loan application with charts, tables, and signatures will be understood as a multimodal object, not a linearized sequence. This improves accuracy but creates new compliance questions: "When did the model use the chart vs. the text?" Audit trails for multimodal reasoning are still immature.

Research in 2024 on multimodal models (OpenAI's GPT-4V, Anthropic's vision-capable Claude 3 models) shows they can extract information from documents 15-25% more accurately than text-only approaches. But neither vendor has yet published guidance on auditability for regulated use.

Longer Context Windows

Model context windows are growing rapidly (128K tokens was common by late 2024, some models already advertise 1M+, and that scale is likely to be widespread by 2026). This means entire loan applications, regulatory filings, and email threads can be processed without chunking. The benefit: no information lost at chunk boundaries. The risk: models might be overwhelmed by irrelevant detail or distracted by noise. Regulators will need to understand how models prioritize information in long contexts.

Specialized Domain Models

Generic models (GPT-4, Claude) work across domains. But banks are increasingly training or fine-tuning models specifically on financial documents. These models understand bank-specific jargon, regulatory references, and document formats better. By 2026, the banking industry will have moved from "use a general model" to "use your domain-specific model". This improves accuracy but increases vendor lock-in risk.

Recent announcements (2024) from major banks show movement toward this: JPMorgan's LLM fine-tuned on trading documents, HSBC's compliance-specific models, Barclays' document processing framework.

Real-Time Document Processing

Current systems batch-process documents (ingest a pile of loan applications, extract data, make decisions). By 2026-2027, we'll see real-time streaming: documents arrive and are processed immediately with results flowing to decision systems in near-real-time. This requires rethinking how we validate and explain systems operating at higher velocity.

HIVE Summary

Key takeaways:

Machines don't "read" documents—they tokenize text into small chunks, convert them to numerical embeddings, and process sequences using attention mechanisms, a fundamentally different process than human comprehension

Tokenization choices, chunking strategies, and embedding models all affect what information the system can access and how it interprets it; these are design decisions, not neutral technical details, and they must be auditable

Document processing creates specific compliance risks: OCR errors propagate through decisions, embeddings are model-dependent and can drift, and hallucination (generating information not in retrieved chunks) is a persistent threat in RAG systems

Recent regulatory guidance (Fed, EBA, FCA 2024) requires immutable document versioning, chunk-level audit trails, embedding version control, and quarterly drift testing

Start here:

If you're building a RAG system for compliance: Document your chunking strategy (size, overlap, semantic boundaries), log every chunk retrieved with similarity scores, and test hallucination detection before production

If you're using OCR: Validate OCR output on a sample of at least 100 documents, document error rates by document type, and flag decisions that depend on low-confidence OCR extractions

If you're choosing an embedding model: Verify the model was trained on financial/banking text (published 2023 or later), test it on 50+ documents from your domain, and plan quarterly revalidation to catch drift

Looking ahead (2026-2030):

Multimodal models will process text + images + layout simultaneously, improving accuracy but creating new challenges for explainability and audit trails of how information was integrated

Context window sizes will grow to 1M+ tokens, eliminating chunking but introducing new risks of models being overwhelmed by irrelevant information or distracted by noise

Banks will shift from generic models (GPT-4, Claude) to specialized domain-specific models trained on financial documents, improving accuracy but increasing vendor lock-in and the need for in-house model governance

Open questions:

How do we audit multimodal reasoning? When a model considers text, a table, and a signature together, how do we explain which modality influenced the decision?

What happens to decision reproducibility when models have 1M token context windows? With that much information available, how do we ensure consistent behavior?

As embedding models improve rapidly, how frequently should we re-embed production corpora? Monthly? Quarterly? And what's the cost of migration?

Jargon Buster

Token: A small unit of text (roughly 4 characters of English on average, but it can be a full word or a subword like "ed" or "ing"). Models process tokens, not characters or words. A 10-page document might be 8,000-15,000 tokens depending on the tokenizer. Why it matters in BFSI: Token count directly affects processing cost (cloud APIs charge per token) and model capability (context windows have token limits). Choosing an efficient tokenizer can reduce costs by 20-30%.

Embedding: A vector of numbers (typically a few hundred to a few thousand dimensions) representing the meaning of a token, phrase, or document in mathematical space. Think of it as a coordinate in semantic space where similar meanings are close together. Why it matters in BFSI: Different embedding models create different coordinate systems. Upgrading an embedding model mid-deployment changes all similarity rankings, which can unexpectedly change which documents are retrieved for queries.

Attention Mechanism: A technique that allows a model to focus on the most relevant parts of a sequence when processing each token. For the sentence "The customer with $5M in assets defaulted", attention helps the model recognize that "defaulted" is most relevant to "customer" and "$5M". Why it matters in BFSI: Attention enables understanding of long documents with relationships between distant tokens. But it also means the model's focus can be influenced by training data patterns.

Context Window: The maximum number of tokens a model can process at once. GPT-4 Turbo has a 128K-token context window; the original GPT-4 offered 8K and 32K variants, and older models had 2K or 4K. Why it matters in BFSI: If a document exceeds the context window, it must be chunked. The choice of how to chunk affects what information the model has access to. A 50-page regulatory filing might be split into 10 chunks, and the model only sees each chunk independently.

Tokenizer: The algorithm that splits text into tokens. Different tokenizers produce different token sequences from the same text. Why it matters in BFSI: A customer ID "C-12345" might become ["C", "-", "1", "2", "3", "4", "5"] in one tokenizer (7 tokens) or ["C-12345"] in another (1 token). The choice affects cost, speed, and model understanding of important identifiers.

Hallucination: When a language model generates information that wasn't in the source documents or contradicts them. Example: a model retrieves chunks about 2022 income and generates a statement about "current income" for 2024. Why it matters in BFSI: Hallucinations in KYC documents or credit decisions can lead to incorrect outcomes. Regulators will hold you accountable for generated answers that don't match source documents. Detection and prevention are critical.

RAG (Retrieval-Augmented Generation): A system that retrieves relevant chunks from documents and feeds them into a language model to generate answers grounded in those chunks. Why it matters in BFSI: RAG is often favored over fine-tuning for regulated environments because answers are tied to specific retrieved chunks, making them easier to audit. Reproducibility still depends on pinning the model, embedding, and chunking versions.

OCR (Optical Character Recognition): Technology that converts scanned images of documents into text. Works well on printed text, struggles with handwriting and poor scans. Why it matters in BFSI: OCR errors (misreading "5" as "S" or "0" as "O") propagate through decision systems. A customer's income might be misread, leading to wrong credit decisions. OCR quality must be validated and errors must be logged.

Fun Facts

On Token Counting: A common surprise in production is that the number of tokens varies widely by tokenizer and content type. The same loan application might be 8,500 tokens under GPT tokenization but only 6,200 tokens in Claude's tokenizer. Because a tokenizer is tied to its model, changing tokenizers in practice means changing models. One bank discovered they were spending $40K/month on token processing, much of it avoidable. Switching to a model whose tokenizer suited their domain saved $12K/month (30%) with no loss of accuracy. Tokenizer choice is a legitimate optimization lever that most teams overlook.
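
The arithmetic behind this kind of saving is simple enough to script. In the sketch below, the document volume and the per-1K-token price are placeholder assumptions; only the 8,500 and 6,200 token counts come from the example above.

```python
def monthly_token_cost(docs_per_month: int, tokens_per_doc: int,
                       price_per_1k_tokens: float) -> float:
    """Estimated monthly spend for processing documents at a per-1K-token price."""
    return docs_per_month * tokens_per_doc / 1000 * price_per_1k_tokens

def percent_saved(baseline_tokens: int, alternative_tokens: int) -> float:
    """Percentage reduction in tokens (and therefore cost at a fixed price)."""
    return 100 * (baseline_tokens - alternative_tokens) / baseline_tokens

DOCS_PER_MONTH = 10_000      # placeholder volume
PRICE_PER_1K_TOKENS = 0.01   # placeholder price, not a real vendor rate

for label, tokens in [("tokenizer A", 8_500), ("tokenizer B", 6_200)]:
    cost = monthly_token_cost(DOCS_PER_MONTH, tokens, PRICE_PER_1K_TOKENS)
    print(f"{label}: {tokens} tokens/doc, about ${cost:,.0f}/month")

print(f"token reduction: {percent_saved(8_500, 6_200):.1f}%")
```

This comparison assumes both models charge the same price per token. In practice prices differ between vendors, so the token count and the rate need to be compared together.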

On Embedding Drift: A European bank upgraded their embedding model in Q2 2024, expecting better performance on financial documents. What they didn't expect: the new embeddings ranked documents so differently that their existing retrieval thresholds broke. Queries that previously returned 3-4 relevant results now returned 20+, flooding analysts with noise. The audit trail showed: "Everything broke on 2024-06-15". It took three weeks to recalibrate thresholds. The lesson: embedding upgrades aren't transparent. You need a validation period where old and new embeddings are compared on live queries before switching over.
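
A validation period like this can be approximated by running the same queries through both embedding versions and measuring how much the retrieved sets overlap. The sketch below uses Jaccard overlap; the 0.5 threshold, the query labels, and the chunk IDs are illustrative assumptions.

```python
def jaccard(a, b) -> float:
    """Overlap between two result sets: 1.0 identical, 0.0 disjoint."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def drift_report(old_results: dict, new_results: dict, min_overlap: float = 0.5) -> dict:
    """Queries whose retrieved chunks changed substantially between embedding versions."""
    flagged = {}
    for query, old_ids in old_results.items():
        overlap = jaccard(old_ids, new_results.get(query, []))
        if overlap < min_overlap:
            flagged[query] = round(overlap, 2)
    return flagged

# Hypothetical top results per query under the old and new embedding models.
old_results = {"income query": ["a", "b", "c"], "collateral query": ["d", "e"]}
new_results = {"income query": ["a", "b", "c"], "collateral query": ["x", "y", "z", "d"]}

print(drift_report(old_results, new_results))
```

Running a report like this on live queries before the cutover would have surfaced the threshold problem as a list of flagged queries instead of a production incident.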

For Further Reading

Attention is All You Need (Vaswani et al., 2017) | https://arxiv.org/abs/1706.03762 | The foundational paper explaining transformer attention mechanisms. Dense but essential for understanding how modern models process sequences.

Evaluating Large Language Models Trained on Code (Chen et al., 2021) | https://arxiv.org/abs/2107.03374 | While focused on code, this paper's evaluation methodology for understanding what models "know" applies to financial documents too.

Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks (Lewis et al., 2020) | https://arxiv.org/abs/2005.11401 | The foundational RAG paper explaining how to build retrieval systems grounded in documents. Core reference for compliance-friendly AI.

What Does BERT Look At? An Analysis of BERT's Attention (Clark et al., 2019) | https://arxiv.org/abs/1906.04341 | Explainability-focused analysis of attention patterns. Shows how to interpret what a model is paying attention to, which is essential for audit and compliance.

Siren's Song in the AI Ocean: A Survey on Hallucination in Large Language Models (Zhang et al., 2023) | https://arxiv.org/abs/2309.01219 | Comprehensive survey of hallucination causes and detection methods. Critical reading for anyone deploying RAG in regulated environments.

Next up: Chain-of-Custody and Traceable Decisions — How to design systems where every decision can be reproduced, audited, and explained with complete provenance from input data through final action.

This is part of our ongoing work understanding AI deployment in financial systems. If you're implementing document processing systems in regulated environments, share your patterns for handling OCR errors, embedding upgrades, or hallucination detection.

— The AITechHive Team