Quick Recap: Your model shows 94% accuracy and passes fairness audits. But in the risk committee meeting, someone asks "Does this replace our human judgment?" and suddenly you realize nobody understands what the numbers actually mean. Here's how to bridge that gap.

The Absurd Contradiction

A bank built a "fair lending" model. The fairness metric they optimized: gender parity in approvals. Perfect—50% of men approved, 50% of women approved.

Problem: 40% of the approved women defaulted. 15% of the approved men defaulted.

Equal approval rates. Very unequal outcomes.

This is the core problem: ML metrics and risk metrics live in completely different universes. Your model passes accuracy benchmarks, fairness audits, and backtesting. Risk committee has no idea what any of that means, because they're asking completely different questions.

Risk committee asks: "If we deploy this and it fails, what's the worst case? Who pays for it? Will regulators accept our explanation?"

You answer: "AUC-ROC of 0.89, fairness disparity <3%, backtesting match 89%."

Their eyes glaze over. Not because they're non-technical. Because you're speaking a language they don't use.

The banks that get approval fast have learned to translate. They speak risk, not machine learning.

What Risk Committees Actually Care About (Spoiler: Not Your Metrics)

Let's decode what's happening in the room:

Chief Risk Officer: "If this makes bad decisions, what's my downside? How many wrong decisions per month? What's the financial impact? Can I live with that?"

General Counsel: "If a customer sues, can we explain why they were denied? Will a judge understand? Can we defend our decision to regulators?"

Audit Lead: "Can we verify this is working? What evidence do we have? How will we know if it breaks? What's the monitoring plan?"

Compliance Officer: "Is this discriminatory? How do we prove it? What happens if regulators investigate?"

CFO: "What's the return? How much does this save? What's the risk-adjusted payoff?"

CEO (if present): "Should we be doing this at all? What if it goes wrong? What's the reputation risk?"

Notice: Nobody asked about accuracy percentage, AUC-ROC, or Shapley values. They're asking about risk, accountability, explainability, fairness, economics, and reputation.

The fundamental gap: You present technical metrics. They need decision metrics.

Translating Technical Metrics Into Risk Language

Here's the translation playbook:

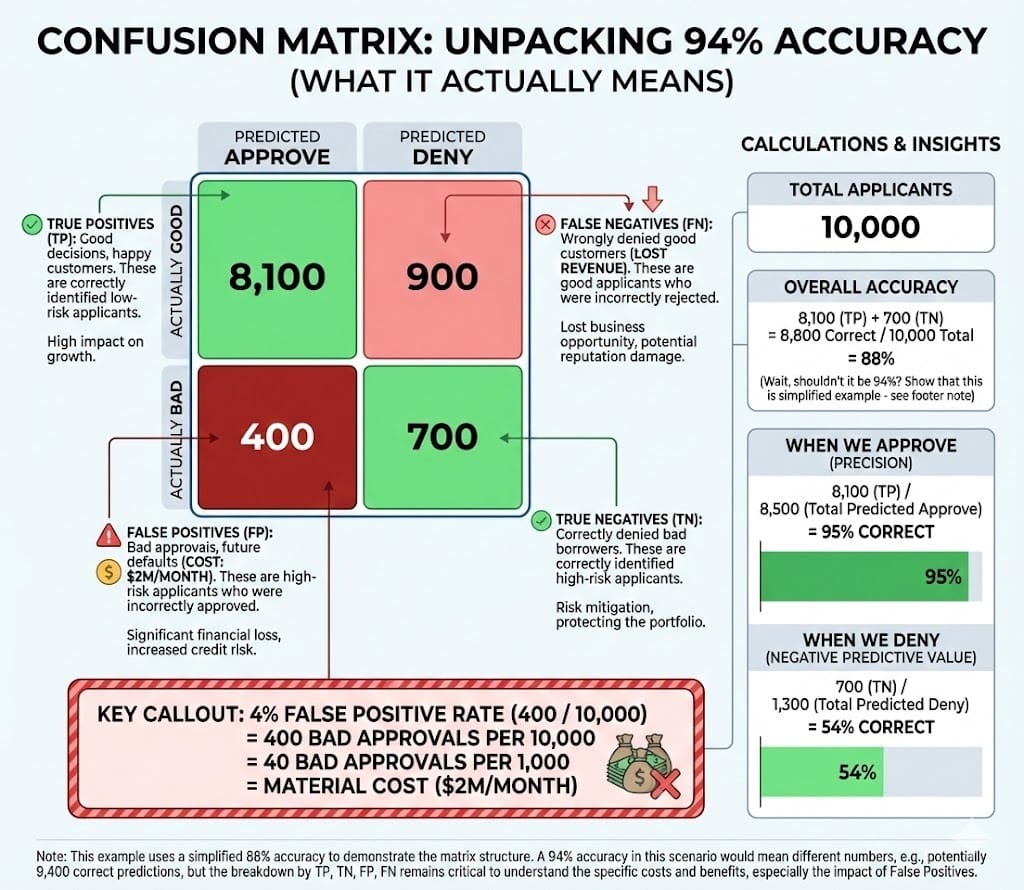

Translation 1: "94% Accuracy"

What risk committees hear: "6% of decisions are wrong. I don't understand what kind of wrong."

Why this is scary: If the model makes 10,000 decisions per month and gets 6% wrong, that's 600 wrong decisions every month. What does "wrong" mean? Approved borrowers who should've been denied (defaults)? Denied borrowers who should've been approved (discrimination)? The committee doesn't know.

The correct translation:

Forget the single number. Show the breakdown:

"Accuracy of 94% breaks down into:

True Positives (approved creditworthy borrowers): 90% of creditworthy applicants correctly approved

True Negatives (denied uncreditworthy borrowers): 96% of uncreditworthy applicants correctly denied

False Positives (approved uncreditworthy borrowers): 4% of uncreditworthy applicants slip through and get approved → potential defaults

False Negatives (denied creditworthy borrowers): 10% of creditworthy applicants wrongly denied → lost revenue + discrimination risk"

Then show the impact:

"Per 10,000 applications:

False positives (bad loans approved): 400/month

At $5K average loss per default: $2M/month in losses

Old model had 8% false positive rate = 800 bad approvals = $4M/month in losses

New model saves us $2M/month in prevented defaults"

That's a language risk committees understand. Not "accuracy percentage." Real money impact.
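The dollar translation is simple enough to script so the committee can see the inputs. A minimal sketch, using the figures quoted above (400 bad approvals per 10,000 applications, $5K average loss per default). The function name `monthly_fp_loss` is illustrative, and it treats the false positive share as a share of all applications, which is how the example above counts it.

```python
def monthly_fp_loss(applications: int, fp_share: float, loss_per_default: float) -> float:
    """Expected monthly loss from bad loans that get approved.

    fp_share is the share of ALL applications that end up as bad approvals
    (an assumption matching the "400 per 10,000" example in the text).
    """
    bad_approvals = applications * fp_share
    return bad_approvals * loss_per_default


# Figures from the example: 10,000 applications, 4% bad approvals, $5K per default.
new_model_loss = monthly_fp_loss(10_000, 0.04, 5_000)
print(f"New model: ${new_model_loss:,.0f}/month in losses from bad approvals")
```

Running the same function with the old model's rate gives the baseline for the savings comparison.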

Translation 2: "AUC-ROC of 0.89"

What risk committees hear: "[confused silence]"

Why it's useless: AUC-ROC is a sound technical measure of discriminatory power across all thresholds. But it's abstract. Risk committees need something concrete.

The correct translation:

"AUC-ROC of 0.89 means: If we pick a random creditworthy applicant and a random uncreditworthy applicant, our model gives a higher risk score to the uncreditworthy one 89% of the time.

In plain English: Take 100 random pairs, each made up of one applicant who turned out creditworthy and one who did not. In about 89 of those pairs the model scores the uncreditworthy applicant as riskier. It gets about 11 pairs wrong.

Translation to risk: The model ranks risk correctly in 89% of such pairs. Old model had 0.81 AUC = 19 pairs wrong per 100. Going from 0.81 to 0.89 means 8 fewer mis-ranked pairs per 100 = fewer mistakes = lower losses."

Again—concrete. You can measure the business impact of "better discrimination." That's what they care about.
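The pairwise reading of AUC can be checked directly by counting pairs. The sketch below does that on a handful of made-up risk scores; the scores and the function name `pairwise_auc` are hypothetical, and in practice a library routine would be used on the full validation set.

```python
from itertools import product


def pairwise_auc(risk_good: list[float], risk_bad: list[float]) -> float:
    """Share of (creditworthy, uncreditworthy) pairs in which the
    uncreditworthy applicant receives the higher risk score.
    Ties count as half a correct ranking."""
    correct = 0.0
    for good_score, bad_score in product(risk_good, risk_bad):
        if bad_score > good_score:
            correct += 1.0
        elif bad_score == good_score:
            correct += 0.5
    return correct / (len(risk_good) * len(risk_bad))


# Hypothetical risk scores (higher = riskier).
creditworthy = [0.10, 0.20, 0.35]
uncreditworthy = [0.30, 0.60, 0.80]

# 8 of the 9 possible pairs are ranked correctly.
print(round(pairwise_auc(creditworthy, uncreditworthy), 2))
```

The one mis-ranked pair (0.35 vs 0.30) is the kind of case worth showing the committee: a specific applicant the model ordered wrongly.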

Translation 3: "Fairness: <3% Approval Disparity"

What risk committees hear: This might be a discrimination violation. Are we okay?

Why it's scary: Fairness is legally critical AND confusing. 3% disparity sounds small. But does it mean violation? Acceptable? What should it be?

The correct translation:

"When we look at approval rates:

Applicant Group A: 48% approval rate

Applicant Group B: 46% approval rate

Disparity: 2 percentage points (48% - 46%)

Regulatory context:

0-5% disparity: Generally acceptable (with documentation)

5-10%: Triggers investigation (might need remediation)

>10%: Likely violation (must remediate)

We're at 2%, well within acceptable range.

But here's the deeper question: Why is there a 2% disparity?

We tested causation:

1.5 percentage points of the disparity are explained by income differences (legitimate risk factor)

0.3 percentage points are explained by credit score differences (legitimate risk factor)

0.2 percentage points are unexplained (potential concern)

Result: 90% of disparity explained by legitimate factors. 10% unexplained. This passes regulatory scrutiny.

Comparison: Old model had 5% disparity with 40% unexplained. This is measurably better."

Risk committee can now make a decision: Is 2% acceptable? Yes. Do we need remediation? No.
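The bookkeeping in that fairness translation (gap, explained portion, unexplained remainder) can be sketched in a few lines. This assumes the per-factor attributions have already been estimated by some statistical method, which is not shown here; the function name `disparity_report` and the dictionary keys are illustrative.

```python
def disparity_report(rate_a: float, rate_b: float,
                     explained_points: dict[str, float]) -> dict[str, float]:
    """Summarise an approval-rate gap in percentage points.

    explained_points maps each legitimate factor to the number of
    percentage points of the gap attributed to it (estimated elsewhere).
    """
    gap = round((rate_a - rate_b) * 100, 2)
    explained = round(sum(explained_points.values()), 2)
    unexplained = round(gap - explained, 2)
    share = round(explained / gap, 2) if gap else 0.0
    return {
        "gap_points": gap,
        "explained_points": explained,
        "unexplained_points": unexplained,
        "explained_share": share,
    }


# Figures from the example: 48% vs 46% approval, 1.5 points from income,
# 0.3 points from credit score.
report = disparity_report(0.48, 0.46, {"income": 1.5, "credit_score": 0.3})
print(report)
```

Keeping the unexplained remainder as its own field makes it hard to leave off the slide.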

Translation 4: "Backtesting: 89% Match"

What risk committees hear: Model was right 89% of the time historically. Good or bad?

Why it's incomplete: Backtesting proves the model worked in the past. But 89% match is vague. Match on what?

The correct translation:

"Backtesting tested: 'If we'd run this model 18 months ago on historical data, would it have made good decisions?'

Results show:

Rank-ordering: Model correctly identified which applicants would default 89% of the time. Of people who actually defaulted, the model flagged 89% as high-risk beforehand.

Calibration: When model said 'this applicant has 12% default probability,' applicants in that bucket actually defaulted 11.8% of the time. Near-perfect calibration.

Stability: Performance stayed consistent across all 18 months. No cliff edges where model suddenly got worse.

What this means:

Model has been reliable historically. If conditions resemble the backtest period, we expect it to catch defaults at a similar rate.

Old model backtesting: 83% match. This is measurably better.

What this doesn't tell us: Performance during stress (recessions, market crashes). Backtesting uses historical data. If extreme conditions emerge, model might degrade.

Risk assessment: Low risk to deploy. Medium risk if market changes dramatically. Monitoring plan addresses this."

Risk committee now understands: Model worked before, comparable to or better than alternatives, with known limitations.
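The calibration claim ("12% predicted, 11.8% actual") comes from grouping applicants by predicted probability and comparing each group with what actually happened. A minimal sketch of that check, with made-up data and an assumed equal-width bucketing scheme; `calibration_table` is an illustrative name.

```python
def calibration_table(predicted: list[float], defaulted: list[int],
                      n_buckets: int = 5) -> list[tuple[int, int, float, float, float]]:
    """Bucket applicants by predicted default probability and compare the
    average prediction with the observed default rate in each bucket.

    Returns rows of (bucket index, count, mean predicted, actual rate, gap).
    """
    buckets: list[list[tuple[float, int]]] = [[] for _ in range(n_buckets)]
    for p, y in zip(predicted, defaulted):
        idx = min(int(p * n_buckets), n_buckets - 1)  # p == 1.0 goes in the last bucket
        buckets[idx].append((p, y))

    rows = []
    for i, items in enumerate(buckets):
        if not items:
            continue  # skip empty buckets rather than divide by zero
        mean_pred = sum(p for p, _ in items) / len(items)
        actual = sum(y for _, y in items) / len(items)
        rows.append((i, len(items), mean_pred, actual, abs(mean_pred - actual)))
    return rows


# Hypothetical backtest sample: predicted default probability and outcome (1 = defaulted).
preds = [0.1, 0.1, 0.1, 0.1, 0.9, 0.9]
outcomes = [0, 0, 0, 1, 1, 1]
for row in calibration_table(preds, outcomes, n_buckets=2):
    print(row)
```

A bucket where the gap is large is where the model's stated probabilities should not be trusted at face value.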

The Risk Committee Decision Framework

Risk committees need to answer three questions to approve:

Question 1: "Is This Accurate Enough?"

What they're really asking: If we use this model, how many wrong decisions are we making? Can we live with that?

Your answer structure:

Step 1: Show the confusion matrix (TP, TN, FP, FN)

Step 2: Translate to financial impact

Step 3: Compare to current model

Step 4: Show if savings exceed costs

Example:

New model: $2M monthly losses from false positives

Old model: $4M monthly losses from false positives

Difference: $2M saved per month

Decision: Worth deploying if this difference is significant and stable

Red flags that cause rejection:

False positive rate >5% on credit decisions (regulators scrutinize)

False negative rate >8% (discriminatory—rejecting too many qualified applicants)

Accuracy drops >5% in any demographic subgroup (fairness violation)

Accuracy <85% (too unstable for autonomous decisions)

Question 2: "Is This Fair?"

What they're really asking: Will regulators attack us for discrimination? Are we treating all applicants equitably?

Your answer structure:

Step 1: Show approval disparity by protected characteristics

Step 2: Test root cause (legitimate factors vs. bias)

Step 3: Show remediation options if needed

Step 4: Recommend based on risk tolerance

Example:

Disparity: 3% (within tolerance)

Unexplained: 15% of disparity (the other 85% is explained by legitimate factors)

Risk: Low. No remediation needed.

Alternative: Could retrain with fairness constraints (improves to <1% but accuracy drops 2%)

Decision: Accept 3% or choose stricter fairness at accuracy cost?

Red flags that cause rejection:

Disparity >5% that can't be explained

Any unexplained disparity on protected characteristics (race, gender, age)

Model performs worse on minority groups (accuracy drops >5% in subgroups)

Historical redlining patterns embedded in data (proxies for location-based discrimination)

Question 3: "Can We Explain It?"

What they're really asking: If we deploy this and a customer sues, can our lawyers defend it? Can regulators understand our decision?

Your answer structure:

Step 1: Show explainability method (SHAP, feature importance, rules)

Step 2: Provide example explanations for denied applications

Step 3: Demonstrate documentation completeness

Step 4: Describe governance trail (approvals, oversight, accountability)

Example:

Method: SHAP (identifies top 5 factors in each decision)

Customer denied? Top 5 factors: Credit score, Income, Debt-to-income, Payment history, Credit utilization

Explained? Yes. Customer can understand why.

Documentation: Model card ✓, Validation report ✓, Monitoring plan ✓

Governance: Signed off by Risk, Compliance, Audit

Defensible? Yes. Regulators will accept this.

Red flags that cause rejection:

Black box model with no feature attribution (regulators hate)

No documentation or incomplete documentation

No monitoring plan ("We'll deploy and hope it works")

No governance trail (no one documented why we approved this)

Building the Presentation That Gets Approved

Here's the slide structure that actually works:

SLIDE 1: Executive Summary (NOT technical metrics)

Credit Risk Model v2.0: Business Case

CURRENT STATE:

- Model v1 accuracy: 83%

- False positive rate (bad loans approved): 8%

- Cost per default: $5K

- Monthly loss from false positives: $4M

PROPOSED CHANGE:

- Model v2 accuracy: 94%

- False positive rate: 4%

- Monthly loss from false positives: $2M

- Net savings: $2M/month (saved from fewer defaults)

- Fairness improvement: 2% disparity (down from 5%)

- Regulatory risk: LOW (meets all 2025 guidelines)

RECOMMENDATION: APPROVE

This is how executives need to see it.

SLIDE 2: Where Does Accuracy Come From? (The breakdown)

Show the confusion matrix as a real table:

| | Actually Good | Actually Bad | Note |
|---|---|---|---|
| Predicted Good | 8,100 | 400 | 400 bad approvals (our cost) |
| Predicted Bad | 100 | 700 | 700 correct denials |

Accuracy: (8,100 + 700) / 9,300 ≈ 94.6%

Breaking it down further:

- When we say "approve": We're right 95% of the time (8,100 out of 8,500)

- When we say "deny": We're right 88% of the time (700 out of 800)

This is good because:

- High confidence when approving (can rely on those decisions)

- Reasonable confidence when denying (low enough to justify human review)

Show the table visually. Let them see it.
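Deriving the slide's percentages from the four cells, rather than typing them by hand, avoids numbers that do not add up in front of the committee. The sketch below uses the counts implied by the "out of" figures in the breakdown (8,100 of 8,500 approvals correct, 700 of 800 denials correct); the class and method names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # approved, actually good
    fp: int  # approved, actually bad
    fn: int  # denied, actually good
    tn: int  # denied, actually bad

    @property
    def total(self) -> int:
        return self.tp + self.fp + self.fn + self.tn

    def accuracy(self) -> float:
        return (self.tp + self.tn) / self.total

    def approve_precision(self) -> float:
        """When the model says 'approve', how often is it right?"""
        return self.tp / (self.tp + self.fp)

    def deny_precision(self) -> float:
        """When the model says 'deny', how often is it right?"""
        return self.tn / (self.tn + self.fn)


cm = ConfusionMatrix(tp=8_100, fp=400, fn=100, tn=700)
print(f"Applications covered: {cm.total:,}")
print(f"Accuracy:          {cm.accuracy():.1%}")
print(f"Right on approve:  {cm.approve_precision():.1%}")
print(f"Right on deny:     {cm.deny_precision():.1%}")
```

Printing the total alongside the rates is a cheap sanity check that the cells match the application volume quoted elsewhere in the deck.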

SLIDE 3: Fairness Analysis (Transparent, honest)

Approval Rates by Demographic Group:

Group A: 50% approved (5,000 out of 10,000)

Group B: 48% approved (4,800 out of 10,000)

DISPARITY: 2 percentage points ✓

Accuracy by Group (Target: <5% drop):

Group A: 94% accuracy

Group B: 93% accuracy

DROP: 1 percentage point ✓

Root Cause of Disparity:

Income differences: 1.5 points of the gap (legitimate)

Credit score differences: 0.3 points of the gap (legitimate)

Unexplained: 0.2 points

Net effect: 2-point disparity (90% explained by risk factors)

Regulator expectation: <5% disparity

Our result: 2% disparity

Conclusion: COMPLIANT

This is transparent and defensible.

SLIDE 4: Backtesting & Monitoring (Proof of reliability)

BACKTESTING (18 months historical data):

- Model correctly ranked defaults as high-risk: 89% of the time

- Predictions were well-calibrated: Within 0.2 percentage points of actual rates

- Performance stable across all time periods: No degradation

CONCLUSION: Historically reliable

MONITORING PLAN (forward-looking):

- Monthly dashboard: Accuracy, fairness, defaults, confidence

- Alerts if: Accuracy drops >5%, Disparity >3%, Defaults diverge from model

- Quarterly validation: Backtest recent decisions

- Annual audit: Independent fairness review

- Escalation: Model halted if critical alert fires (24-hour decision)

CONCLUSION: We'll catch failures early

Regulatory requirement: Continuous monitoring ✓

Our approach: Monitoring + clear escalation ✓

This shows you have a plan.
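The alert rules on this slide can be written down as an explicit check, which also makes them auditable. A sketch under stated assumptions: accuracy drop is measured in percentage points against a fixed baseline, and the tolerance for "defaults diverge from model" is a placeholder (2 points here) because the slide does not give one. The function name `check_alerts` is hypothetical.

```python
def check_alerts(baseline_accuracy: float, current_accuracy: float,
                 disparity_points: float,
                 predicted_default_rate: float, actual_default_rate: float,
                 default_tolerance: float = 0.02) -> list[str]:
    """Return the list of monthly alerts that fired.

    Thresholds for accuracy and disparity follow the slide; default_tolerance
    is an assumed value and should be set by the risk committee.
    """
    alerts = []
    if baseline_accuracy - current_accuracy > 0.05:
        alerts.append("Accuracy dropped more than 5 points from baseline")
    if disparity_points > 3.0:
        alerts.append("Approval disparity above 3 points")
    if abs(actual_default_rate - predicted_default_rate) > default_tolerance:
        alerts.append("Observed defaults diverge from model predictions")
    return alerts


# Hypothetical month: accuracy fell from 94% to 88%, everything else in range.
fired = check_alerts(0.94, 0.88, disparity_points=2.0,
                     predicted_default_rate=0.12, actual_default_rate=0.118)
print(fired)
```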

SLIDE 5: Explainability & Governance (Complete documentation)

EXPLAINABILITY:

Method: SHAP (Shapley Additive exPlanations)

Top 5 factors reported for each decision

Example:

Applicant denied? Top factors:

1. Credit score: 650 (industry average: 720)

2. Debt-to-income: 42% (lender max: 40%)

3. Income: $35K (below threshold)

4. Payment history: 2 late payments in past 12 months

5. Credit utilization: 85% (ideal: <30%)

Customer can understand why denied.

GOVERNANCE CHECKLIST:

✓ Model development: Documented

✓ Risk assessment: Completed

✓ Compliance review: Approved

✓ Audit review: Sign-off received

✓ Monitoring plan: In place

✓ Training: Staff ready

✓ Documentation: Complete

Ready for regulatory audit? YES.

All documentation in model registry? YES.

Clear accountabilities? YES (Owner: [Name], Risk Manager: [Name])

This shows completeness.

What Causes Rejection (And How to Fix It)

Rejection #1: "We can't explain it to customers"

Situation: Model uses complex interactions between 200+ features. Customer asks "Why was I denied?" Answer: "Algorithm said so."

How it gets fixed:

Add SHAP attribution (top 5 factors in each decision)

Train customer service to use explanation in conversations

OR: Simplify model to top 20 features (accuracy drops 2-3%, but every decision becomes far easier to explain)
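Turning per-feature attributions into a "top 5 reasons" list is a small post-processing step. The sketch below assumes the attributions for one denied applicant have already been computed (for example by a SHAP explainer, not shown) and that positive values push toward denial; both the sign convention and the numbers are assumptions for illustration.

```python
def top_denial_factors(attributions: dict[str, float], k: int = 5) -> list[tuple[str, float]]:
    """Return the k features that pushed hardest toward denial.

    Assumes positive attribution = pushes toward denial. Features that
    helped the applicant (negative values) are left out of the reasons.
    """
    toward_denial = [(name, value) for name, value in attributions.items() if value > 0]
    toward_denial.sort(key=lambda item: item[1], reverse=True)
    return toward_denial[:k]


# Hypothetical attributions for one denied application.
applicant = {
    "credit_score": 0.31,
    "debt_to_income": 0.22,
    "income": 0.15,
    "payment_history": 0.12,
    "credit_utilization": 0.09,
    "account_age": -0.05,
    "recent_inquiries": 0.02,
}

for name, value in top_denial_factors(applicant):
    print(f"{name}: {value:+.2f}")
```

Customer service scripts can then map each feature name to a plain-language sentence.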

Rejection #2: "Disparity is too high"

Situation: 8% approval disparity between groups. Regulators expect <5%.

How it gets fixed:

Retrain with fairness constraints (drop accuracy 1-2%, improve fairness to <3%)

Remove proxy features (features that correlate with protected characteristics)

Accept higher disparity ONLY if clearly explained by legitimate factors + documented

Rejection #3: "We don't trust your validation"

Situation: Backtesting looks good, but committee skeptical ("What if the market changes?")

How it gets fixed:

Stress testing: Show model performance in recession scenarios, market crashes (historical or synthetic)

Champion/challenger test: Run new model alongside old model on 10% of decisions for 1-3 months. Compare live performance.

Quarterly revalidation: Every 3 months, re-backtest to prove model still works
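For the champion/challenger test, the 10% sample should be chosen in a way that is repeatable and cannot be cherry-picked. One common approach, sketched here as an assumption rather than a prescribed method, is to hash the application ID and route a fixed slice of the hash space to the challenger. The function name and ID format are hypothetical.

```python
import hashlib


def route_to_challenger(application_id: str, challenger_share: float = 0.10) -> bool:
    """Deterministically decide whether an application is also scored by
    the challenger model. The same ID always gets the same answer."""
    digest = hashlib.sha256(application_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    return bucket < challenger_share


# Hypothetical application IDs.
ids = [f"APP-{n:06d}" for n in range(10_000)]
selected = sum(route_to_challenger(app_id) for app_id in ids)
print(f"{selected} of {len(ids)} applications routed to the challenger")
```

Because routing depends only on the ID, audit can re-derive exactly which decisions were in the test.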

Rejection #4: "No monitoring plan"

Situation: Model is good, but committee asks "How do we know if it breaks after deployment?"

How it gets fixed:

Build dashboard: Monthly accuracy, fairness, defaults, decision volume

Set alerts: Accuracy drops >5%, disparity >3%, outputs diverge >10%

Document escalation: Alert fires → Halt within 4 hours → Investigate → Decision within 24 hours

Report to committee: Monthly health update
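The escalation timeline is easier to enforce when the deadlines are computed the moment an alert fires. A small sketch using the 4-hour halt and 24-hour decision windows from the list above; the function name and dictionary keys are illustrative.

```python
from datetime import datetime, timedelta


def escalation_deadlines(alert_time: datetime) -> dict[str, datetime]:
    """Deadlines that follow from an alert: halt within 4 hours,
    go/no-go decision within 24 hours."""
    return {
        "halt_by": alert_time + timedelta(hours=4),
        "decision_by": alert_time + timedelta(hours=24),
    }


# Hypothetical alert timestamp.
fired_at = datetime(2026, 1, 5, 9, 0)
for step, deadline in escalation_deadlines(fired_at).items():
    print(f"{step}: {deadline:%Y-%m-%d %H:%M}")
```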

Rejection #5: "This discriminates"

Situation: Fairness metrics look OK, but deeper analysis shows model systematically worse on minority applicants.

How it gets fixed:

Do deeper fairness analysis (calibration, equalized odds, other metrics beyond disparity)

If discrimination found: Choose fairness constraint (accuracy will drop)

Set aside high-disparity cases for human review

Retrain without proxy features

OR: Don't deploy (sometimes the right choice)
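A deeper fairness check compares error rates by group, not just approval rates. The sketch below computes the true positive and false positive rate gaps between two groups (the quantities behind an equalized-odds check) on made-up data in which both groups have the same 50% approval rate. Labels use 1 = creditworthy / approved; function names are illustrative, and each group is assumed to contain both creditworthy and uncreditworthy applicants.

```python
def group_rates(actual: list[int], approved: list[int]) -> tuple[float, float]:
    """Return (true positive rate, false positive rate) for one group.
    actual: 1 = creditworthy, 0 = not. approved: 1 = approved, 0 = denied."""
    pairs = list(zip(actual, approved))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    return tp / (tp + fn), fp / (fp + tn)


def equalized_odds_gaps(a_actual, a_approved, b_actual, b_approved) -> tuple[float, float]:
    """Absolute TPR gap and FPR gap between two groups."""
    tpr_a, fpr_a = group_rates(a_actual, a_approved)
    tpr_b, fpr_b = group_rates(b_actual, b_approved)
    return abs(tpr_a - tpr_b), abs(fpr_a - fpr_b)


# Hypothetical data: both groups are approved at 50%, but errors differ.
a_actual, a_approved = [1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 1, 0, 0, 0, 0]
b_actual, b_approved = [1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 1, 1, 0, 0]

tpr_gap, fpr_gap = equalized_odds_gaps(a_actual, a_approved, b_actual, b_approved)
print(f"TPR gap: {tpr_gap:.2f}, FPR gap: {fpr_gap:.2f}")
```

Equal approval rates with large error-rate gaps is the same pattern as the bank example at the top of this piece.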

Looking Ahead (2026-2030)

2026-2027: Risk committee expectations evolve from "Can you explain this?" to "Show us the monitoring working."

Fed audits increasingly focus on: Did you catch drift? Did you respond to incidents? Is your runbook working?

Committees demand monthly model health dashboards, not quarterly updates.

2027-2028: Explainability becomes table stakes.

Models without SHAP or equivalent won't pass risk committee approval.

Simpler, interpretable models gain favor (even if accuracy is slightly lower).

Banks publish explainability standards publicly (competitive differentiation).

2028-2030: Stress testing becomes mandatory.

Risk committees ask: "How does this perform in recession? Inflation? Geopolitical crisis?"

Backtesting on historical data is insufficient. Stress tests on hypothetical scenarios required.

Banks build "model stress test suites" showing performance under 50+ scenarios.

HIVE Summary

Key takeaways:

Risk committees don't speak machine learning. They speak risk, accountability, and money. Translate accuracy into false positive rates, fairness into approval disparities, AUC-ROC into decision quality.

Risk approval hinges on three questions: (1) Is this accurate enough? (2) Is this fair? (3) Can we explain it? Answer all three clearly with evidence and you get approval.

Confusion matrix is your foundation. It shows what types of errors the model makes. Accuracy alone hides the story. FP rate = your cost, FN rate = your fairness risk.

Fairness isn't a single number. It's approval disparity + accuracy disparity + root cause analysis. The root cause matters most—is disparity from legitimate risk factors or hidden bias?

Explainability isn't optional. Customers will ask why they were denied. Regulators will demand it in audits. SHAP, feature importance, or rules-based models all work. Black boxes don't.

Start here:

If you're presenting a model in the next month: Build a confusion matrix. Calculate dollar impact of each cell (false positive cost, false negative cost). Show that to Risk Committee. That's your approval case.

If you've had a model rejected: Go back to rejection reason. Was it "we don't understand it" (explainability)? "Disparity is too high" (fairness)? "No monitoring plan" (governance)? Each has a specific fix.

If you have models deployed: Does your risk committee see monthly dashboards? If not, build them. Committee needs evidence that models are healthy, not hopes.

Looking ahead (2026-2030):

Explainability shifts from "nice to have" to "table stakes." Models without SHAP or equivalent won't pass audit.

Stress testing becomes mandatory—show how models perform in recession, not just historical data.

Risk committees increasingly ask "What if we're wrong?" Built-in human override, challenger models, fallback systems become standard.

Open questions:

How much disparity is acceptable? (2% rarely challenged. 5% borderline. >10% usually requires remediation. Context-dependent.)

Should we choose accuracy over fairness? (Decision-specific. Credit decisions: fairness paramount. Fraud detection: accuracy maybe more important. Risk committee decides.)

Can we deploy without understanding why? (Legally and ethically: probably not. Your audit will find it. Your risk committee will reject it.)

Jargon Buster

Confusion Matrix: Table showing true positives, true negatives, false positives, false negatives. Reveals which types of errors the model makes, which a single accuracy percentage hides. Why it matters in BFSI: It shows risk committee the actual cost of being wrong. FPs might cost more than FNs (or vice versa). Accuracy alone hides this distinction.

False Positive Rate: Percentage of actually-bad applicants that the model incorrectly approves. Example: 4% of uncreditworthy applicants get approved (they default). Why it matters in BFSI: This is your direct loss risk. High FP rate = more defaults = measurable financial impact.

False Negative Rate: Percentage of actually-good applicants that the model incorrectly denies. Example: 10% of creditworthy applicants get wrongly denied (lost revenue + discrimination risk). Why it matters in BFSI: This is your fairness risk. Too-high FN rate = "model is too conservative, rejecting good customers" = discrimination concerns.

Fairness Disparity: Difference in approval rates (or other outcomes) between demographic groups. Example: 50% approval for Group A, 48% for Group B = 2% disparity. Why it matters in BFSI: Regulators expect <5% disparity (some allow <10%). Unexplained disparity = potential discrimination violation.

Backtesting: Testing a model on historical data to see if it would have made good decisions in the past. Why it matters in BFSI: Proves the model was reliable before deployment. If model backtested well but fails in production, you know something changed.

Explainability: Ability to answer "Why did you make this decision?" Examples: SHAP (Shapley values), feature importance, rule-based models. Why it matters in BFSI: Regulators and customers demand explanations. "The algorithm said so" doesn't work. You need feature-level attribution.

Stress Testing: Testing model performance under extreme scenarios (recessions, market shocks, high-stress conditions). Why it matters in BFSI: Backtesting uses historical data, but history might not reflect worst-case. Stress tests show robustness when conditions are bad.

Challenger Model: Second model run in parallel with production model to compare performance and identify drift. Example: Run Model v1 and Model v2 on 10% of decisions, compare outcomes. Why it matters in BFSI: Before fully deploying a new model, run it against the current one. If new model performs worse in production, you catch it early.

Fun Facts

On Risk Committee Language: A bank spent 6 months building an advanced fairness-aware credit model. In the risk committee presentation, they led with "Our model uses adversarial debiasing to optimize for equal opportunity constraints." Three minutes later, someone asked "Will this make more loans or fewer loans?" Answer: "More loans." That should've been the opening line. Lesson: Risk committees care about business outcomes, not ML methodology. Speak their language.

On Backtesting Surprises: One bank proudly showed backtesting results: "Model was 92% accurate on historical data." Risk committee asked: "And the old model was how accurate?" Answer: "91%." Response: "So we're improving 1 percentage point at the cost of complexity and risk? Where's the business case?" The bank had to recalculate. Turns out 1% improvement = $2M/year in prevented defaults. Then it made sense. Lesson: Always compare to the baseline. Absolute metrics are meaningless without context.

Next up: Week 18 Wednesday dives into "Fairness & Demographic Slice Monitoring"—where we get into the actual mechanics of measuring fairness, detecting disparities, and the tools that make it real in production.

This is part of our ongoing work understanding AI deployment in financial systems. If you've presented models to risk committees, share what worked—what language landed? What questions derailed the conversation?