Quick Recap: Systems that need human review at every decision point defeat the purpose of automation. Systems with no human review are disasters waiting to happen. The sweet spot is human-in-the-loop: AI handles routine cases (90%), humans handle exceptions (10%), with clear escalation rules that actually work. The challenge isn't designing the happy path—it's designing what happens when the system is confused, confident-but-wrong, or encountering something it's never seen before.

The Confidence Trap

A fintech's loan approval system was working great. 97% of applications were auto-approved by the AI. Humans reviewed the remaining 3%. Everyone was happy.

Then Q3 2025 happened. The gig economy exploded and income patterns changed. The model's confidence stayed high (it kept auto-approving 97% of applications), but its accuracy dropped to 72%. The model was confidently wrong on inputs unlike its training data. It approved a fraudulent applicant. Then another. Then a pattern of high-risk approvals that the human reviewers didn't catch because they'd gotten complacent: the model was "always right," so why double-check?

By the time anyone noticed, $2.3M in fraudulent loans had been approved.

The post-mortem revealed that the system had escalation rules, but they were written assuming the model would signal uncertainty ("I'm 60% confident"). The model never did; it stayed confident. The escalation threshold was "escalate if confidence < 70%," but the model was giving 95% confidence on bad decisions. The escalation rule was useless.

This is the human-in-the-loop design trap: having humans in the loop isn't enough. You need an intelligently designed human-in-the-loop process, with escalation rules that catch the actual failure modes.

When to Use Human Review

Rule 1: Confidence-Based Escalation

High confidence + high stakes = still might need human review

Low confidence = always escalate

Confidence alone is not a sufficient predictor of correctness
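One way to make that last point measurable is to compare the model's average stated confidence with its realized accuracy once outcomes are known. The sketch below is a minimal illustration, not a production monitor; the function names and the 0.10 tolerance are assumptions chosen for the example.

```python
from statistics import mean

def calibration_gap(confidences, outcomes_correct):
    """Return mean stated confidence minus realized accuracy.

    confidences: model confidence per decision, each in [0, 1]
    outcomes_correct: True/False per decision, known once outcomes resolve
    """
    if len(confidences) != len(outcomes_correct) or not confidences:
        raise ValueError("need two non-empty sequences of equal length")
    accuracy = sum(outcomes_correct) / len(outcomes_correct)
    return mean(confidences) - accuracy

def is_overconfident(confidences, outcomes_correct, tolerance=0.10):
    """Flag the model when confidence exceeds accuracy by more than tolerance (assumed value)."""
    return calibration_gap(confidences, outcomes_correct) > tolerance

# Illustrative data: the model reports 0.95 on every decision, but only 72 of 100 were right.
confs = [0.95] * 100
correct = [True] * 72 + [False] * 28
gap = calibration_gap(confs, correct)
print(round(gap, 2), is_overconfident(confs, correct))
```

A gap this large would not show up in any per-decision confidence rule, which is why it has to be tracked as an aggregate over resolved outcomes.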

Rule 2: Distribution Shift Detection

If the input differs from the training distribution = escalate

Example: "This applicant's income is 3 standard deviations above the training mean" = escalate

The model hasn't seen inputs like this, so confidence signals won't warn you
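A simple version of this rule for a single numeric feature can be sketched with a z-score check. This is a minimal sketch under stated assumptions: distance is measured from the training mean, the 3.0 cutoff mirrors the example above, and the income values are hypothetical. Real systems usually need multivariate drift checks as well.

```python
from statistics import mean, stdev

def shift_z_score(value, training_values):
    """Number of sample standard deviations between value and the training mean."""
    mu = mean(training_values)
    sigma = stdev(training_values)
    if sigma == 0:
        raise ValueError("training values have zero variance")
    return (value - mu) / sigma

def is_out_of_distribution(value, training_values, max_z=3.0):
    """Escalate when the input sits more than max_z deviations from the training data."""
    return abs(shift_z_score(value, training_values)) > max_z

# Hypothetical monthly incomes seen in training.
training_incomes = [3000, 3200, 3500, 3800, 4000, 4200, 4500, 4800, 5000, 5200]
print(is_out_of_distribution(4100, training_incomes))   # typical income
print(is_out_of_distribution(12000, training_incomes))  # far outside the training range
```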

Rule 3: High-Stakes Decisions

Loan denial > $100K = always human review (financial impact)

Policy interpretation = always human review (regulatory impact)

Fraud accusation = always human review (customer relationship impact)

Rule 4: Pattern Monitoring

If the approval rate for demographic group X suddenly changes by 5 percentage points or more = escalate for review

Model may have developed bias without signaling

Humans catch systematic errors machines miss
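The monitoring rule can be sketched as a comparison of per-group approval rates between a baseline window and the current window. The group labels, the data, and the reading of "5%" as 5 percentage points are assumptions for illustration.

```python
def approval_rate(decisions):
    """Share of approvals in a list of booleans (True = approved)."""
    if not decisions:
        raise ValueError("no decisions to measure")
    return sum(decisions) / len(decisions)

def groups_to_review(baseline, current, max_change_points=5.0):
    """Return groups whose approval rate moved by at least max_change_points.

    baseline, current: dict mapping group label -> list of booleans
    The returned values are the change in percentage points.
    """
    flagged = {}
    for group, base_decisions in baseline.items():
        if group not in current:
            continue
        change = 100 * (approval_rate(current[group]) - approval_rate(base_decisions))
        if abs(change) >= max_change_points:
            flagged[group] = round(change, 1)
    return flagged

baseline = {"group_a": [True] * 80 + [False] * 20, "group_b": [True] * 75 + [False] * 25}
current = {"group_a": [True] * 79 + [False] * 21, "group_b": [True] * 62 + [False] * 38}
print(groups_to_review(baseline, current))
```

A real monitor would also need enough volume per group before a change of this size means anything.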

Rule 5: Contradiction Detection

If AI recommends "approve" but another system flags risk = escalate

Example: Credit scoring AI says approve, fraud detection says "unusual pattern" = escalate

Conflicting signals demand human judgment

Deep Dive: Designing Escalation Rules That Actually Work (2026)

Anti-Pattern: The Ignored Escalation

What happens:

Rule says: "Escalate if confidence < 70%"

System escalates 5% of decisions

Humans get 5 escalations/day

Humans start auto-approving escalations because "model was usually right anyway"

Escalation becomes rubber-stamp, defeats purpose

Why it fails: Humans adapt to systems. If escalations are noise, humans ignore them.

Fix: Make escalations rare (< 1% ideally, max 3%), meaningful, and acted upon.

Example: Instead of escalating 5% of decisions on a confidence cutoff alone, escalate the 0.5% that are high-value decisions OR distribution-shifted decisions OR high-impact denials. Each escalation carries weight.
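Whether escalations have turned into a rubber stamp can be checked from review logs. The sketch below looks at two signals: how often reviewers agree with the AI, and how long a typical review takes. The record fields and both thresholds are hypothetical and would need tuning against real data.

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class EscalationReview:
    ai_recommendation: str   # "approve" or "deny"
    human_decision: str      # "approve" or "deny"
    seconds_spent: float     # time the reviewer had the case open

def rubber_stamp_signals(reviews, max_agreement=0.98, min_median_seconds=20.0):
    """Return warning strings when reviews look like rubber-stamping.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    if not reviews:
        return []
    agreement = sum(r.ai_recommendation == r.human_decision for r in reviews) / len(reviews)
    typical_seconds = median(r.seconds_spent for r in reviews)
    warnings = []
    if agreement > max_agreement:
        warnings.append(f"reviewers agreed with the AI on {agreement:.0%} of escalations")
    if typical_seconds < min_median_seconds:
        warnings.append(f"median review time was {typical_seconds:.0f}s")
    return warnings
```

Neither signal proves disengagement on its own; together they indicate which review queues deserve a closer look.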

Anti-Pattern: The All-or-Nothing Review

What happens:

Rule says: "All loan denials need human review"

20% of applications are denied

Humans need to review 2,000 denials/month

Each review takes 10 minutes (routine denial, obvious factors)

Humans spend 333 hours/month on routine reviews

Burnout, errors, quality drops

Why it fails: Humans can't maintain focus on 333 hours of routine work.

Fix: Tier reviews by risk.

Routine denials (low risk, clear factors): No human review, log for audit

Edge case denials (moderate risk, unclear factors): Human review

High-impact denials (high risk, customer relationship impact): Human review + documented reasoning

This can reduce the human review load by roughly 80% while maintaining quality.
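The workload arithmetic behind this anti-pattern is simple to reproduce. In the sketch below, the 2,000 denials and 10 minutes per review come from the example above; the assumption that only 20% of denials are edge-case or high-impact is inferred from the 80% reduction figure and is not a measured value.

```python
def review_hours(cases, minutes_per_case):
    """Total reviewer hours for a number of cases at a fixed time per case."""
    return cases * minutes_per_case / 60

monthly_denials = 2000
blanket = review_hours(monthly_denials, 10)          # every denial reviewed
# Assumption: only 20% of denials still need a human after tiering by risk.
tiered = review_hours(monthly_denials * 0.20, 10)
print(f"blanket review: {blanket:.0f} h/month, tiered review: {tiered:.0f} h/month")
```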

Pattern: Smart Escalation Tiers (2026 Best Practice)

Tier 1: Auto-Approve

Confidence > 95%

No distribution shift

Not high-stakes

No contradictions

Action: Approve, log decision

Tier 2: Escalate for Quick Review (30 seconds)

Confidence 80-95% OR

Mild distribution shift OR

Customer requested review

Action: Human glances at factors, approves or denies (90% approved)

Tier 3: Full Review (5-10 minutes)

Confidence < 80% OR

Distribution shift detected OR

High-stakes decision ($100K+) OR

Contradicting signals

Action: Human reviews completely, documents reasoning

Tier 4: Specialist Review (20+ minutes)

Regulatory concern OR

Pattern suggests potential discrimination OR

Fraud indicator

Action: Specialist (compliance, risk, underwriting) reviews, documents thoroughly
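The four tiers can be expressed as a small routing function. This is a sketch under stated assumptions: the `Decision` fields are hypothetical names for the signals listed above, and the most serious conditions are checked first so that a decision matching several tiers lands in the highest one.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    confidence: float                  # model confidence in [0, 1]
    amount: float                      # loan amount in dollars
    shift: str = "none"                # "none", "mild", or "detected"
    contradicting_signals: bool = False
    customer_requested_review: bool = False
    regulatory_concern: bool = False
    possible_discrimination: bool = False
    fraud_indicator: bool = False

def assign_tier(d: Decision) -> int:
    """Route a decision to tier 1-4, checking the most serious conditions first."""
    if d.regulatory_concern or d.possible_discrimination or d.fraud_indicator:
        return 4
    if (d.confidence < 0.80 or d.shift == "detected"
            or d.amount >= 100_000 or d.contradicting_signals):
        return 3
    if d.confidence <= 0.95 or d.shift == "mild" or d.customer_requested_review:
        return 2
    return 1

print(assign_tier(Decision(confidence=0.97, amount=20_000)))
print(assign_tier(Decision(confidence=0.97, amount=250_000)))
print(assign_tier(Decision(confidence=0.99, amount=20_000, fraud_indicator=True)))
```

Note that the high-confidence $250,000 loan still goes to full review: stakes, not confidence, decide its tier.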

Example volume (10,000 applications):

Tier 1: 7,000 (70%)

Tier 2: 2,500 (25%)

Tier 3: 450 (4.5%)

Tier 4: 50 (0.5%)

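Human review load: roughly 75-115 hours total at the per-review times above (about 21 hours of quick reviews, 38-75 hours of full reviews, and at least 17 hours of specialist reviews), focused on high-value decisions.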

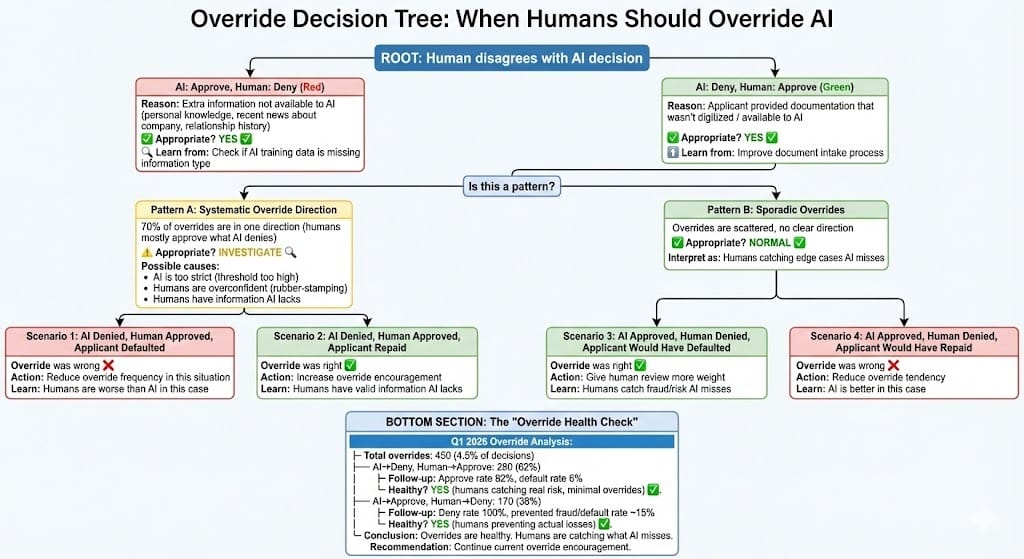

The Override Problem: When Humans Disagree with AI

Scenario: During a Tier 3 review, the AI recommended "deny" but the human thinks "approve" (or vice versa).

What happens next?

2026 Best Practice:

Human documents reasoning: "AI recommended deny due to debt-to-income ratio, but applicant provided additional income from side business not captured in income verification. Approving based on updated financial picture."

Decision is made (human's judgment)

Outcome is tracked: Did applicant repay? Default?

After 100+ overrides in the same direction, the system is retrained: "Humans are consistently overriding AI on side income. Missing this signal. Retrain on gig worker income patterns."

System improves

The key: Overrides aren't failures. They're training data for continuous improvement.

The risk: Overrides that become systematic bias ("Humans approve 40% more women than AI") need investigation.
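Tracking override direction can start with a simple counter. The sketch below is one possible shape, not a prescribed design: the class name and reason tags are hypothetical, and the threshold of 100 follows the "100+ overrides" figure above. It omits outcome tracking (repayment or default), which a real system would join in later.

```python
from collections import Counter

class OverrideTracker:
    """Counts human overrides by (reason, direction) and flags lopsided patterns."""

    def __init__(self, retrain_threshold=100):
        self.retrain_threshold = retrain_threshold
        self.counts = Counter()

    def record(self, ai_recommendation, human_decision, reason):
        """Store an override; decisions that match the AI are not overrides."""
        if ai_recommendation == human_decision:
            return
        direction = f"{ai_recommendation}->{human_decision}"
        self.counts[(reason, direction)] += 1

    def retraining_candidates(self):
        """Return (reason, direction) pairs that reached the threshold."""
        return [key for key, n in self.counts.items() if n >= self.retrain_threshold]

tracker = OverrideTracker()
for _ in range(120):
    tracker.record("deny", "approve", reason="side_income_not_captured")
for _ in range(8):
    tracker.record("approve", "deny", reason="unverified_employer")
print(tracker.retraining_candidates())
```

The same counts, broken down by applicant group instead of reason, would surface the kind of systematic bias described above.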

BFSI-Specific Patterns

Pattern 1: Exception Handling Strategy

When system encounters truly novel situation (has never seen this):

Distribution-shift detection flags the input (confidence alone may not drop)

System escalates to Tier 3/4

Human makes judgment

If novel pattern repeats, system retrains

By 2027, edge case becomes standard case

Pattern 2: Regulatory Interaction Design

When decision needs regulatory approval:

Tier 4 escalation goes to compliance

Compliance documents reasoning

When pattern emerges ("we're approving 10 applicants in unusual category"), compliance proactively updates guidance

Prevents future conflicts

Pattern 3: Feedback Loop Velocity

Quarterly vs. Real-Time:

Quarterly retraining: Standard 2025 practice

Real-time feedback: 2026 emerging practice

If override happens at 10 AM, system learns by noon

By 5 PM, new model version deployed

Looking Ahead: 2027-2030

2027: Contextual Escalation

Escalation rules adapt based on context:

If fraud is spiking this week → escalate more readily (catch more fraud, accept more false positives)

If customer churn is high → reduce false positives (keep customers happy)

If new regulation is issued → temporary Tier 4 on all loans until policy is settled

Dynamic escalation based on business conditions.
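A speculative sketch of what such context-dependent rules might look like follows. Here the threshold is expressed as a confidence cutoff: decisions below it are escalated, so escalating more readily means raising the cutoff. The base cutoff of 0.80, the 0.05 step, and the context flags are all assumptions for illustration.

```python
def escalation_cutoff(base_cutoff=0.80, fraud_spike=False, high_churn=False, step=0.05):
    """Return the confidence cutoff below which decisions are escalated.

    Raising the cutoff escalates more decisions; lowering it escalates fewer.
    base_cutoff and step are illustrative assumptions.
    """
    cutoff = base_cutoff
    if fraud_spike:
        cutoff += step   # escalate more readily, accept more false positives
    if high_churn:
        cutoff -= step   # escalate less, fewer false positives for customers
    return min(max(cutoff, 0.0), 1.0)

def needs_review(confidence, new_regulation_pending=False, **context):
    """Escalate everything while a new regulation is unsettled, else apply the cutoff."""
    if new_regulation_pending:
        return True
    return confidence < escalation_cutoff(**context)

print(needs_review(0.83))                    # normal week
print(needs_review(0.83, fraud_spike=True))  # same decision during a fraud spike
```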

2028: Predictive Override

System learns which decision types humans override frequently:

Predicts likely overrides before human sees them

Presents alternative reasoning to human

"You usually approve these despite AI denial. Here's why AI might be wrong..."

Accelerates human decision-making

2029: Autonomous Escalation Correction

System that detects bad escalation thresholds and auto-corrects:

Too many overrides in one direction? Self-adjusts

Escalation threshold becoming noise? Self-tightens

Learns optimal escalation without human tuning

HIVE Summary

Key takeaways:

Human-in-the-loop only works if escalation rules are intelligent. Escalating everything defeats automation. Escalating nothing invites disaster. The sweet spot is roughly 1-5% escalation, focused on high-value or risky decisions

Tier-based escalation (auto-approve → quick review → full review → specialist) allows humans to focus effort on decisions that matter while automating routine ones

Overrides aren't failures—they're training data. Systematic overrides in one direction indicate system problems worth investigating. Sporadic overrides indicate healthy human judgment

2026 regulatory baseline: Systems must have documented escalation rules, audit trail of escalations, and evidence that humans are actually reviewing escalations (not rubber-stamping)

Start here:

If designing human-in-the-loop: Don't just add "human review for everything." Tier your decisions by risk. Auto-approve routine cases, escalate edge cases. Track which tiers have the most value

If escalations are ignored: Your escalation thresholds are wrong. Make escalations rarer (0.5-1%) and more meaningful. When escalations matter, humans pay attention

If preparing for regulatory review: Document your escalation criteria, show override data, prove humans are actually engaged (not rubber-stamping). Regulators care about this

Looking ahead (2027-2030):

Contextual escalation will adjust thresholds based on real-time business conditions (fraud spikes, regulatory changes, market shifts)

Predictive override will help humans make faster decisions by showing where they typically disagree with AI

Autonomous escalation will self-correct bad thresholds without human tuning

Open questions:

How do we know if humans are reviewing escalations carefully or rubber-stamping?

When should we trust human judgment over AI (and vice versa)?

How do we prevent escalations from becoming a "second-opinion machine" that humans ignore?

Jargon Buster

Escalation: Routing a decision to human review because system is uncertain, decision is high-stakes, or input is unusual. Why it matters in BFSI: Prevents bad AI decisions from reaching customers. Must be designed intelligently or becomes useless.

Distribution Shift: When input data is different from training data (e.g., gig workers weren't in training, now they're 20% of applicants). Why it matters in BFSI: Model hasn't seen this, confidence signals won't warn you. Need automatic detection.

Confidence Score: Model's estimate of correctness (95% = very sure, 50% = guessing). Why it matters in BFSI: Should trigger escalation if too low, but high confidence can hide wrong answers. Not sufficient alone.

Override: Human decision disagrees with AI recommendation. Why it matters in BFSI: If systematic, indicates system needs retraining. If sporadic, normal human judgment. Track patterns.

Audit Trail: Complete record of decision path including escalations, overrides, reasoning. Why it matters in BFSI: Regulators require proof that humans reviewed decisions, didn't rubber-stamp.

Tier-Based Review: Decisions routed to different review levels based on risk (auto → quick → full → specialist). Why it matters in BFSI: Focuses human effort on high-value decisions, not routine ones.

Rubber-Stamping: Humans approving escalations without actual review. Why it matters in BFSI: Defeats purpose of human-in-the-loop. If escalations are rubber-stamped, thresholds are wrong.

Closed-Loop Feedback: Override data fed back to system for retraining. Why it matters in BFSI: System improves over time. Overrides aren't failures; they're improvement signals.

Fun Facts

On Escalation Failure: A bank set its escalation threshold at "confidence < 80%." It seemed reasonable. But 99.9% of decisions had confidence above 80% (the model was overconfident), so the system escalated only 100 decisions a month out of 100,000. Reviewers saw roughly three escalations a day, lost focus, and started ignoring them. One escalated bad decision slipped through. The post-mortem found that the confidence threshold didn't track actual decision value. The bank restructured escalation around risk (loan amount) instead of confidence. Escalations increased to 1%, became more meaningful, and humans paid attention again. Lesson: escalation thresholds must create signal, not noise

On Override Learning: A bank tracked overrides for a year and found that humans overrode AI denials 60% of the time when the applicant was self-employed (the AI was trained mostly on W-2 earners). They retrained the model on gig worker data, and the override rate dropped to 10%. The catch: the 60% override rate went unnoticed for 6 months. Had they been tracking override patterns from the start, they could have fixed the problem 6 months earlier. Lesson: monitor override directions. Patterns indicate where your system is weakest

Next up: AI Incident Response Runbook + Escalation Matrix — Define override workflows when AI outputs need intervention

This is part of our ongoing work understanding AI deployment in financial systems. If you're designing human-in-the-loop systems, share your patterns for escalation thresholds, override tracking, or feedback loops that actually improve systems.