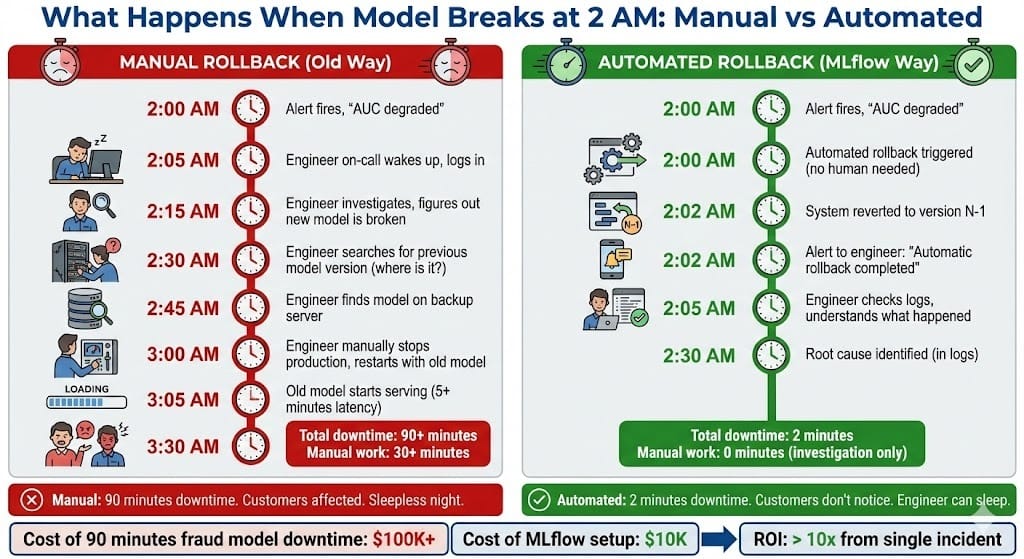

Quick Recap: Your new credit model performs well in validation. You deploy it to production. Two hours later, performance crashes. AUC drops 15%. You need to roll back immediately. MLflow tracks every model version and what changed, and lets you roll back in minutes instead of hours. Version control for models, like Git for code.

Your model team finishes training a new credit scoring model. Tests look good. Better AUC than the current production model.

They deploy Friday afternoon.

Saturday morning, 2 AM.

Your on-call engineer gets an alert: credit approval rates have spiked. Applications that should be denied are being approved.

She investigates. The new model is broken. But broken how?

She can't even tell what changed. Model code? Training data? Threshold? Feature engineering? Something else?

Rolling back means: shut down new model, restart old model. But they don't have a clean copy of the old model. They have to search backup servers.

Two hours of downtime. Customers angry. Regulatory questions incoming.

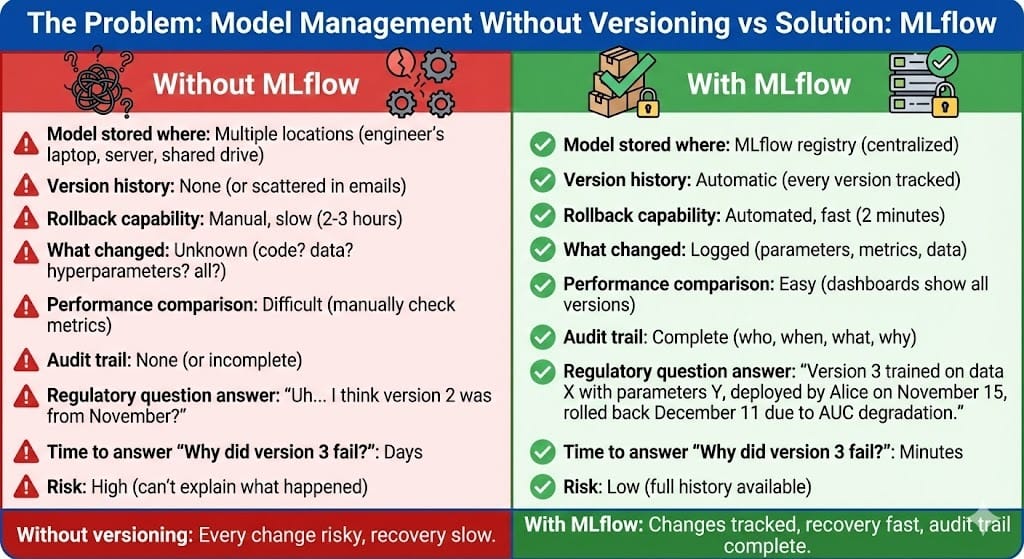

This is what happens without model versioning.

With MLflow: One-command rollback. Two-minute recovery. Complete audit trail of what changed. Governance at every step.

Why This Tool Pattern

Models are not software. They're worse than software to manage.

Software: You commit code to Git. Every version tracked. Rollback means checking out an old commit.

Models: Code changed. Training data changed. Hyperparameters changed. Feature engineering changed. You changed the model 47 different ways. Which one broke it?

Why this matters in BFSI: Regulators require you to know what changed between model versions. You must be able to roll back. You must have audit trails. MLflow provides the foundation for all of this.

The gap most teams face: Model versions are hidden. Training runs are scattered. Rollback requires manual intervention and luck.

Production teams need:

Every model version tracked automatically

What changed between versions documented

One-command rollback to previous version

Audit trail of deployments and changes

Governance checkpoints (approval before deploy)

MLflow handles this. It's Git for machine learning.

The trade-off: MLflow setup takes time. But it saves hours when you need to roll back at 2 AM.

How Model Versioning Works

The Problem: Models Without Versioning

You train a model. Deploy it. It works for three months.

Then you notice performance degrading. You retrain. New model is better. Deploy it.

Six months later: An analyst asks "Why was version 3 better than version 2?"

You have no idea. No changelog. No audit trail. Just two model files.

Worse: Your data scientist left the company. The notebooks that trained model 3 are gone. You can't reproduce it.

Worst: A regulator asks "What changed between model 2 and model 3?" You say "Uh... something?"

The Solution: Model Registry with MLflow

MLflow tracks:

Every model version (v1, v2, v3, etc.)

When it was trained

On what data

With what hyperparameters

What the performance metrics are

Who trained it

Which version is in production
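With those fields recorded for every version, the regulator's question "What changed between model 2 and model 3?" turns into a diff. A minimal sketch, assuming each version's metadata has already been pulled into a plain dict; the field names and values below are hypothetical, not MLflow's schema:

```python
def diff_versions(old: dict, new: dict) -> dict:
    """Return {key: (old_value, new_value)} for every key whose value differs."""
    changes = {}
    for key in sorted(set(old) | set(new)):
        before, after = old.get(key), new.get(key)
        if before != after:
            changes[key] = (before, after)
    return changes


# Hypothetical metadata for two registered versions
v2 = {
    "data_snapshot": "loans_2025_10",
    "max_depth": 10,
    "n_estimators": 100,
    "trained_by": "analyst_a",
    "auc": 0.87,
}
v3 = {
    "data_snapshot": "loans_2025_11",
    "max_depth": 12,
    "n_estimators": 100,
    "trained_by": "analyst_a",
    "auc": 0.92,
}

for key, (before, after) in diff_versions(v2, v3).items():
    print(f"{key}: {before} -> {after}")
# auc: 0.87 -> 0.92
# data_snapshot: loans_2025_10 -> loans_2025_11
# max_depth: 10 -> 12
```

In MLflow, a registered version points to the run that produced it (`ModelVersion.run_id`), and that run's parameters and metrics are available as dicts via `MlflowClient().get_run(run_id).data.params` and `.data.metrics`, so the two inputs can be built from there.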

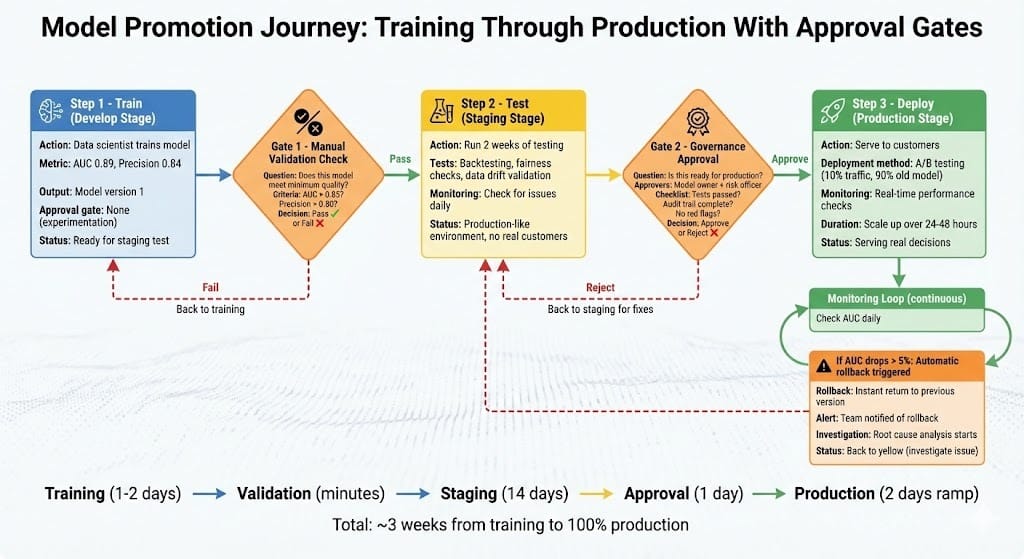

Simple Flow

Train model

↓

Log to MLflow (parameters, metrics, model artifact)

↓

Register the model: MLflow creates version 1

↓

Validate model

↓

Promote to staging

↓

Test in staging

↓

Promote to production (governance approval required)

↓

Monitor performance

↓

If problem detected: Rollback to version N-1 (one command)
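The flow above is a small state machine, and writing the allowed moves down makes skipped steps impossible. A minimal sketch, assuming the stage names from the flow plus an "Archived" state for retired versions; the transition table and the `approved` flag are illustrative policy, not something MLflow enforces for you:

```python
# Allowed moves between stages; anything not listed is rejected.
ALLOWED = {
    "None": {"Staging"},
    "Staging": {"Production", "Archived"},
    "Production": {"Archived"},
    "Archived": {"Production"},  # rollback: bring a known-good version back
}


def check_transition(current: str, target: str, approved: bool = False) -> None:
    """Raise ValueError if the move breaks the promotion flow."""
    if target not in ALLOWED.get(current, set()):
        raise ValueError(f"cannot move a version from {current} to {target}")
    if target == "Production" and not approved:
        raise ValueError("Production requires governance approval")


attempts = [
    ("Staging", "Production", True),
    ("Staging", "Production", False),
    ("None", "Production", True),
]
for current, target, approved in attempts:
    try:
        check_transition(current, target, approved)
        print(f"{current} -> {target}: allowed")
    except ValueError as err:
        print(f"{current} -> {target}: blocked ({err})")
```

This sketch treats every move into Production, including a rollback, as needing approval. An automated rollback like the one in Pattern 2 would need its own pre-approved exception, which is a policy decision rather than a technical one.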

Building Model Versioning with MLflow

Step 1: Log Models During Training

When you train, log the model automatically.

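```python
import mlflow
import mlflow.sklearn
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import precision_score, recall_score, roc_auc_score
from sklearn.model_selection import train_test_split

# Placeholder data so the example runs end to end; replace with your credit dataset
X, y = make_classification(n_samples=2000, n_features=20, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)

params = {"n_estimators": 100, "max_depth": 10, "random_state": 42}

# Start MLflow experiment (groups all runs for this model)
mlflow.set_experiment("credit_scoring")

with mlflow.start_run() as run:
    # Train model with the same params dict we log, so they cannot drift apart
    model = RandomForestClassifier(**params)
    model.fit(X_train, y_train)

    # Log parameters
    mlflow.log_params(params)

    # Log metrics computed on held-out data, not typed in by hand
    proba = model.predict_proba(X_test)[:, 1]
    preds = model.predict(X_test)
    mlflow.log_metrics({
        "auc": roc_auc_score(y_test, proba),
        "precision": precision_score(y_test, preds),
        "recall": recall_score(y_test, preds),
    })

    # Log model artifact under this run
    mlflow.sklearn.log_model(model, "credit_model")

print(f"Model logged to MLflow (run_id={run.info.run_id})")
```

That's it. MLflow now records this training run: its parameters, metrics, and model artifact.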
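The run above records parameters and metrics but not which data the model was trained on, which is one of the questions the registry is supposed to answer. One lightweight option, sketched here as an assumption rather than MLflow's own mechanism, is to hash the training rows and log the digest as a parameter; `data_fingerprint` and the sample rows are hypothetical:

```python
import hashlib
import json


def data_fingerprint(rows) -> str:
    """SHA-256 over a canonical JSON encoding of each row (row order matters)."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the digest independent of column order within a row
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


train_rows = [
    {"income": 52000, "debt_ratio": 0.31, "defaulted": 0},
    {"income": 18000, "debt_ratio": 0.72, "defaulted": 1},
]
fingerprint = data_fingerprint(train_rows)
print(fingerprint[:12])
# Inside the training run you would add: mlflow.log_param("train_data_sha256", fingerprint)
```

Column order inside a row does not change the digest, but row order does, so fix the extraction order before hashing. For large tables, hashing the stored snapshot file is cheaper. Recent MLflow releases also provide `mlflow.log_input` for dataset tracking.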

Step 2: Register Model and Move Through Stages

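Promote models through stages: develop → staging → production. (MLflow's built-in registry stages are None, Staging, Production, and Archived. MLflow now recommends model version aliases over stages; the stage-based calls below reflect the classic workflow.)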

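```python
import mlflow
from mlflow import MlflowClient

client = MlflowClient()

# Register model (first time). This creates the "credit_scoring"
# registered model and returns version 1.
# Assumes Step 1 ran in this Python session; otherwise paste the run ID from the MLflow UI.
run_id = mlflow.last_active_run().info.run_id
model_uri = f"runs:/{run_id}/credit_model"
model_version = mlflow.register_model(model_uri=model_uri, name="credit_scoring")
print(f"Registered version {model_version.version}")

# Later: promote this version to Staging
client.transition_model_version_stage(
    name="credit_scoring",
    version=model_version.version,
    stage="Staging",
)

# Test in staging for 2 weeks
# ...testing happens...

# Promote to Production (requires approval).
# archive_existing_versions=True moves the previous Production version
# to Archived, so exactly one version holds the Production stage.
client.transition_model_version_stage(
    name="credit_scoring",
    version=model_version.version,
    stage="Production",
    archive_existing_versions=True,
)

print(f"Version {model_version.version} now in production")
```

Step 3: Rollback When Needed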

Something breaks. You need the previous version back. One command.

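```python
from mlflow import MlflowClient

client = MlflowClient()

# Check what version is currently in production
prod_versions = client.get_latest_versions(name="credit_scoring", stages=["Production"])
if not prod_versions:
    raise RuntimeError("No version of credit_scoring is in Production")

# Version numbers can come back as strings depending on the backend store
current_version = int(prod_versions[0].version)
print(f"Current production: version {current_version}")

# Roll back to previous version (assumes N-1 was the last good production version)
previous_version = current_version - 1

# archive_existing_versions=True moves the failing version to Archived.
# Without it, both versions stay in Production and get_latest_versions
# would still return the newer, broken one.
client.transition_model_version_stage(
    name="credit_scoring",
    version=str(previous_version),
    stage="Production",
    archive_existing_versions=True,
)

# Done. Serving code that loads "models:/credit_scoring/Production"
# picks up this version the next time it loads the model.
print(f"Rolled back to version {previous_version}")
```

One lookup, one transition call. The registry update takes seconds; how quickly live traffic follows depends on how often your serving layer reloads the Production model.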
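The `current_version - 1` shortcut assumes the version just below the current one was the last good production model. That breaks if, say, version 4 was rejected in staging and never served. A minimal sketch of choosing the rollback target from a deployment history instead; the `(version, event)` history format is hypothetical and would come from your own audit records:

```python
def rollback_target(history, current_version):
    """
    history: list of (version, event) tuples in time order, where event is
    "deployed" or "rolled_back". Returns the most recent version other than
    current_version that was deployed and never rolled back, or None.
    """
    bad = {v for v, event in history if event == "rolled_back"}
    for version, event in reversed(history):
        if event == "deployed" and version != current_version and version not in bad:
            return version
    return None


history = [
    (1, "deployed"),
    (2, "deployed"),
    (3, "deployed"),
    (3, "rolled_back"),
    (2, "deployed"),
    (5, "deployed"),  # version 4 failed staging and never reached production
]
print(rollback_target(history, current_version=5))  # → 2
```

Here `current_version - 1` would point at version 4, a model that never passed staging; the history-based lookup returns version 2, the last model that actually served customers without being rolled back.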

Step 4: Audit Trail

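Every change tracked. The registry keeps each version's creation time, current stage, and a link to the run that holds its metrics; recording who promoted or rolled back a version, and why, is up to you (model version tags work well), so that a log like this can be produced: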

Version 1: Deployed 2025-11-15, AUC 0.89, Precision 0.84

Version 2: Deployed 2025-12-01, AUC 0.87, Precision 0.80

Version 3: Deployed 2025-12-10, AUC 0.92, Precision 0.86

Version 3: Rolled back 2025-12-11 02:15 (performance issue)

Version 2: Re-deployed 2025-12-11 02:20

Regulators see this log and know exactly what happened.
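A log like the one above has to be written somewhere. A minimal sketch of an append-only audit record capturing who, when, what, and why for each deployment event; the `AuditEvent` fields and the output format are assumptions chosen to match the example log, and in practice the same fields could live in MLflow model version tags or a change-management system:

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)  # frozen: an event cannot be edited after it is recorded
class AuditEvent:
    version: int
    action: str  # e.g. "Deployed", "Rolled back", "Re-deployed"
    at: datetime
    actor: str
    reason: str = ""


class AuditLog:
    """Append-only by convention: events are added and read, never edited or removed."""

    def __init__(self):
        self._events = []

    def record(self, event: AuditEvent) -> None:
        self._events.append(event)

    def lines(self):
        for e in self._events:
            note = f" ({e.reason})" if e.reason else ""
            yield f"Version {e.version}: {e.action} {e.at:%Y-%m-%d %H:%M} by {e.actor}{note}"


log = AuditLog()
log.record(AuditEvent(3, "Rolled back", datetime(2025, 12, 11, 2, 15), "oncall", "performance issue"))
log.record(AuditEvent(2, "Re-deployed", datetime(2025, 12, 11, 2, 20), "oncall"))
print("\n".join(log.lines()))
# Version 3: Rolled back 2025-12-11 02:15 by oncall (performance issue)
# Version 2: Re-deployed 2025-12-11 02:20 by oncall
```

Adding the actor to every line answers the "who" question that the example log leaves implicit.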

BFSI-Specific Patterns

Pattern 1: Governance Checkpoints

You can't promote to production without approval.

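```python
from mlflow import MlflowClient

# Version 3 tested and ready. But who can deploy?
# Require approval before production deployment.
def promote_with_approval(version_id, approver_list, approved_by, requested_by):
    """
    Promote only if approved by someone on the approver list
    who is not the person requesting the deployment.
    """
    print(f"Requesting approval for version {version_id}")

    # The approval itself happens outside MLflow (Slack, email, ticket);
    # approved_by is whoever signed off there.
    if approved_by not in approver_list:
        raise PermissionError(f"{approved_by} is not an authorized approver")
    if approved_by == requested_by:
        raise PermissionError("Requester cannot approve their own deployment")

    # Only then promote
    client = MlflowClient()
    client.transition_model_version_stage(
        name="credit_scoring",
        version=str(version_id),
        stage="Production",
        archive_existing_versions=True,
    )
    # Log who approved, on the model version itself
    client.set_model_version_tag("credit_scoring", str(version_id), "approved_by", approved_by)
```

This prevents solo deployments: a second, authorized person has to sign off before a version reaches production.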
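The approval check is where separation of duties actually lives, so it is worth making it testable on its own. A minimal sketch of a stricter policy, assuming a rule of two distinct authorized approvers, neither of whom requested the deployment; the two-approver rule and the names are illustrative, not a regulatory standard:

```python
def approval_satisfied(requested_by, approvals, approver_list, required=2):
    """
    True only if at least `required` distinct people from approver_list
    approved, not counting the person who requested the deployment.
    """
    valid = {a for a in approvals if a in approver_list and a != requested_by}
    return len(valid) >= required


approvers = {"risk_lead", "model_validator", "cro_delegate"}
print(approval_satisfied("ds_analyst", ["risk_lead", "model_validator"], approvers))  # True
print(approval_satisfied("risk_lead", ["risk_lead", "model_validator"], approvers))   # False: self-approval ignored
print(approval_satisfied("ds_analyst", ["risk_lead", "risk_lead"], approvers))        # False: same person twice
```

Counting a set of approvers rather than a list of approval events is what stops one person clicking "approve" twice from satisfying the rule.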

Pattern 2: Automated Rollback Triggers

When performance degrades, trigger rollback automatically.

```python
from mlflow import MlflowClient

# Monitor production model performance
def monitor_and_rollback():
    """
    Check model performance.
    If AUC falls more than 0.05 below baseline, roll back automatically.
    """
    client = MlflowClient()

    current_auc = get_current_auc()  # hypothetical helper: AUC on recent labeled production data
    baseline_auc = 0.89  # AUC logged for this version at validation time

    if current_auc < (baseline_auc - 0.05):
        print("AUC degradation detected")

        # Get current production version
        prod_versions = client.get_latest_versions(name="credit_scoring", stages=["Production"])
        current_version = int(prod_versions[0].version)

        # Rollback (assumes version N-1 is the last known-good version)
        previous_version = current_version - 1
        client.transition_model_version_stage(
            name="credit_scoring",
            version=str(previous_version),
            stage="Production",
            archive_existing_versions=True,  # take the failing version out of Production
        )

        # Alert team (send_alert is a hypothetical helper: Slack, PagerDuty, email)
        send_alert(f"Automatic rollback triggered: {current_version} → {previous_version}")
```

No human waiting. System detects issue and rolls back.
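Two details in the trigger deserve care. "Drops by 5%" can mean 5 percentage points (0.89 to 0.84) or 5% of the baseline (0.89 to 0.8455), so the rule should say which. And in credit, default labels arrive months after the lending decision, so AUC on live traffic lags; the opening incident was caught through a spike in approval rates, which is visible immediately. A minimal sketch of a decision function covering both signals; `should_roll_back` and its thresholds are hypothetical:

```python
def should_roll_back(
    current_auc=None,
    baseline_auc=0.89,
    max_auc_drop=0.05,
    relative=False,
    approval_rate=None,
    baseline_approval_rate=None,
    max_approval_jump=0.10,
):
    """Return a list of reasons to roll back; an empty list means keep serving."""
    reasons = []
    if current_auc is not None:
        # relative=True: drop measured as a fraction of baseline; else absolute points
        floor = baseline_auc * (1 - max_auc_drop) if relative else baseline_auc - max_auc_drop
        if current_auc < floor:
            reasons.append(f"AUC {current_auc:.3f} below floor {floor:.3f}")
    if approval_rate is not None and baseline_approval_rate is not None:
        # Proxy signal available immediately, before default labels arrive
        if approval_rate - baseline_approval_rate > max_approval_jump:
            reasons.append(
                f"approval rate {approval_rate:.0%} vs baseline {baseline_approval_rate:.0%}"
            )
    return reasons


print(should_roll_back(current_auc=0.845))                 # → []
print(should_roll_back(current_auc=0.845, relative=True))  # one AUC reason: below the 0.8455 floor
print(should_roll_back(approval_rate=0.71, baseline_approval_rate=0.55))
```

Returning reasons instead of a bare boolean gives the alert, and the audit trail, something concrete to say about why the rollback fired.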

Pattern 3: A/B Testing Before Full Rollout

Test new model on small percentage of traffic first.

```python
# Deploy version 3, but only serve to 10% of users
# Monitor performance on that 10%
# If good, increase to 100%
# If bad, roll back quickly

deployment_config = {
    'version': 3,
    'traffic_split': {
        'version_3': 0.10,  # 10% of traffic
        'version_2': 0.90,  # 90% to safe version
    },
}

# Monitor version 3 performance on that 10%
# If AUC on 10% is good for 24 hours:
#     Increase to 50/50
# If still good after 24 hours:
#     Increase to 100%
# If AUC degrades:
#     Immediately route 100% of traffic back to version 2
```

Reduces risk of bad deployments. Gradual rollout prevents 2 AM disasters.
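In most setups the MLflow registry records versions, while the traffic split itself lives in your serving layer. A minimal sketch of one common approach, assuming each application has a stable ID: hash the ID into a bucket from 0 to 99 so the same applicant always gets the same model, and raise the new version's share through the 10% → 50% → 100% schedule above. The function names and hashing scheme are illustrative:

```python
import hashlib

RAMP = [0.10, 0.50, 1.00]  # new version's share of traffic at each step


def bucket(application_id: str) -> int:
    """Stable bucket 0-99 derived from the ID (same ID, same bucket, every time)."""
    digest = hashlib.sha256(application_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def choose_version(application_id: str, new_share: float, new_version: int = 3, safe_version: int = 2) -> int:
    """Route to the new version if the applicant's bucket falls inside its share."""
    return new_version if bucket(application_id) < round(new_share * 100) else safe_version


def next_share(current_share: float, healthy: bool) -> float:
    """Advance one ramp step if healthy; drop to 0.0 (all traffic on safe version) if not."""
    if not healthy:
        return 0.0
    later = [s for s in RAMP if s > current_share]
    return later[0] if later else current_share


ids = [f"app-{i}" for i in range(10_000)]
share = RAMP[0]
on_new = sum(choose_version(i, share) == 3 for i in ids)
print(f"{on_new / len(ids):.1%} of applications on version 3")  # close to 10%
print(next_share(0.10, healthy=True))   # 0.5
print(next_share(0.50, healthy=False))  # 0.0
```

Because each applicant's bucket is fixed, anyone routed to version 3 at 10% stays on it at 50%, which keeps per-applicant decisions consistent while the share grows.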

Common Mistakes

Mistake 1: Not Versioning at All

❌ Training new model, deploying it, deleting old model

✅ Keeping all versions in MLflow. Can roll back anytime.

You'll need the old model eventually.

Mistake 2: Solo Deployments

❌ Any engineer can deploy to production

✅ Require approval before production deployment

Prevents accidental bad deployments.

Mistake 3: No Audit Trail

❌ Deploying without logging what changed

✅ Every version change logged with who, when, why

Regulators will ask during audit.

Mistake 4: Slow Rollback Process

❌ Rollback requires manual intervention, 2-3 hours

✅ Automated rollback: one command, two minutes

2 AM is when you realize this matters.

Mistake 5: Testing in Production Only

❌ Deploy directly to production, hope it works

✅ Staging environment: test there first, deploy after approval

Staging catches 80% of issues before customers see them.
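"Test there first" works best as an explicit gate rather than a judgment call. A minimal sketch, assuming you compare the candidate's staging metrics with the current production model's and require a minimum soak time; the 14-day default echoes the two-week staging period in Step 2, and `staging_gate`, the metric names, and the tolerance are hypothetical:

```python
from datetime import date


def staging_gate(candidate, production, staged_on, today, min_days=14, tolerance=0.01):
    """
    Return a list of blocking issues; an empty list means ready to request approval.
    candidate / production: dicts of metric name -> value (higher is better).
    """
    issues = []
    days = (today - staged_on).days
    if days < min_days:
        issues.append(f"only {days} of {min_days} days in staging")
    for metric, prod_value in production.items():
        cand_value = candidate.get(metric)
        if cand_value is None:
            issues.append(f"{metric} missing for candidate")
        elif cand_value < prod_value - tolerance:
            issues.append(f"{metric} {cand_value:.2f} vs production {prod_value:.2f}")
    return issues


prod = {"auc": 0.89, "precision": 0.84, "recall": 0.76}
cand = {"auc": 0.92, "precision": 0.86, "recall": 0.74}
print(staging_gate(cand, prod, staged_on=date(2025, 11, 20), today=date(2025, 12, 1)))
# ['only 11 of 14 days in staging', 'recall 0.74 vs production 0.76']
```

A better headline AUC does not clear the gate on its own: the candidate here still fails on soak time and on recall, which in credit means more defaulters slipping through.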

Looking Ahead: 2026-2030

2026: Model versioning becomes regulatory expectation

Institutions must track model changes

Rollback capability verified during exams

MLflow or equivalent standard

2027-2028: Automated governance enforcement

Policies define who can deploy

Deployments require automated compliance check

Violations blocked automatically

2028-2029: Continuous model deployment

Safe to deploy multiple times per day

A/B testing becomes standard practice

Automated rollback on degradation

2030: Models treated as first-class infrastructure

Model deployment as automated as code deployment

CI/CD pipelines for models standard

Versioning and rollback built in

HIVE Summary

Key takeaways:

Model versioning is Git for ML. Every version tracked. Easy rollback. Complete audit trail.

Three stages: develop (training), staging (testing), production (serving). Promote only after validation.

Governance checkpoint required before production. Prevents solo deployments. Requires approval.

Rollback must be fast (one command). When problems happen at 2 AM, you need recovery in minutes.

A/B testing before full rollout reduces risk. Test on 10% of traffic first. Scale if good.

Start here:

If no versioning exists: Install MLflow this week. Start logging models automatically during training.

If versioning exists but no governance: Add approval gates. Require sign-off before production deployment.

If approval exists but manual: Automate the approval workflow. Integrate with Slack or ticket system.

Looking ahead (2026-2030):

Model versioning becomes regulatory requirement (not optional)

Automated governance enforcement (policies in code)

Continuous model deployment becomes standard

Models treated as first-class infrastructure

Open questions:

How long should each version be kept? (Storage cost vs compliance requirement)

Can you roll back while serving traffic? (Yes, but it needs careful orchestration.)

What's the right A/B test duration? (24 hours? 1 week?)

Jargon Buster

Model Versioning: Tracking every model version automatically. Includes metadata (training data, hyperparameters, performance metrics). Why it matters in BFSI: Enables rollback and audit trails. Regulatory requirement.

Model Registry: Central store of model versions. Tracks stages (develop/staging/production). Why it matters in BFSI: Single source of truth. Controls promotion between environments.

Stage Promotion: Moving model through stages: develop → staging → production. Each stage has different testing requirements. Why it matters in BFSI: Prevents bad models from reaching customers. Governance checkpoint.

Rollback: Reverting to previous model version. Should be fast and automatic. Why it matters in BFSI: When new model fails, must recover quickly. 2 AM doesn't wait.

A/B Testing: Serving different model versions to different users. Measure performance difference. Why it matters in BFSI: Reduces risk. Test on 10% before full rollout.

Audit Trail: Complete log of model changes, deployments, and rollbacks. Who, when, what, why. Why it matters in BFSI: Regulatory requirement. Essential for incident investigation.

Governance Checkpoint: Approval gate before promotion. Requires human or automated validation. Why it matters in BFSI: Prevents risky deployments. Enforces separation of duties.

Staging Environment: Production-like environment for testing. Same data pipeline, same infrastructure. Different from production (can fail safely). Why it matters in BFSI: Catches 80% of issues before customers see them.

Fun Facts

On Rollback Speed: A major bank's model failed at 2 AM (wrong threshold pushed). Their manual rollback process: 2 hours to identify problem, 1 hour to find old model, 30 minutes to redeploy. Total: 3.5 hours downtime. They switched to MLflow. New rollback time: 2 minutes. Lessons learned: prepare for 2 AM. MLflow matters.

On A/B Testing Value: A fintech deployed new fraud model to 100% of traffic. Precision dropped 8%. Cost: $250K fraud loss before they noticed. Six months later, they used A/B testing: same new model deployed to 10% only. Precision drop detected in 4 hours (on 10% traffic). Automatic rollback triggered. Total cost: $2.5K (just on 10%). Lesson: test first, scale later. A/B testing saved $247.5K.

For Further Reading

MLflow Documentation (MLflow Team, 2025) - https://mlflow.org/docs - Official guide for model versioning, registry, and deployment.

Model Management in Production ML (Huyen, 2022) - https://huyenchip.com/2022/12/21/twelve-ml-mistakes.html - Common mistakes and patterns for model lifecycle management.

ML Governance and Compliance (Gartner, 2025) - https://www.gartner.com/en/documents/3899773 - Regulatory expectations for model versioning and audit trails.

Continuous Integration/Deployment for ML (Google MLOps, 2025) - https://cloud.google.com/architecture/mlops-continuous-delivery-and-automation - How to automate model promotion safely.

Federal Reserve Guidance on Model Risk Management (Federal Reserve, 2024) - https://www.federalreserve.gov/supervisionreg/srletters/sr2410.pdf - Regulatory requirements for model versioning and change control.

Next up: Week 9 Sunday explores why model lifecycle is fundamentally different from software lifecycle. Models degrade over time even when code stays the same. Understanding this difference changes how you manage production AI systems.

This is part of our ongoing work understanding AI deployment in financial systems. If you've implemented MLflow and used rollback to recover from bad deployments, share your timeline and lessons learned.

— Sanjeev @ AITechHive.com