Quick Recap: When you train fraud detection models on transaction data, you need customer identifiers for linking and investigation. But you can't store raw SSNs, credit card numbers, or email addresses in training sets. Presidio and spaCy automatically detect and redact PII (personally identifiable information) while keeping data operationally useful—replacing names with tokens but preserving transaction patterns.

Opening Hook

It's Tuesday morning. Your data team is preparing training data for a new fraud detection model. They've pulled 2 years of transaction history—millions of records with customer names, emails, phone numbers, account numbers, and payment card details.

Your compliance officer reviews the dataset. She spots it immediately: raw SSNs in a column labeled "verification_id." Full credit card numbers in transaction records. Customer email addresses linked to transaction amounts.

"This can't leave our secure environment," she says. "If this dataset is breached, every customer's identity is exposed. We're liable for breach notification, credit monitoring, regulatory fines."

Your data engineer responds: "But we need those identifiers to link transactions to customers during model investigation and fraud validation."

"Not the raw values. Redact everything. Replace names with tokens. Replace email with masked versions. Keep enough information to track back to investigations, but make the raw data unusable."

Your engineer groans: "That's weeks of custom regex and manual masking scripts. And we need to do this for every data pull."

This is when teams discover Presidio and spaCy. Combined, they automate PII detection and redaction. In minutes, not weeks.

Why This Tool Pattern

PII redaction is mandatory in regulated finance. It's not optional.

Why this matters in BFSI: Regulators require you to minimize sensitive data exposure in training datasets. If your training data is breached, you're liable. Beyond compliance, minimizing PII reduces your attack surface and simplifies data governance.

The gap most teams face: PII detection is manual. Regex patterns catch obvious cases (SSN format XXX-XX-XXXX), but miss context-dependent information (is "4111111111111111" a customer ID or a credit card?). Custom masking scripts are fragile—they break when data formats change.
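One signal that goes beyond format matching is a checksum. The sketch below is a plain-Python illustration of the Luhn check that payment card numbers satisfy; it is not Presidio's own code, and the function name `passes_luhn` is hypothetical. A 16-digit value that fails the check is unlikely to be a real card number, while one that passes still needs surrounding context to confirm.

```python
def passes_luhn(number: str) -> bool:
    """Return True if the digit string satisfies the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if len(digits) < 2:
        return False
    total = 0
    # Walk right to left, doubling every second digit
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


print(passes_luhn("4111111111111111"))  # well-known test card number
print(passes_luhn("4111111111111112"))  # same digits with the last one changed
```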

Production teams need:

Automatic PII detection across unstructured data

Context-aware identification (understanding that "12345 Main St" is an address, not a reference code)

Configurable redaction rules (replace with token, mask, delete, or anonymize)

Audit trails proving what was redacted and why

Presidio (Microsoft's PII detection engine) + spaCy (NLP for context) solve this, but only if integrated into your data pipeline as an early step—not bolted on afterward.

The trade-off: Redaction is imperfect. Occasionally, false positives mask legitimate data. Sometimes PII slips through. You need a validation layer.
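A validation layer can be as simple as re-scanning redacted output for patterns that should no longer appear. The sketch below assumes three illustrative regexes and a hypothetical `find_residual_pii` helper; a real check would cover the entity types present in your own data.

```python
import re

# Illustrative patterns only; extend for the formats present in your data
RESIDUAL_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b\d{13,19}\b"),
}


def find_residual_pii(redacted_text: str) -> dict:
    """Return {pattern_name: match_count} for patterns still present."""
    hits = {}
    for name, pattern in RESIDUAL_PATTERNS.items():
        count = len(pattern.findall(redacted_text))
        if count:
            hits[name] = count
    return hits


clean = "Customer: CUST_12345 Amount: 250.00 Merchant: Starbucks"
leaky = "Customer: CUST_12345 SSN: 123-45-6789 Amount: 250.00"
print(find_residual_pii(clean))  # nothing left to flag
print(find_residual_pii(leaky))  # the SSN slipped through
```

A non-empty result is a reason to block the dataset from leaving the secure environment until the miss is understood.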

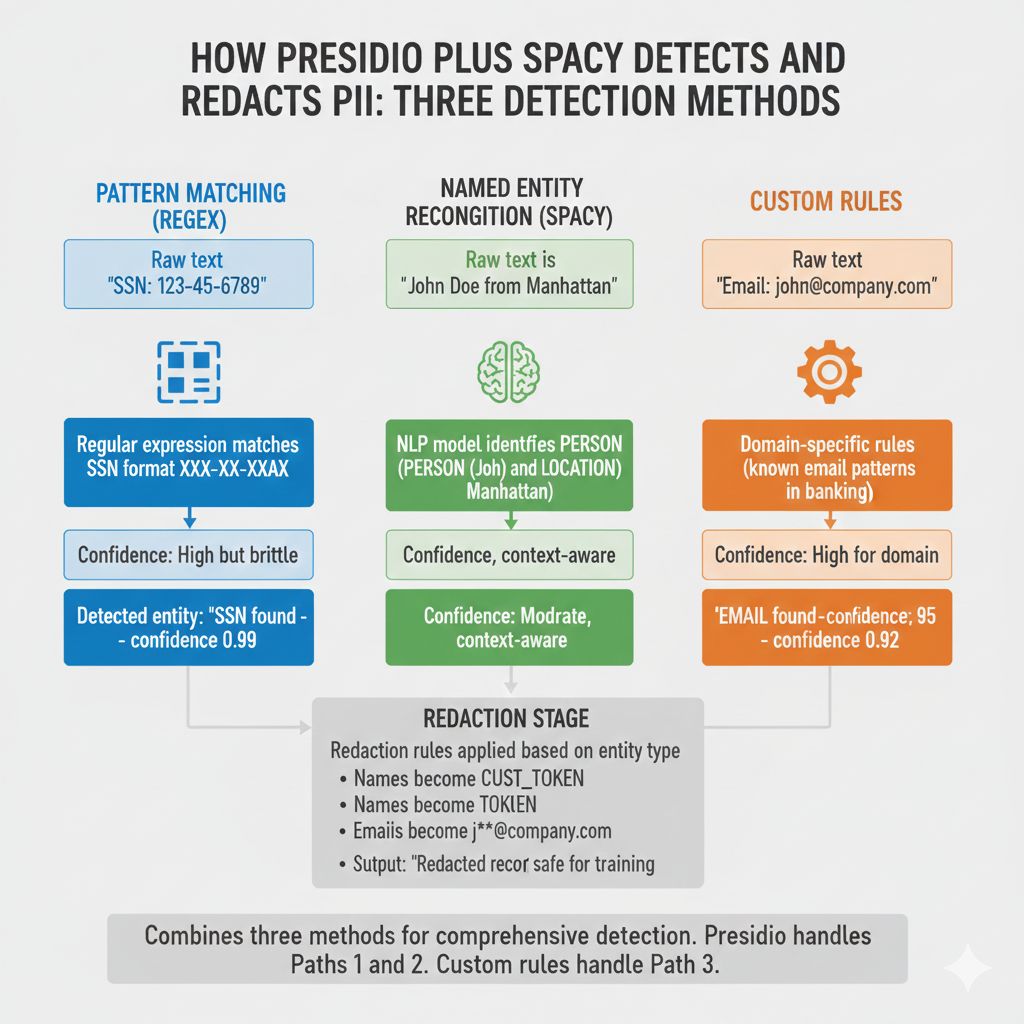

How This Works: The Detection and Redaction Flow

Production teams use a two-step system:

Step 1: Detection (Presidio)

Scans all text fields for PII patterns

Uses regular expressions (SSN, phone, credit card formats)

Uses NLP models (recognizes names, locations, organizations in context)

Returns list of detected entities with confidence scores

Step 2: Redaction (Custom Rules)

For each detected entity, apply redaction rule

Replace with token (john_doe → CUST_12345)

Mask (john@company.com → j***@company.com)

Delete (phone number removed entirely)

Aggregate (replace with statistical summary)

Here's the flow:

Raw transaction data

↓

Presidio detects PII

(Name: John Doe → confidence 0.95)

(Email: john@company.com → confidence 0.92)

(SSN: 123-45-6789 → confidence 0.99)

↓

Apply redaction rules

(Names → Replace with customer token)

(Emails → Mask domain)

(SSNs → Delete entirely)

↓

Redacted data safe for training

↓

Audit log: "Redacted 47 PII entities from this dataset"

This preserves transaction patterns (amounts, dates, merchant categories) while removing identifiable information.
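The detect, redact, and log steps can be sketched end to end in plain Python. This is a simplified stand-in that uses two regexes instead of Presidio so it runs without dependencies; `DETECTORS`, `RULES`, `mask_email`, and `redact_text` are hypothetical names.

```python
import re

DETECTORS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def mask_email(email: str) -> str:
    """Keep the first character and the domain, mask the rest."""
    local, _, domain = email.rpartition("@")
    return f"{local[0]}***@{domain}"


RULES = {
    "EMAIL": mask_email,
    "SSN": lambda value: "[REMOVED]",
}


def redact_text(text: str):
    """Detect, redact, and count entities. Returns (redacted_text, counts)."""
    counts = {}
    for entity_type, pattern in DETECTORS.items():
        def substitute(match, entity_type=entity_type):
            counts[entity_type] = counts.get(entity_type, 0) + 1
            return RULES[entity_type](match.group(0))
        text = pattern.sub(substitute, text)
    return text, counts


redacted, counts = redact_text("Email: john@company.com SSN: 123-45-6789 Amount: 250.00")
print(redacted)
print(f"Audit log: redacted {sum(counts.values())} PII entities {counts}")
```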

Building the Redaction Pipeline

Step 1: Install and Initialize Presidio

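Presidio ships with predefined recognizers for common PII types. spaCy supplies the NER model and linguistic context that Presidio uses for names, locations, and context scoring. Presidio's default configuration expects the en_core_web_lg model, so download whichever spaCy model you configure before first use.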

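from presidio_analyzer import AnalyzerEngine
from presidio_analyzer.nlp_engine import NlpEngineProvider
from presidio_anonymizer import AnonymizerEngine

# Tell Presidio which spaCy model to use for NLP context.
# Download it first: python -m spacy download en_core_web_sm
nlp_configuration = {
    "nlp_engine_name": "spacy",
    "models": [{"lang_code": "en", "model_name": "en_core_web_sm"}],
}
nlp_engine = NlpEngineProvider(nlp_configuration=nlp_configuration).create_engine()

# Initialize Presidio analyzer (handles detection)
analyzer = AnalyzerEngine(nlp_engine=nlp_engine, supported_languages=["en"])

# Initialize anonymizer (handles redaction)
anonymizer = AnonymizerEngine()

print("Redaction pipeline initialized")

Why this matters in production: Presidio runs locally (no external API calls), processes records quickly, and integrates directly into data pipelines.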

Step 2: Detect PII in Transaction Data

Here's how to scan a transaction record:

def detect_pii_in_record(transaction_record):
    """
    Scan transaction for PII entities.
    Returns list of detected entities with confidence scores.
    """
    # Combine all text fields into one document
    text_to_scan = f"""
    Customer: {transaction_record['customer_name']}
    Email: {transaction_record['email']}
    Phone: {transaction_record['phone']}
    Amount: {transaction_record['amount']}
    Merchant: {transaction_record['merchant']}
    """

    # Detect PII entities
    results = analyzer.analyze(
        text=text_to_scan,
        language="en",
        score_threshold=0.5  # Only entities with 50%+ confidence
    )

    detected_entities = []
    for result in results:
        detected_entities.append({
            'entity_type': result.entity_type,  # PERSON, EMAIL_ADDRESS, PHONE_NUMBER, etc.
            'text': text_to_scan[result.start:result.end],
            'confidence': result.score,
            'location': (result.start, result.end)  # Offsets into text_to_scan
        })
    return detected_entities

# Example usage
transaction = {
    'customer_name': 'John Doe',
    'email': 'john@company.com',
    'phone': '555-123-4567',
    'amount': 250.00,
    'merchant': 'Starbucks'
}

pii_found = detect_pii_in_record(transaction)
print(f"Detected {len(pii_found)} PII entities")

What Presidio detects: PERSON (names), EMAIL_ADDRESS, PHONE_NUMBER, CREDIT_CARD, US_SSN, IBAN_CODE, IP_ADDRESS, LOCATION, DATE_TIME, and more. These are Presidio's actual entity names; shorthand such as EMAIL or SSN will not match recognizer output. Organization names are not detected by the default recognizers.

Step 3: Apply Redaction Rules

Different data types need different redaction:

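import re

from presidio_anonymizer.entities import OperatorConfig, RecognizerResult

def redact_transaction_record(record, detected_pii):
    """
    Apply redaction rules based on PII type.
    Returns a copy of the record with its string fields redacted.
    """
    redaction_rules = {
        'PERSON': 'replace',       # Replace names with tokens
        'EMAIL_ADDRESS': 'mask',   # Mask leading characters (built-in mask works by position)
        'PHONE_NUMBER': 'delete',  # Delete phone entirely
        'CREDIT_CARD': 'delete',   # Delete card numbers
        'US_SSN': 'delete',        # Delete SSNs
        'IBAN_CODE': 'mask',       # Mask bank accounts
        'IP_ADDRESS': 'delete',    # Delete IPs
    }

    # Build anonymization operators (Presidio expects OperatorConfig objects).
    # Entity types without a rule fall back to Presidio's default replacement.
    operators = {}
    for entity_type, action in redaction_rules.items():
        if action == 'replace':
            operators[entity_type] = OperatorConfig(
                'replace', {'new_value': f'TOKEN_{entity_type}'}  # PERSON → TOKEN_PERSON
            )
        elif action == 'mask':
            operators[entity_type] = OperatorConfig(
                'mask', {'masking_char': '*', 'chars_to_mask': 10, 'from_end': False}
            )
        elif action == 'delete':
            operators[entity_type] = OperatorConfig('replace', {'new_value': ''})

    # Apply redaction field by field. Detection offsets refer to the combined
    # scan text, so locate each detected string inside the field and build
    # field-local results for the anonymizer.
    redacted = dict(record)
    for field, value in record.items():
        if not isinstance(value, str):
            continue
        field_results = [
            RecognizerResult(entity_type=e['entity_type'], start=m.start(),
                             end=m.end(), score=e['confidence'])
            for e in detected_pii
            for m in re.finditer(re.escape(e['text']), value)
        ]
        if field_results:
            redacted[field] = anonymizer.anonymize(
                text=value, analyzer_results=field_results, operators=operators
            ).text
    return redacted

# Example
redacted = redact_transaction_record(transaction, pii_found)
print(f"Original: {transaction['customer_name']}")
print(f"Redacted: {redacted['customer_name']}")

Why this flexibility: Different PII types require different handling. Names can become tokens (you need to link back). Credit cards should be deleted (no use in training). Emails can be masked partially. Note that a constant token such as TOKEN_PERSON hides the name but cannot link records to each other; linkage needs a token that is stable per customer.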
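If tokens must link records for the same customer, the replacement has to be stable per customer rather than a constant. One common approach, sketched here under the assumption that a secret key is held outside the training environment, is a keyed hash (HMAC). The key value, the `CUST_` prefix, and the `customer_token` name are illustrative.

```python
import hashlib
import hmac

# Assumption: in practice the key comes from a secrets manager, never from source code
SECRET_KEY = b"replace-with-a-managed-secret"


def customer_token(name: str, key: bytes = SECRET_KEY) -> str:
    """Map a name to a stable pseudonymous token using HMAC-SHA256."""
    normalized = " ".join(name.lower().split())  # collapse case and whitespace
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"CUST_{digest[:12]}"


print(customer_token("John Doe"))
print(customer_token("john  doe"))  # same customer, same token
print(customer_token("Jane Roe"))   # different customer, different token
```

Pseudonymous tokens are linkable by design, so the key and any mapping back to real identities need the same protection as the raw data.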

Step 4: Audit Logging

Always log what was redacted. Regulators want proof.

from datetime import datetime, timezone

def redact_with_audit_log(record, dataset_name):
    """
    Redact PII and log for compliance.
    """
    pii_detected = detect_pii_in_record(record)
    redacted_record = redact_transaction_record(record, pii_detected)

    audit_entry = {
        'timestamp': datetime.now(timezone.utc).isoformat(),
        'dataset': dataset_name,
        'record_id': record.get('id'),  # None if the record has no 'id' field
        'pii_entities_redacted': len(pii_detected),
        'entity_types': [e['entity_type'] for e in pii_detected],
        'redaction_actions': ['replace', 'mask', 'delete'],  # Actions configured in the pipeline
        'confidence_threshold': 0.5
    }

    # Log to audit database. log_to_audit_database is a placeholder for
    # your own audit sink; it is not defined in this article.
    log_to_audit_database(audit_entry)

    return redacted_record, audit_entry

Why auditing matters: When regulators ask "how did you handle PII in this dataset?", you show the audit log proving systematic redaction.
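An audit entry is more useful when it summarizes counts per entity type and never contains the redacted values themselves. A minimal sketch, assuming detections are dicts with an `entity_type` key; `build_audit_entry`, the dataset name, and the JSON-lines output are illustrative choices.

```python
import json
from collections import Counter
from datetime import datetime, timezone


def build_audit_entry(dataset_name, record_id, detections, threshold, now=None):
    """Summarize what was redacted without storing any PII values."""
    now = now or datetime.now(timezone.utc)
    by_type = Counter(d["entity_type"] for d in detections)
    return {
        "timestamp": now.isoformat(),
        "dataset": dataset_name,
        "record_id": record_id,
        "pii_entities_redacted": sum(by_type.values()),
        "entity_breakdown": dict(by_type),
        "confidence_threshold": threshold,
    }


detections = [
    {"entity_type": "PERSON", "text": "John Doe"},
    {"entity_type": "EMAIL_ADDRESS", "text": "john@company.com"},
]
entry = build_audit_entry("fraud_train_v1", "txn-001", detections, 0.5)
print(json.dumps(entry))  # one JSON line per record, ready to append to a log
```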

BFSI-Specific Patterns

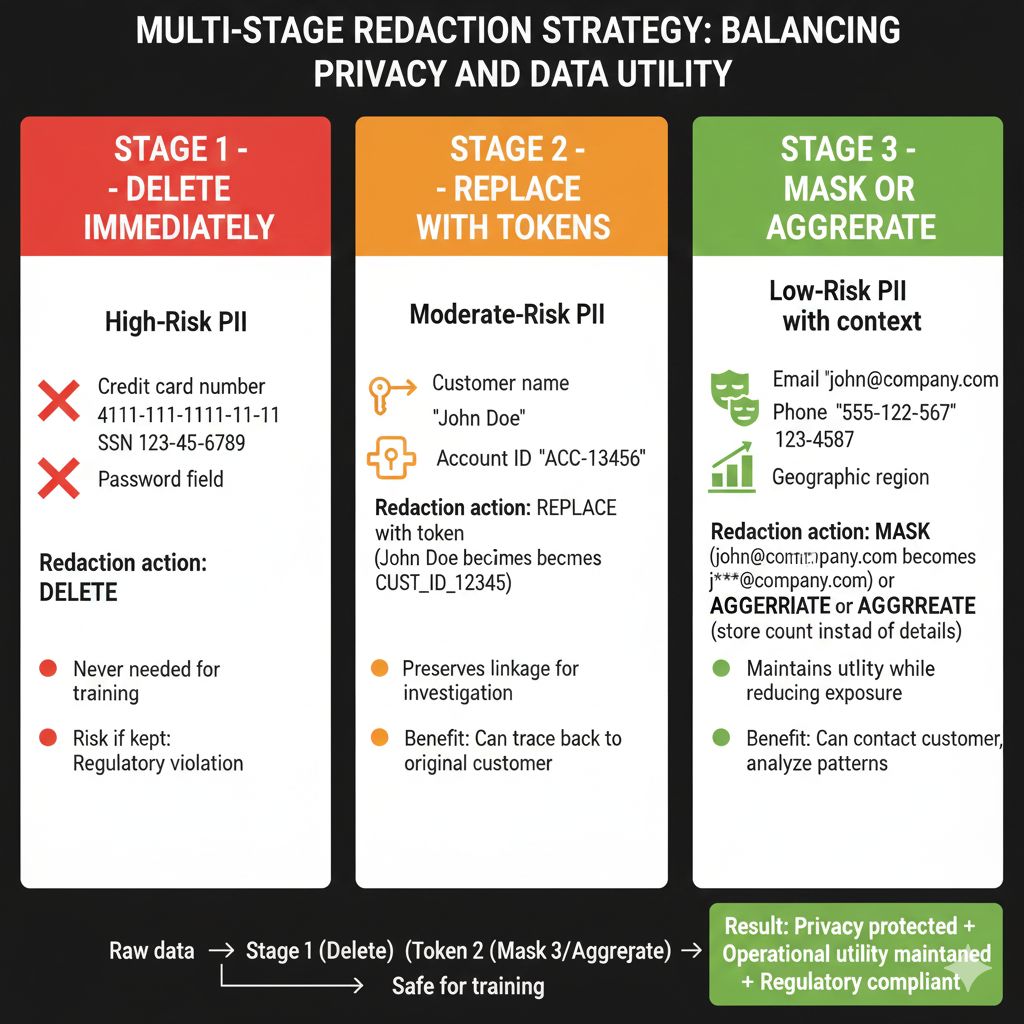

Pattern 1: Multi-Stage Redaction Strategy

Not all PII needs the same treatment:

Stage 1 (Strict): Immediately delete high-risk PII

Credit card numbers → Delete (never needed in training)

SSNs → Delete (never needed in training)

Account passwords → Delete (obvious)

Stage 2 (Controlled): Replace with tokens

Customer names → CUST_ID token (preserves linkage for investigation)

Email addresses → Masked version (keeps the domain for analysis without exposing the full address)

Stage 3 (Aggregated): Generalize sensitive context

Phone numbers → Delete individual numbers, store count (how many contact attempts)

IP addresses → Delete specific IPs, store geographic region (preserves a coarse location signal)

This approach maximizes data utility while minimizing exposure.
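Stage 3 can be sketched with the standard library. The example below truncates an IPv4 address to its /24 network as a coarse stand-in for a region lookup (a real pipeline would more likely map to a country or region with a GeoIP database) and keeps only a count of phone numbers. The function names are hypothetical.

```python
import ipaddress


def generalize_ip(ip: str, prefix: int = 24) -> str:
    """Replace a specific IPv4 address with its enclosing network."""
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network)


def summarize_phones(phone_numbers: list) -> dict:
    """Drop the numbers themselves and keep only how many there were."""
    return {"phone_count": len(phone_numbers)}


print(generalize_ip("203.0.113.57"))
print(summarize_phones(["555-123-4567", "555-987-6543"]))
```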

Pattern 2: False Positive Handling

Presidio sometimes flags legitimate data as PII. A legitimate account number might look like a credit card. A transaction reference might look like an SSN.

Handle false positives with a whitelist:

import re

def redact_with_whitelist(record, whitelist_patterns):
    """
    Detect PII but exclude known false positives.
    """
    pii_detected = detect_pii_in_record(record)

    # Filter out whitelisted patterns
    pii_filtered = [
        e for e in pii_detected
        if not any(pattern.match(e['text']) for pattern in whitelist_patterns)
    ]
    return redact_transaction_record(record, pii_filtered)

# Whitelist patterns for your domain
whitelist = [
    re.compile(r'^ACT\d{10}$'),  # Account numbers start with ACT
    re.compile(r'^REF\d{8}$')    # Reference numbers start with REF
]

Why this matters: Without whitelisting, you over-redact and lose legitimate features for your model.
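The filter can be exercised on its own with hand-written detections, which is a convenient way to unit test a whitelist before running it against real data. The sample detections below are made up for illustration, and `fullmatch` is used so a pattern must cover the whole detected string.

```python
import re

WHITELIST = [
    re.compile(r"ACT\d{10}"),  # internal account numbers
    re.compile(r"REF\d{8}"),   # transaction reference numbers
]


def filter_whitelisted(detections, patterns=WHITELIST):
    """Drop detections whose text fully matches a whitelisted pattern."""
    return [
        d for d in detections
        if not any(p.fullmatch(d["text"]) for p in patterns)
    ]


sample = [
    {"entity_type": "PERSON", "text": "John Doe"},
    {"entity_type": "PHONE_NUMBER", "text": "ACT1234567890"},
    {"entity_type": "US_SSN", "text": "REF12345678"},
]
kept = filter_whitelisted(sample)
print([d["text"] for d in kept])
```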

Pattern 3: Batch Redaction for Datasets

Redact entire datasets at scale:

import pandas as pd

def redact_dataset(input_csv, output_csv):
    """
    Read CSV, redact all PII, write clean CSV.
    Logs redaction statistics for compliance.
    """
    df = pd.read_csv(input_csv)

    redaction_stats = {
        'total_rows': len(df),
        'rows_with_pii': 0,
        'pii_entities_removed': 0,
        'entity_breakdown': {}
    }

    redacted_rows = []
    for idx, row in df.iterrows():
        record = row.to_dict()
        pii_detected = detect_pii_in_record(record)
        if pii_detected:
            redaction_stats['rows_with_pii'] += 1
            redaction_stats['pii_entities_removed'] += len(pii_detected)
            for entity in pii_detected:
                entity_type = entity['entity_type']
                redaction_stats['entity_breakdown'][entity_type] = \
                    redaction_stats['entity_breakdown'].get(entity_type, 0) + 1
        # Redact every row so the output keeps the same row count as the input
        redacted_rows.append(redact_transaction_record(record, pii_detected))

    # Write redacted data
    pd.DataFrame(redacted_rows).to_csv(output_csv, index=False)

    # Log statistics
    print(f"Redaction complete: {redaction_stats['pii_entities_removed']} PII entities removed")
    print(f"Entity breakdown: {redaction_stats['entity_breakdown']}")
    return redaction_stats

This row-by-row loop is fine for samples and moderately sized datasets. For millions of records, read the CSV in chunks and process chunks in parallel, since per-record NLP detection typically dominates the runtime.
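For files too large to hold in memory, the same loop can stream rows instead of loading the whole CSV. A sketch using only the standard library; `redact_fn` stands in for whatever per-record redaction function you use, and the toy redactor in the example is purely illustrative.

```python
import csv
import io


def redact_csv_stream(reader_file, writer_file, redact_fn):
    """Stream rows from one CSV to another, redacting each record."""
    reader = csv.DictReader(reader_file)
    writer = csv.DictWriter(writer_file, fieldnames=reader.fieldnames)
    writer.writeheader()
    rows_written = 0
    for row in reader:
        writer.writerow(redact_fn(row))
        rows_written += 1
    return rows_written


def toy_redactor(row):
    """Illustrative only: blank the email column, keep everything else."""
    return {**row, "email": ""}


source = io.StringIO("email,amount\njohn@company.com,250.00\njane@company.com,75.10\n")
target = io.StringIO()
count = redact_csv_stream(source, target, toy_redactor)
print(count)
print(target.getvalue())
```

With real files, open both with `newline=""` as the `csv` module documentation recommends.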

Common Mistakes

Mistake 1: Redacting Too Aggressively

❌ Deleting all customer identifiers including tokens

✅ Replace with tokens for linkage, delete only truly sensitive data (SSN, credit cards)

You need some way to link back to customers for investigation.

Mistake 2: Ignoring False Positives

❌ Accepting Presidio's detection as ground truth

✅ Validate detected PII, whitelist legitimate false positives

Your legitimate reference numbers might look like credit cards.

Mistake 3: Skipping Audit Logs

❌ Redacting silently, no record of what changed

✅ Log every redaction: entity type, action taken, timestamp

Regulators demand proof of systematic redaction.

Mistake 4: One-Size-Fits-All Rules

❌ Same redaction rules for all data types

✅ Different rules for names (token), emails (mask), credit cards (delete)

Not all PII needs the same treatment.

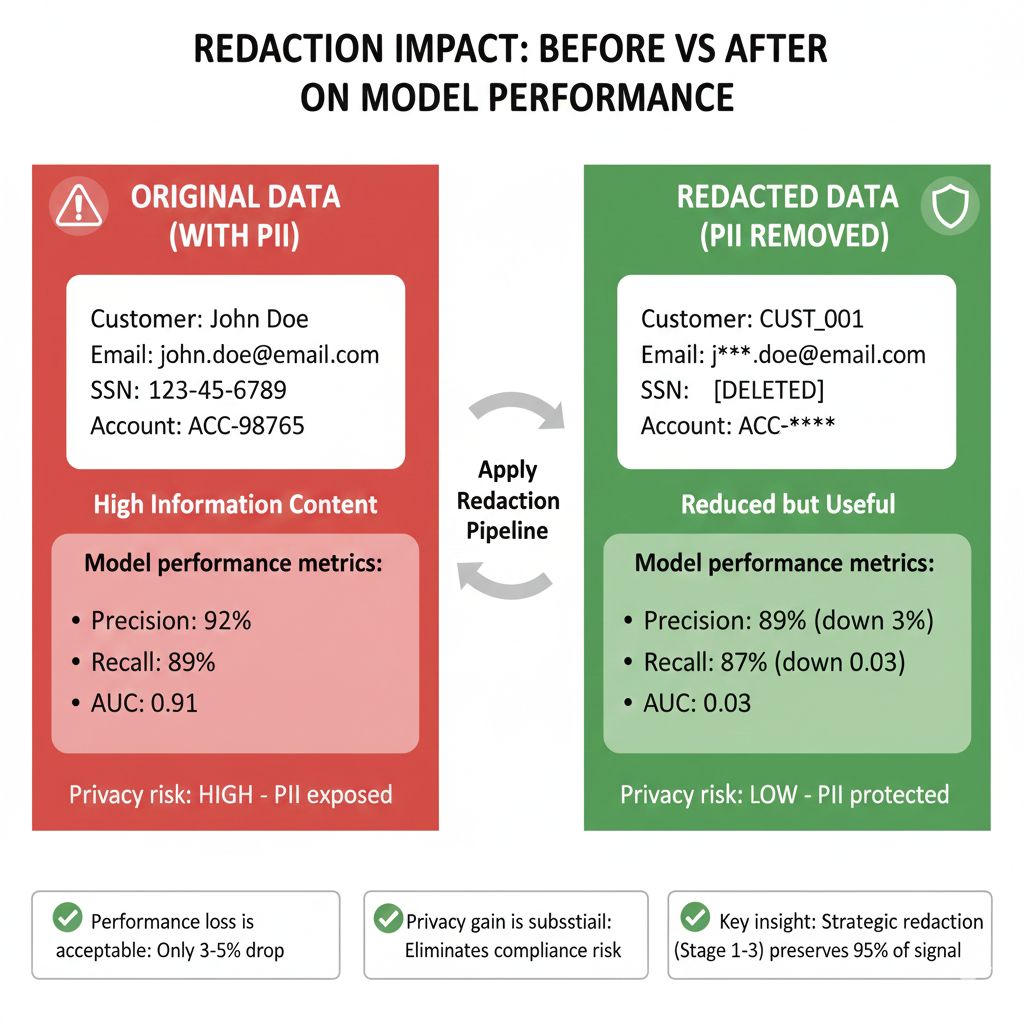

Mistake 5: Not Testing Redaction Impact

❌ Assuming redacted data still trains models well

✅ Compare model performance: original data vs redacted data

Sometimes over-redaction hurts model performance. Test it.

Looking Ahead: 2026-2030

2026: Automated PII detection becomes standard

Regulatory frameworks mandate systematic PII redaction

Teams without automated redaction pipelines face audit findings

Presidio-like tools become table stakes

2027-2028: Context-aware redaction improves

Models better understand whether data is truly PII

False positive rates drop significantly

Whitelisting becomes less necessary

2028-2029: Privacy-preserving alternatives emerge

Differential privacy makes redaction sometimes unnecessary

Synthetic data generation replaces redaction for some use cases

Federated learning eliminates need to centralize data

2030: Privacy-by-design becomes standard

Systems built with privacy from day one, not added later

Redaction becomes last resort, not first defense

Zero-trust architectures make sensitive data never centralized

HIVE Summary

Key takeaways:

Presidio automatically detects PII; spaCy adds NLP context for accurate identification

Multi-stage redaction strategy: Delete high-risk data (credit cards, SSN), replace with tokens (names for linkage), mask or aggregate (emails, phones)

Audit logging is non-negotiable—regulators demand proof of what PII was redacted and when

False positives require whitelisting—legitimate reference numbers might look like credit cards

Test redaction impact on model performance—over-redaction sometimes hurts accuracy

Start here:

If starting now: Install Presidio, run it on a sample dataset, identify false positives in your domain, build whitelist

If redacting manually: Automate with Presidio. Replace regex scripts with context-aware detection. Save engineering time.

If scaling up: Build batch redaction pipeline. Integrate into data workflows. Log everything for compliance.

Looking ahead (2026-2030):

Automated PII detection becomes regulatory requirement (not optional)

Better NLP models reduce false positives (whitelisting becomes less necessary)

Privacy-preserving alternatives emerge (differential privacy, synthetic data)

Privacy-by-design becomes standard (redaction as backup, not primary defense)

Open questions:

How to balance redaction (privacy) against data utility (model performance)?

When is redaction sufficient versus when do you need differential privacy?

Can you redact PII in unstructured text (documents, emails) as effectively as structured data?

Jargon Buster

PII (Personally Identifiable Information): Any data that can identify an individual—names, SSNs, emails, phone numbers, credit card numbers. Why it matters in BFSI: Must be redacted or deleted before using data for training.

Presidio: Microsoft's open-source PII detection engine. Uses regex patterns and NLP models to identify sensitive data. Why it matters in BFSI: Automates detection, handles context better than simple regex.

spaCy: NLP library providing named entity recognition (NER). Identifies names, locations, organizations in text context. Why it matters in BFSI: Supplies the name and location detection and the surrounding-word context Presidio uses to score ambiguous matches, such as whether "4111111111111111" is a credit card or a legitimate reference number.

Tokenization: Replacing sensitive data with placeholder tokens (john_doe → CUST_12345). Why it matters in BFSI: Preserves ability to link back to investigations while hiding raw identities.

Masking: Partially hiding data (john@company.com → j***@company.com). Why it matters in BFSI: Reduces exposure while keeping data partially readable for validation.

Redaction: Removing or replacing sensitive data with non-sensitive equivalent. Why it matters in BFSI: Required for compliance before releasing training data.

False Positive: Legitimate data incorrectly flagged as PII. Why it matters in BFSI: Causes over-redaction and data loss. Whitelisting handles this.

Audit Trail: Log of all redaction actions (what was redacted, when, why). Why it matters in BFSI: Regulators require proof of systematic PII handling.

Fun Facts

On Presidio's NLP Advantage: A major fintech discovered their custom regex-based redaction missed 30% of email addresses because they used unusual formats (firstname+lastname@domain). Presidio's built-in email recognizer caught them. The lesson: maintained, tested recognizers backed by NLP context beat hand-rolled pattern matching. Ad hoc regex is brittle.

On False Positives and Whitelisting: A US bank auto-redacted all 10-digit sequences thinking they were phone numbers. Problem: they had legitimate account reference numbers in that format. Models performed 40% worse after redaction. They built a whitelist for account numbers. Performance recovered. The lesson: validate redaction impact on your data. Over-redaction hurts models.

For Further Reading

Presidio Documentation (Microsoft, 2025) - https://microsoft.github.io/presidio/ - Official guide on PII detection and anonymization. Start here for implementation details and supported entity types.

spaCy Named Entity Recognition (spaCy Project, 2025) - https://spacy.io/usage/linguistic-features#named-entities - NLP context for improving PII detection. Essential for understanding how to combine Presidio + spaCy.

GDPR Article 5: Principles Relating to Processing of Personal Data (European Union, 2016) - https://gdpr-info.eu/art-5-gdpr/ - Sets out the data minimisation principle behind limiting PII in datasets. Required reading for compliance.

NIST Guidelines on De-Identification (National Institute of Standards and Technology, 2024) - https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-188.pdf - US federal guidance on PII removal and data minimization strategies.

Privacy Preserving ML with Differential Privacy (OpenMined, 2024) - https://courses.openmined.org/courses/take/our-privacy-preserving-machine-learning-course - Future approach beyond simple redaction. Understand where the field is headed.

Next up: Week 6 Wednesday explores drift detection—monitoring whether your model's behavior is changing over time and triggering reviews when patterns shift. Using Evidently AI to detect distribution changes and alert your team before predictions go bad.

This is part of our ongoing work understanding AI deployment in financial systems. If you've built redaction pipelines and dealt with false positives, share your whitelist strategies.

— Sanjeev @ AITechHive