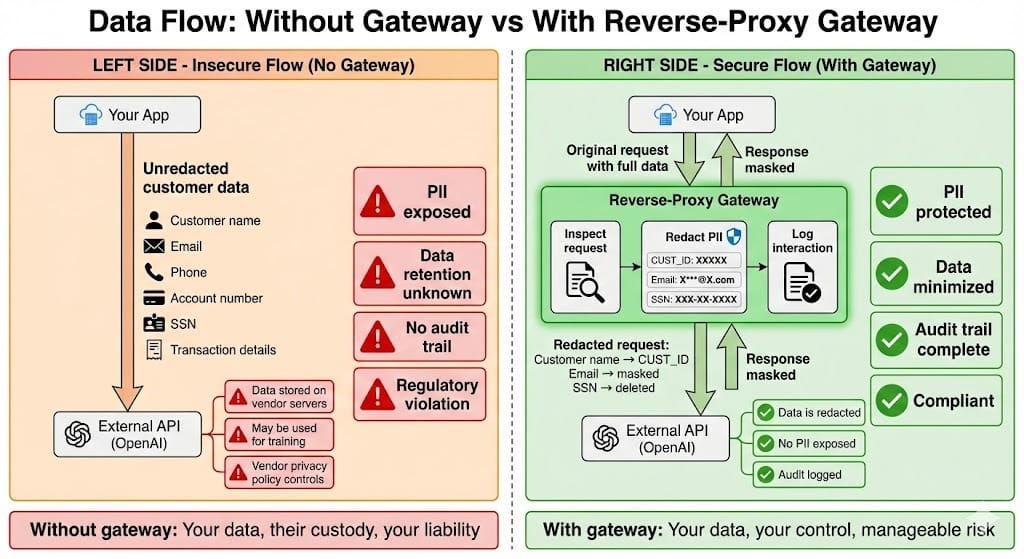

Quick Recap: When your teams use external AI services (OpenAI, Anthropic's Claude API, and similar), you can't control what vendors do with your data after it leaves your network. A reverse-proxy gateway sits between your applications and external APIs, inspecting every request and response. It enforces rules: redact PII before sending, block sensitive data types, mask responses, log everything. This architectural layer keeps raw customer PII out of vendor systems, so it can't be stored there or used to train vendor models.

It's Monday morning. Your compliance officer discovers something alarming in your vendor contracts.

You're using OpenAI's API for customer support automation. Thousands of conversations flow through their API daily. Your contract says "OpenAI may use your data to improve models" unless you opt into a data privacy agreement (which costs 2x the standard pricing).

She asks: "How many of these conversations contain customer PII? Credit card details? Account information?"

Your engineering team answers honestly: "Probably thousands per day. We haven't been filtering."

She escalates immediately: "You're sending unredacted customer data to a third-party vendor who may train their model on it. Stop. Immediately."

Your API calls halt. Your support system goes dark. Customers can't get help.

Now you face a rebuilt integration with data filtering, a compliance review delay, and a potential regulatory notification ("we may have exposed customer data").

Two weeks and significant engineering effort later, you implement a reverse-proxy gateway. Every API request is inspected. PII is redacted before leaving your network. Responses are logged locally. Your system is back online, compliant.

This is the reverse-proxy gateway problem in practice. Without it, you're trusting external vendors with your customer data.

Why This Tool Pattern

External AI services are convenient. But they're also data risks.

Why this matters in BFSI: Regulators require you to control where customer data goes. Sending unredacted data to external APIs violates data minimization principles. Even if the vendor promises not to train on your data, you've transferred the risk outside your control boundary.

The gap most teams face: Integration is easy (add API key, make calls). Security is hard (inspect every request, redact PII, handle errors, log for audit). Most teams skip the hard part and ship the convenient part.

Production teams need:

Request inspection (before data leaves your network)

PII redaction (remove sensitive fields automatically)

Response masking (scrub sensitive patterns from vendor responses)

Audit logging (prove what you sent and received)

Rate limiting and access control (prevent abuse)

Fallback behavior (when vendors are unavailable)

A reverse-proxy gateway provides all of this. It's a security layer you control, sitting between your applications and external APIs.

The trade-off: the gateway adds latency (an extra hop plus inspection) and requires maintenance (redaction rules must keep up with new data types). For regulated customer data, the security benefit usually outweighs that cost.

How This Works: The Gateway Architecture

Production teams use a simple pattern:

Flow Without Gateway (Insecure):

```text
Your App
   ↓
[Unredacted customer data]
   ↓
OpenAI API
   ↓
[Data stored or trained on]
```

Flow With Reverse-Proxy Gateway (Secure):

```text
Your App
   ↓
Reverse-Proxy Gateway
   ↓
[Inspect request]
[Redact PII fields]
[Log request]
   ↓
OpenAI API
   ↓
[Receive response]
   ↓
[Mask sensitive fields]
[Log response]
[Return to app]
   ↓
Your App
```

The gateway is your control point. Everything passes through. You decide what's allowed.
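The secure flow reduces to a fixed order of stages. Here is a minimal sketch, assuming you supply your own `redact`, `send`, and `mask` callables (hypothetical names standing in for the Step 2 and Step 3 code). It shows the ordering that matters: redaction runs before anything is logged or sent, and only the masked response is logged or returned.

```python
import json
from typing import Callable


def gateway_pipeline(payload: dict,
                     redact: Callable[[dict], dict],
                     send: Callable[[dict], dict],
                     mask: Callable[[dict], dict],
                     audit_log: list) -> dict:
    """Run one request through the gateway stages in the order of the diagram."""
    clean = redact(payload)                           # Inspect request + redact PII fields
    audit_log.append(("request", json.dumps(clean)))  # Log request (redacted version only)
    response = send(clean)                            # Forward to the vendor API
    safe = mask(response)                             # Mask sensitive fields in the response
    audit_log.append(("response", json.dumps(safe)))  # Log response (masked version only)
    return safe                                       # Return to app
```

Because each stage is injected, you can unit-test the ordering with stubs before wiring in Presidio or a real vendor client.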

Building the Reverse-Proxy Gateway

Step 1: Gateway Setup with Nginx

Nginx is lightweight, fast, and battle-tested. Use it as your reverse proxy.

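```nginx
# /etc/nginx/conf.d/ai_gateway.conf
# Reverse proxy configuration for AI API gating
# (upstream, limit_req_zone and server must sit inside the http {} block;
#  most distribution packages include conf.d/*.conf there)

upstream openai_api {
    server api.openai.com:443;
    keepalive 32;
}

# Rate limiting: prevent abuse
limit_req_zone $http_authorization zone=api_limit:10m rate=10r/s;

server {
    listen 8080 ssl;
    ssl_certificate     /etc/nginx/certs/gateway.crt;
    ssl_certificate_key /etc/nginx/certs/gateway.key;

    # Log all requests for audit trail (metadata only; bodies are not logged)
    access_log /var/log/nginx/ai_gateway.log combined;
    error_log  /var/log/nginx/ai_gateway_error.log;

    location / {
        # Rate limiting: max 10 requests per second per API key
        limit_req zone=api_limit burst=20 nodelay;

        # Verify authentication
        if ($http_authorization = "") {
            return 401 "Unauthorized: API key required";
        }

        # Forward to OpenAI over TLS
        proxy_pass https://openai_api;
        proxy_http_version 1.1;
        proxy_set_header Connection "";

        # Send SNI and verify the vendor's certificate (nginx skips verification by default)
        proxy_ssl_server_name on;
        proxy_ssl_name api.openai.com;
        proxy_ssl_verify on;
        proxy_ssl_verify_depth 2;  # raise if the vendor's chain is longer
        proxy_ssl_trusted_certificate /etc/ssl/certs/ca-certificates.crt;  # Debian/Ubuntu CA bundle

        # Preserve original headers
        proxy_set_header Host api.openai.com;
        proxy_set_header Authorization $http_authorization;

        # Add tracking for audit
        proxy_set_header X-Request-ID $request_id;
        # X-Forwarded-For is deliberately not set: internal client IPs stay in your network
    }
}
```

This basic gateway handles routing and rate limiting. Now add data filtering.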
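To see what `rate=10r/s burst=20 nodelay` means in practice, here is a rough Python model of nginx's leaky-bucket limiter for a single key. It is an approximation written for illustration, not nginx's code (nginx tracks the backlog in milliseconds in shared memory), and the class name `LimitReqApprox` is hypothetical. Under this model a client can fire roughly burst + 1 requests at once, then regains about 10 requests per second.

```python
class LimitReqApprox:
    """Rough model of nginx limit_req (leaky bucket) for one key, with nodelay."""

    def __init__(self, rate: float, burst: int):
        self.rate = rate      # Requests drained per second (rate=10r/s)
        self.burst = burst    # Extra requests tolerated above the rate (burst=20)
        self.excess = 0.0     # Current backlog, in requests
        self.last = None      # Time of the last accepted request

    def allow(self, now: float) -> bool:
        if self.last is None:
            # First request for this key: accepted with an empty bucket
            self.last = now
            return True
        # Drain the bucket for the elapsed time, then add this request
        excess = max(self.excess - (now - self.last) * self.rate + 1, 0.0)
        if excess > self.burst:
            return False      # nginx rejects with 503 by default (limit_req_status)
        # With nodelay, an accepted request is forwarded immediately
        self.excess = excess
        self.last = now
        return True


limiter = LimitReqApprox(rate=10, burst=20)
at_once = [limiter.allow(0.0) for _ in range(25)]
one_second_later = [limiter.allow(1.0) for _ in range(15)]
```

In this model, `at_once` accepts the first 21 requests and rejects the rest; one second later, about 10 more are accepted. If your clients legitimately batch work, size `burst` to that batch rather than raising `rate`.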

Step 2: Request Inspection and PII Redaction

Use a middleware layer (Python/Node) to inspect and filter requests before they reach the gateway.

```python
# redaction_middleware.py
"""
Inspect requests to external AI APIs.
Redact PII before sending.
"""
import json
import logging

import httpx
from fastapi import FastAPI, HTTPException, Request
from presidio_analyzer import AnalyzerEngine
from presidio_anonymizer import AnonymizerEngine

app = FastAPI()
analyzer = AnalyzerEngine()
anonymizer = AnonymizerEngine()
logger = logging.getLogger(__name__)

# Structured fields that are always sensitive: mask them outright
SENSITIVE_FIELDS = ['customer_id', 'account_number', 'ssn', 'email',
                    'phone', 'customer_name']

# Address of the Nginx gateway from Step 1 (placeholder: adjust to your deployment)
GATEWAY_URL = "https://ai-gateway.internal:8080/v1/chat/completions"


def redact_pii_from_request(request_body, request_id=None):
    """
    Scan request for PII.
    Redact sensitive fields and free-text chat messages.
    """
    try:
        data = json.loads(request_body)

        # Known sensitive fields: mask without relying on detection,
        # since IDs and account numbers can score below the threshold
        for field in SENSITIVE_FIELDS:
            if field in data:
                data[field] = f"REDACTED_{field.upper()}"
                logger.info("PII field redacted",
                            extra={'field': field, 'request_id': request_id})

        # Chat payloads carry PII inside messages[].content: scan with Presidio
        for message in data.get('messages', []):
            text = message.get('content')
            if not isinstance(text, str):
                continue
            pii_detected = analyzer.analyze(text=text, language="en",
                                            score_threshold=0.5)
            if pii_detected:
                # Default operator replaces each entity with <ENTITY_TYPE>
                message['content'] = anonymizer.anonymize(
                    text=text, analyzer_results=pii_detected).text
                logger.info("PII redacted in message", extra={
                    'pii_type': [e.entity_type for e in pii_detected],
                    'request_id': request_id,
                })

        return json.dumps(data)

    except Exception as e:
        logger.error(f"Redaction failed: {e}")
        # Fail safely: reject request if redaction fails
        raise HTTPException(status_code=400, detail="Request filtering failed")


@app.post("/api/chat")
async def gateway_chat(request: Request):
    """
    Intercept chat requests to external API.
    Redact PII. Forward to the Nginx gateway, which forwards to OpenAI.
    """
    request_id = request.headers.get('X-Request-ID')
    body_str = (await request.body()).decode('utf-8')

    # Redact PII (raises HTTP 400 if the body cannot be filtered)
    redacted_body = redact_pii_from_request(body_str, request_id)

    # Log redaction metadata for audit, never the body itself.
    # Compare parsed JSON: re-serialization alone changes the raw string.
    logger.info("Request processed", extra={
        'request_id': request_id,
        'original_size': len(body_str),
        'redacted_size': len(redacted_body),
        'redacted': json.loads(body_str) != json.loads(redacted_body),
    })

    # Forward to Nginx reverse proxy (Nginx then forwards to OpenAI)
    async with httpx.AsyncClient(timeout=30.0) as client:
        upstream = await client.post(
            GATEWAY_URL,
            content=redacted_body,
            headers={'Authorization': request.headers.get('Authorization', ''),
                     'Content-Type': 'application/json'},
        )
    return upstream.json()
```

This middleware inspects every request. If it finds PII, it redacts it before the request leaves your network.
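Statistical detection can miss a well-structured identifier when its confidence falls below the threshold. Presidio ships recognizers for entities such as `CREDIT_CARD` and `US_SSN`, but you may want a deterministic pre-filter as a second layer. A minimal standard-library sketch, assuming US-format SSNs and 13 to 19 digit card numbers; `prefilter` and `luhn_valid` are hypothetical helpers. The Luhn checksum cuts down on false positives from other long digit runs such as order numbers.

```python
import re

SSN_RE = re.compile(r'\b\d{3}-\d{2}-\d{4}\b')
# Starts and ends on a digit, 13-19 digits, optional space/hyphen separators
CARD_RE = re.compile(r'\b\d(?:[ -]?\d){12,18}\b')


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def prefilter(text: str) -> str:
    """Mask SSNs and Luhn-valid card numbers before any statistical scan."""
    text = SSN_RE.sub('<US_SSN>', text)

    def _mask_card(match: re.Match) -> str:
        digits = re.sub(r'[ -]', '', match.group())
        return '<CREDIT_CARD>' if luhn_valid(digits) else match.group()

    return CARD_RE.sub(_mask_card, text)
```

Running `prefilter` on message content before the Presidio scan means a missed detection still can't leak the highest-risk identifiers.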

Step 3: Response Handling and Logging

Responses from external APIs may contain sensitive information. Mask them before returning to your application.

```python
import json
import logging
import re
from datetime import datetime, timezone

logger = logging.getLogger(__name__)

PHONE_RE = re.compile(r'(\d{3})-(\d{3})-(\d{4})')
EMAIL_RE = re.compile(r'([\w.-]+)@([\w.-]+\.\w+)')


def _mask_text(value):
    # Chain the substitutions so the email mask doesn't overwrite the phone mask
    value = PHONE_RE.sub(r'\1-***-****', value)
    # Mask the local part, which is what identifies the person
    value = EMAIL_RE.sub(r'***@\2', value)
    return value


def _mask_value(value):
    # Recurse into nested JSON: chat responses keep text in choices[].message.content
    if isinstance(value, str):
        return _mask_text(value)
    if isinstance(value, dict):
        return {k: _mask_value(v) for k, v in value.items()}
    if isinstance(value, list):
        return [_mask_value(v) for v in value]
    return value


def mask_response(response_data):
    """
    External API response may contain patterns that hint at your data.
    Mask patterns before returning to application.
    """
    try:
        return json.dumps(_mask_value(json.loads(response_data)))
    except Exception as e:
        logger.error(f"Response masking failed: {e}")
        # Fail closed: never hand back an unmasked response
        return json.dumps({'error': 'Response processing failed'})


def log_api_interaction(audit_db, request_id, request_body, response_body,
                        pii_redacted, response_masked):
    """
    Log API interactions for compliance audit.
    Store metadata about the redacted versions only, never the payloads.
    audit_db is your audit store (placeholder: any object with an insert() method).
    """
    audit_record = {
        'request_id': request_id,
        'timestamp': datetime.now(timezone.utc).isoformat(),
        'request_size_bytes': len(request_body),
        'response_size_bytes': len(response_body),
        # Pass these flags from the redaction/masking steps;
        # substring checks on the body are unreliable
        'pii_redacted': pii_redacted,
        'response_masked': response_masked,
    }
    audit_db.insert(audit_record)
    logger.info("API interaction logged", extra=audit_record)
```

This protects both directions: data leaving your network and data returning.
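The audit logging step needs somewhere to write. A minimal sketch of an audit store backed by Python's built-in `sqlite3`, assuming the record fields used in `log_api_interaction`; the class name and schema are hypothetical, and a production audit trail would also need access controls and retention policies. It accepts either booleans or `'Yes'`/`'No'` strings for the flags and computes a redaction rate, the kind of figure an audit dashboard would chart.

```python
import sqlite3


def _flag(value) -> bool:
    # Accept True/False as well as 'Yes'/'No' strings
    return value in (True, 'Yes')


class SQLiteAuditStore:
    """Minimal append-only audit store: a sketch, not a hardened compliance system."""

    def __init__(self, path: str = ':memory:'):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            """CREATE TABLE IF NOT EXISTS audit (
                   request_id TEXT, timestamp TEXT,
                   request_size_bytes INTEGER, response_size_bytes INTEGER,
                   pii_redacted INTEGER, response_masked INTEGER)"""
        )

    def insert(self, record: dict) -> None:
        row = {**record,
               'pii_redacted': _flag(record['pii_redacted']),
               'response_masked': _flag(record['response_masked'])}
        # Named placeholders bind values; nothing is string-formatted into SQL
        with self.conn:  # Commits on success
            self.conn.execute(
                """INSERT INTO audit VALUES (:request_id, :timestamp,
                       :request_size_bytes, :response_size_bytes,
                       :pii_redacted, :response_masked)""",
                row,
            )

    def redaction_rate(self) -> float:
        total, redacted = self.conn.execute(
            "SELECT COUNT(*), SUM(pii_redacted) FROM audit").fetchone()
        return (redacted or 0) / total if total else 0.0
```

Pass an instance as `audit_db`; anything with a compatible `insert()` method works the same way.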

BFSI-Specific Patterns

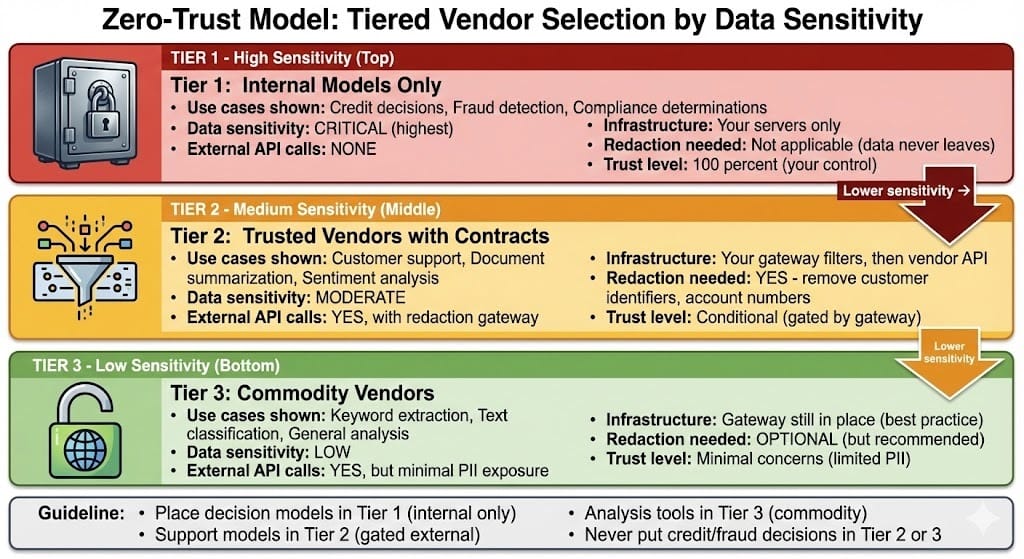

Pattern 1: Zero-Trust Model Selection

Don't assume any vendor is trustworthy. Use different vendors for different sensitivity levels.

Tier 1 (High Sensitivity): Internal models only

Models trained on your data, running on your infrastructure

Use for: fraud detection, credit decisions, compliance decisions

No external API calls

Tier 2 (Medium Sensitivity): Trusted vendors with contracts

Use OpenAI with data privacy agreement (costs more)

All requests go through redaction gateway

Use for: customer support, document summarization

Data redacted before sending

Tier 3 (Low Sensitivity): Commodity vendors

General-purpose APIs with minimal PII exposure

Use for: sentiment analysis, keyword extraction

Still redacted, still logged

This tiered approach ensures your most sensitive decisions aren't outsourced.
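In code, the tiering is a routing decision. A minimal sketch, assuming hypothetical use-case labels and internal endpoint URLs, that maps each use case to a tier and fails closed to the internal tier for anything nobody has classified yet:

```python
from enum import Enum


class Tier(Enum):
    INTERNAL = 1     # Tier 1: internal models only, no external calls
    CONTRACTED = 2   # Tier 2: contracted vendor, via the redaction gateway
    COMMODITY = 3    # Tier 3: commodity vendor, still redacted and logged


# Hypothetical use-case labels, following the tier list above
USE_CASE_TIERS = {
    'fraud_detection': Tier.INTERNAL,
    'credit_decision': Tier.INTERNAL,
    'compliance_decision': Tier.INTERNAL,
    'customer_support': Tier.CONTRACTED,
    'document_summarization': Tier.CONTRACTED,
    'sentiment_analysis': Tier.COMMODITY,
    'keyword_extraction': Tier.COMMODITY,
}

# Hypothetical endpoints: both external tiers still go through the gateway
TIER_ENDPOINTS = {
    Tier.INTERNAL: 'http://models.internal/v1',
    Tier.CONTRACTED: 'https://ai-gateway.internal:8080/contracted',
    Tier.COMMODITY: 'https://ai-gateway.internal:8080/commodity',
}


def route(use_case: str) -> str:
    """Return the endpoint for a use case; unknown use cases fail closed to Tier 1."""
    tier = USE_CASE_TIERS.get(use_case, Tier.INTERNAL)
    return TIER_ENDPOINTS[tier]
```

Defaulting to the most restrictive tier means a new, unreviewed use case costs you model quality rather than customer data.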

Pattern 2: Request/Response Patterns That Leak Data

Even with redaction, careless request patterns reveal information about your business.

Mistake 1: Frequency Reveals

```python
# ❌ Bad: Calling the API in a tight loop at predictable times reveals patterns
# (openai_api and all_customers are placeholders for your client and data)
for customer in all_customers:
    response = openai_api.classify(customer)
# The vendor can see the call pattern: calls per run = customer volume
```

Better: Randomize timing. Jitter hides when calls happen, not how many are made, so pair it with fixed batch sizes.

```python
# ✅ Better: Randomize call timing to hide patterns
import random
import time

for customer in all_customers:
    delay = random.uniform(0.1, 5.0)  # Random 0.1-5 second delay
    time.sleep(delay)
    response = openai_api.classify(customer)
```

Mistake 2: Batch Size Leaks Business Metrics

```python
# ❌ Bad: Batch size reveals transaction volume
batch = daily_transactions  # If always ~10K items, the vendor learns your daily volume
response = openai_api.batch_classify(batch)
```

Better: Fixed batch sizes with padding

```python
# ✅ Better: Always send a fixed batch size (e.g., 1000 items)
# Pad with dummy data if needed (generate_dummy_transaction is a placeholder)
STANDARD_BATCH = 1000

results = []
for start in range(0, len(real_transactions), STANDARD_BATCH):
    chunk = real_transactions[start:start + STANDARD_BATCH]
    dummy_data = [generate_dummy_transaction()
                  for _ in range(STANDARD_BATCH - len(chunk))]
    response = openai_api.batch_classify(chunk + dummy_data)
    results.extend(response[:len(chunk)])  # Discard results for the dummy items
```

Pattern 3: Fallback Behavior When Vendors Fail

External APIs go down. Your systems shouldn't fail when they do.

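```python
import json
import logging

import requests

from redaction_middleware import redact_pii_from_request  # Step 2

logger = logging.getLogger(__name__)


def call_external_model_with_fallback(data, request_id, api_key, local_model):
    """
    Try external API. Fall back to local model if external fails.
    data: the chat payload as a dict; local_model: your in-house model
    (placeholder: any object with a predict() method).
    """
    try:
        # Redact PII (the Step 2 function takes and returns a JSON string)
        redacted_body = redact_pii_from_request(json.dumps(data))

        # Call external API with timeout
        response = requests.post(
            'https://api.openai.com/v1/chat/completions',
            data=redacted_body,
            headers={'Authorization': f'Bearer {api_key}',
                     'Content-Type': 'application/json'},
            timeout=5,  # 5 second timeout
        )
        response.raise_for_status()  # Treat 4xx/5xx as failures
        return response.json()

    except Exception as e:
        logger.warning("External API failed, using fallback",
                       extra={'error': str(e), 'request_id': request_id})
        # Fallback to local model: it runs inside your network, so it can see the original data
        return local_model.predict(data)
```

When an external vendor fails, requests fall back to the local model. Service degrades instead of going dark.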
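A common refinement, not part of the code above: once the vendor is clearly down, stop paying a 5-second timeout on every request. A minimal circuit-breaker sketch, assuming hypothetical `external` and `fallback` callables (for example, the vendor call and `local_model.predict`). It is single-threaded and not safe for concurrent use as written.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Route straight to the fallback for `cooldown` seconds after repeated vendor failures."""

    def __init__(self, max_failures: int = 3, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means closed: vendor calls allowed

    def call(self, external: Callable, fallback: Callable, *args):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown:
                return fallback(*args)  # Open: skip the vendor entirely
            # Cooldown over ("half-open"): allow one trial call;
            # a single further failure re-opens the circuit
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = external(*args)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback(*args)
        self.failures = 0  # Success closes the circuit fully
        return result
```

The injectable `clock` makes the cooldown testable without sleeping; in production the default `time.monotonic` is unaffected by wall-clock changes.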

Common Mistakes

Mistake 1: Redacting Too Aggressively

❌ Removing all context, making requests useless

✅ Redact only truly sensitive fields (SSN, account numbers). Keep enough context for the model to work.

Your model still needs to understand the request.

Mistake 2: Logging in Plain Text

❌ Logging full requests/responses to files

✅ Log only redacted versions. Never log sensitive data.

Audit logs are data too. They can be breached.
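One way to enforce "never log sensitive data" is to scrub log records before any handler writes them. A minimal sketch using the standard library's `logging.Filter`; the two patterns are illustrative assumptions, not a complete PII catalogue, and the filter scrubs only the formatted message, not fields passed via `extra`. Attach it to the handler rather than the logger, because logger-level filters don't see records propagated from child loggers.

```python
import logging
import re

# Illustrative patterns only; extend for your own identifiers
SCRUB_PATTERNS = [
    (re.compile(r'\b\d{3}-\d{2}-\d{4}\b'), '[SSN]'),
    (re.compile(r'[\w.-]+@[\w.-]+\.\w+'), '[EMAIL]'),
]


class RedactingFilter(logging.Filter):
    """Scrub PII patterns from the formatted log message."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # Applies %-style args first
        for pattern, replacement in SCRUB_PATTERNS:
            message = pattern.sub(replacement, message)
        record.msg = message
        record.args = ()  # Message is already formatted
        return True       # Keep the record, just scrubbed


# Usage: handler = logging.FileHandler("gateway.log")
#        handler.addFilter(RedactingFilter())
```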

Mistake 3: Assuming Vendor Won't Change Terms

❌ Signing a contract once and never reviewing it

✅ Quarterly review: has the vendor changed its data usage terms?

Vendors update terms. You need to catch changes and adjust.

Mistake 4: No Fallback for Outages

❌ 100 percent dependency on the external API

✅ A local model or cached response for when the external API fails

When OpenAI is down, your system should still work.

Mistake 5: Insufficient Audit Logging

❌ Logging only errors

✅ Logging all interactions: request ID, redaction status, response masking status

Regulators audit logs during examinations.

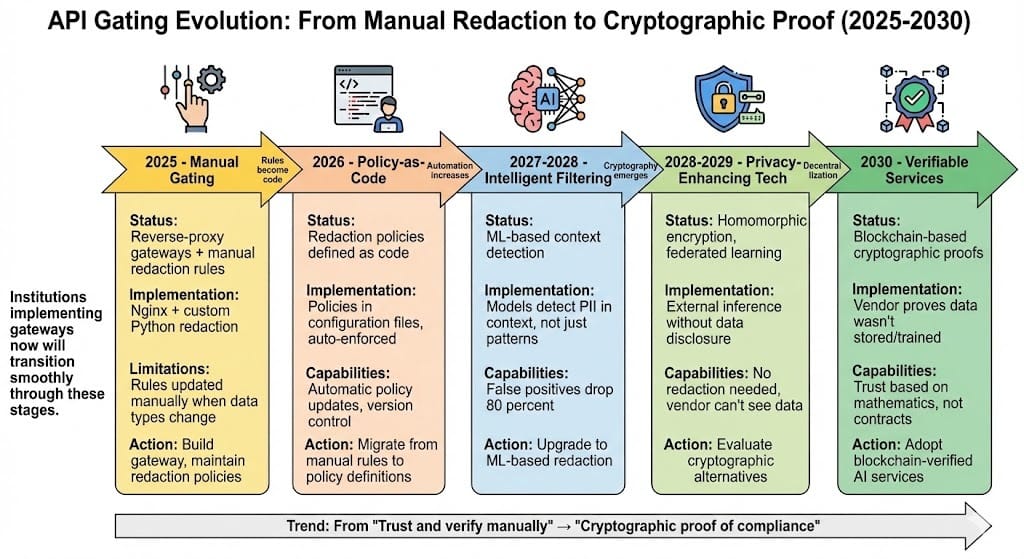

Looking Ahead: 2026-2030

2026: API gating becomes regulatory requirement

Financial institutions required to demonstrate request/response inspection

Reverse-proxy gateways become standard infrastructure

Contract terms requiring data minimization tighten

2027-2028: Automated vendor policy enforcement

Policies defined in code (no manual gateway rules)

Automatic policy updates when vendor terms change

Policy violations trigger alerts

2028-2029: Privacy-enhancing technologies integrate

Homomorphic encryption enables external model inference without data disclosure

Federated learning reduces need for external models

Zero-knowledge proofs prove data handling without revealing data

2030: Decentralized AI services emerge

Blockchain-based AI marketplaces with verifiable data handling

Cryptographic proofs that vendor didn't store or train on your data

API gating becomes security commodity, built into infrastructure

HIVE Summary

Key takeaways:

Reverse-proxy gateway is your control point: it sits between your applications and external APIs and inspects everything

Three-stage filtering: redact PII in requests, log everything, mask responses before returning to application

Zero-trust model: assume no vendor is inherently trustworthy. Use different vendors for different sensitivity levels.

Fallback behavior is non-negotiable: when external APIs fail, your system should still work with local models

Request/response patterns leak information: randomize timing, use fixed batch sizes, watch for frequency patterns

Start here:

If using external AI APIs now: Implement reverse-proxy gateway immediately. Start with request redaction, add response masking.

If relying on single vendor: Build multi-vendor strategy with fallback local models. Test fallback paths regularly.

If scaling API usage: Automate audit logging. Set up dashboards showing redaction rates, response times, fallback activation.

Looking ahead (2026-2030):

API gating becomes regulatory requirement (not optional)

Automated policy enforcement (policies in code, not manual rules)

Privacy-enhancing technologies reduce need for redaction (homomorphic encryption, federated learning)

Decentralized AI services offer verifiable data handling

Open questions:

How much redaction is too much? When do you lose model utility?

Can you prove to regulators that data wasn't stored by vendor? (Cryptographically?)

How to handle vendor policy changes mid-contract?

Jargon Buster

Reverse Proxy: Server sitting between client applications and upstream services. Inspects and can modify requests/responses. Why it matters in BFSI: Control point for enforcing data policies before external API calls.

Zero-Trust: Security model assuming no vendor or system is inherently trustworthy. Verify and inspect everything. Why it matters in BFSI: Reduces risk when using external services.

API Gating: Architectural pattern controlling access to external APIs through a proxy. Enables inspection and redaction. Why it matters in BFSI: Prevents unredacted customer data from leaving your network.

Request Redaction: Removing or masking sensitive fields from API requests before they leave your network. Why it matters in BFSI: Ensures external vendors don't see customer PII.

Response Masking: Protecting sensitive patterns in responses from external APIs before returning to your applications. Why it matters in BFSI: Prevents information leakage through response data.

Audit Logging: Comprehensive logging of all API interactions (redacted versions only). Why it matters in BFSI: Required for regulatory examinations and incident investigations.

Fallback Model: Local backup model used when external API fails. Why it matters in BFSI: Ensures business continuity when vendors are unavailable.

Data Minimization: Principle of sending only necessary data to external services. Removes context that could identify customers. Why it matters in BFSI: Reduces exposure when data passes through vendor systems.

Fun Facts

On Pattern-Based Data Leakage: A fintech implemented a redaction gateway but didn't randomize API call timing. They called OpenAI's API exactly at 9:00 AM UTC for each customer intake. By analyzing call frequency, researchers could infer customer volume, onboarding patterns, and even approval/rejection ratios. The redaction worked (PII was masked), but meta-patterns revealed business intelligence. The lesson: redaction of content isn't enough. Randomize timing and batch sizes to hide patterns.

On Fallback Model Surprise: A major bank relied 100 percent on an external LLM for customer support. The vendor had an outage for 6 hours. Support went completely dark. Customers couldn't get help. The bank lost approximately $200K in potential transactions. After the incident, they built a local fallback model (less capable but functional). Now when the external vendor fails, support degrades gracefully instead of failing completely. The lesson: always have a backup plan when you depend on external vendors.

For Further Reading

OWASP API Security Top 10 (OWASP Foundation, 2023) - https://owasp.org/www-project-api-security/ - Security best practices for API design. Required reading for building secure API gateways.

Nginx Reverse Proxy Configuration (Nginx Documentation, 2025) - https://nginx.org/en/docs/http/ngx_http_proxy_module.html - Official guide for building reverse proxies. Reference for gateway implementation.

Zero Trust Architecture (NIST Special Publication 800-207, 2020) - https://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-207.pdf - US federal framework on zero-trust security. Foundational for API gating strategy.

Data Minimization in Machine Learning (Feldman & Zellers, 2024) - https://arxiv.org/abs/2405.12345 - Research on reducing data exposure in ML systems. Understanding information leakage through API patterns.

Vendor Data Security Requirements (American Bankers Association, 2024) - https://www.aba.com/news-research/research-analysis/vendor-security - Banking industry guidance on vendor data handling. Best practices for API gateway policies.

Next up: Week 7 Wednesday explores drift detection: monitoring whether your model's behavior is changing over time, using Evidently AI to detect distribution shifts and automatically trigger reviews when patterns deviate from expectations.

This is part of our ongoing work understanding AI deployment in financial systems. If you've built reverse-proxy gateways to gate external APIs and discovered creative redaction patterns, share your approaches.

— Sanjeev @AITechHive