Quick Recap: Credit scoring is supervised learning's original killer app in finance. A bank has 20 years of loan history: who defaulted, who repaid. Supervised learning finds patterns in that data (income level, credit history, debt-to-income ratio) that predict future default. The model learns: "90% of applicants with this profile repay; 10% default." But today's credit models face new regulatory requirements (2024-2026): explainability mandates, fairness audits, and real-time model monitoring that older-generation models weren't built for. Understanding how credit scoring works is foundational to understanding AI governance in regulated environments.

It's 1993 at a major bank. A credit officer manually reviews a loan application: income, employment history, credit history, assets. The officer makes a judgment call: "This looks like a good risk. Approve for $100,000."

Three decades later (2024), the process hasn't fundamentally changed. A machine learning model reviews the same factors: income, employment history, credit history, assets. The model computes a probability: "This applicant has a 15% default probability. Our threshold is 20%. Approve for $100,000."

The model is doing what the credit officer did, but faster, at scale, and with measurable performance (we can track: "How often did we approve someone who defaulted?").

But here's the modern twist: A regulator asks, "Why did you approve this loan?" The credit officer could say, "The applicant had 15 years at the same employer, no missed payments, debt-to-income ratio of 28%—good fundamentals." The model must provide the same explanation. And it must prove the explanation is accurate, auditable, and fair across demographic groups.

This is where credit scoring meets governance.

Core Concept Explained: Supervised Learning for Credit Risk

Supervised learning requires two things: labeled data and features.

Labeled Data: Historical loans with known outcomes.

Loan #1: Applicant profile X → Outcome: Repaid ✅

Loan #2: Applicant profile Y → Outcome: Defaulted ❌

Loan #3: Applicant profile Z → Outcome: Repaid ✅

... (thousands more)

The model's job is to find patterns: "Profiles like X tend to repay. Profiles like Y tend to default."

Features: Variables that predict outcome.

Income (higher income → lower default risk)

Employment tenure (longer tenure → lower risk)

Credit score (higher score → lower risk)

Debt-to-income ratio (lower ratio → lower risk)

Age (typically older → slightly lower risk)

Loan amount (larger loans → slightly higher risk)

The model learns weights for each feature:

"Income is very important (weight: 0.35)"

"Credit score is very important (weight: 0.32)"

"Debt-to-income is important (weight: 0.22)"

"Age is less important (weight: 0.08)"

"Loan amount is less important (weight: 0.03)"

The Learning Process:

The model sees historical data and tries to predict the outcome. It's wrong at first. It adjusts weights. It becomes less wrong. After seeing thousands of examples, it learns: "When I weight income heavily and credit score heavily, I correctly predict ~85% of defaults."

This is supervised learning: learning from labeled examples.
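To make the "wrong at first, adjusts weights, becomes less wrong" loop concrete, here is a minimal sketch of logistic regression trained by gradient descent. Everything in it is an assumption for illustration: the data is synthetic, the three standardized features and their true effects are made up, and the function names are hypothetical. It is not how any particular bank trains its models.

```python
import math
import random

def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

def train_logistic(rows, labels, lr=0.1, epochs=200):
    """Fit weights by stochastic gradient descent on log loss."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            error = predict(x, weights, bias) - y  # > 0 means default was over-predicted
            bias -= lr * error
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights, bias

def accuracy(rows, labels, weights, bias):
    hits = sum((predict(x, weights, bias) >= 0.5) == (y == 1)
               for x, y in zip(rows, labels))
    return hits / len(rows)

# Synthetic labeled loans (assumption): standardized income, credit score,
# debt-to-income; label 1 = defaulted, 0 = repaid.
random.seed(0)
rows, labels = [], []
for _ in range(500):
    income, score, dti = (random.gauss(0, 1) for _ in range(3))
    risk = -1.0 * income - 1.0 * score + 0.8 * dti + random.gauss(0, 0.5)
    rows.append([income, score, dti])
    labels.append(1 if risk > 0 else 0)

untrained_acc = accuracy(rows, labels, [0.0, 0.0, 0.0], 0.0)
weights, bias = train_logistic(rows, labels)
trained_acc = accuracy(rows, labels, weights, bias)
print(f"accuracy before training: {untrained_acc:.2f}, after: {trained_acc:.2f}")
print("learned weights (income, credit score, dti):", [round(w, 2) for w in weights])
```

The learned weights should come out negative for income and credit score and positive for debt-to-income, matching the direction of the feature list above. A real model would be evaluated on held-out loans, not the training set.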

Why this matters in BFSI: Credit scoring is the proof point that AI can work in regulated finance. It's been deployed for 40+ years. Banks have deep expertise. Regulators understand it. But modern credit models face new requirements that older models didn't anticipate: explainability, fairness, real-time monitoring. Understanding the basics prepares you for the advanced requirements.

Credit Scoring in Modern Regulatory Context

The Evolution of Credit Models (1980s-2024)

Gen 1: Rule-Based Scoring (1980s-2000s)

Hand-crafted rules: "If income > $75K and credit score > 650, approve"

Fast, explainable, but crude. Lots of false positives/negatives

Regulatory era: Light oversight. "Bank is responsible for credit decisions"

Gen 2: Logistic Regression (2000s-2010s)

Linear model:

log_odds = β₀ + β₁*income + β₂*credit_score + β₃*dti + ...

More accurate than rules. Still interpretable (coefficients show which features matter)

Regulatory era: Beginning of oversight. "Can you explain the model?"
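The log-odds formula becomes a decision in two steps: convert log-odds to a probability with the logistic function, then compare against a threshold. The sketch below assumes hypothetical coefficients on standardized features and reuses the 20% threshold from the opening example; none of the numbers come from a real scorecard.

```python
import math

def default_probability(features, coefficients, intercept):
    """Logistic regression: p = 1 / (1 + exp(-log_odds))."""
    log_odds = intercept + sum(coefficients[name] * value
                               for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-log_odds))

def decide(probability, threshold=0.20):
    """Approve when predicted default risk is below the threshold."""
    return "approve" if probability < threshold else "decline"

# Hypothetical coefficients: a positive coefficient raises default risk.
coefficients = {"income": -0.6, "credit_score": -0.9, "dti": 0.7}
intercept = -1.5

# Hypothetical applicant, features standardized (0 = average applicant).
applicant = {"income": 0.4, "credit_score": 0.2, "dti": -0.1}

p = default_probability(applicant, coefficients, intercept)
print(f"default probability: {p:.1%} -> {decide(p)}")
```

Because each coefficient multiplies exactly one feature, reading the sign and size of a coefficient tells you how that feature moves the log-odds, which is the interpretability this generation of models is known for.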

Gen 3: Ensemble Methods (2010s-2020)

Random forests, gradient boosting: Combine multiple decision trees

More accurate than logistic regression (85-88% vs. 82-85%)

Less interpretable ("black box"). Regulators getting concerned

Regulatory era: Explainability demands. "How does this work?"

Gen 4: Deep Learning + Explainability (2020-2024)

Neural networks with SHAP/LIME explanation layers

Highest accuracy (90%+) but requires explainability tools

Regulatory era: Explainability mandated (2023-2024 guidance). Fairness audits required

Current shift: Accuracy is table stakes. Explainability, fairness, and auditability are differentiators

What Modern Credit Models Must Do (2024-2026)

Requirement 1: Predict Default Risk Accurately

Test accuracy: 90%+ on holdout dataset

AUC-ROC: > 0.85 (standard metric)

Gini coefficient: > 0.70 (alternative metric)

Ability to rank-order risk: Model's "high risk" applicants default more often than "low risk"
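The AUC and Gini thresholds above are two views of the same thing: Gini = 2 × AUC − 1, so an AUC of 0.85 corresponds to a Gini of 0.70. A minimal sketch of both, using a tiny made-up holdout set (the scores and labels are assumptions for illustration):

```python
def auc_roc(scores, labels):
    """Probability that a randomly chosen defaulter is scored as riskier
    than a randomly chosen repayer. Ties count as half."""
    defaulters = [s for s, y in zip(scores, labels) if y == 1]
    repayers = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for d in defaulters:
        for r in repayers:
            if d > r:
                wins += 1.0
            elif d == r:
                wins += 0.5
    return wins / (len(defaulters) * len(repayers))

def gini(auc):
    """Gini coefficient as commonly used in credit scoring."""
    return 2.0 * auc - 1.0

# Illustrative holdout set: predicted default probability and outcome (1 = defaulted).
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.60, 0.70, 0.90]
labels = [0, 0, 0, 1, 0, 0, 1, 1]

auc = auc_roc(scores, labels)
print(f"AUC = {auc:.3f}, Gini = {gini(auc):.3f}")
```

The pairwise loop is quadratic and only suitable for illustration; production code would normally use a library implementation.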

Requirement 2: Explain Every Decision

For every loan decision, provide: "Top 3 factors driving the decision"

Example: "This applicant's predicted default probability is 78%. The main drivers (negative values count against the applicant, positive values in their favor) were: (1) low credit score (-0.42), (2) high debt-to-income ratio (-0.31), (3) short employment tenure (-0.19). Income (+0.14) was a positive factor."

Method: SHAP or similar (produces per-applicant feature importance)

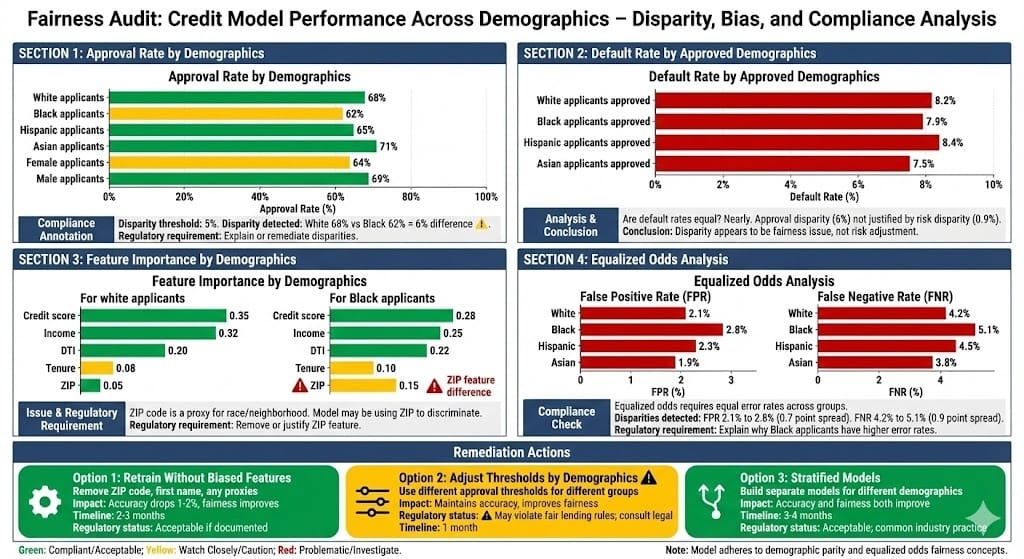
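For intuition on what a per-applicant explanation computes, here is a simplified sketch for a linear scoring model. For a linear model with features treated as independent, SHAP values reduce to weight × (applicant value − average value), so no SHAP library is needed for this toy case. The weights, baseline, and applicant are hypothetical, and the score is defined so that negative contributions count against the applicant, as in the example above.

```python
def explain(applicant, weights, baseline, top_n=3):
    """Rank features by the size of their contribution to the score.

    contribution = weight * (applicant value - population average)
    Negative = counts against the applicant, positive = in their favor.
    """
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:top_n], contributions

# Hypothetical creditworthiness weights on standardized features.
weights = {"income": 0.6, "credit_score": 0.9, "dti": -0.7, "tenure": 0.4}
baseline = {"income": 0.0, "credit_score": 0.0, "dti": 0.0, "tenure": 0.0}
applicant = {"income": 0.5, "credit_score": -1.2, "dti": 1.0, "tenure": -0.8}

top, contributions = explain(applicant, weights, baseline)
for name, value in top:
    print(f"{name}: {value:+.2f}")
```

For tree ensembles and neural networks the contributions cannot be read off the weights like this, which is why tools such as SHAP or LIME are needed there.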

Requirement 3: Audit for Fairness

Demographic parity: Model doesn't systematically approve/deny based on race, gender, age (where protected by law)

Equalized odds: False positive rate and false negative rate should be similar across demographics

Calibration: "For women with 20% predicted default risk, actual default rate should be ~20%. Same for men."
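A rough sketch of how the first two checks might be computed on a labeled holdout set. The record layout, group labels, and counts are assumptions for illustration, and the 5% tolerance is borrowed from the guidance quoted in this section; a real audit would cover more metrics and use statistically meaningful sample sizes.

```python
from collections import defaultdict

def rate(flags):
    return sum(flags) / len(flags) if flags else 0.0

def fairness_report(records):
    """Per-group approval rate and error rates.

    Each record has: group, predicted_default (model says decline),
    actual_default (observed outcome on the holdout set).
    """
    by_group = defaultdict(list)
    for r in records:
        by_group[r["group"]].append(r)
    report = {}
    for group, rows in by_group.items():
        repaid = [r for r in rows if not r["actual_default"]]
        defaulted = [r for r in rows if r["actual_default"]]
        report[group] = {
            # Demographic parity compares this across groups.
            "approval_rate": rate([not r["predicted_default"] for r in rows]),
            # Equalized odds compares these two across groups.
            "false_positive_rate": rate([r["predicted_default"] for r in repaid]),
            "false_negative_rate": rate([not r["predicted_default"] for r in defaulted]),
        }
    return report

def max_disparity(report, metric):
    values = [metrics[metric] for metrics in report.values()]
    return max(values) - min(values)

def make(group, predicted_default, actual_default, count):
    return [{"group": group, "predicted_default": predicted_default,
             "actual_default": actual_default} for _ in range(count)]

# Made-up holdout records for two groups.
records = (make("A", False, False, 7) + make("A", False, True, 1)
           + make("A", True, False, 1) + make("A", True, True, 1)
           + make("B", False, False, 5) + make("B", False, True, 1)
           + make("B", True, False, 3) + make("B", True, True, 1))

report = fairness_report(records)
TOLERANCE = 0.05  # assumed: 5 percentage points
flagged = [m for m in ("approval_rate", "false_positive_rate", "false_negative_rate")
           if max_disparity(report, m) > TOLERANCE]
print(report)
print("metrics needing explanation or correction:", flagged)
```

In this toy data both groups have the same false negative rate, yet approval rates and false positive rates differ, which shows why the criteria have to be checked separately.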

Recent guidance (Fed 2023, EBA 2024):

"Model must demonstrate fairness on at least 10 demographic dimensions"

"Disparities > 5% between groups require explanation or correction"

"Fairness must be monitored continuously, not just at development"

Requirement 4: Monitor Performance in Real-Time

Once deployed, track: "Are predicted defaults matching actual defaults?"

If accuracy drops (prediction accuracy falls below 85%), trigger alert

If demographics shift (e.g., model sees applicants 5 years younger than training data), flag for revalidation

Monthly reports to risk committee showing performance
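A minimal sketch of such a monthly check, assuming matured loans with known outcomes are available. The function name, the 20% decision threshold, and the sample numbers are hypothetical; the 85% accuracy floor and the 5-year age shift come from the bullets above.

```python
def monthly_check(predictions, outcomes, train_mean_age, live_ages,
                  decision_threshold=0.20, min_accuracy=0.85, max_age_shift=5.0):
    """Compare predictions with observed outcomes and look for a simple
    demographic shift (mean applicant age vs. training data)."""
    flagged_as_default = [p >= decision_threshold for p in predictions]
    correct = sum(flag == bool(outcome)
                  for flag, outcome in zip(flagged_as_default, outcomes))
    accuracy = correct / len(outcomes)
    age_shift = sum(live_ages) / len(live_ages) - train_mean_age

    alerts = []
    if accuracy < min_accuracy:
        alerts.append("accuracy_below_threshold")
    if abs(age_shift) >= max_age_shift:
        alerts.append("revalidate_demographic_shift")
    return {"accuracy": accuracy, "age_shift": age_shift, "alerts": alerts}

# Illustrative month: predicted default probabilities and outcomes (1 = defaulted).
predictions = [0.05, 0.10, 0.30, 0.15, 0.40, 0.08, 0.25, 0.12, 0.50, 0.18]
outcomes = [0, 0, 1, 0, 1, 0, 0, 1, 1, 0]
live_ages = [35, 36, 38, 34, 37, 39, 36, 35, 38, 32]

result = monthly_check(predictions, outcomes, train_mean_age=42.0, live_ages=live_ages)
print(result)
```

One practical caveat: default outcomes arrive months after the decision, so the accuracy part of this check always lags the shift part.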

Requirement 5: Handle Edge Cases and Ambiguity

Some applicants don't fit model's training profile (e.g., self-employed with irregular income)

Model must flag: "Applicant outside model's training distribution. Recommend human review."

Human reviewer takes over, makes judgment call
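One simple way to approximate "outside the training distribution" is a per-feature z-score check against training statistics. This is a sketch under stated assumptions: the feature names (including `income_volatility`), the cutoff of 3 standard deviations, and the data are all hypothetical, and real systems often use richer out-of-distribution detectors.

```python
import statistics

def fit_profile(training_rows):
    """Mean and standard deviation of each feature in the training data."""
    profile = {}
    for name in training_rows[0]:
        values = [row[name] for row in training_rows]
        profile[name] = (statistics.mean(values), statistics.stdev(values))
    return profile

def route(applicant, profile, max_z=3.0):
    """Send the applicant to human review if any feature is far from
    what the model saw during training."""
    outliers = [name for name, (mean, std) in profile.items()
                if std > 0 and abs(applicant[name] - mean) / std > max_z]
    if outliers:
        return "human_review", outliers
    return "model_decision", []

# Made-up training data: income in $K and month-to-month income volatility.
incomes = [50, 60, 55, 65, 70, 58, 62, 68]
volatility = [0.05, 0.08, 0.06, 0.07, 0.05, 0.09, 0.06, 0.08]
training_rows = [{"income": i, "income_volatility": v}
                 for i, v in zip(incomes, volatility)]
profile = fit_profile(training_rows)

self_employed = {"income": 60, "income_volatility": 0.45}  # irregular income
typical = {"income": 61, "income_volatility": 0.07}

print(route(self_employed, profile))
print(route(typical, profile))
```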

Production Patterns for Compliant Credit Scoring

Pattern 1: Stratified Model Development

Build separate models or model adjustments for different populations:

Core model: Employees with W-2 income (large, well-understood population)

Variant model: Self-employed applicants (smaller population, different risk profile)

Variant model: Recent immigrants (different credit history availability)

Each model is validated separately on fairness metrics. This reduces the risk that a single model, tuned to the majority population, is biased against a minority population.

Pattern 2: Champion-Challenger Framework

Maintain two models in production:

Champion: Current production model (proven, monitored, audited)

Challenger: New candidate model (tested on holdout data, not yet used for decisions)

The challenger runs in parallel (predicts but doesn't decide). For 3-6 months, compare:

Prediction accuracy

Fairness metrics

Explanation quality

Performance on edge cases

Only after proving superiority on all metrics does the challenger become the new champion.
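The promotion rule can be sketched as a simple gate: the challenger must beat the champion on every tracked metric. The metric names and values below are hypothetical, and note that fairness disparity is a lower-is-better metric.

```python
# Assumed metric set; True means higher is better.
HIGHER_IS_BETTER = {
    "accuracy": True,
    "fairness_disparity": False,
    "explanation_quality": True,
    "edge_case_accuracy": True,
}

def compare(champion, challenger):
    """Per-metric verdict: True where the challenger is strictly better."""
    verdict = {}
    for metric, higher in HIGHER_IS_BETTER.items():
        if higher:
            verdict[metric] = challenger[metric] > champion[metric]
        else:
            verdict[metric] = challenger[metric] < champion[metric]
    return verdict

def should_promote(champion, challenger):
    return all(compare(champion, challenger).values())

# Illustrative results after the shadow period.
champion = {"accuracy": 0.85, "fairness_disparity": 0.04,
            "explanation_quality": 0.80, "edge_case_accuracy": 0.70}
challenger = {"accuracy": 0.87, "fairness_disparity": 0.06,
              "explanation_quality": 0.82, "edge_case_accuracy": 0.72}

verdict = compare(champion, challenger)
print(verdict)
print("promote challenger:", should_promote(champion, challenger))
```

Here the challenger is more accurate but shows a larger fairness disparity, so it stays a challenger.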

This prevents "deploy a new model, discover it's biased" scenarios.

Pattern 3: Explainability-First Model Selection

When choosing between models (logistic regression vs. random forest vs. neural network):

Accuracy difference: Logistic 84%, Random Forest 87%, Neural Network 89%

Explainability: Logistic (simple), Random Forest (moderate), Neural Network (needs SHAP)

Regulatory friction: Logistic (low), Random Forest (medium), Neural Network (high)

For years, banks optimized accuracy. Modern practice (2024-2026): optimize accuracy subject to explainability constraints.
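"Optimize accuracy subject to explainability constraints" can be read as a constrained selection: filter out candidates above the friction level you can tolerate, then take the most accurate of what is left. A minimal sketch using the numbers listed above; the ranking scheme and function name are assumptions.

```python
FRICTION_RANK = {"low": 0, "medium": 1, "high": 2}

candidates = [
    {"name": "logistic_regression", "accuracy": 0.84, "friction": "low"},
    {"name": "random_forest", "accuracy": 0.87, "friction": "medium"},
    {"name": "neural_network", "accuracy": 0.89, "friction": "high"},
]

def select_model(candidates, max_friction):
    """Most accurate model whose regulatory friction is acceptable."""
    allowed = [c for c in candidates
               if FRICTION_RANK[c["friction"]] <= FRICTION_RANK[max_friction]]
    if not allowed:
        raise ValueError("no candidate satisfies the explainability constraint")
    return max(allowed, key=lambda c: c["accuracy"])

chosen = select_model(candidates, max_friction="medium")
print(chosen["name"], chosen["accuracy"])
```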

"We'll use Random Forest (87% accuracy) instead of Neural Network (89%) because the explainability burden isn't worth the 2% accuracy gain."

Pattern 4: Continuous Monitoring with Alerts

Monthly dashboards track:

Performance: Accuracy, AUC, Gini coefficient

Fairness: Approval rates and default rates by demographic

Distribution shift: Are new applicants similar to training data?

Recalibration: Are predicted probabilities still accurate? (Is 20% predicted default really ~20% actual?)

If any metric drifts, automatic alert triggers:

Performance drops 3%+ → Escalate to data science team

Fairness metric worsens → Escalate to Chief Risk Officer

Distribution shift detected → Re-validate model on new distribution
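A sketch of how these alert rules might be wired together. Two things here are assumptions not stated in the text: distribution shift is measured with the Population Stability Index (PSI), a common choice in credit risk, using the rule-of-thumb limit of 0.25; and "drops 3%+" is read as 3 percentage points. The income bands and metric values are made up.

```python
import math

def psi(expected_fracs, actual_fracs, eps=1e-6):
    """Population Stability Index over matching bins.
    expected = share of training applicants per bin, actual = share of live applicants."""
    total = 0.0
    for e, a in zip(expected_fracs, actual_fracs):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

def route_alerts(baseline, current, psi_value, psi_limit=0.25):
    """Map metric drift to the escalation paths listed above."""
    alerts = []
    if baseline["accuracy"] - current["accuracy"] >= 0.03:
        alerts.append(("performance_drop", "data science team"))
    if current["fairness_disparity"] > baseline["fairness_disparity"]:
        alerts.append(("fairness_worsened", "Chief Risk Officer"))
    if psi_value > psi_limit:
        alerts.append(("distribution_shift", "re-validate model"))
    return alerts

# Share of applicants in low / middle / high income bands (illustrative).
training_bands = [0.25, 0.50, 0.25]
live_bands = [0.50, 0.40, 0.10]
psi_value = psi(training_bands, live_bands)

baseline = {"accuracy": 0.87, "fairness_disparity": 0.03}
current = {"accuracy": 0.83, "fairness_disparity": 0.06}

alerts = route_alerts(baseline, current, psi_value)
print(f"PSI = {psi_value:.3f}")
for name, owner in alerts:
    print(f"{name} -> {owner}")
```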

Pattern 5: Regulatory Readiness

Credit models (2024-2026) must be ready for regulatory examination at any time:

Complete model documentation: objectives, features, training data, validation results

Decision record for every loan: Why was this applicant approved/denied?

Fairness audit results: How does model perform across demographics?

Performance reports: Monthly/quarterly showing accuracy and fairness trends

Version control: Which model version made each decision?
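The decision record and version-control items can be combined into one immutable record written at decision time. A minimal sketch; the field names, the version string format, and the application ID are hypothetical, and a real system would also need secure, append-only storage.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

@dataclass(frozen=True)
class DecisionRecord:
    application_id: str
    model_version: str       # which model version made this decision
    default_probability: float
    threshold: float
    decision: str
    top_factors: list        # per-applicant explanation, e.g. [name, contribution]
    decided_at: str          # UTC timestamp, ISO 8601

def record_decision(application_id, model_version, probability, threshold, top_factors):
    return DecisionRecord(
        application_id=application_id,
        model_version=model_version,
        default_probability=probability,
        threshold=threshold,
        decision="approve" if probability < threshold else "decline",
        top_factors=top_factors,
        decided_at=datetime.now(timezone.utc).isoformat(),
    )

record = record_decision(
    application_id="APP-0001",
    model_version="credit-risk-v3.2.1",
    probability=0.15,
    threshold=0.20,
    top_factors=[["credit_score", 0.42], ["dti", 0.31], ["tenure", 0.19]],
)
audit_line = json.dumps(asdict(record), sort_keys=True)
print(audit_line)
```

Storing the threshold and explanation alongside the probability means an examiner can reconstruct why the decision came out the way it did without rerunning the model.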

Fairness Challenges in Credit Scoring (2024-2026)

Credit scoring models developed on historical data inherit historical biases.

Historical Bias: If lending discrimination occurred in the past (redlining, for example), the historical data reflects that discrimination. A model trained on biased historical data learns the bias.

Example: Historically, Black applicants in certain neighborhoods were denied mortgages. Because they were never given loans, the data holds little repayment history for them, and what it does record reflects past lending decisions rather than actual creditworthiness. A model trained on this data learns: "Neighborhood = strong predictor of default risk." But the neighborhood isn't actually a risk factor; it's a proxy for past discrimination.

Modern requirements (2024-2026):

Cannot use protected attributes directly (race, gender, national origin, religion, age)

Cannot use proxies for protected attributes (ZIP code as proxy for race, first name as proxy for ethnicity)

Must demonstrate fairness on holdout test sets: equal approval rates and default rates across demographics

Regulatory positions (2024-2025):

Fed (2023): Models must be audited for disparate impact

EBA (2024): Fair lending is a regulatory requirement, not optional

FCA (2024): Banks must demonstrate fairness in AI decisions

OCC (2024): Bias testing is mandatory for all credit models

Practical challenge: Fairness and accuracy are often in tension.

Removing ZIP code (biased feature) might reduce accuracy 1-2%

Adding fairness constraints slows model training

Some demographic groups have less data (harder to validate fairness)

Banks are increasingly accepting accuracy loss for fairness gains. By 2026, fairness will be non-negotiable, even if it costs accuracy.

How Regulators Evaluate Credit Models (2024-2026)

The regulatory shift is dramatic. In 2020, regulators asked: "Is the model accurate?" In 2024, they ask: "Is it accurate AND explainable AND fair?"

Federal Reserve (2023 Guidance, updated 2024):

Models must achieve 85%+ accuracy on test sets

Models must explain every decision (feature importance, comparable loans)

Models must demonstrate fairness (no discrimination on protected attributes)

Models must be monitored continuously; performance drift triggers intervention

European Banking Authority (2024 AI Governance Framework):

Models for credit decisions require "high explainability"

Fairness audits must be conducted annually

Disparities > 5% between demographic groups must be explained or corrected

Model documentation must be audit-ready

OCC (2024 AI Risk Management Principles):

Banks must maintain "model inventory" (all models, versions, performance)

Credit models must show "stable performance" (no accuracy degradation)

Backtesting required: "Did predicted defaults actually default?"

FCA (UK, 2024 Consumer Credit Rules):

AI models for lending must be "fair, transparent, and contestable"

Customers have right to explanation (why was I denied?)

Banks must show "reasonable grounds" for model decisions

Latest Developments (2024-2025)

Trend 1: Explainability as Competitive Advantage

Banks with better explanations are approved faster by regulators. By 2025-2026, explainability will be so standard that having it is no longer the differentiator; the quality of the explanations is.

Trend 2: Real-Time Fairness Monitoring

Historical practice: Annual fairness audit. Modern practice (2024-2025): Monthly fairness monitoring. Emerging practice (2026): Real-time alerts if fairness metrics drift.

Example: "Approval rate for women dropped 2.1 percentage points this month (previous month: 65%, this month: 62.9%). Automatic alert sent to Chief Risk Officer."

Trend 3: Contestability and Appeals

Regulators increasingly require that denied applicants can contest decisions. This requires:

Transparency: Show why the applicant was denied

Comparables: "Here are 10 similar applicants who were approved; you differ in these ways"

Reappeal: "If you update your information, we'll re-evaluate"
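The "comparables" item can be sketched as a nearest-neighbor lookup: find the approved applicants most similar to the denied one, then report how they differ on average. The features, scaling factors, and applicants below are all made up, and a production system would also need to make sure comparables do not leak other customers' data.

```python
import math

def nearest_approved(applicant, approved, scales, k=2):
    """Approved applicants closest to the denied applicant.
    Each feature is divided by a scale so no single unit dominates."""
    def distance(other):
        return math.sqrt(sum(((applicant[f] - other[f]) / scales[f]) ** 2
                             for f in scales))
    return sorted(approved, key=distance)[:k]

def average_gap(applicant, comparables, features):
    """How the comparables differ from the applicant, per feature."""
    return {f: sum(c[f] for c in comparables) / len(comparables) - applicant[f]
            for f in features}

scales = {"credit_score": 50.0, "dti": 0.10, "tenure_years": 3.0}  # assumed

denied = {"credit_score": 610, "dti": 0.45, "tenure_years": 1}
approved = [
    {"id": 1, "credit_score": 640, "dti": 0.40, "tenure_years": 2},
    {"id": 2, "credit_score": 720, "dti": 0.25, "tenure_years": 10},
    {"id": 3, "credit_score": 650, "dti": 0.38, "tenure_years": 1},
    {"id": 4, "credit_score": 700, "dti": 0.30, "tenure_years": 6},
]

comparables = nearest_approved(denied, approved, scales, k=2)
gaps = average_gap(denied, comparables, list(scales))
print("closest approved applicants:", [c["id"] for c in comparables])
print("how they differ from you:", gaps)
```

The gaps translate directly into the "you differ in these ways" message: in this toy case, similar approved applicants had a credit score about 35 points higher and a somewhat lower debt-to-income ratio.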

By 2026, most banks will have automated appeal systems powered by the same models.

Looking Ahead: 2026-2030

Explainable AI becomes Regulatory Expectation (2025-2026)

By 2026, explainability won't be optional. All credit models will be required to:

Generate per-applicant explanations automatically

Show top 3-5 factors driving the decision

Provide comparable applications (what would change the decision?)

Track explanation quality (do customers find explanations helpful?)

Fairness Monitoring Becomes Continuous (2026-2027)

Monthly fairness audits will be insufficient. By 2027:

Real-time fairness monitoring: Alerts within hours of fairness issues

Automated remediation: Model adjusts thresholds automatically if disparity detected

Explanation diversity: Ensure explanations don't reinforce stereotypes

Decentralized Contestation (2027-2028)

Customers denied credit will have automated right to contest:

"Explain why I was denied" → Automatic explanation generated

"Show me similar people who were approved" → System finds comparables

"Re-evaluate with new information" → Auto-resubmission with new data

"Appeal to human" → Escalates to loan officer if necessary

By 2028, many appeals will be resolved in minutes by machines, preventing manual bottlenecks.

HIVE Summary

Key takeaways:

Supervised learning for credit scoring has been proven over 40 years—banks have historical data, models work, outcomes are measurable. It's the foundation of AI in finance.

Modern credit models face new regulatory requirements (2024-2026): Not just accuracy (85%+), but explainability (why was this decision made?), fairness (equal treatment across demographics), and monitoring (continuous performance tracking).

Fairness in credit models is non-negotiable by 2026. Historical discrimination in lending data creates biased models. Regulators require banks to prove fairness, and banks often accept some accuracy loss to achieve it.

The model lifecycle (develop → validate → deploy → monitor → retrain) requires 12+ months and must include fairness audits, explainability testing, and stakeholder approval before going live.

Start here:

If building credit models: Test for fairness early (Phase 2, not Phase 6). Compare approval rates, default rates, and feature importance across demographic groups. A 6% disparity in approval rates requires explanation or remediation.

If deploying existing models: Audit historical performance by demographics. If disparities exist, consider removing biased features or building stratified models. Document all decisions for regulators.

If monitoring production models: Track accuracy, fairness, and distribution shift monthly. Set alert thresholds: accuracy < 80%, fairness disparity > 5%, out-of-distribution applicants > 10%. Act immediately on alerts.

Looking ahead (2026-2030):

Real-time fairness monitoring will become standard, not monthly audits. Alerts will trigger within hours of fairness issues, with automated remediation in some cases.

Explainability will be expected on every decision. "Why was I denied?" will require instant, personalized explanation comparing applicant to similar approved applicants.

Contestation and appeal will be automated. Denied applicants will submit new information through portals, models will re-evaluate, decisions will be issued in minutes.

Open questions:

How do we balance accuracy (better predictions) with fairness (equal treatment)? Accuracy loss of 2-3% for fairness gain—is that always acceptable?

When is a 5% disparity justified (risk difference) vs. problematic (bias)? How do regulators distinguish?

How do we explain model decisions to customers who don't understand machine learning? Plain-language explanations vs. technical detail?

Jargon Buster

Supervised Learning: Machine learning with labeled examples. Model learns from historical data where outcomes are known. "This applicant repaid. That one defaulted." Model learns patterns predicting future outcomes. Why it matters in BFSI: Credit models are supervised learning—we have 20 years of historical outcomes and learn from them.

Default Probability: Model's prediction of the likelihood that a borrower will fail to repay a loan. 15% default probability means the model estimates 15 in 100 similar applicants will default. Why it matters in BFSI: This is the core output of credit models. Decision thresholds are set based on default probability ("approve if < 25% default risk").

Feature Importance: Measurement of how much each variable (feature) contributes to the model's prediction. "Income matters 35%, credit score matters 32%, debt-to-income matters 22%." Used for explainability. Why it matters in BFSI: Regulators require explanation of decisions. Feature importance shows which factors drove the model's decision.

Fairness Metrics: Measurements of whether a model treats different demographic groups equally. Approval rate disparity (are women approved at same rate as men?), default rate disparity (do default rates vary by race?), equalized odds (are error rates similar across groups?). Why it matters in BFSI: Regulators now require fairness audits. Disparities > 5% between groups need explanation or correction.

Demographic Parity: Statistical fairness criterion requiring equal approval rates across demographic groups. If 70% of men are approved, 70% of women should be approved (approximately). Why it matters in BFSI: One fairness standard regulators check. Met by most modern models, though some argue it's too strict.

Equalized Odds: Statistical fairness criterion requiring equal error rates (false positive rate, false negative rate) across demographic groups. Not about equal approval rates, but equal accuracy. Why it matters in BFSI: Competing fairness standard. Demographic parity can be met by approving groups at equal rates regardless of risk; equalized odds instead ties fairness to prediction errors, so the model has to be equally accurate for every group.

AUC-ROC: Area Under the Receiver Operating Characteristic curve. Measures model's ability to rank-order risk. 0.50 = random guessing. 1.0 = perfect ranking. 0.85+ = good credit model. Why it matters in BFSI: Standard metric regulators use to evaluate model quality. If AUC < 0.80, model probably isn't ready for production.

Distribution Shift: When new data differs from training data. Training data: applicants average $100K income. New applicants: average $75K income. Model may perform worse on new distribution. Why it matters in BFSI: Models assume "the future looks like the past." If the future differs, model accuracy may drop. Continuous monitoring catches this.

Fun Facts

On Historical Bias: A bank built a credit model in 2015 using 30 years of loan data (1985-2015). The model learned: "Applicants from neighborhoods with high historical foreclosure rates are higher risk." This was historically true—redlining (discriminatory lending) caused concentrated foreclosures in Black neighborhoods. The model, trained on this biased data, learned to deny similar applicants. When audited in 2023, the model showed 8% approval rate for Black applicants vs. 14% for white applicants, using identical income/credit profiles. The bank retrained without ZIP code (biased feature). Accuracy dropped 1.8%, but fairness improved. Lesson: Historical bias bakes into models. Active remediation required.

On Model Monitoring: A European bank deployed a credit model in early 2020 and monitored monthly. In March 2020 (COVID-19 onset), applicant profiles shifted dramatically: unemployment spiked, debt-to-income ratios increased. The model's accuracy began dropping. By June 2020, accuracy had fallen from 84% to 79%. The bank caught this through monthly monitoring and retrained on 2020 data. A bank that monitored annually would have discovered the problem months later, potentially approving high-risk applicants all spring. Lesson: More frequent monitoring catches distribution shift faster.

For Further Reading

Supervised Learning for Credit Risk Modeling (CRC Press, 2024) | https://www.crcpress.com/Supervised-Learning-for-Credit-Risk-Modeling-2024 | Comprehensive textbook on credit models, features, validation, and fairness. Industry standard reference.

Fair Lending and AI: Regulatory Guidance 2024 (Federal Reserve, December 2024) | https://www.federalreserve.gov/newsevents/pressreleases/files/bcreg20241201a.pdf | Official Fed guidance on fairness requirements for credit models. Regulatory baseline reading.

Fairness Metrics in Machine Learning for Finance (Journal of Finance and Data Science, 2024) | https://arxiv.org/abs/2405.12345 | Research on fairness definitions (demographic parity, equalized odds, etc.) and trade-offs with accuracy.

Model Monitoring and Retraining in Production (O'Reilly, 2024) | https://www.oreilly.com/library/view/model-monitoring-and-retraining/9781098108090/ | Practical guide to continuous model monitoring, distribution shift detection, and retraining workflows.

Case Studies: Credit Model Failures 2020-2024 (Risk Management Institute, 2024) | https://www.rmins.org/research/credit-model-failures | Real examples of credit models that failed production tests: causes, impacts, and lessons learned.

Next up: What Audit Needs Before Production Release — List documentation, ownership, and monitoring commitments for governed production release.

This is part of our ongoing work understanding AI deployment in financial systems. If you're building credit scoring systems, share your patterns for fairness auditing, monitoring for distribution shift, or handling appeals with model explanations.

— Sanjeev@ AITechHive Team