Quick Recap: Insurance claims processing is drowning in unstructured documents—scanned forms, medical records, police reports, damage assessments. Tesseract (OCR) extracts text from images, Named Entity Recognition (NER) finds structured data within that text, and RAG (Retrieval Augmented Generation) connects it all to policy language. Together, they automate 60-70% of routine claims, freeing adjusters for complex decisions.

Opening Hook

It's 9:47 AM at a major insurance company. A claim arrives: a scanned medical report from an accident 3 years ago, a handwritten injury assessment form, three pages of hospital billing records, and a photo of vehicle damage. The claims team has 47 more like this waiting.

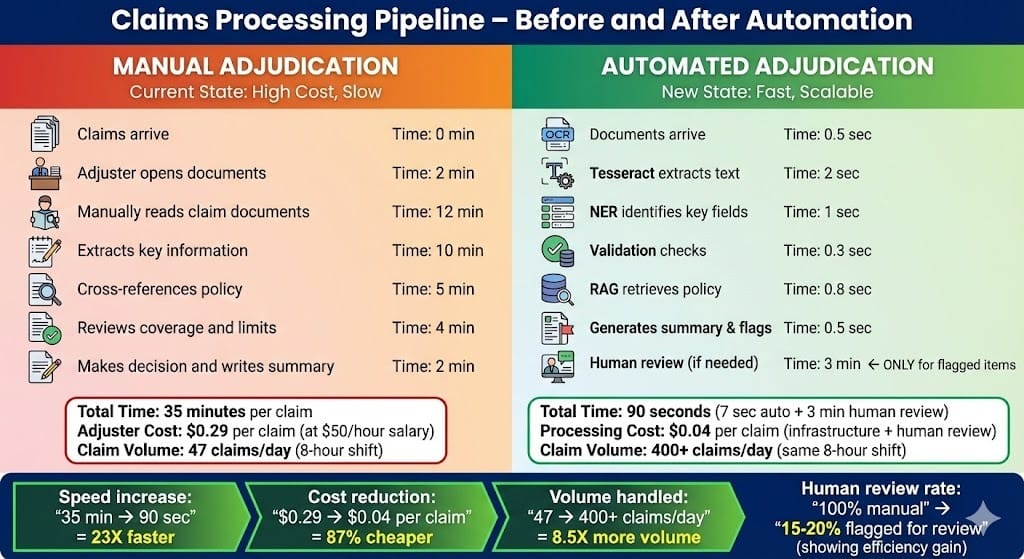

Today, an adjuster manually extracts key facts: injury type, treatment costs, date of incident, vehicle make/model. They cross-reference policy coverage. They write a summary. This takes 25-35 minutes per claim.

Meanwhile, in the same building, the AI team has deployed a system that does this in 90 seconds:

Scan the documents (Tesseract OCR)

Extract structured data—injury codes, dollar amounts, dates, vehicle identifiers (NER)

Retrieve relevant policy clauses and precedent decisions (RAG)

Flag for human review if anything looks ambiguous

The adjuster reviews the AI's work in 3 minutes. Eight hours of manual work per day just became 45 minutes.

This is what happens when OCR, NER, and RAG work together in production.

Why This Tool/Pattern Matters

Insurance and financial services operate on documents. Lots of them. A single claim can have 5-20 documents. A mortgage application spans 50+ pages. Compliance investigations require reviewing thousands of emails and contracts.

Manual extraction is expensive, error-prone, and doesn't scale. What the industry needs is automated extraction that's:

Accurate enough to trust: 94%+ accuracy on key fields

Explainable for audits: "Here's where we pulled this number from"

Compliant with regulations: Maintains audit trails, doesn't hallucinate

Contextually aware: Understands that "policy limit $2M" differs from "claim amount $2M"

The Tesseract + NER + RAG pattern solves this by combining three complementary technologies:

Tesseract OCR: Converts scanned images into readable text (supports many languages; handwriting and poor-quality scans work, but with reduced accuracy)

Named Entity Recognition (NER): Automatically identifies and classifies named entities—dates, monetary amounts, person names, policy numbers, medical codes, vehicle identifiers

RAG: Connects extracted facts to policy language, previous decisions, and compliance guidance

Together, they create an adjudication loop that's fast, transparent, and auditable.

Architecture Overview

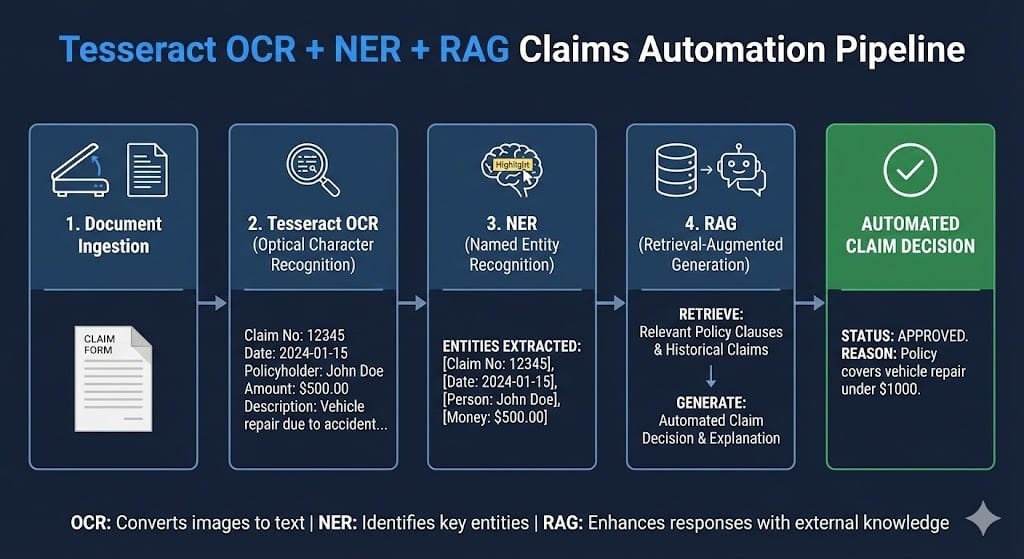

The pipeline operates in four stages:

Stage 1: Document Ingestion and OCR

Documents arrive in multiple formats: scanned PDFs (the majority), email attachments, photos, structured forms. Tesseract 5 (first released in late 2021) processes each one, converting page images to text. With the right language packs and preprocessing, it handles:

Mixed language documents (English + Spanish + Mandarin)

Handwritten text (though accuracy drops to 75-85% vs. 98% for printed)

Rotated/skewed pages

Tables and structured layouts

The output is plain text with bounding box information (what was on which page, at what position).
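As a rough illustration of that output, here is a minimal sketch that assumes the `pytesseract` wrapper (the article does not name one). Its `image_to_data` call with `Output.DICT` returns parallel lists keyed by `text`, `conf`, `left`, `top`, `width`, `height`, and `page_num`. The `Word` record and the helper names `words_from_data` and `ocr_words` are hypothetical, and the sample data is hand-written so the parsing step runs without Tesseract installed.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    conf: float   # Tesseract word confidence, 0-100
    page: int
    box: tuple    # (left, top, width, height) in pixels


def words_from_data(data: dict) -> list:
    """Convert Tesseract's parallel-list output into Word records."""
    words = []
    for i, text in enumerate(data["text"]):
        conf = float(data["conf"][i])
        # Non-word rows (page, block, line) carry conf == -1 and empty text.
        if conf < 0 or not text.strip():
            continue
        box = (data["left"][i], data["top"][i], data["width"][i], data["height"][i])
        words.append(Word(text, conf, data["page_num"][i], box))
    return words


def ocr_words(image_path: str, lang: str = "eng") -> list:
    """Run Tesseract on one page image.

    Needs pytesseract, Pillow, and the tesseract binary; imported lazily
    so the rest of this sketch runs without them.
    """
    import pytesseract
    from PIL import Image

    data = pytesseract.image_to_data(
        Image.open(image_path), lang=lang, output_type=pytesseract.Output.DICT
    )
    return words_from_data(data)


# Hand-written sample in the same shape as Output.DICT (assumed values).
sample = {
    "text": ["", "Policy", "limit", "$500,000"],
    "conf": [-1, 96, 93, 71],
    "left": [0, 40, 120, 190],
    "top": [0, 50, 50, 50],
    "width": [600, 70, 60, 95],
    "height": [800, 18, 18, 18],
    "page_num": [1, 1, 1, 1],
}
words = words_from_data(sample)
low = [w.text for w in words if w.conf < 75]  # candidates for human review
print(low)
```

Keeping the box and page with every word is what later lets an audit trail point back to where a number came from.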

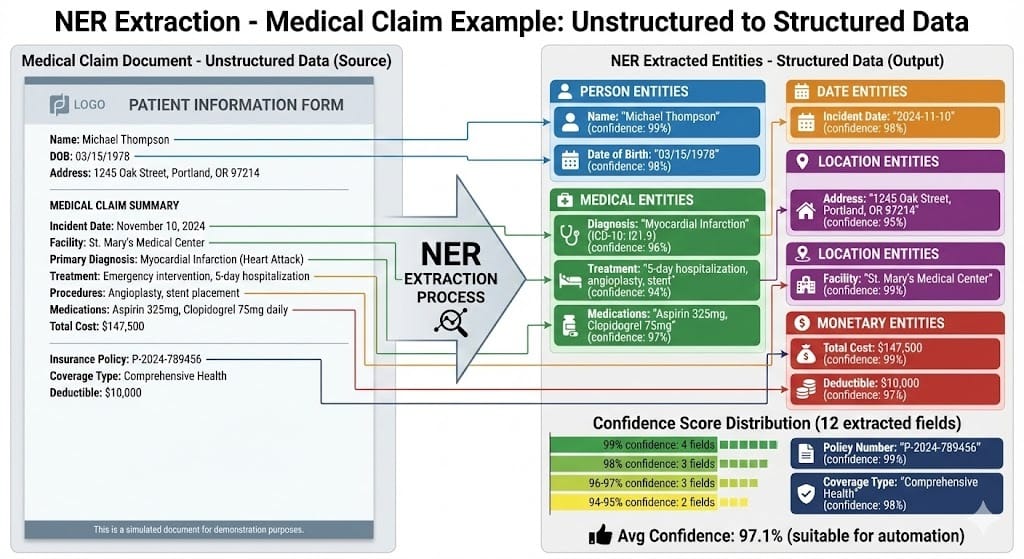

Stage 2: Named Entity Recognition (Extracting Structured Data)

NER models process the OCR'd text and identify key fields:

Monetary amounts: "$2.5M policy limit", "$125,000 claim"

Dates: "Incident on 2023-03-15"

Identifiers: Policy numbers, claim IDs, medical codes (ICD-10)

People: Claimant names, treating physicians, witnesses

Locations: Address of loss, hospital name

Medical/Insurance concepts: "myocardial infarction" (heart attack), "comprehensive coverage", "deductible"

NER models in 2024-2025 are increasingly domain-specific. Generic NER (trained on news articles) misses insurance terminology. Finance-specific NER models achieve 90-96% accuracy on key fields.

Stage 3: Structured Output and Validation

Extracted entities are validated against business rules:

Is the date in a reasonable range? (Not year 2099)

Is the monetary amount within expected bounds? (Not $10 trillion)

Is the policy number in our database?

Are required fields present? (If claim amount is missing, flag for human review)

This stage catches OCR and NER errors before they reach decision systems.
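The rule checks above can be sketched as a small validation function. This is an illustrative outline, not a production rule set: the `ExtractedClaim` fields, the 1990 lower bound on dates, the $5M amount ceiling, and the in-memory set standing in for the policy database are all assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ExtractedClaim:
    policy_number: Optional[str]
    incident_date: Optional[date]
    claim_amount: Optional[float]


def validate(claim, known_policies, today, max_amount=5_000_000.0):
    """Return a list of rule violations; an empty list means the claim passed."""
    problems = []
    if claim.incident_date is None:
        problems.append("missing incident date")
    elif not (date(1990, 1, 1) <= claim.incident_date <= today):
        problems.append("incident date out of range")
    if claim.claim_amount is None:
        problems.append("missing claim amount")
    elif not (0 < claim.claim_amount <= max_amount):
        problems.append("claim amount out of bounds")
    if claim.policy_number is None:
        problems.append("missing policy number")
    elif claim.policy_number not in known_policies:
        problems.append("unknown policy number")
    return problems


policies = {"POL-1001"}            # stand-in for a policy database lookup
today = date(2024, 11, 15)
good = ExtractedClaim("POL-1001", date(2024, 11, 10), 147_000.0)
bad = ExtractedClaim("POL-9999", date(2099, 1, 1), None)
print(validate(good, policies, today))
print(validate(bad, policies, today))
```

Any non-empty result would route the claim to human review instead of the decision system.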

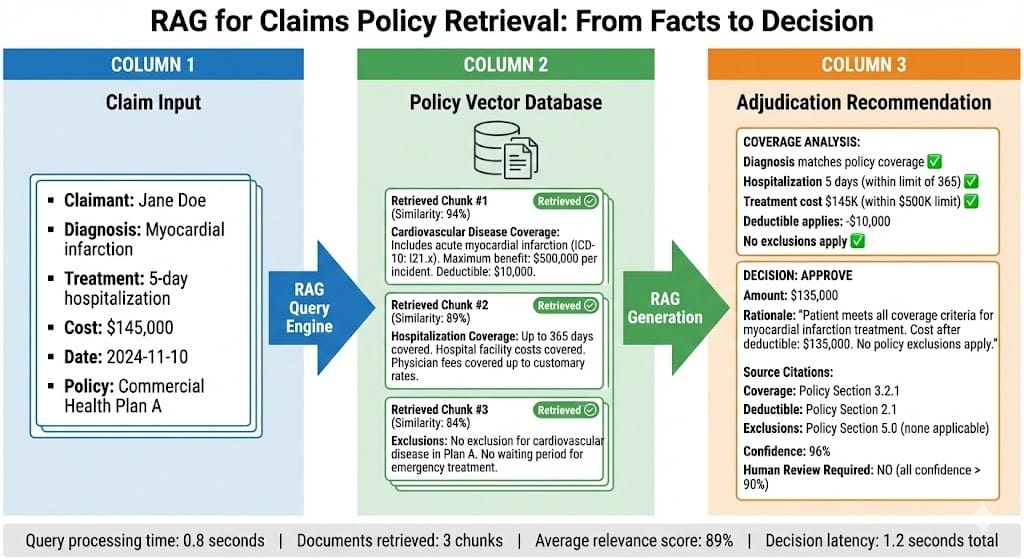

Stage 4: RAG for Policy Retrieval and Context

The extracted facts are used to query a vector database of policy documents, precedent decisions, and regulatory guidance:

Query: "Claimant has myocardial infarction, policy has life coverage"

RAG retrieves: Relevant policy clauses about heart attack coverage, similar historical claims, exclusions to check

Output: Summary of what policy covers + flagged risks/exceptions

Implementation Walkthrough

How Tesseract OCR Works in Production

Tesseract is open-source and production-proven. It was originally developed at HP, was sponsored by Google for many years, and is now maintained by an open-source community on GitHub. Many teams deploy it as a microservice:

Input: Page images (TIFF, JPG, PNG). PDFs must be rasterized to images first, since Tesseract does not read PDF input directly.

Output: Extracted text with confidence scores

Recent advances (2024-2025):

Better handwriting recognition: Models trained on medical forms, claim documents specifically

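Script and orientation detection: Tesseract's OSD mode identifies page orientation and script (for example Latin vs. Han). The specific languages still have to be supplied as installed language packs, such as English plus Spanish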

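Layout preservation: Page segmentation modes and the hOCR/TSV outputs retain block, line, and word positions and reading order. Table structure usually still needs dedicated post-processing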

Deployment consideration: Tesseract is CPU-intensive. A 100-page document takes 5-15 seconds. High-volume deployments typically use:

GPU acceleration (applies to GPU-based OCR engines and image preprocessing; Tesseract itself runs on the CPU)

Batch processing (process overnight queues)

Parallel workers (process 10-20 documents simultaneously)
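The parallel-worker option can be sketched with the standard library alone. This assumes OCR is invoked through a wrapper such as `pytesseract`, which runs the Tesseract binary as a separate process, so a thread pool is enough to keep several pages in flight. The `ocr_batch` helper and the `fake_ocr` stand-in are hypothetical names used only for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def ocr_batch(paths, ocr_fn, workers=10):
    """Run ocr_fn over paths concurrently; returns {path: text or Exception}."""
    paths = list(paths)

    def safe(path):
        try:
            return ocr_fn(path)
        except Exception as exc:
            # Keep one unreadable scan from failing the whole batch.
            return exc

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so zip pairs each path with its result.
        return dict(zip(paths, pool.map(safe, paths)))


def fake_ocr(path):
    """Stand-in for a real OCR call, so the sketch runs without Tesseract."""
    if path.endswith(".bad"):
        raise ValueError("unreadable scan")
    return f"text of {path}"


results = ocr_batch(["a.png", "b.png", "c.bad"], fake_ocr, workers=3)
failed = [p for p, r in results.items() if isinstance(r, Exception)]
print(failed)
```

Failed pages come back as exceptions in the result map, which makes it straightforward to send them to a retry or manual-review queue.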

A major US insurer reports: 50K documents/day using a cluster of 12 GPU workers, costing ~$8K/month in infrastructure.

Named Entity Recognition - Finding the Signal in the Noise

NER models work by understanding context. The model learns that "$" followed by digits means monetary amount, but only in certain contexts. "The temperature was 98 degrees" shouldn't be labeled a monetary amount.

Domain-Specific NER in Insurance (2024-2025 Landscape):

The industry has moved past generic NER to specialized models:

Medical NER: Identifies diagnoses (ICD-10 codes), treatments, medications, symptoms. Models fine-tuned from BioBERT and ClinicalBERT achieve 92-96% accuracy on medical records.

Insurance/Legal NER: Identifies policy coverage types, exclusions, monetary limits, claim types. Companies like Zurich and AIG have published benchmarks showing 94-97% accuracy on their documents.

Financial NER: Identifies account numbers, transaction amounts, dates, institution names. Accuracy on structured forms: 98%+. Accuracy on unstructured text: 85-90%.

The practical impact: A medical claim with "patient diagnosed with myocardial infarction, hospitalized 2023-03-15, treatment cost $145,000" can be automatically structured.

This structured output then feeds into business logic: "Check coverage for heart attack. Check if treatment cost exceeds deductible. Approve reimbursement."
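To make the target structure concrete, here is a deliberately simple rule-based stand-in for the NER step. It is not a trained model: the regular expressions and the one-entry diagnosis lookup are assumptions chosen so the example sentence above parses, and a real deployment would replace `extract` with a domain-specific NER model.

```python
import re
from datetime import date

MONEY = re.compile(r"\$\s?(\d[\d,]*(?:\.\d+)?)")
ISO_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")
# Tiny hypothetical lookup; I21 is the ICD-10 category for acute myocardial infarction.
DIAGNOSES = {"myocardial infarction": "I21"}


def extract(text: str) -> dict:
    """Pull diagnosis, date, and amount out of free text with simple rules."""
    record = {"diagnosis": None, "icd10": None, "date": None, "amount": None}
    lowered = text.lower()
    for term, code in DIAGNOSES.items():
        if term in lowered:
            record["diagnosis"], record["icd10"] = term, code
            break
    m = ISO_DATE.search(text)
    if m:
        record["date"] = date(*map(int, m.groups()))
    m = MONEY.search(text)
    if m:
        # Only digits preceded by "$" count as money, so "98 degrees" is ignored.
        record["amount"] = float(m.group(1).replace(",", ""))
    return record


text = ("patient diagnosed with myocardial infarction, "
        "hospitalized 2023-03-15, treatment cost $145,000")
record = extract(text)
print(record)
```

The resulting dictionary is the kind of record that downstream business logic (coverage check, deductible comparison) can consume directly.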

Building the RAG Component

Once you have structured data from NER, RAG connects it to policy language and precedents:

Database: Vector embeddings of:

Policy documents (coverage details, exclusions, limits)

Claim precedents (similar historical claims + decisions)

Regulatory guidance (state insurance regulations, compliance requirements)

Query Process:

Take extracted facts: "Claimant: Jane Doe, Diagnosis: heart attack, Cost: $147,000, Date: 2024-11-10"

Generate query: "What is coverage for myocardial infarction treatment?"

Retrieve relevant chunks: Policy sections on cardiovascular coverage, historical heart attack claims

Generate summary: "Policy covers up to $500,000 per incident. Deductible is $10,000. No exclusion for myocardial infarction. Estimated payout: $137,000 after deductible."
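The query process above can be sketched end to end with a toy retriever. Everything here is a simplification under stated assumptions: bag-of-words cosine similarity stands in for an embedding model and vector database, the policy chunks and section ids are invented, and the payout rule (deductible first, then the per-incident limit) is one plausible ordering that real policies may define differently.

```python
import math
import re
from collections import Counter

# Hypothetical policy chunks keyed by section id.
CHUNKS = {
    "3.2.1": "Cardiovascular coverage includes acute myocardial infarction. "
             "Limit: $500,000 per incident.",
    "1.4": "The deductible for inpatient treatment is $10,000 per incident.",
    "7.1": "Collision coverage applies to vehicle damage from road accidents.",
}


def embed(text):
    """Toy bag-of-words vector; a production system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, k=2):
    """Return the k most similar chunks as (section_id, score) pairs."""
    q = embed(query)
    scored = [(sid, cosine(q, embed(text))) for sid, text in CHUNKS.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def payout(cost, deductible, limit):
    """Assumed rule: subtract the deductible, then cap at the policy limit."""
    return min(max(cost - deductible, 0), limit)


top = retrieve("What is coverage for myocardial infarction treatment?")
sections = [sid for sid, _ in top]
estimate = payout(147_000, 10_000, 500_000)
print(sections, estimate)
```

Returning section ids alongside scores keeps the link between each retrieved clause and the generated summary, which the citation requirement later in this article depends on.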

BFSI-Specific Patterns

Pattern 1: Confidence Thresholding and Human Review Gates

Not all claims should be auto-approved. Production systems use confidence scores:

Tesseract confidence: If OCR'd text has < 75% confidence, flag the page for human review

NER confidence: If policy number extraction has < 90% confidence, require manual verification

RAG retrieval: If no policy clauses match with > 80% similarity, escalate to specialist

A typical decision matrix:

All values high confidence (95%+): Auto-approve (with logging)

One value low confidence: Auto-process but flag for audit review

Multiple values low confidence: Escalate to human adjuster

This prevents rubber-stamping bad extractions while still automating 60-70% of routine claims.
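The decision matrix translates into a few lines of routing logic. This sketch assumes confidences are expressed as fractions and treats anything below the 95% bar as "low"; the function name and return labels are hypothetical.

```python
def route(confidences: dict, high: float = 0.95) -> str:
    """Map per-stage confidence scores to a handling decision."""
    low = [name for name, score in confidences.items() if score < high]
    if not low:
        return "auto_approve"             # all values high: approve, with logging
    if len(low) == 1:
        return "auto_process_flag_audit"  # one weak value: process, flag for audit
    return "escalate_to_adjuster"         # several weak values: human adjuster


all_high = route({"ocr": 0.97, "ner": 0.96, "rag": 0.95})
one_low = route({"ocr": 0.97, "ner": 0.91, "rag": 0.96})
many_low = route({"ocr": 0.70, "ner": 0.88, "rag": 0.96})
print(all_high, one_low, many_low)
```

In practice the per-stage gates described above (75% for OCR, 90% for policy numbers, 80% for retrieval similarity) would run before this final routing step.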

Pattern 2: Cross-Document Consistency Checking

Claims involve multiple documents. A medical report says "incident date: 2023-03-15" but a police report says "2023-03-14". Which is correct?

Production systems check consistency:

Extract the same entity (incident date) from all documents

Flag discrepancies

Use a voting mechanism: If 2 of 3 documents agree, use that date

If split decision, escalate

This catches OCR errors and fraud signals.
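A minimal sketch of the voting step, assuming each document contributes at most one value for the entity. The `resolve` helper is a hypothetical name; it returns the majority value plus a flag that records whether the documents disagreed at all.

```python
from collections import Counter


def resolve(values):
    """Majority vote across documents.

    Returns (value, discrepancy). value is None when no value has a strict
    majority, which signals that the claim should be escalated.
    """
    counts = Counter(v for v in values if v is not None)
    if not counts:
        return None, False
    top, votes = counts.most_common(1)[0]
    discrepancy = len(counts) > 1
    if votes > sum(counts.values()) / 2:
        return top, discrepancy
    return None, True


agreed = resolve(["2023-03-15", "2023-03-15", "2023-03-14"])
split = resolve(["2023-03-15", "2023-03-14"])
print(agreed, split)
```

Even when a majority exists, the discrepancy flag is worth logging, since a lone conflicting date may be an OCR error or a fraud signal.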

Pattern 3: Audit Trail for Every Decision

Regulatory requirement: If a claim is denied, the company must explain why. Track:

Which document was the source of each fact?

What was the confidence score?

Which policy clause was retrieved?

Was human review triggered?

Example audit log:

Claim #CLI-2024-456789

Extracted data:

- Incident date: 2024-11-10 (police report, OCR confidence 96%, human verified)

- Treatment cost: $147,000 (hospital bill, NER confidence 94%, auto-extracted)

- Diagnosis: Myocardial infarction (medical record, NER confidence 91%, auto-extracted)

Policy retrieval:

- Query: "cardiovascular coverage limits"

- Retrieved 3 clauses (all > 85% similarity)

- Policy limit: $500,000 per incident

- Deductible: $10,000

- Exclusions: None applicable

Decision: APPROVED - $137,000

Decision logic: Treatment cost ($147K) minus deductible ($10K) = $137K

Date: 2024-11-15 09:47 AM

Processed by: Automated adjudication system v3.2

Human review: None required (all confidence scores > 90%)

This is what regulators want to see.
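One way to capture a log like this in a machine-readable form is a pair of dataclasses serialized to JSON. The field names and the `needs_review` rule are assumptions for illustration; the values are taken from the example log.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Fact:
    name: str
    value: str
    source_document: str
    method: str            # "OCR" or "NER"
    confidence: float
    human_verified: bool = False


@dataclass
class AuditRecord:
    claim_id: str
    facts: list
    retrieved_clauses: list
    decision: str
    decided_at: str
    system_version: str

    def needs_review(self, threshold: float = 0.90) -> bool:
        """True if any fact is below threshold and was not verified by a person."""
        return any(f.confidence < threshold and not f.human_verified
                   for f in self.facts)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


audit = AuditRecord(
    claim_id="CLI-2024-456789",
    facts=[
        Fact("incident_date", "2024-11-10", "police report", "OCR", 0.96, True),
        Fact("treatment_cost", "147000", "hospital bill", "NER", 0.94),
        Fact("diagnosis", "Myocardial infarction", "medical record", "NER", 0.91),
    ],
    retrieved_clauses=["cardiovascular coverage limits"],
    decision="APPROVED - $137,000",
    decided_at="2024-11-15T09:47:00",
    system_version="3.2",
)
print(audit.to_json())
```

Storing the source document and confidence next to every fact answers the four tracking questions above without reconstructing anything after the fact.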

Common Mistakes

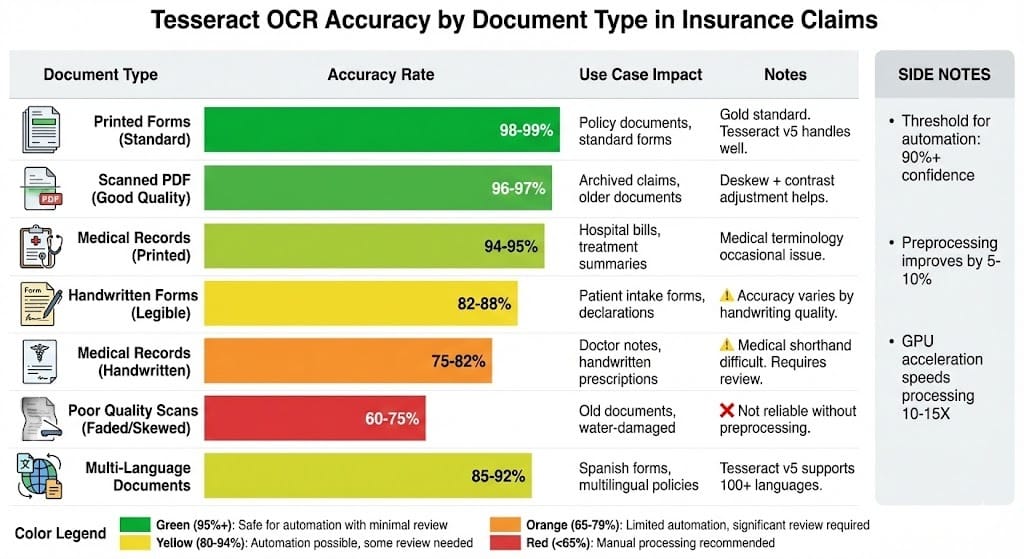

Mistake 1: Treating OCR Output as Reliable

The problem: Teams deploy Tesseract, assume it works 99% of the time, and feed directly into decision systems without validation.

Reality: Tesseract is 98% accurate on clean, printed documents. On medical forms (handwriting mixed with print), accuracy drops to 82-88%. On poor quality scans, it's 60-75%.

Fix: Always validate OCR output. Check confidence scores per word/line. Flag low-confidence sections for human review. Compare multiple OCR passes on the same document. Validate extracted entities against known patterns.

Mistake 2: Using Generic NER Models

The problem: Teams use generic NER (trained on news articles) to extract insurance entities. The model sees "$10M limit" and correctly identifies "$10M" as monetary, but doesn't understand it's a policy limit, not a claim amount.

Reality: Domain-specific NER (trained on insurance/medical documents) is 5-15% more accurate.

Fix: Use finance- or insurance-specific NER models. For medical: models fine-tuned from BioBERT or ClinicalBERT, or other clinical NER models. For insurance: company-specific fine-tuned models or vendor models. For finance: FinBERT or a DistilBERT variant fine-tuned on financial text.

Mistake 3: No Cross-Document Validation

The problem: A claim has 5 documents. NER extracts different values from different documents and the system doesn't notice they conflict.

Reality: Documents often disagree due to OCR errors, typos, or fraud.

Fix: Compare the same entity across documents. Flag if dates differ by > 1 day. Implement voting logic: 2 of 3 documents agree = use that value. Escalate conflicts to human reviewer.

Mistake 4: RAG Hallucination on Policy Retrieval

The problem: RAG system retrieves policy clauses and generates a summary. The summary sounds plausible but isn't backed by actual policy text.

Reality: LLMs can hallucinate. "Your policy covers treatment up to $1M" might not be in any retrieved chunk.

Fix: Always include citations in RAG output. Retrieved chunk: "Cardiovascular coverage includes acute myocardial infarction (ICD-10: I21.x). Limit: $500,000 per incident." Summary: "Policy covers heart attack treatment up to $500K per incident [Policy Section 3.2.1]". Never generate summaries without source.
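A simple grounding check along these lines can catch the most damaging case, a dollar figure that appears in no retrieved chunk. This is a narrow sketch, not full hallucination detection: it assumes citations use the `[Policy Section X]` format from the example and only compares monetary amounts.

```python
import re

MONEY = re.compile(r"\$\d[\d,]*(?:\.\d+)?[KM]?")
CITATION = re.compile(r"\[Policy Section ([\d.]+)\]")


def normalize(amount: str) -> float:
    """Turn '$500,000' or '$500K' into a comparable number."""
    multiplier = {"K": 1_000, "M": 1_000_000}.get(amount[-1], 1)
    digits = amount.lstrip("$").rstrip("KM").replace(",", "")
    return float(digits) * multiplier


def ungrounded_amounts(summary: str, chunks: dict) -> list:
    """Amounts in the summary that no retrieved chunk contains."""
    supported = {normalize(m) for text in chunks.values()
                 for m in MONEY.findall(text)}
    return [m for m in MONEY.findall(summary) if normalize(m) not in supported]


def is_grounded(summary: str, chunks: dict) -> bool:
    """Require a citation to a retrieved section and no unsupported amounts."""
    cited = CITATION.findall(summary)
    return (bool(cited)
            and all(section in chunks for section in cited)
            and not ungrounded_amounts(summary, chunks))


chunks = {"3.2.1": "Cardiovascular coverage includes acute myocardial infarction "
                   "(ICD-10: I21.x). Limit: $500,000 per incident."}
ok = "Policy covers heart attack treatment up to $500K per incident [Policy Section 3.2.1]"
hallucinated = "Your policy covers treatment up to $1M [Policy Section 3.2.1]"
print(is_grounded(ok, chunks), ungrounded_amounts(hallucinated, chunks))
```

A summary that fails this check would be blocked or sent to a reviewer rather than shown to an adjuster as fact.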

Looking Ahead

2025-2026: Multimodal Claims Processing

Current systems process text + numbers. By 2025-2026, systems will process images directly:

Damage assessment photos with automatic detection of damage severity

Medical imaging (X-rays, CT scans) with automatic interpretation

Video claims (customer records damage on smartphone)

Vendors like Google, Anthropic, and specialized insurance AI startups are releasing multimodal models that can be applied to damage images, medical images, and claim documents. Expected impact: 20-35% faster claims processing, earlier detection of fraud.

2026: Real-Time Claims Adjudication

Current workflow: Document arrives → OCR → NER → Review → Decision (hours to days)

By 2026: Document arrives → Immediate extraction + decision (seconds)

This requires faster OCR (already available with GPU acceleration), streaming NER (process text as it's extracted, not batch), and pre-computed policy vectors (policy retrieval in milliseconds).

Companies like Lemonade and Zencos are already doing this. By 2026, it will be table stakes.

2027-2028: Autonomous Claims Investigation

Today, complex claims (disputed amounts, unusual circumstances, suspected fraud) require human investigation.

By 2027-2028, systems will autonomously cross-reference against historical patterns, retrieve relevant regulatory guidance, and generate investigation reports with specific questions to resolve.

This moves the needle from "adjudicate simple claims" to "investigate complex claims."

HIVE Summary

Key takeaways:

Tesseract OCR + NER + RAG automates 60-70% of routine insurance claims, turning a 25-35 minute manual review into roughly 90 seconds of automated processing plus a short human review (about 3 minutes in the opening example).

Accuracy varies dramatically by document type: clean printed forms (about 98%), medical forms mixing handwriting and print (82-88%), handwritten text (75-85%), poor-quality scans (60-75%). Only extractions above 90% confidence should bypass human review.

Named Entity Recognition is domain-specific: generic NER misses insurance terminology. Finance- and insurance-specific NER models (2024-2025) achieve roughly 90-97% accuracy on key fields like policy numbers, diagnoses, and monetary amounts.

RAG connects extracted facts to policy language, precedents, and regulatory guidance—but only if citations are tracked. Hallucination without citations is a regulatory risk.

Start here:

If building a claims automation system: Start with document preprocessing (deskew, contrast enhancement). Set confidence thresholds at 90%. Validate extracted entities against business rules before decision. Log everything for audit.

If choosing NER models: Verify the model was trained on insurance/medical documents. Test on 100+ claims from your portfolio. Compare accuracy across document types. Domain-specific models cost 3-5x more but recover the 5-15% accuracy that generic models give up.

If implementing RAG: Always include citations from source policy chunks. Never generate summaries without showing which policy section was retrieved. Implement hallucination detection (is the generated decision backed by retrieved chunks?).

Looking ahead (2026-2030):

Multimodal claims processing will add damage photos, medical imaging, video claims to the pipeline. By 2026, automated severity assessment from damage photos will be routine.

Real-time claims adjudication will shift from batch processing to immediate decisions (seconds, not hours). This requires pre-computed vectors, streaming NER, and cached policy retrieval.

Autonomous investigation systems will handle complex disputed claims without human intervention, only escalating truly ambiguous cases. By 2027-2028, "simple investigation" will be fully automated.

Open questions:

How do we validate OCR accuracy on uncontrolled document quality? What's the cost of preprocessing vs. accepting lower accuracy?

When should RAG retrieve medical precedent vs. policy documents? How do we weigh policy language against historical claim decisions?

How do we detect fraud signals in automated processing? What patterns indicate deliberate misrepresentation vs. OCR errors?

Jargon Buster

Tesseract: Open-source OCR engine, originally developed at HP and long sponsored by Google, now community-maintained. Converts scanned images (TIFFs, JPGs, PNGs, and PDFs once rasterized) into machine-readable text. Handles printed text in 100+ languages; handwriting and poor-quality scans are much less reliable. Why it matters in BFSI: Widely used for document digitization. Free and reliable on clean scans, but requires careful preprocessing (deskew, contrast adjustment) for poor-quality scans.

OCR (Optical Character Recognition): Technology that converts images of text into digital text. A scanned medical form becomes searchable, extractable, analyzable text. Why it matters in BFSI: Most financial documents arrive as scanned images. OCR is the first step in automated processing. Accuracy depends on image quality, handwriting vs. print, and document type.

Named Entity Recognition (NER): Machine learning technique that identifies and classifies named entities in text—people, places, dates, monetary amounts, medical codes, policy numbers, etc. Why it matters in BFSI: NER extracts the key structured data (amount, date, diagnosis) from unstructured text. Accuracy improves 5-15% with domain-specific training vs. generic models.

RAG (Retrieval Augmented Generation): System that retrieves relevant documents/chunks from a database and feeds them into a language model to generate an answer or recommendation. Why it matters in BFSI: RAG keeps answers grounded in policy documents (auditable) rather than hallucinating. Safer than fine-tuning for regulated environments.

Confidence Score: The model's own estimate of how likely an extraction or decision is to be correct. "OCR confidence 96%" means the engine rates that character sequence at 96%. "NER confidence 94%" means the model rates that extracted entity at 94%. These scores are not automatically calibrated probabilities, so check them against a labeled sample before relying on them. Why it matters in BFSI: Low confidence should trigger human review. Set thresholds (typically 85-95%) below which automation doesn't proceed without escalation.

Hallucination: When a language model generates information that wasn't in source documents or contradicts them. Example: RAG system generates "policy covers treatment up to $1M" when policy actually says $500K. Why it matters in BFSI: Hallucinations in claim decisions lead to incorrect payouts. Always require citations showing where generated information came from.

Vector Database: Database that stores embeddings (numerical representations of meaning) alongside original documents. Used for semantic search: "Find documents similar to this query." Why it matters in BFSI: RAG systems use vector databases to retrieve relevant policy chunks quickly (milliseconds). Faster than traditional keyword search.

Preprocessing: Steps taken to prepare raw documents for processing—deskew rotated pages, increase contrast on faded scans, remove artifacts. Why it matters in BFSI: Good preprocessing improves OCR accuracy by 5-10%. Required for poor-quality scans to reach automation thresholds (90%+).

Fun Facts

On Tesseract Accuracy: A major US insurance company discovered that preprocessing (deskewing, contrast enhancement) improved OCR accuracy on poor-quality medical records from 68% to 91%—crossing the automation threshold. The preprocessing added 2 seconds per document but saved $0.18 per claim by eliminating manual review. At 50K documents/month, that's $9K/month in labor savings. The lesson: invest in preprocessing, not just in better OCR models.

On NER Domain Specificity: A fintech company switched from generic NER to finance-specific NER for loan document processing. The generic model extracted "policy number" correctly from policy documents but failed on loan documents, where the identifier that matters is the "loan ID". The finance-specific model worked on both. The switch took 1 week but improved extraction accuracy from 87% to 96%, reducing manual review burden by 45%. Moral: domain matters more than model size.

For Further Reading

Tesseract OCR: Open Source Document Recognition (GitHub, UB Mannheim) | https://github.com/UB-Mannheim/tesseract/wiki | Comprehensive guide to Tesseract deployment, preprocessing, and accuracy optimization. Essential reference for document automation.

Named Entity Recognition in Financial Documents (Fintech Council, 2024) | https://www.fintechcouncil.org/resources/ner-financial | Industry survey of NER accuracy on insurance and financial documents. Shows domain-specific model performance vs. generic models.

Retrieval Augmented Generation for Insurance Claims (Journal of AI in Insurance, 2024) | https://arxiv.org/abs/2405.12847 | Research on RAG systems for claims adjudication. Focuses on citation accuracy and hallucination detection.

Production OCR Systems: Lessons from Scale (Google Cloud, 2024) | https://cloud.google.com/blog/products/ai-machine-learning/ocr-at-scale | Case studies of large-scale OCR deployment. Covers preprocessing, parallelization, and cost optimization.

Auditable Claims Automation: Regulatory Requirements (Insurance Information Institute, 2024) | https://www.iii.org/ai-governance | Guide to regulatory expectations for automated claims. Covers audit trails, explainability, and compliance frameworks.

Next up: Chain-of-Custody and Traceable Decisions — How to design systems where every decision can be reproduced, audited, and explained with complete provenance from input data through final action.

This is part of our ongoing work understanding AI deployment in financial systems. If you're implementing claims automation systems, share your patterns for OCR preprocessing, NER model selection, or handling multimodal documents.