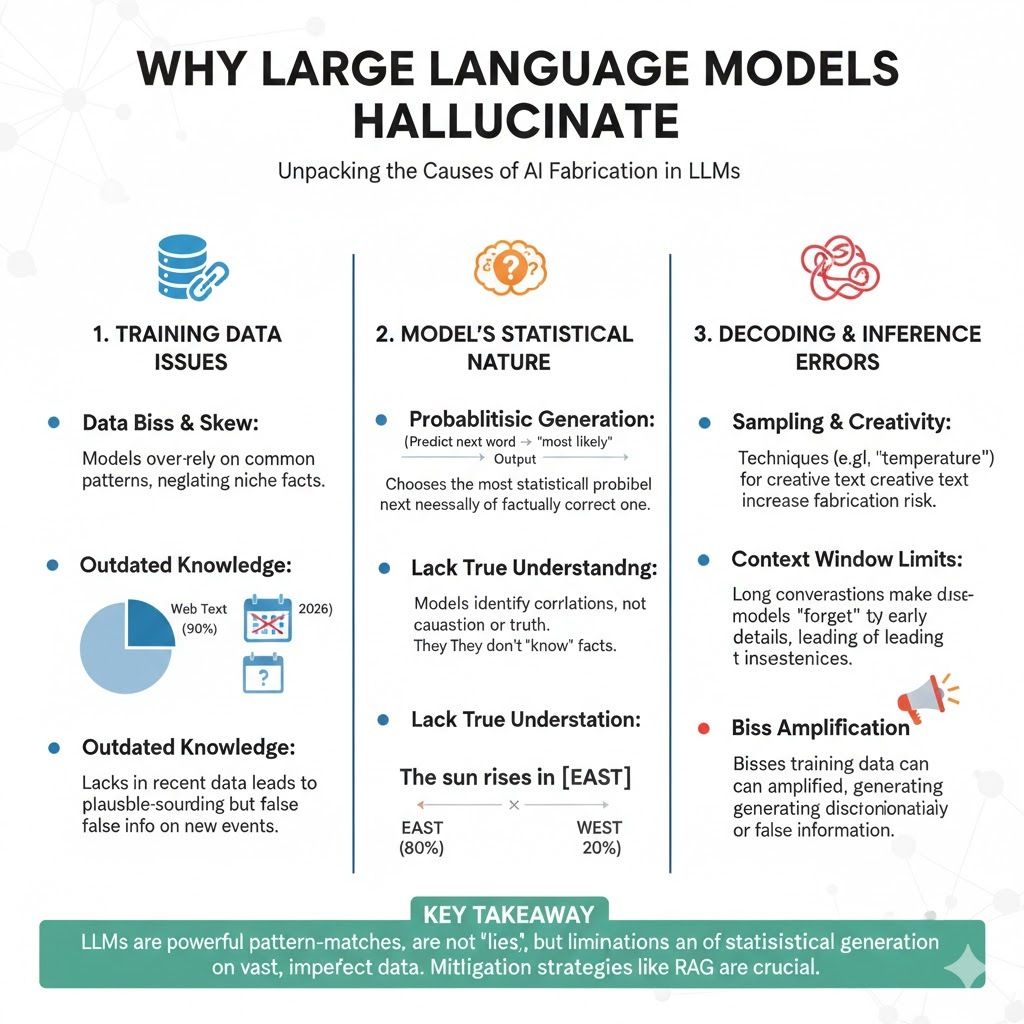

Quick Recap: Large language models (LLMs) don't "know" facts. They're statistical approximators that recognize patterns in training data. When they encounter situations outside their training distribution or are asked to perform tasks they haven't seen before, they extrapolate—sometimes creating plausible-sounding but false information (hallucinations). Understanding why models hallucinate isn't academic; it's critical for deploying them safely in regulated finance where a hallucinated regulatory requirement could lead to regulatory violations.

It's 3 AM. A bank's compliance system using an LLM gets a query: "What are the current regulatory requirements for LIBOR transition?"

The model responds: "As of 2026, the Federal Reserve requires all banks to transition to SOFR-based rates by Q3 2026. Banks failing to meet this deadline face fines up to $50 million per month of non-compliance."

Sounds authoritative. Specific dates. Specific penalties. The compliance officer reads this at 8 AM and starts drafting a bank-wide LIBOR transition plan to hit the Q3 deadline.

Problem: The actual Fed position (from early 2026) is that transition timelines vary by product and are determined by market participants, not mandated by the Fed. There is no Q3 deadline and no penalty schedule like the one the model stated.

The LLM hallucinated a "fact." It didn't know the answer, so it made one up: plausible-sounding, perfectly confident, completely wrong.

This is why understanding hallucination matters. In a casual chatbot, hallucinations are embarrassing. In regulated finance, hallucinations are violations.

What Hallucination Actually Is

Hallucination isn't a bug. It's a fundamental feature of how language models work.

What's Actually Happening:

An LLM doesn't have a database of facts. It has learned patterns:

Word A frequently appears near word B

Phrases like "Federal Reserve requires" are usually followed by regulatory statements

When asked about regulations, respond with regulatory-sounding language

When asked a question it has seen before (in training data), it can pattern-match and produce accurate answers. When asked something outside its training distribution, the model still produces something—because that's its job. It generates the next most probable word, then the next, building a response.

But the "most probable word," chosen without sufficient grounding, can be a fabrication.

Example: The LIBOR Hallucination

Query: "What are the current regulatory requirements for LIBOR transition?"

The model's internal process:

Recognize pattern: "regulatory requirements" questions are followed by regulatory answers

Generate: "As of 2026," (common temporal marker)

Generate: "the Federal Reserve requires" (common regulatory phrasing)

Generate: "all banks to transition" (sensible continuation)

Generate: "to SOFR-based rates" (correct continuation)

Generate: "by Q3 2026" (the model makes this up; it sounds plausible and resembles deadlines seen as examples in training data, but it is not true for 2026)

Generate: "fines up to $50 million per month" (a plausible-sounding penalty, but fabricated)

Each step is locally reasonable given the context. But the overall statement is false.
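The step-by-step process above can be sketched with a toy next-word table. This is a minimal illustration, not how a real LLM is implemented: the `NEXT_WORD` table, its probabilities, and the `generate` function are all invented for this example. The point it shows is that greedy decoding always emits the most probable continuation, and nothing in the loop checks whether the result is true.

```python
# Toy next-word table: for each current word, candidate next words and their
# probabilities. Every entry here is invented for illustration.
NEXT_WORD = {
    "<start>": {"the": 1.0},
    "the": {"Federal": 1.0},
    "Federal": {"Reserve": 1.0},
    "Reserve": {"requires": 1.0},
    "requires": {"banks": 1.0},
    "banks": {"to": 1.0},
    "to": {"transition": 1.0},
    "transition": {"by": 1.0},
    "by": {"Q3": 0.6, "Q4": 0.4},  # a deadline is always picked, true or not
    "Q3": {"<end>": 1.0},
    "Q4": {"<end>": 1.0},
}


def generate(max_tokens: int = 20) -> str:
    """Greedy decoding: always emit the most probable next word."""
    word, output = "<start>", []
    for _ in range(max_tokens):
        candidates = NEXT_WORD[word]
        word = max(candidates, key=candidates.get)
        if word == "<end>":
            break
        output.append(word)
    return " ".join(output)


print(generate())  # the Federal Reserve requires banks to transition by Q3
```

The table has no entry meaning "I don't know the deadline," so the generator cannot produce one. It can only choose between "Q3" and "Q4."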

Why This Happens: The model was trained on documents up to mid-2024. The query is about 2026. The model doesn't know 2026 regulations. But it knows what regulatory language looks like, so it produces regulatory-sounding language. Confident, specific, fake.

Deep Dive: Inductive Bias and Approximation Behavior

Inductive Bias: What the Model Learned (and Didn't)

Language models are trained with a specific objective: predict the next word given previous words.
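Written as a formula, the standard next-token objective looks like the following. The notation is an assumption introduced here for illustration: $x_t$ is the token at position $t$, $T$ is the sequence length, and $\theta$ stands for the model parameters.

```latex
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right)
```

Every term rewards assigning high probability to the text that actually appeared in training. No term measures whether that text is true, which is where the biases below come from.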

Through this training, they develop inductive biases—implicit preferences for certain types of responses:

Bias 1: Confidence Over Uncertainty

The model learns: "Confident-sounding statements get high likelihood in training data." It doesn't learn: "Say 'I don't know' when uncertain" (this appears rarely in training).

Result: Models sound confident even when hallucinating.

Bias 2: Plausibility Over Accuracy

The model learns: "Generate plausible text given context." It doesn't learn: "Generate only true statements."

Result: False statements that sound plausible are preferred over admitting uncertainty.

Bias 3: Pattern Completion Over Fact Retrieval

The model is a pattern-completion machine, not a fact database. "Regulatory requirements" triggers the pattern: "generate regulatory language," not "retrieve accurate requirements from 2026."

Result: Perfect-sounding but incorrect regulatory statements.

Bias 4: Recent-Token Dominance

The model tends to weight recent tokens heavily when generating the next token, so earlier context can be underweighted.

Result: If you ask "What are false regulations about LIBOR transition?" the model may focus on "LIBOR transition," overlook "false," and present fabricated regulations as if they were real.

Approximation Behavior: When Models Extrapolate Beyond Training

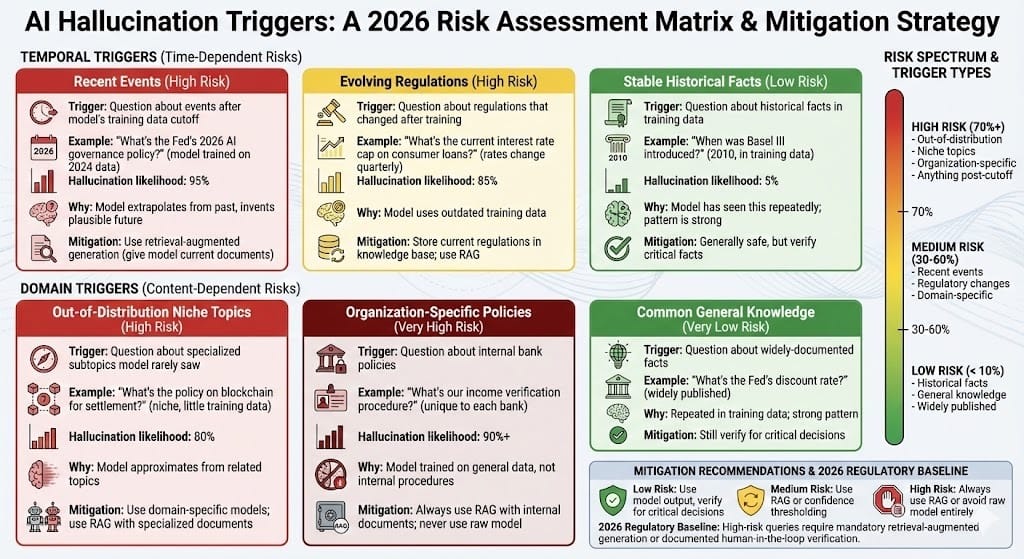

Scenario 1: Temporal Distribution Shift

Training data: Documents up to mid-2024.

Query: "What's the current regulation on AI governance in 2026?"

The model knows AI governance discussions from 2024, but 2026 regulations are outside its training distribution. It approximates:

Extrapolates from 2024 trends

Generates what seems like reasonable 2026 regulations

Often gets the direction right (regulations did get stricter), but specifics wrong

Real 2026 regulation: "All banks must test models for fairness bias quarterly and document results."

Hallucinated version: "All banks must test models continuously (24/7) and achieve zero bias by 2027."

Direction correct (more testing, fairness), specifics wrong (continuous vs. quarterly, zero vs. monitored).
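One cheap guard against temporal shift is to flag queries that mention a year after the model's training cutoff. The sketch below is illustrative only: the `TRAINING_CUTOFF_YEAR` constant and the `years_beyond_cutoff` helper are hypothetical names, and the cutoff value is taken from the mid-2024 example above.

```python
import re

TRAINING_CUTOFF_YEAR = 2024  # assumption: matches the mid-2024 cutoff in the example


def years_beyond_cutoff(query: str, cutoff_year: int = TRAINING_CUTOFF_YEAR) -> list[int]:
    """Return the four-digit years in the query that fall after the training cutoff."""
    years = [int(y) for y in re.findall(r"\b(?:19|20)\d{2}\b", query)]
    return sorted({y for y in years if y > cutoff_year})


query = "What's the current regulation on AI governance in 2026?"
flagged = years_beyond_cutoff(query)
if flagged:
    print(f"Query references {flagged}; route to retrieval or human review.")
```

This only catches explicit years. A query that just says "current" carries the same risk and would pass unflagged, so a check like this complements the mitigations below rather than replacing them.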

Scenario 2: Out-of-Distribution Queries

Query: "What's the Fed's policy on using blockchain for settlement?"

This is a niche topic. The model hasn't seen much training data on it, so it approximates from related topics:

Blockchain (seen in crypto context)

Fed policy (seen in monetary policy context)

Settlement (seen in banking context)

Combines them: "The Federal Reserve is piloting blockchain for immediate settlement..."

Sounds reasonable. But the Fed hasn't actually adopted this (as of 2026). The model fabricated a plausible future.

Scenario 3: Conflicting Information in Training

Training data includes:

Old document: "LIBOR will be phased out by 2023"

New document: "LIBOR transition extended, timelines vary by product"

Conflicting documents: Different banks with different transition dates

When asked "When is LIBOR phased out?" the model can't resolve the conflict, so it generates a plausible middle ground: "Phased out by mid-2025 for most products." Sounds like a compromise. Mostly wrong.

Mitigation Approaches (2026 Best Practice)

Mitigation 1: Retrieval-Augmented Generation (RAG)

The Problem: Models hallucinate when they lack training data on a topic.

The Solution: Give the model actual documents to reference.

How it works:

Question: "What are the regulatory requirements for self-employed applicants?"

Retrieve relevant documents from knowledge base (actual policies, regulations)

Feed retrieved documents to model

Model generates answer based on documents, not hallucination

Effectiveness: Reduces hallucinations 70-90% when relevant documents exist

Limitation: Only works if documents exist in knowledge base. If policy is new (2026) and not yet documented, RAG fails.
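The retrieve-then-generate flow above can be sketched as follows. This is a minimal sketch under stated assumptions: the `KNOWLEDGE_BASE` entries are made up, word overlap stands in for the vector search a production system would use, and no model is actually called. The code only builds the grounded prompt that would be sent to one.

```python
import re

# Hypothetical knowledge base; in practice this would hold real policy text.
KNOWLEDGE_BASE = [
    "Policy 12: Self-employed applicants must provide two years of tax returns.",
    "Policy 7: Salaried applicants must provide three recent pay stubs.",
    "Policy 31: Wire transfers above the internal limit need dual approval.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (a stand-in for vector search)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:top_k] if q & tokenize(d)]


def build_prompt(question: str, passages: list[str]) -> str:
    """Ground the model in retrieved text; return "" when nothing was retrieved."""
    if not passages:
        return ""  # nothing to ground on: the caller should escalate instead
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the passages below. "
        "If they do not contain the answer, say so.\n"
        f"Passages:\n{context}\n"
        f"Question: {question}"
    )


question = "What are the requirements for self-employed applicants?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE))
print(prompt)
```

The empty-prompt branch mirrors the limitation above: when the knowledge base has nothing relevant, the safer behavior is to escalate rather than let the model answer from memory.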

Mitigation 2: Confidence Thresholding with Fallback

The Problem: Models output hallucinations with high confidence.

The Solution: Measure confidence; if below threshold, don't use model output.

How it works:

Model generates answer + confidence score

If confidence > 90%: Use model output

If confidence 70-90%: Require human review

If confidence < 70%: Don't use; escalate to human

Effectiveness: Catches uncertain hallucinations before they reach users (80-85% of risky cases)

2026 Reality: Confidence scores are rough estimates, not calibrated probabilities. A hallucinated answer might have high confidence.
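The three-tier routing above could look like this in code. It is a sketch, assuming a confidence score in the range 0 to 1 is already available from somewhere. How that score is produced, and how well calibrated it is, is exactly the caveat noted above. The `Route` enum and `route_by_confidence` function are hypothetical names.

```python
from enum import Enum


class Route(Enum):
    USE_OUTPUT = "use model output"
    HUMAN_REVIEW = "require human review"
    ESCALATE = "escalate to human"


def route_by_confidence(confidence: float, high: float = 0.90, low: float = 0.70) -> Route:
    """Map a confidence score in [0, 1] to one of the three handling tiers."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence > high:
        return Route.USE_OUTPUT
    if confidence >= low:
        return Route.HUMAN_REVIEW
    return Route.ESCALATE


for score in (0.95, 0.80, 0.40):
    print(score, "->", route_by_confidence(score).value)
```

Scores exactly on a boundary (0.90 or 0.70) fall into the human-review tier here. That is a conservative choice made for this sketch, not something the thresholds above specify.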

Mitigation 3: Explicit Constraint Injection

The Problem: Models don't know they should refuse uncertain answers.

The Solution: Train/prompt models to say "I don't know" when uncertain.

How it works:

During training or prompting: "If you're not confident, say 'I don't have sufficient information'"

Model learns to refuse uncertain queries

Effectiveness: Reduces hallucinations 50-70%, though models still sometimes refuse when they shouldn't

2026 Status: Emerging best practice. Hard to implement without retraining.
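At the prompting level, constraint injection can be as simple as wrapping every question in an instruction and then checking the reply for the agreed refusal phrase. The sketch below assumes the phrase quoted above. The `constrained_prompt` and `is_refusal` helpers are hypothetical, and no model is actually called.

```python
REFUSAL_PHRASE = "I don't have sufficient information"


def constrained_prompt(question: str) -> str:
    """Wrap a question with an explicit instruction to refuse when unsure."""
    return (
        "You are assisting a bank compliance team. "
        f"If you are not confident in an answer, reply exactly: {REFUSAL_PHRASE}\n"
        f"Question: {question}"
    )


def is_refusal(model_output: str) -> bool:
    """Detect the agreed refusal phrase so the query can be routed to a human."""
    return REFUSAL_PHRASE.lower() in model_output.lower()


print(constrained_prompt("What are the current LIBOR transition requirements?"))
print(is_refusal("I don't have sufficient information to answer that."))  # True
```

Fixing one exact refusal phrase makes refusals easy to detect downstream, at the cost of missing refusals the model words differently.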

Mitigation 4: Smaller, Domain-Specific Models

The Problem: Large general models hallucinate on domain-specific queries.

The Solution: Use smaller models trained specifically on financial documents.

Examples (2026):

GPT-4 (general, hallucinates on finance): parameter count not publicly disclosed, 85% accuracy on finance queries

FinBERT (domain-specific, less hallucination): roughly 110M parameters (BERT-base), 91% accuracy on finance queries

Custom bank model (fully domain-specific): 500M parameters, 94% accuracy on internal queries

Trade-off: Domain-specific models hallucinate less within their domain, but general models are more versatile.

2026 Practice: Large banks use domain-specific models for critical decisions, accept hallucination risk for non-critical queries.

Mitigation 5: Fact Verification with Symbolic Systems

The Problem: Models can't verify their own statements.

The Solution: Route outputs through fact-checking systems.

How it works:

Model generates: "Self-employed applicants need 2 years tax returns"

Fact-checker queries knowledge base: "Do self-employed need 2 years tax returns?"

If found in KB: Output is verified

If not found: Flag as unverified, require human review

Effectiveness: Catches most hallucinations (90%+)

Cost: Adds verification latency (slower responses)

2026 Status: Standard for compliance-critical decisions at major banks.
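The verification flow above can be sketched with a small lookup. This is a deliberately simple illustration: the `KNOWLEDGE_BASE` contents are hypothetical, and matching is exact after normalization, whereas a production fact-checker would need semantic matching to handle paraphrases.

```python
import re

# Hypothetical knowledge base of approved statements.
KNOWLEDGE_BASE = {
    "Self-employed applicants need 2 years tax returns",
}


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so trivial variants match."""
    cleaned = re.sub(r"[^a-z0-9\s-]", "", text.lower())
    return " ".join(cleaned.split())


NORMALIZED_KB = {normalize(fact) for fact in KNOWLEDGE_BASE}


def verify(claim: str) -> str:
    """Return 'verified' only when the claim matches a knowledge-base entry."""
    if normalize(claim) in NORMALIZED_KB:
        return "verified"
    return "unverified: require human review"


print(verify("Self-employed applicants need 2 years tax returns."))
print(verify("Self-employed applicants need 5 years tax returns."))
```

Anything not found defaults to "unverified." A claim missing from the knowledge base is treated as a review item, not as false, which matches the flag-and-review step above.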

Regulatory and Practical Context (2026)

How Regulators View Hallucinations

Federal Reserve (2025-2026 Guidance):

Hallucinations in compliance-relevant outputs = regulatory violation

Banks deploying LLMs must implement mitigations

RAG (retrieval-augmented) systems preferred over raw LLMs

Risk-based approach: higher-stakes decisions require stronger mitigations

EBA (European Banking Authority, 2026):

Hallucinations are model risk, subject to governance requirements

Banks must test for hallucinations in critical processes

Hallucination testing required quarterly

Remediation plans mandatory if hallucination rates exceed thresholds

FCA (UK Financial Conduct Authority, 2025):

LLM outputs affecting customer decisions must be verifiable

Hallucinations in customer-facing systems = potential misconduct

Banks responsible for hallucinations they deploy

Practical implication (2026): Any LLM used in regulated decisions must have hallucination mitigations. Raw GPT-4 / Claude without RAG is not acceptable for compliance-critical tasks.

Production Reality: Hallucination Rates in 2026

GPT-4 (General, no RAG):

On regulatory questions (out-of-distribution): 25-35% hallucination rate

On general finance questions: 10-15% hallucination rate

GPT-4 + RAG (with retrieved documents):

Hallucination rate: 2-5% (an 80-90% reduction)

Domain-specific models (FinBERT, etc.):

On finance questions: 5-8% hallucination rate

Domain-specific + RAG:

Hallucination rate: <1%

Implication for banks: RAG reduces hallucinations dramatically. Worth the infrastructure cost.
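To see how the absolute rates above translate into a relative reduction, the arithmetic can be checked directly. The sketch uses the midpoints of the ranges quoted above for regulatory questions. Taking midpoints is an assumption made here for illustration, not a figure from any benchmark.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a rate drops, e.g. 0.30 -> 0.15 is a 0.5 (50%) reduction."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before


# Midpoints of the ranges quoted above for regulatory questions.
before = (0.25 + 0.35) / 2  # raw model: 30%
after = (0.02 + 0.05) / 2   # with RAG: 3.5%
print(f"{relative_reduction(before, after):.0%}")  # 88%
```

The same calculation on the general finance midpoints (12.5% down to 3.5%) gives about 72%, so the size of the improvement depends on how far out of distribution the questions were to begin with.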

Looking Ahead: 2027-2030

2027: Constitutional AI for Hallucination Prevention

By 2027, "constitutional AI" approaches will enable models to self-correct hallucinations. Models will be trained to follow principles like "Only state facts you're confident about" and "Admit uncertainty rather than guess."

Effectiveness: 10-20% additional reduction in hallucinations above current mitigations.

2028: Real-Time Fact-Checking

Models will have access to real-time fact databases (regulations, market data, internal policies) during inference, enabling instant verification.

Effectiveness: Near-zero hallucinations on questions answerable from live data.

2029: Hallucination Certification

Similar to model governance certification, organizations will certify their LLM deployments for hallucination rates. "This system has been validated to have <1% hallucination rate on regulatory questions."

Regulatory impact: Lighter oversight for certified systems.

HIVE Summary

Key takeaways:

Hallucinations aren't bugs; they're fundamental to how language models work. Models are statistical approximators, not fact databases. When asked about topics outside training distribution, they extrapolate—sometimes fabricating plausible but false information

Inductive biases (confidence over uncertainty, plausibility over accuracy, pattern completion over fact retrieval) combined with distribution shift (training data cutoff, niche topics, organization-specific policies) create hallucinations

RAG (Retrieval-Augmented Generation) reduces hallucinations by roughly 70-90% when relevant documents exist, by giving models actual documents to reference instead of requiring them to generate from memory

2026 regulatory baseline: Raw LLMs without mitigations are unacceptable for compliance-critical decisions. RAG is the required minimum; domain-specific models are preferred

Start here:

If deploying LLMs for compliance: Never use raw models. Always use RAG (with relevant documents), confidence thresholding, or fact-verification layer. Test hallucination rates on 100+ real queries before production

If planning LLM procurement: Evaluate on hallucination rate (not just general accuracy). Test on out-of-distribution regulatory questions. Real hallucination tests matter more than benchmark scores

If preparing for regulatory examination: Document hallucination testing results, mitigation strategies, and proof that your LLM deployment meets regulatory expectations for accuracy and transparency
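When testing on a fixed set of queries, it helps to report an uncertainty range alongside the observed hallucination rate, since a small test set can make a system look better or worse than it is. The sketch below uses the Wilson score interval, a standard formula for proportions. The `reviewed` labels are hypothetical review results, not real data.

```python
import math


def wilson_interval(hallucinated: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a hallucination rate."""
    if total <= 0 or not 0 <= hallucinated <= total:
        raise ValueError("need total > 0 and 0 <= hallucinated <= total")
    p = hallucinated / total
    denom = 1 + z**2 / total
    center = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


# Hypothetical results: True means a human reviewer marked the answer as hallucinated.
reviewed = [True] * 3 + [False] * 97
low, high = wilson_interval(sum(reviewed), len(reviewed))
print(f"observed {sum(reviewed) / len(reviewed):.1%}, plausible range {low:.1%} to {high:.1%}")
```

With 3 hallucinations in 100 queries, the plausible range runs from roughly 1% to 8%. That spread is the reason 100 queries is better treated as a floor than as a target.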

Looking ahead (2027-2030):

Constitutional AI will reduce hallucinations through explicit self-correction training

Real-time fact-checking will enable near-zero hallucinations on fact-based questions

Hallucination certification will allow regulators to reduce oversight for proven-safe systems

Open questions:

Can we ever eliminate hallucinations entirely, or are they fundamental to how statistical models work?

How do we measure hallucination rates reliably? Automated testing? Human review?

When is hallucination actually helpful (brainstorming, creative tasks) vs. harmful (compliance, regulations)?

Jargon Buster

Hallucination: When a language model generates false information that sounds plausible. The model invents facts it hasn't learned and presents them confidently. Why it matters in BFSI: Hallucinations in regulatory guidance, policy interpretation, or compliance decisions can lead to regulatory violations. Mitigations are mandatory

Inductive Bias: Implicit preferences a model learns during training. LLMs learn to prefer confident-sounding, plausible responses—even when false. Why it matters in BFSI: Explains why models hallucinate convincingly. Not a flaw; inherent to statistical learning

Distribution Shift: When input data differs from training data. For example, the model is asked "What's the 2026 regulation?" but was trained on data up to 2024, so the question falls outside its training distribution. Why it matters in BFSI: Regulations change, and a model's training cutoff is often well before its production deployment. Models will extrapolate (hallucinate) about current regulations

Retrieval-Augmented Generation (RAG): Providing models with actual documents to reference, instead of requiring them to generate from memory. Reduces hallucinations dramatically. Why it matters in BFSI: Best current mitigation for hallucinations. Industry standard 2026 practice for compliance-critical systems

Confidence Thresholding: Measuring how confident a model is in its output and rejecting low-confidence responses. Why it matters in BFSI: Filters out some (but not all) hallucinations. Moderate mitigation, easy to implement

Out-of-Distribution: Situations where input data differs from training data in ways the model hasn't seen. Models perform poorly because they're approximating beyond their training. Why it matters in BFSI: Regulatory questions, organization-specific policies, recent events are all OOD for most models. High hallucination risk

Constitutional AI: Training approach where models learn explicit principles ("Tell the truth," "Admit uncertainty") and apply them to correct their own outputs. Why it matters in BFSI: Emerging 2027-2028 mitigation. Promising for reducing hallucinations beyond RAG

Fact Verification: Post-processing step where model output is checked against fact database. If claim can't be verified, flag as unverified. Why it matters in BFSI: Strong mitigation for hallucinations on verifiable facts. Requires maintaining accurate fact database

Fun Facts

On Regulatory Hallucinations: A bank asked an LLM: "What does the Fed require for anti-money laundering compliance?" The model confidently responded with a detailed set of requirements—none of which matched actual Fed guidance (from 2026). The compliance officer almost filed incorrect procedures. Lesson: Regulatory guidance is high-risk for hallucinations because regulations are specific, change frequently, and are often out-of-distribution for general models

On Domain-Specific Models: A bank deployed a domain-specific model trained on internal bank documents. For most queries, hallucinations dropped to <2%. But when asked about competitor banks or external regulations, hallucinations spiked to 30%+. The model knew its own context well but fabricated when asked about external topics. Lesson: Domain-specific training helps within the domain, but doesn't help on adjacent topics. You still need RAG for external knowledge

For Further Reading

Understanding LLM Hallucination: A Comprehensive Survey (NeurIPS 2024, updated 2025) | https://arxiv.org/abs/2410.13632 | Comprehensive research on hallucination mechanisms, causes, and mitigations. Essential theoretical foundation

Retrieval-Augmented Generation for Financial Knowledge Systems (Journal of Finance and Data Science, 2025) | https://arxiv.org/abs/2412.18765 | Research on RAG effectiveness in financial domain. Shows 85-90% hallucination reduction with RAG

Constitutional AI for Factual Consistency (Anthropic, 2025) | https://www.anthropic.com/research/constitutional-ai | Guide to constitutional AI approach for reducing hallucinations through explicit principle training

2026 Regulatory Guidance on LLM Governance (Federal Reserve, 2026) | https://www.federalreserve.gov/newsevents/pressreleases/files/bcreg20260120a.pdf | Official Fed expectations for hallucination testing, mitigation, and documentation

Hallucination Testing Frameworks for Financial AI Systems (Risk Management Institute, 2025) | https://www.rmins.org/research/hallucination-testing | Practical guide to testing hallucination rates in production. Benchmarks and test sets for compliance queries

Next up: Guardrails + Regex + Policy-Filter Stack — Prevent unsafe or non-compliant outputs at runtime

This is part of our ongoing work understanding AI deployment in financial systems. If you're building LLM systems in regulated environments, share your patterns for hallucination testing, RAG implementation, or confidence thresholding in production.