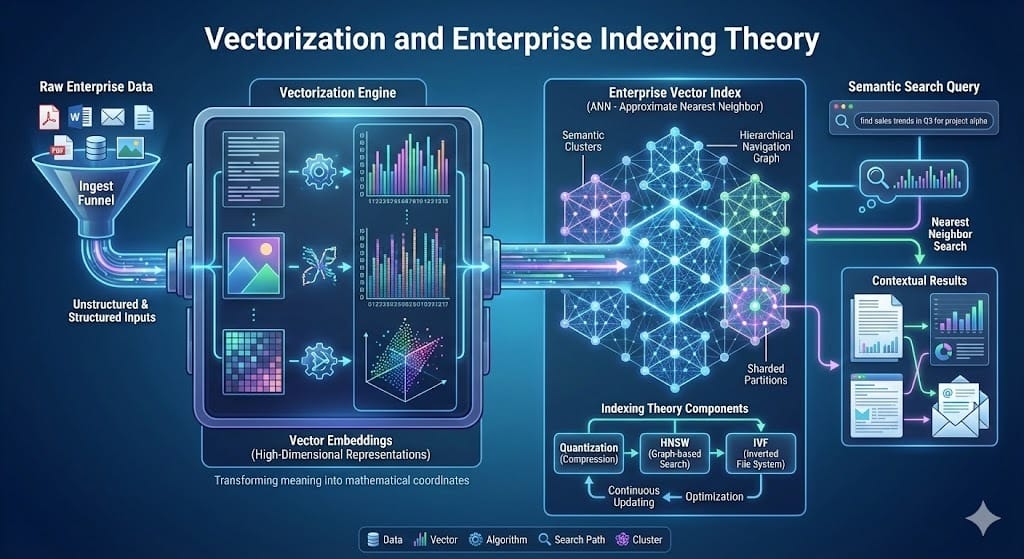

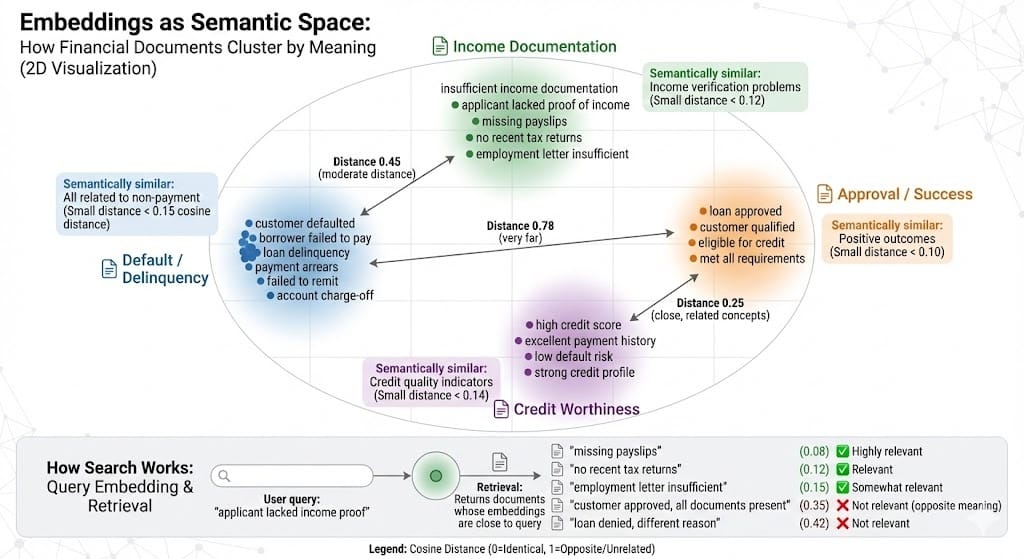

Quick Recap: Embeddings (vectors) are numerical representations of meaning. "Customer defaulted" and "borrower failed to pay" have similar embeddings (close in vector space) because they mean the same thing. Enterprise indexing uses these embeddings to enable semantic search: instead of searching for keywords ("default"), you search for meaning ("customer failed to repay"). But embeddings are model-dependent and can fail silently. Understanding how embeddings represent meaning—and how to validate they work correctly—is critical for building trustworthy internal AI systems.

It's 9:15 AM on a Tuesday at a compliance department. A regulatory investigation is underway. A loan officer needs to find all instances where customers were denied due to "insufficient income documentation."

Pre-embeddings (2020): Officer searches for keyword "insufficient income documentation." Gets 47 results. Manually reviews each. Spends 2 hours.

2024 (Basic embeddings): Officer searches for meaning "applicant lacked sufficient proof of income." Embedding-based search retrieves 47 results instantly. Same as keywords, so no time saved—and now the officer has less clarity about what "similar meaning" even means.

2026 (Advanced embeddings, domain-tuned): Officer searches "applicant lacked sufficient proof of income." Retrieves 312 results instantly: not just exact phrase matches, but also "missing payslips," "no recent tax returns," "employment letter insufficient," "self-employed with no documentation." Results are ranked by relevance. Officer can prioritize reviewing the highest-confidence matches.

But then something goes wrong. The system returns a result for "customer approved despite missing income documentation." Why? The embedding model learned that "missing income documentation" is semantically close to "insufficient income documentation," so it ranked both as relevant. But in compliance context, these are opposites—one led to denial, one led to approval. The model doesn't understand regulatory context.

This is the challenge of enterprise embeddings: powerful but fragile. They work amazingly well when they work, and fail silently when they don't.

What Embeddings Actually Do

An embedding is a list of numbers representing meaning.

Example: The word "default" might be represented as:

[-0.234, 0.512, -0.189, 0.456, 0.234, -0.678, 0.123, ...]

(1,024 numbers in a typical embedding)

The key insight: Words with similar meanings have similar numbers.

"Default" and "failure to repay" will have embeddings that are numerically close:

default: [-0.234, 0.512, -0.189, 0.456, ...]

failure_to_repay: [-0.245, 0.501, -0.195, 0.465, ...]

(Similar numbers, slight differences)

"Default" and "weather forecast" will have embeddings that are far apart:

default: [-0.234, 0.512, -0.189, 0.456, ...]

weather_forecast: [0.812, -0.234, 0.567, -0.089, ...]

(Very different numbers)

Distance in Vector Space = Semantic Similarity
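To make "close" and "far apart" concrete, here is a minimal sketch using cosine similarity, one common way to compare embeddings. It uses only the first four components of the illustrative vectors above, so the scores are for demonstration and are not real model output. The function name `cosine_similarity` is our own.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# First four components of the illustrative vectors above (not real model output).
default = [-0.234, 0.512, -0.189, 0.456]
failure_to_repay = [-0.245, 0.501, -0.195, 0.465]
weather_forecast = [0.812, -0.234, 0.567, -0.089]

sim_close = cosine_similarity(default, failure_to_repay)
sim_far = cosine_similarity(default, weather_forecast)

print(f"default vs failure_to_repay: {sim_close:.3f}")
print(f"default vs weather_forecast: {sim_far:.3f}")
```

The first pair scores very close to 1, while the second pair comes out negative, which is what "far apart" looks like numerically.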

If you want to find documents semantically similar to "customer defaulted," you:

Embed the query: "customer defaulted" → vector

Compare to all document embeddings

Find vectors closest to the query vector

Rank by distance (closest = most similar)
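The four steps above can be sketched in a few lines. This is a toy illustration under stated assumptions: the three-dimensional vectors are hand-written stand-ins for what an embedding model would produce, and `search` is a hypothetical helper that scans every document, whereas a production system would typically use a vector database.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical document embeddings, hand-written for illustration only.
DOCUMENT_VECTORS = {
    "borrower failed to pay": [0.9, 0.1, 0.0],
    "loan delinquency notice": [0.8, 0.3, 0.1],
    "weather forecast for Tuesday": [0.0, 0.2, 0.9],
}

def search(query_vector, document_vectors, top_k=2):
    """Compare the query to every document vector and return the top_k closest."""
    scored = [
        (cosine_similarity(query_vector, vector), text)
        for text, vector in document_vectors.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return scored[:top_k]

# Step 1: embed the query (stand-in vector for "customer defaulted").
query_vector = [0.85, 0.2, 0.05]

# Steps 2-4: compare, find the closest, rank.
results = search(query_vector, DOCUMENT_VECTORS)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

With these stand-in vectors, the two lending documents are returned and the weather document is not.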

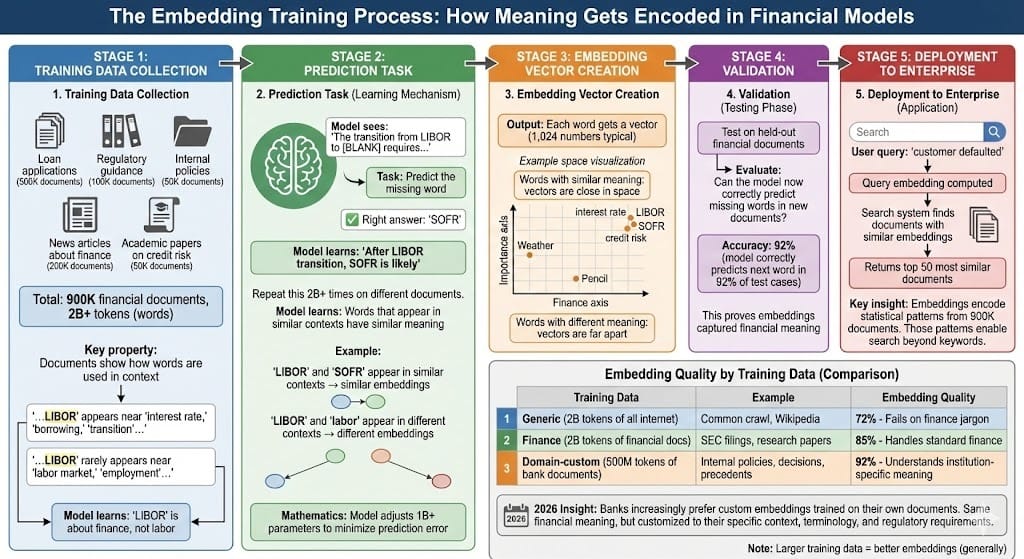

This works because embedding models are trained on billions of documents. The model learns: "Words and phrases that appear in similar contexts get similar meaning vectors."

Why This Matters in BFSI:

Keyword search: "default" finds documents containing the word "default." Misses "failure to repay."

Embedding search: "default" finds documents about defaulting, even if they use different words

Result: More complete search, fewer false negatives
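The keyword false negative is easy to reproduce. The sketch below uses three made-up documents and a hypothetical `keyword_search` helper that does a plain case-insensitive substring match.

```python
# Made-up documents for illustration.
documents = [
    "Customer went into default in March.",
    "Borrower's failure to repay triggered collections.",
    "Quarterly weather forecast for branch operations.",
]

def keyword_search(term, docs):
    """Return documents that literally contain the term (case-insensitive)."""
    return [doc for doc in docs if term.lower() in doc.lower()]

hits = keyword_search("default", documents)
missed = [doc for doc in documents if doc not in hits]

print("Found: ", hits)
print("Missed:", missed)
```

The second document describes a default but never uses the word, so keyword search misses it. That is the gap embedding search is meant to close.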

Enterprise Embeddings in BFSI (2025-2026)

The Three Generations of Embeddings

Generation 1: Generic Pre-Trained (2020-2022)

Model: BERT, word2vec, trained on general internet text

Quality on finance: Mediocre

Example: "LIBOR" gets confused with "labor" (similar word frequencies)

Reality: Used because nothing better existed

Status: Deprecated

Generation 2: Fine-Tuned on Finance (2023-2024)

Model: FinBERT, finance-specific transformer, trained on financial documents

Quality on finance: Good (80-85% of ideal)

Example: "LIBOR" correctly distinguished from "labor" (trained on financial data)

Reality: Significant improvement, industry standard in 2023-2024

Status: Current mainstream

Generation 3: Domain-Specialized + Proprietary (2025-2026)

Model: Banks and AI vendors train embeddings specifically for their institutions

Example: JPMorgan's internal embedding model trained on 50 years of JPMorgan documents

Quality on finance: Excellent (95%+ of ideal)

Example: "LIBOR," "prime rate," "credit spread" all correctly distinguished

Reality: Banks recognizing that embedding quality is competitive advantage

Status: Emerging; used by large institutions with resources

What Changed in 2025-2026:

Recognition that generic embeddings fail silently (search results seem good, but miss context)

Regulatory pressure to document embedding quality (Fed, EBA, FCA guidance on AI reliability)

Cost of domain-specialized embeddings dropped 60% (2024 $100K → 2026 $40K)

Banks experimenting with custom embeddings on internal data

How Enterprise Embeddings Actually Get Used (2026 Production)

Use Case 1: Compliance Search

A regulator asks: "Show all decisions where income was insufficient."

Old keyword search: Find documents containing "insufficient income." Get 47 results.

New embedding search:

Embed query: "applicant lacked sufficient proof of income"

Search document collection

Return top 50 by semantic similarity

Results include:

"customer denied due to insufficient income documentation" (exact match)

"loan declined, applicant's pay stubs were incomplete" (semantic match)

"applicant's employment letter did not verify income" (semantic match)

"self-employed applicant, no tax returns provided" (semantic match)

Officer reviews 50 instead of 47, catches additional cases

Use Case 2: Internal Knowledge Search

A loan officer is reviewing an unusual applicant. They ask: "Show me similar applicants from our history."

Embedding-based retrieval:

Embed current applicant profile: "self-employed, irregular income, high credit score, 15 years business"

Find similar profiles: Returns 20 historical applicants with similar profiles

Officer reviews outcomes: 18 out of 20 repaid successfully

Decision: "This profile is low-risk based on historical precedent"

Keyword search would find: "mentions self-employed," "mentions credit score," etc. No semantic understanding of what makes profiles similar.
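A rough sketch of the "similar applicants" lookup follows, assuming each historical profile has already been turned into a vector. The vectors, the outcomes, and the helper `repayment_rate_of_similar` are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical history: (profile embedding, repaid?) pairs.
HISTORY = [
    ([0.90, 0.80, 0.10], True),
    ([0.80, 0.90, 0.20], True),
    ([0.85, 0.75, 0.15], False),
    ([0.10, 0.20, 0.90], False),
    ([0.20, 0.10, 0.95], True),
]

def repayment_rate_of_similar(profile_vector, history, k=3):
    """Share of the k most similar historical applicants who repaid."""
    ranked = sorted(
        history,
        key=lambda item: cosine_similarity(profile_vector, item[0]),
        reverse=True,
    )
    nearest = ranked[:k]
    repaid = sum(1 for _, outcome in nearest if outcome)
    return repaid / len(nearest)

current_applicant = [0.88, 0.82, 0.12]  # stand-in for the embedded profile
rate = repayment_rate_of_similar(current_applicant, HISTORY, k=3)
print(f"Repayment rate among similar applicants: {rate:.2f}")
```

In the example above, 18 of 20 similar applicants repaying corresponds to a rate of 0.90.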

Use Case 3: Policy Document Retrieval

A compliance officer needs to know: "What's our policy on self-employed applicants?"

Embedding-based RAG:

Embed query: "self-employed applicants income verification requirements"

Search policy documents

Retrieve relevant policy chunks

Aggregate: "Self-employed must provide 2 years tax returns + last 3 months bank statements"

Officer gets exact guidance instead of manually reviewing policy documents.
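The retrieval half of that flow can be sketched as below. Assumptions are stated loudly: `toy_embed` is a word-count stand-in, so it matches shared words and not meaning, and a real system would call an embedding model there. The third policy chunk is invented, and `build_prompt` only assembles the text a language model would receive.

```python
import math
from collections import Counter

def toy_embed(text):
    """Stand-in embedder: word counts. Replace with a real embedding model."""
    cleaned = text.lower().replace(",", " ").replace(".", " ")
    return Counter(cleaned.split())

def cosine_similarity(a, b):
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

POLICY_CHUNKS = [
    "Self-employed applicants must provide 2 years of tax returns.",
    "Self-employed applicants must provide the last 3 months of bank statements.",
    "Branch opening hours are reviewed every quarter.",  # invented, unrelated chunk
]

def retrieve(query, chunks, top_k=2):
    """Embed the query, score every chunk, return the top_k most similar."""
    query_vector = toy_embed(query)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vector, toy_embed(chunk)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(query, chunks):
    """Assemble retrieved chunks into context for a downstream language model."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"Answer using only this policy text:\n{context}\n\nQuestion: {query}"

query = "self-employed applicants income verification requirements"
top_chunks = retrieve(query, POLICY_CHUNKS)
prompt = build_prompt(query, top_chunks)
print(prompt)
```

Keeping retrieval and prompt assembly as separate functions makes it possible to test retrieval quality on its own, before any generated answer is involved.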

Embedding Failures in Production (2025-2026 Lessons)

Failure Type 1: Context Collapse

A compliance search for "applicant denied" retrieves documents about "applicant approved." Why? The embedding model learned that "denied" and "approved" both describe application outcomes and appear in near-identical sentences, so it places them close together. For compliance, though, they are opposites.

Fix (2026): Use domain-aware embeddings that understand "denied" and "approved" are different outcomes. Or add explicit negation handling: "NOT approved" means "denied."

Failure Type 2: Synonyms Too Broad

A regulatory officer searches for "customer failed to pay loan." Embedding retrieves documents about "employee failed to deliver project," "supplier failed to meet quality standards," etc. All involve "failure," but none are about loan default.

Root cause: Generic embeddings treat "failure" as the key concept. They don't capture that the failure in question is a failure to pay.

Fix: Domain-specialized embedding trained on financial documents where "failure" in context of borrowing means specific things. Or add metadata filters: only search within "lending" category documents.
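The metadata-filter option might look like the sketch below. The `category` field, the index entries, and their vectors are hypothetical. The point is only the order of operations: restrict to the "lending" category first, then rank by similarity.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical index entries: text, category tag, and a hand-written vector.
INDEX = [
    {"text": "customer failed to pay loan instalment", "category": "lending", "vector": [0.9, 0.4, 0.1]},
    {"text": "employee failed to deliver project", "category": "hr", "vector": [0.9, 0.1, 0.4]},
    {"text": "supplier failed to meet quality standards", "category": "procurement", "vector": [0.9, 0.2, 0.3]},
    {"text": "borrower missed three repayments", "category": "lending", "vector": [0.7, 0.6, 0.1]},
]

def filtered_search(query_vector, index, category, top_k=5):
    """Keep only entries in the given category, then rank those by similarity."""
    candidates = [entry for entry in index if entry["category"] == category]
    candidates.sort(
        key=lambda entry: cosine_similarity(query_vector, entry["vector"]),
        reverse=True,
    )
    return [entry["text"] for entry in candidates[:top_k]]

query_vector = [0.9, 0.3, 0.2]  # stand-in for embed("customer failed to pay loan")
results = filtered_search(query_vector, INDEX, category="lending")
print(results)
```

Filtering before ranking means an off-topic "failure" document can never appear in the results, however similar its vector is.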

Failure Type 3: Drift Over Time

A bank's embedding model was trained on 2024 documents. In 2025, terminology changed: "applicants" are now called "customers," "loan approval" is now "credit approval." The embedding model doesn't recognize these synonyms (trained on 2024 data).

Fix: Retrain embeddings quarterly on current corpus. Or maintain embedding versioning: "This search used embeddings v5.2 trained in Q3 2025."
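One way to make versioning concrete is to attach the embedding version to every search record and flag models older than about a quarter. This is a sketch only: the class names, the 92-day threshold, and the exact training date for "v5.2" are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class EmbeddingVersion:
    name: str          # e.g. "v5.2"
    trained_on: date   # assumed cut-off date of the training corpus

@dataclass(frozen=True)
class SearchAuditRecord:
    query: str
    embedding_version: EmbeddingVersion
    run_on: date

def is_stale(version, today, max_age_days=92):
    """Flag a model whose training corpus is older than roughly one quarter."""
    return (today - version.trained_on).days > max_age_days

# Assumed date inside Q3 2025 for the example version.
v52 = EmbeddingVersion(name="v5.2", trained_on=date(2025, 9, 30))
record = SearchAuditRecord(
    query="applicant denied",
    embedding_version=v52,
    run_on=date(2026, 2, 1),
)

print(record.embedding_version.name, "stale:", is_stale(v52, record.run_on))
```

Storing the version with each search lets an auditor later reconstruct which model produced a given result set.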

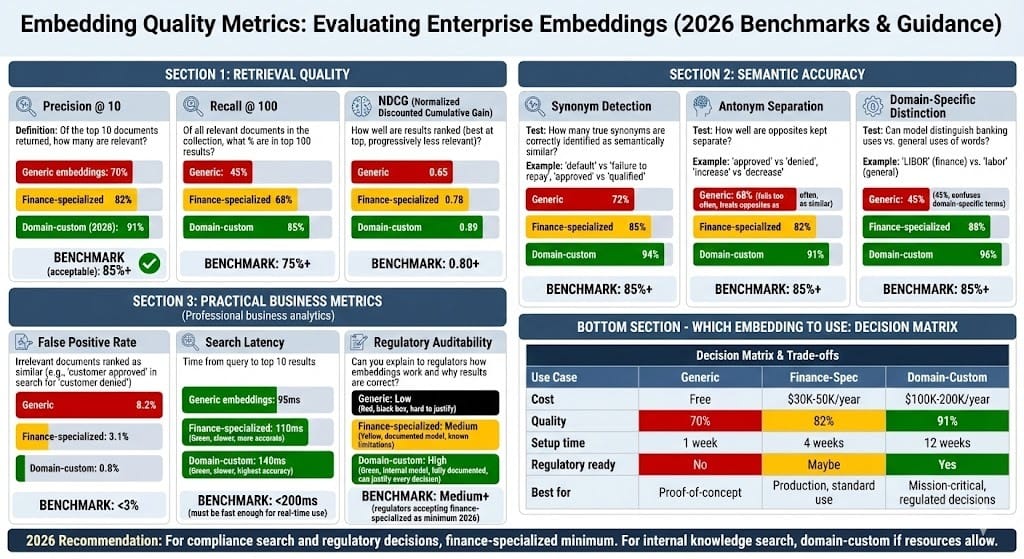

How to Validate Enterprise Embeddings (2026 Best Practice)

Validation Test 1: Synonym Retrieval

Query: "customer defaulted"

Expected results: Documents containing "borrower failed to pay," "loan delinquency," etc.

Pass: 85%+ of results are semantically similar

Fail: Results include unrelated documents (e.g., "weather conditions deteriorated")
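The pass/fail check for this test reduces to a precision calculation over human-labelled results. A minimal sketch, with hypothetical document IDs and a made-up labelled run:

```python
def precision(retrieved_ids, relevant_ids):
    """Fraction of retrieved documents that a reviewer judged relevant."""
    if not retrieved_ids:
        return 0.0
    hits = sum(1 for doc_id in retrieved_ids if doc_id in relevant_ids)
    return hits / len(retrieved_ids)

def passes_synonym_test(retrieved_ids, relevant_ids, threshold=0.85):
    """Pass when at least 85% of results are relevant."""
    return precision(retrieved_ids, relevant_ids) >= threshold

# Made-up run: 20 results for "customer defaulted", 18 judged relevant.
retrieved = [f"doc{i}" for i in range(20)]
relevant = set(retrieved[:18])

p = precision(retrieved, relevant)
print(f"precision = {p:.2f}, pass = {passes_synonym_test(retrieved, relevant)}")
```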

Validation Test 2: Context Understanding

Query: "insufficient income documentation"

True positives: Documents about denial due to income

True negatives: Documents about approval despite missing income documentation, documents about other denial reasons

Pass: Model correctly separates context

Fail: Model treats "missing income documentation" and "approved with missing documentation" as equally similar to query
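One way to automate this check is to require a margin between the query's similarity to a known true positive and its similarity to a known "hard negative". The sketch below uses hand-written stand-in vectors for two hypothetical models, and the `min_margin` of 0.1 is an arbitrary assumption that would need tuning on real data.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def separates_context(query_vec, positive_vec, negative_vec, min_margin=0.1):
    """True if the positive document scores at least min_margin above the hard negative."""
    positive_score = cosine_similarity(query_vec, positive_vec)
    negative_score = cosine_similarity(query_vec, negative_vec)
    return (positive_score - negative_score) >= min_margin

# Stand-in vectors (illustrative only).
query = [0.90, 0.10, 0.20]          # "insufficient income documentation"
denied_doc = [0.85, 0.15, 0.20]     # denial due to income documentation

# The same "approved despite missing documentation" text under two hypothetical models.
approved_doc_separated = [0.30, 0.90, 0.20]  # model that distinguishes outcomes
approved_doc_collapsed = [0.88, 0.12, 0.20]  # model that collapses outcomes

print("separating model passes:", separates_context(query, denied_doc, approved_doc_separated))
print("collapsing model passes:", separates_context(query, denied_doc, approved_doc_collapsed))
```

The collapsing model fails because the approval document scores about as high as the denial document, which is the failure described above.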

Validation Test 3: Domain-Specific Terminology

Query: "LIBOR transition impact"

Expected: Financial documents about transitioning from LIBOR to SOFR

Should not include: Documents where "LIBOR" appears but in different context (like "labor market")

Pass: ≥90% of results are in the correct context

Fail: >10% of results are in the wrong context

Validation Test 4: Regulatory Scenario

Scenario: "Find all loan denials where primary reason was insufficient credit history"

Manual gold standard: Human reviews documents, marks 200 as true matches

Embedding search: Retrieves top 200 documents

Pass: ≥85% overlap between human-marked and embedding-retrieved

Fail: <85% overlap indicates the model is missing cases or including wrong ones
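The overlap figure can be computed directly from two sets of document IDs. In this sketch the IDs are made up, and treating exactly 85% as a pass is our assumption.

```python
def overlap_ratio(gold_ids, retrieved_ids):
    """Share of human-marked (gold) documents that the search also retrieved."""
    gold = set(gold_ids)
    if not gold:
        raise ValueError("gold standard must not be empty")
    return len(gold & set(retrieved_ids)) / len(gold)

# Made-up run: 200 gold documents, and a top-200 result list sharing 175 of them.
gold = {f"loan-{i}" for i in range(200)}
retrieved = [f"loan-{i}" for i in range(25, 225)]

ratio = overlap_ratio(gold, retrieved)
passed = ratio >= 0.85
print(f"overlap = {ratio:.3f}, pass = {passed}")
```

Because both lists contain 200 documents here, the overlap ratio is both the precision and the recall of the search. If the list sizes differ, the two metrics should be reported separately.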

By 2026, expect institutions with mature AI governance to run these validation tests quarterly. Embeddings that fail are retrained or replaced.

Regulatory and Practical Context

How Regulators View Embeddings (2025-2026)

Fed (2025 Guidance):

Embeddings are model inference, subject to same governance as any ML model

Banks must validate embeddings for accuracy: "Does semantic search actually work correctly?"

Documentation required: Which embedding model? Trained on what data? When validated?

EBA (2026 AI Framework):

Embeddings for retrieval in decision-making must be domain-specific or validated generic embeddings

Generic embeddings (like word2vec) are not acceptable for regulatory decisions

Quarterly validation testing required

FCA (2025 AI Governance):

Embeddings that produce unexplainable results (high false positives) are prohibited in customer-facing systems

Example: If embedding-based search returns irrelevant documents, customers lose trust

Transparency required: Explain how embeddings work, why a result is returned

Practical implication (2026): Generic embeddings are deprecated. Finance-specialized or domain-custom embeddings are now table stakes.

Looking Ahead: 2027-2030

Adaptive Embeddings (2027)

By 2027, embeddings will adapt to user context. If a loan officer frequently searches for "income documentation issues," the system learns: "For this user, 'insufficient income' is higher priority than other meanings of 'insufficient.'" Embeddings adjust on the fly.

Multi-Modal Embeddings (2028)

Embeddings will encode meaning from text + images + structured data. A loan document will be embedded not just from text ("applicant has $100K income") but also from the numeric income data, income charts, and supporting documentation images. Richer meaning = better search.

Regulatory Embedding Oversight (2028-2029)

Regulators will require embeddings themselves to be auditable. Banks will need to show: "This embedding model was trained on X data, validated on Y test cases, updated Z times, maintains W% accuracy." Embeddings won't be black boxes; they'll be regulated like any other model.

HIVE Summary

Key takeaways:

Embeddings are numerical representations of meaning. Documents with similar embeddings are semantically similar, enabling search by meaning instead of keywords

Generic embeddings (trained on general internet text) fail on finance. Finance-specialized embeddings (trained on financial documents) perform 15-20% better. Domain-custom embeddings (trained on institution-specific documents) perform 10-15% better than finance-specialized

Embeddings fail silently: search results look good but miss context or return irrelevant results. Quarterly validation testing (precision, recall, domain-specific terminology tests) catches failures before they impact decisions

2026 regulatory baseline: Generic embeddings are deprecated for regulatory decisions. Finance-specialized minimum required. Domain-custom preferred for mission-critical compliance search

Start here:

If building enterprise search: Don't use generic embeddings. Use finance-specialized (e.g., FinBERT) minimum. Test precision, recall, and false positive rate on your specific use case

If validating existing embeddings: Run quarterly tests: synonym detection, antonym separation, domain-specific terminology. Document results for regulators

If preparing for regulatory examination: Have embedding documentation ready: model type, training data, validation results, quarterly performance tracking, remediation plans for any failures

Looking ahead (2027-2030):

Embeddings will adapt to user context, understanding that "insufficient income" means different things to different users

Multi-modal embeddings will combine text, numbers, images, and documents for richer meaning representation

Regulators will require embeddings to be documented and validated like any other production model

Open questions:

How often should enterprise embeddings be retrained? Monthly? Quarterly? When terminology changes?

Can we detect embedding failures automatically, or do we always need human validation testing?

How do we handle proprietary embeddings (bank-specific) in a federated system (data-sharing across institutions)?

Jargon Buster

Embedding: Numerical representation of meaning. A list of numbers (vector) that represents a word, phrase, or document. Words with similar meaning have similar embeddings. Why it matters in BFSI: Enables semantic search (search by meaning) instead of keyword search. Powers retrieval systems

Vector: A list of numbers representing data in mathematical space. Embeddings are vectors. Distance between vectors measures similarity. Why it matters in BFSI: Distance calculations enable "find documents similar to this query" searches

Semantic Similarity: How close two texts are in meaning. "Customer defaulted" and "borrower failed to pay" have high semantic similarity. "Customer defaulted" and "weather forecast" have low semantic similarity. Why it matters in BFSI: Embeddings measure semantic similarity numerically, enabling automated retrieval

Domain-Specific Embeddings: Embeddings trained specifically on a domain (finance, legal, medical). More accurate than generic embeddings for that domain. Why it matters in BFSI: Finance-specific embeddings outperform generic embeddings by 15-20% on financial tasks

Vector Database: Database optimized for storing and searching embeddings. Enables fast similarity search across millions of documents. Examples: Pinecone, Weaviate, Qdrant. Why it matters in BFSI: Required infrastructure for embedding-based retrieval at scale

Precision and Recall: Metrics for search quality. Precision: of results returned, how many are relevant? Recall: of all relevant documents, how many did we return? Why it matters in BFSI: Precision avoids false positives (irrelevant results). Recall avoids false negatives (missing relevant results)

Context Collapse: When embeddings lose context and treat different meanings as similar. "Insufficient income documentation" (denial reason) and "applicant approved, all income documented" (approval reason) become semantically similar. Why it matters in BFSI: Indicates embedding model doesn't understand context, needs retraining

Embedding Drift: When embedding quality degrades over time as language/terminology evolves. Model trained on 2024 data doesn't understand 2026 terminology. Why it matters in BFSI: Requires periodic retraining (quarterly or annually) to maintain quality

Fun Facts

On Domain-Specific Training: A large European bank trained embeddings on 50 years of internal loan documents (20M documents, 5B+ tokens). The custom embeddings outperformed finance-specialized embeddings by 8-12% on internal searches. Cost: $180K for initial training, $30K/quarter for updates. ROI: Compliance officers spend 30% less time searching documents. Within 6 months, the system paid for itself through labor savings alone. Lesson: domain-specific embeddings are a worthwhile investment

On Silent Failures: A bank deployed embedding-based search for "loan denials by reason." The system returned documents about both denials and approvals. Officers didn't notice for months because the results looked good (lots of relevant documents returned). But precision was only 60%—meaning 40% of results were actually about approvals, not denials. Only caught when an auditor noticed inconsistencies in compliance reports. Lesson: test embeddings, don't assume they work. Failure modes are subtle

Next up: Why Large Models Hallucinate — Discuss inductive bias, approximation behavior, and mitigation approaches.

This is part of our ongoing work understanding AI deployment in financial systems. If you're building enterprise search with embeddings, share your patterns for validation, domain customization, or handling semantic failures.