Quick Recap: Software gets better when you fix bugs. Models get worse when the world changes. Software that nobody touches keeps behaving the same way indefinitely. A model deployed today can degrade tomorrow even if you never change it. Understanding this difference changes how you manage production systems.

Your software team ships a bug fix to the API on Monday. The fix solves a problem. Code stays fixed. Three years later? Still fixed.

Your ML team ships a new fraud model on Monday. The model works great: it detects fraud with 94% accuracy. Regulators approve it.

Three months later? Same model, same code, same training data, but fraud tactics have evolved. Model accuracy drops to 88%. Fraudsters adapted. Code didn't change. The model degraded anyway.

This shouldn't be possible. But it is.

Software: Code stays the same → behavior stays the same.

Models: Code stays the same → behavior gets worse.

Software engineers fix bugs once. ML engineers fight degradation forever.

This difference shapes everything about how you manage production AI.

Why Models Degrade (Even When Nothing Changes)

Root cause: The world changes. Models assume the world stays the same.

When you trained the model (2022):

Interest rates near zero

Fraud tactics focused on card testing

Customer demographics: tech workers 35% of applicants

Employment patterns: traditional W-2 jobs dominant

Today (2025):

Interest rates at 5%+

Fraud tactics shifted to account takeover via AI

Customer demographics: tech workers 52% of applicants

Employment patterns: gig economy expanded to 30%

Model learned from 2022. Serving 2025 customers. World changed. Model degraded.
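One common way to put a number on "the world changed" is the Population Stability Index (PSI). PSI compares how an input is distributed in the training data versus in production. The sketch below applies it to the applicant-mix figures above (tech workers at 35% in 2022 vs. 52% in 2025), collapsed into two buckets. The 0.10 / 0.25 cut-offs are a widely used industry rule of thumb, not a regulatory standard. The function names are illustrative.

```python
import math

def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    `expected` = bucket shares at training time, `actual` = shares in production.
    Each list should sum to ~1.0. `eps` guards against log(0) for empty buckets.
    """
    if len(expected) != len(actual):
        raise ValueError("bucket lists must have the same length")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

def psi_band(value: float) -> str:
    # Rule-of-thumb bands (an assumption, not a formal standard).
    if value < 0.10:
        return "stable"
    if value < 0.25:
        return "moderate shift"
    return "significant shift"

# Applicant mix from the example, as [tech workers, everyone else].
train_2022 = [0.35, 0.65]
prod_2025 = [0.52, 0.48]

score = psi(train_2022, prod_2025)
print(f"PSI = {score:.3f} -> {psi_band(score)}")
```

For these two buckets, PSI comes out at roughly 0.12, which falls in the "moderate shift" band. In practice you would compute it per feature, with more buckets, on every monitoring run.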

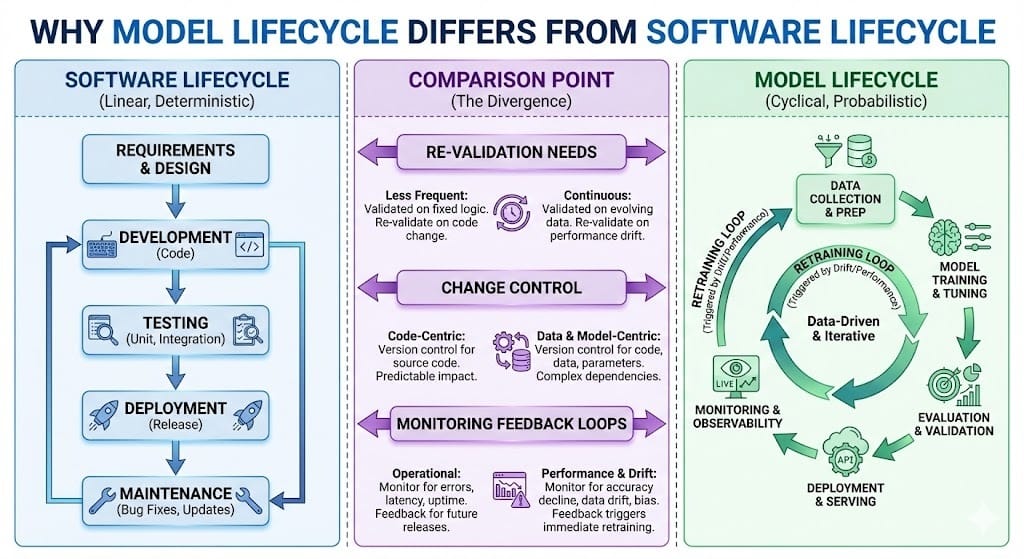

Three Fundamental Differences

Difference 1: Software Assumes Stability

Software design: "Once we fix this bug, it stays fixed."

Reality: The bug stays fixed (as long as no new bugs are introduced).

Assumption: External world is mostly stable. API endpoints don't change behavior. Customer requirements stay constant.

Difference 2: Models Assume the World Stands Still

ML design: "Once we train this model, it will keep working on new data."

Reality: Model learns historical patterns. When patterns change, accuracy drops.

Assumption: World stays similar to training data. Customer behavior doesn't shift. Fraud tactics don't evolve.

Difference 3: Software Requires Code Changes to Degrade

To break software, someone must:

Write new buggy code

Deploy it

Shipping introduces the problem

To break a model, nothing needs to happen:

No code change required

No deployment required

World changes on its own

Model degrades on its own
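The toy simulation below makes "nothing needs to happen" concrete. A fraud rule is frozen at a fixed threshold. Only the traffic changes, as fraudsters learn to keep transaction amounts under that threshold. All amounts, the 50/50 fraud mix, and the drift schedule are made-up assumptions for illustration.

```python
FRAUD_THRESHOLD = 500  # learned at training time, never changed afterwards

def frozen_model(amount: int) -> bool:
    """The deployed model: flag any transaction of 500 or more as fraud."""
    return amount >= FRAUD_THRESHOLD

def make_month(shift: int) -> list[tuple[int, bool]]:
    """Hypothetical labelled traffic for one month as (amount, is_fraud) pairs.

    Legitimate amounts cover 0-399. Fraud amounts start at 600-999 and
    slide down by `shift` as fraudsters learn to stay under the threshold.
    """
    legit = [(amount, False) for amount in range(0, 400)]
    fraud = [(amount - shift, True) for amount in range(600, 1000)]
    return legit + fraud

def accuracy(data: list[tuple[int, bool]]) -> float:
    correct = sum(frozen_model(amount) == is_fraud for amount, is_fraud in data)
    return correct / len(data)

# Same code and same threshold every month; only the world moves.
for month, shift in [(0, 0), (3, 100), (6, 200), (9, 300), (12, 400)]:
    print(f"month {month:2d}: accuracy {accuracy(make_month(shift)):.1%}")
```

Accuracy stays at 100% through month 3. It then falls to 87.5%, 75.0%, and 62.5%, even though no line of the model changed.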

Impact on Management

Software Lifecycle

Deployment: Release version 1.0.

Monitoring: Check for bugs. If found, write fix. Test fix. Deploy fix.

Regression Prevention: Don't ship breaking changes. Test backward compatibility.

Change Control: Only change code if you find a bug or need a feature.

Revalidation: Not needed (unless code changes).

Long-term: Version 1.0 works for years with only occasional bug fixes.

Model Lifecycle

Deployment: Release version 1.0 (trained on 2022-2023 data).

Monitoring: Check for performance degradation. Even without code changes, model gets worse.

Regression Prevention: Can't prevent degradation. World changes on its own.

Change Control: Model may need retraining every quarter without any code changes.

Revalidation: Must happen continuously. Even if the code is identical, model performance changes.

Long-term: Version 1.0 works great for 3 months. By month 6, accuracy has dropped. By month 12, it needs replacement.
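The Monitoring and Change Control rows above can be combined into one rule: retrain on a calendar, or earlier if performance slips. This is a minimal sketch under stated assumptions:

- A quarter is treated as 91 days.
- "Slips" means a 2-point AUC drop from the value measured at the last retrain.
- `retraining_reason` is a hypothetical helper, not part of any library.

```python
from datetime import date
from typing import Optional

QUARTER_DAYS = 91      # assumption: treat "every quarter" as 91 days
MAX_AUC_DROP = 2.0     # assumption: AUC points lost before an early retrain

def retraining_reason(last_retrain: date, today: date,
                      baseline_auc: float, current_auc: float) -> Optional[str]:
    """Return why the model should be queued for retraining, or None.

    `baseline_auc` is the AUC measured when the model was last (re)trained;
    `current_auc` is the latest monitored value. Both are in percentage points.
    """
    drop = baseline_auc - current_auc
    if drop >= MAX_AUC_DROP:
        return f"performance: AUC down {drop:.1f} points since last retrain"
    if (today - last_retrain).days >= QUARTER_DAYS:
        return "schedule: a quarter has passed since last retrain"
    return None

# The model code never changes in any of these cases; only time and data do.
print(retraining_reason(date(2025, 1, 1), date(2025, 2, 1), 89.0, 88.5))
print(retraining_reason(date(2025, 1, 1), date(2025, 2, 1), 89.0, 86.0))
print(retraining_reason(date(2025, 1, 1), date(2025, 4, 15), 89.0, 88.5))
```

The three calls produce different results:

- The first returns None.
- The second fires on performance.
- The third fires on the calendar.

The output is a recommendation to queue retraining. A person still reviews and approves the new model before it ships.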

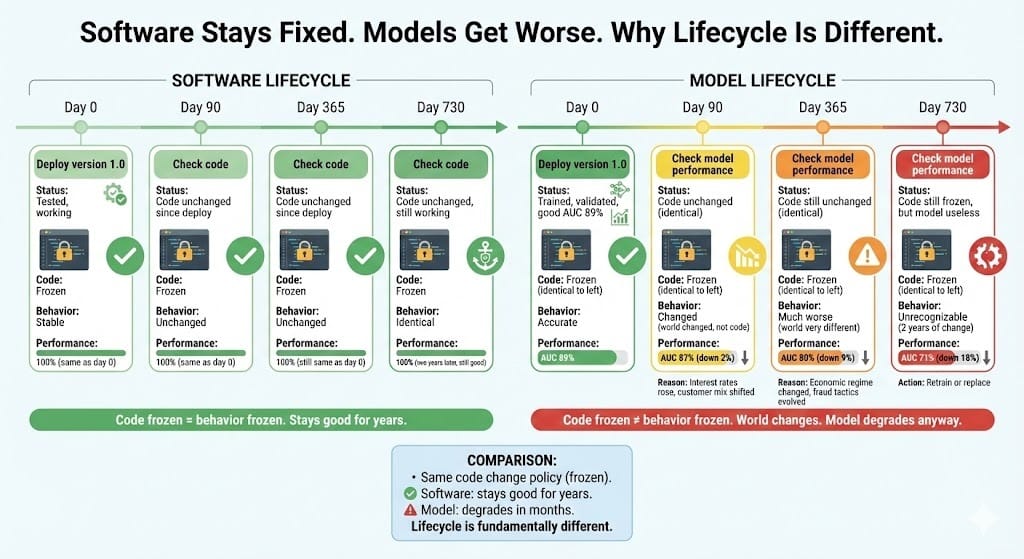

BFSI-Specific Reality

The Credit Model Case Study

Month 0 (2022, model deployed):

Trained on 2021-2022 data

Interest rates near zero

AUC 89%

Performance stable

Customers happy

Month 3:

Interest rates unchanged

Model code unchanged

AUC still 89%

Everything normal

Month 6:

Interest rates start rising

Model code still unchanged

Customer default patterns shift

AUC drops to 87%

"Something wrong? No code changed."

Month 9:

Interest rates at 4%

Model code still unchanged

Defaults correlate differently with rates

AUC drops to 84%

"Why is model worse? We didn't change anything!"

Month 12:

Interest rates at 5%

Model code identical to day 0

Economic regime completely different

AUC drops to 80%

Model is broken

But no code was touched

Month 15 (action finally taken):

Team realizes: world changed, model didn't

Retrain on 2023-2024 data

Incorporate rate sensitivity

New AUC 91%

Performance recovers

Lesson: Stable code ≠ stable performance for models. The external world changes, so the model must adapt. That requires continuous monitoring and regular retraining, not just bug fixes.
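Replaying this case study's AUC figures through a simple "drop from baseline" rule shows how much earlier an automated check could have fired. The sketch makes two assumptions. First, AUC is tracked in whole percentage points. Second, the trigger sits at the low end of the 2-3% retraining guidance given in this article's summary. `first_breach` is a hypothetical helper.

```python
from typing import Optional

# AUC (in percentage points) observed at each checkpoint in the case study.
AUC_BY_MONTH = {0: 89, 3: 89, 6: 87, 9: 84, 12: 80}

def first_breach(auc_by_month: dict[int, int], max_drop: int) -> Optional[int]:
    """Return the first month where AUC fell `max_drop` or more points below
    the earliest (baseline) month, or None if it never did."""
    baseline = auc_by_month[min(auc_by_month)]
    for month in sorted(auc_by_month):
        if baseline - auc_by_month[month] >= max_drop:
            return month
    return None

print(first_breach(AUC_BY_MONTH, max_drop=2))  # 6
print(first_breach(AUC_BY_MONTH, max_drop=3))  # 9
```

Either threshold would have flagged the model at month 6 or month 9, six to nine months before the team acted at month 15.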

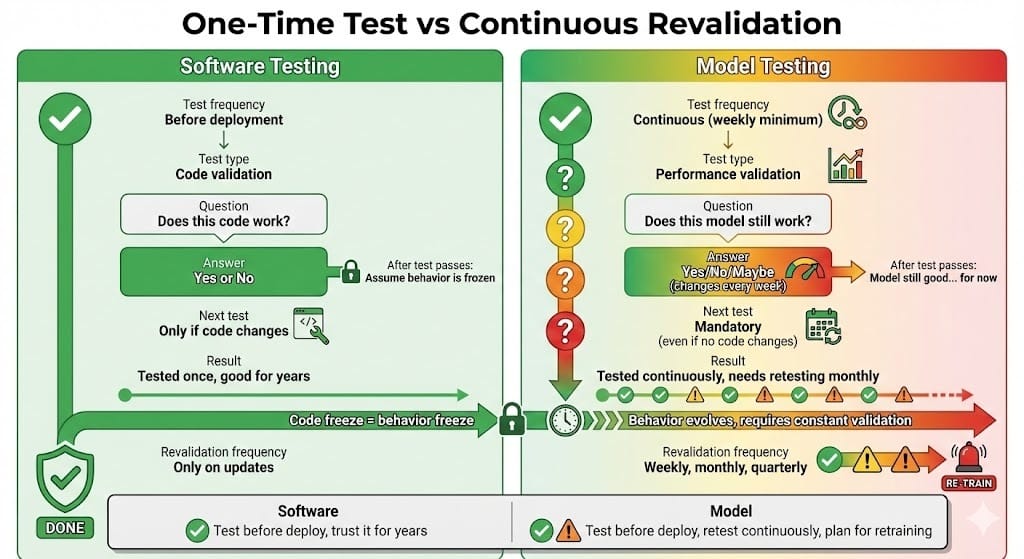

The Re-validation Problem

Why Testing Is Different

Software Testing:

Test: "Does this code work?"

Answer: Yes or No

Doesn't change: If code is frozen, behavior is frozen

Model Testing:

Test: "Does this model work?"

Answer: "Yes, but for how long?"

Changes constantly: Even frozen model gets worse over time

Re-validation Schedule

Software needs re-testing at discrete, predictable points:

When code changes

When dependencies update

Quarterly security scan

Models need testing continuously:

Weekly performance check (is AUC declining?)

Monthly deep dive (which segments degrading?)

Quarterly retraining assessment (do we need new model?)

Annual rebuild (or accept the growing risk)
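Encoding the cadence above as an explicit schedule means checks don't depend on someone remembering them. The sketch assumes the following calendar:

- Weekly checks run on Mondays.
- Monthly deep dives run on the 1st of each month.
- Quarterly assessments run on the 1st of January, April, July, and October.
- The annual rebuild review happens on January 1.

The check names are hypothetical labels.

```python
from datetime import date

def checks_due(day: date) -> list[str]:
    """Return which model re-validation checks are due on `day`.

    Assumed calendar: weekly on Mondays, monthly on the 1st, quarterly on
    the 1st of Jan/Apr/Jul/Oct, annual rebuild review on Jan 1.
    """
    due = []
    if day.weekday() == 0:  # Monday
        due.append("weekly performance check")
    if day.day == 1:
        due.append("monthly segment deep dive")
        if day.month in (1, 4, 7, 10):
            due.append("quarterly retraining assessment")
        if day.month == 1:
            due.append("annual rebuild review")
    return due

print(checks_due(date(2025, 1, 6)))   # a Monday
print(checks_due(date(2025, 4, 1)))   # start of Q2
```

In practice this logic usually lives in a scheduler such as cron or Airflow. The point is that the cadence is written down and reviewable.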

Feedback Loops Make It Worse

Software: A bug fix is permanent. Fix it once. Done.

Models: Feedback loops accelerate degradation.

Example:

Model denies risky customers

Over time, only safe customers remain (approval bias)

Training data becomes biased (survivors only)

Model learns wrong patterns (future defaults higher than expected)

Degradation accelerates
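A small deterministic simulation shows where approval bias comes from. It makes three assumptions, all invented for illustration:

- One applicant exists per risk score from 0 to 99.
- True default probability equals score / 100.
- The model approves only scores below 40.

Only approved applicants ever produce a repay or default outcome, so only they can become labelled training data.

```python
def default_prob(score: int) -> float:
    """Hypothetical ground truth: default probability rises with risk score."""
    return score / 100

applicants = list(range(100))  # one applicant per risk score 0-99
APPROVAL_CUTOFF = 40           # current model approves scores below this

approved = [s for s in applicants if s < APPROVAL_CUTOFF]
denied = [s for s in applicants if s >= APPROVAL_CUTOFF]

def expected_default_rate(group: list[int]) -> float:
    return sum(default_prob(s) for s in group) / len(group)

# Only approved applicants generate outcomes, so only they become training labels.
print(f"labelled for retraining: {len(approved)} of {len(applicants)}")
print(f"default rate the next model sees: {expected_default_rate(approved):.1%}")
print(f"default rate in the real applicant pool: {expected_default_rate(applicants):.1%}")
print(f"applicants with no observed outcome: {len(denied)}")
```

The next model sees a 19.5% default rate on 40 labelled applicants, while the real pool defaults at 49.5%. It has no evidence at all about scores 40-99, so it cannot tell whether those applicants are as risky as assumed.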

Common Mistakes

Mistake 1: Deploy Once, Monitor Never

❌ "We deployed the model. Now we manage it like software (occasional patches)."

✅ "We deployed the model. Now we monitor it continuously (weekly checks minimum)."

Models need active management.

Mistake 2: Assume Frozen = Stable

❌ "Model code hasn't changed, so model behavior is stable."

✅ "Model code is frozen, but the world changed. Performance will degrade anyway."

Changes in the external world drive model degradation.

Mistake 3: Test Once, Release Forever

❌ "We validated the model before deployment. It's good for years."

✅ "We validated the model at launch, and we revalidate monthly. It's good until degradation signals a retrain."

Validation has an expiration date.

Mistake 4: Ignore Feedback Loops

❌ "Model denies some customers, but that's just the model working."

✅ "Model denies some customers. Training data becomes survivor-biased. Model quality degrades. Need careful re-sampling."

Feedback loops create hidden degradation.

Mistake 5: Software Mindset to ML Problems

❌ "Our software team manages models. They deploy changes and expect stability."

✅ "ML is different. Passive monitoring isn't enough. Active retraining is required."

Different tools for different problems.

Looking Ahead: 2026-2030

2026: Continuous revalidation becomes a regulatory requirement

Can't just validate once before deployment

Regulators expect ongoing performance tracking

Quarterly revalidation reports become standard

2027-2028: Automated retraining triggers based on drift

System detects performance degradation

Automatically queues retraining

Humans approve new model before deployment

2028-2029: Model quality frameworks emerge

Standards for how long a model stays "valid"

Policies: retrain annually, monthly, or continuously

Different policies for different model types

2030: Continuous learning becomes standard

Models learn from production data automatically (carefully)

Human oversight guards against learned biases

Active vs passive performance tracking integrated

HIVE Summary

Key takeaways:

Software stays fixed when you stop changing it. Models degrade when the world changes. The two lifecycles are fundamentally different.

Software: bug fix is permanent. Model: performance degradation is inevitable (even with no code changes).

Re-validation isn't optional. A model validated in 2022 can't be assumed valid in 2025. The world changed, so the model must be retested.

Feedback loops accelerate degradation. Approval bias skews the training data. Detecting this requires active monitoring.

Change control for models is different. Not about preventing code changes. About detecting world changes and triggering retraining.

Start here:

If monitoring monthly: Increase to weekly. Monthly cycle is too long to catch degradation early.

If testing once before deploy: Add continuous monitoring. Track AUC, precision, recall weekly. Flag degradation trends.

If no retraining schedule: Create one. Plan retraining every quarter (or when performance drops 2-3%).
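The weekly tracking step above can be sketched in plain Python. The code computes precision, recall, and AUC from one week of labelled outcomes, then flags AUC if it has fallen for several weeks in a row. AUC here uses the rank-based definition: the chance that a random positive outscores a random negative. The 0.5 decision threshold, the three-week window, and the sample data are assumptions for illustration.

```python
def precision_recall(labels: list[int], scores: list[float],
                     threshold: float = 0.5) -> tuple[float, float]:
    """Precision and recall when a score >= threshold counts as a positive call."""
    tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
    fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
    fn = sum(1 for y, s in zip(labels, scores) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def auc(labels: list[int], scores: list[float]) -> float:
    """Rank-based AUC: chance a random positive scores above a random negative.

    Ties count as half a win. O(positives x negatives), fine for a sketch.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def declining(history: list[float], weeks: int = 3) -> bool:
    """True if the metric fell at every week-over-week step in the last `weeks` steps."""
    recent = history[-(weeks + 1):]
    return len(recent) == weeks + 1 and all(b < a for a, b in zip(recent, recent[1:]))

# One hypothetical week of outcomes (1 = default/fraud) and model scores.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.4, 0.35, 0.1, 0.8, 0.6]
print(precision_recall(labels, scores))
print(auc(labels, scores))

weekly_auc = [0.89, 0.88, 0.87, 0.85]  # hypothetical AUC history
if declining(weekly_auc):
    print("flag: AUC has declined three weeks in a row")
```

With this sample, precision and recall are both 2/3, AUC is 8/9 (about 0.89), and the trend flag fires. In production, scikit-learn's `precision_score`, `recall_score`, and `roc_auc_score` are the usual replacements for the hand-rolled metrics.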

Looking ahead (2026-2030):

Continuous revalidation becomes regulatory mandate

Automated retraining triggers based on drift detection

Model quality frameworks with explicit validity periods

Continuous learning from production (with safeguards)

Open questions:

How often should models be retrained? Monthly? Quarterly? Annually?

When does degradation become "too much," requiring an immediate retrain rather than waiting until the next cycle?

Can feedback loops be prevented? (Hard. Requires careful design.)

Jargon Buster

Model Degradation: Performance decrease over time due to distribution shift. Even without code changes. Why it matters in BFSI: Inevitable. Requires continuous monitoring and periodic retraining.

Re-validation: Testing model performance after deployment. Not one-time. Continuous. Why it matters in BFSI: Regulatory requirement. Performance changes over time.

Distribution Shift: Change in input data or outcome patterns. Customer demographics shift. Fraud tactics evolve. Regulatory environment changes. Why it matters in BFSI: Causes model degradation. Must be detected early.

Feedback Loop: A model's decisions create the data that trains the next version of the model. Approval bias is one such feedback loop. Why it matters in BFSI: Accelerates degradation. Requires careful handling.

Change Control: Process for controlling code/model changes. For software: preventing buggy code. For models: detecting need for retraining. Why it matters in BFSI: Different for models than software. Focus on world changes, not code changes.

Retraining: Training a new version of a model on fresher data. Why it matters in BFSI: Required periodically. Software doesn't need this. Models do.

Model Validity: How long a model remains acceptable for deployment. Software validity: indefinite (if bug-free). Model validity: months to quarters. Why it matters in BFSI: Models have expiration dates.

Approval Bias: Training data becoming skewed because model denies certain customers. Denials don't appear in future training data (can't verify default risk). Why it matters in BFSI: Hides true default risk. Causes future model degradation.

Fun Facts

On Feedback Loop Damage: A bank's credit model learned to deny customers with certain employment patterns (gig workers). Because of those denials, future training data contained almost no gig-worker outcomes: denied customers never get the chance to repay or default. The model kept treating gig workers as extremely risky, but the evidence behind that view was thin. They were simply rare in the training data. When the model was retrained on new data that included gig-worker outcomes, their true default risk turned out to be much lower. Lesson: approval bias is invisible until you look for it, and it requires careful re-sampling when retraining.

On Model Validity Timeframe: A fintech deployed a fraud model in Q1 2022. By Q3 2022, fraudsters had adapted, and model accuracy dropped 6% in 3 months. The team didn't notice because it only checked quarterly. By Q1 2023, accuracy was down 15%. Cost: $500K+ in fraud losses. Lesson: the world changes fast. Monthly monitoring would have caught this in month two. Quarterly re-validation is not enough for fraud models in high-velocity environments.


Next up: Week 9 Wednesday explores document intelligence automation—using OCR, named entity recognition, and retrieval-augmented generation to extract structured data from insurance claims, automatically validate information, and route to processing queues.

This is part of our ongoing work understanding AI deployment in financial systems. If you've encountered model degradation and had to explain to stakeholders why a frozen model performs worse over time, share your experience.

— Sanjeev @ AITechHive.com